Various use cases and applications for AI and machine learning in the healthcare industry are proposed more frequently now than ever. Healthcare leaders may find it difficult to keep up with where AI is being applied in their sector.

Like the pharmaceutical industry, the healthcare industry has uses for nearly every AI approach, including machine vision, predictive analytics, natural language processing, and, in the case of health insurance, anomaly detection to catch fraud.

In this article, we give a comprehensive overview of the most prominent applications of AI in the healthcare industry and detail how physicians, hospitals, and healthcare providers have found success with them.

This report is a culmination of years of our secondary research into AI in the healthcare space, including work we’ve done with the World Bank on how to bring AI to the healthcare systems of the developing world. It highlights many of the key healthcare functions that AI may currently serve in the hospital setting and features insights from PhDs and healthcare AI executives we’ve interviewed for our weekly podcast.

Doctors and nurses at various hospital departments could use AI and machine learning for:

- Transcribing physician notes into an EMR or EHR

- Planning for surgery

- Analyzing medical images to find anomalies and take measurements

- Managing emergencies and assessing patient condition

- Helping doctors diagnose cancer and other diseases

- Monitoring surgery for adherence to planned procedures and best practices

We begin our overview of AI in the healthcare industry with applications for medical transcription:

Medical Transcription

Physicians could use AI to transcribe their speech into medical documents such as electronic health records (EHRs). Currently, many doctors spend their own time transcribing recorded audio notes from patient visits into an EHR system.

With natural language processing (NLP) applications used for voice recognition, physicians could automate the transcription and have their speech typed directly into the EHR system.

Although AI for medical transcription is nascent compared to other AI healthcare applications, healthcare companies such as Allina Health are purchasing medical transcription software powered by natural language processing. The software is being sold primarily by Nuance Communications, a developer of NLP software. More information on other white-collar automation strategies in healthcare is discussed in this AI in Industry Podcast interview.

Nuance Communications offers software called Dragon Medical One, which the company claims can help healthcare companies and providers transcribe a physician’s speech into an EHR using natural language processing. It appears that healthcare providers can integrate the software into their existing EHR system.

The machine learning model behind Dragon Medical One would need to be trained on thousands of requests to transcribe speech in order to accurately recognize a physician’s voice. The voices used to train that model would necessarily include multiple accents, inflections, and pitches so that the software can recognize the voice of any and all doctors. The machine learning model would then transcribe the requests into the EHR.

An EHR specialist would then correct the transcription and run the new draft through the machine learning model again. This would train the algorithm to recognize healthcare terms and more effectively transcribe what the doctor says while recording EHR notes.

Below is a short demonstration video on how Nuance’s Dragon Medical One works:

Nuance Communications published a case study in which they claim to have helped Nebraska Medicine raise the efficiency of updating patient medical records for all their healthcare providers. According to the case study, Nebraska Medicine integrated Dragon Medical One into their EHR system.

Before integration, Nebraska Medicine was paying a third party for transcription services to type out physicians’ audio notes.

Nebraska Medicine was purportedly able to decrease their transcription costs by 23%. The company surveyed all of their physicians on their experience with Dragon Medical One, and 71% of them said that the quality of the EHR notes had improved. Additionally, 50% said they saved a minimum of 30 minutes per day using the software.

Nuance Communications lists Allina Health and Baptist Health South Florida on its website as previous clients.

We had a chance to speak to Charles Ortiz, the former Director of the AI and Natural Language Processing Lab at Nuance Communications. When asked how his company has kept pace with speech recognition technology, Ortiz said,

Our systems have to become more proficient in being able to understand what the user wants done and not just in terms of an answer, like ‘what’s the temperature outside?’ It’s more that the user wants to do something, say reserve a table somewhere for dinner or they want to find a store that has something in particular…you have to take the natural language processing in the front and carry it forth to the backend, which is responsible for doing the reasoning about the task.

This is an important point for those considering AI medical transcription solutions, because it refers to Dragon Medical One’s ability to discern between verbal commands and dictated text to be typed into the EHR.

For example, a doctor could speak into their microphone to record an EHR note. If the doctor said, “the patient suffered a hairline break in their right ulna,” the software could recognize the distinction between the phrase “hairline break” and the phonetic sounds that correlate to the command “line break.”

This way, the software would not start a new line or receive other phrases as operational commands while EHR notes are being transcribed.
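The distinction between a spoken command and dictated text can be illustrated with a toy sketch. This is not how Dragon Medical One works; it assumes a speech recognizer has already produced text for each utterance, and it treats a phrase as a command only when the whole utterance matches it. The `COMMANDS` table and the `interpret` function are hypothetical names introduced for illustration.

```python
import re

# Hypothetical command table; real dictation products define their own.
COMMANDS = {"line break": "\n", "new paragraph": "\n\n"}


def interpret(utterance: str) -> tuple[str, str]:
    """Classify one recognized utterance as a command or as dictated text."""
    # Drop punctuation, lowercase, and collapse whitespace before matching.
    normalized = re.sub(r"[^\w\s]", "", utterance).lower()
    normalized = " ".join(normalized.split())
    # Only a whole-utterance match counts as a command, so "hairline break"
    # inside a longer sentence is never mistaken for "line break".
    if normalized in COMMANDS:
        return ("command", COMMANDS[normalized])
    return ("dictation", utterance)
```

A production system would more likely rely on acoustic cues and surrounding context than on exact string matching, but the sketch shows why matching whole phrases rather than sound fragments avoids the "hairline break" problem.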

In the future, a doctor might be able to say to the NLP system, “0.15 units of insulin per kilogram,” and the NLP system might be able to interpret that the doctor is looking for the system to write a prescription for the patient she’s talking with. It might comply because the doctor said her command slightly louder than the voice she used to speak with the patient and based on the context that the doctor is currently in a conversation with a patient who has diabetes.

As such, the NLP system might pull up a prescription form that it could then print out for the patient and/or send along to an insurance company. Again, NLP software is unlikely to be able to do this now, but it is a future possibility.

Planning for Surgery

Orthopedic surgeons could leverage AI and machine learning for planning surgery. AI and machine learning could also help orthopedics departments with medical imaging and the identification of distinct body structures in those medical images.

In orthopedics, it is imperative to understand the exact shape of the patient’s body structures, such as bones, cartilage, and ligaments, in the area where they are suffering from pain or disability. Medical images such as MRIs and X-rays can lack the details, the exact measurements and depth of the imaged structures, required to fully assess the situation.

Machine learning solutions could help with measuring the patient’s bones, ligaments, and cartilage from those images to extract more information from them. For example, an orthopedic specialist would want to know the size of each bone, ligament, and muscle in their patient’s ankle in order to more accurately replace parts of it if need be.

Determining these measurements could reveal acute inflammation or other problems that may be hard to see with typical medical imaging technology.

In addition, healthcare providers may use an AI-based predictive software to create estimates on the success of certain surgical procedures. This could allow healthcare companies to make better recommendations on how to go about surgery.

This type of software typically uses a scoring system to determine the likelihood of success for a given medical procedure and could estimate the score of alternate procedures that might be more or less to the patient’s benefit.

Healthcare companies seeking predictive analytics solutions can also come to vendors such as Medicrea for customized tools or implants for the surgeries in question in conjunction with those software solutions.

The company offers implants that are made specifically to fit each patient and that accord with the surgical plan the client healthcare company made using Medicrea’s UNiD platform.

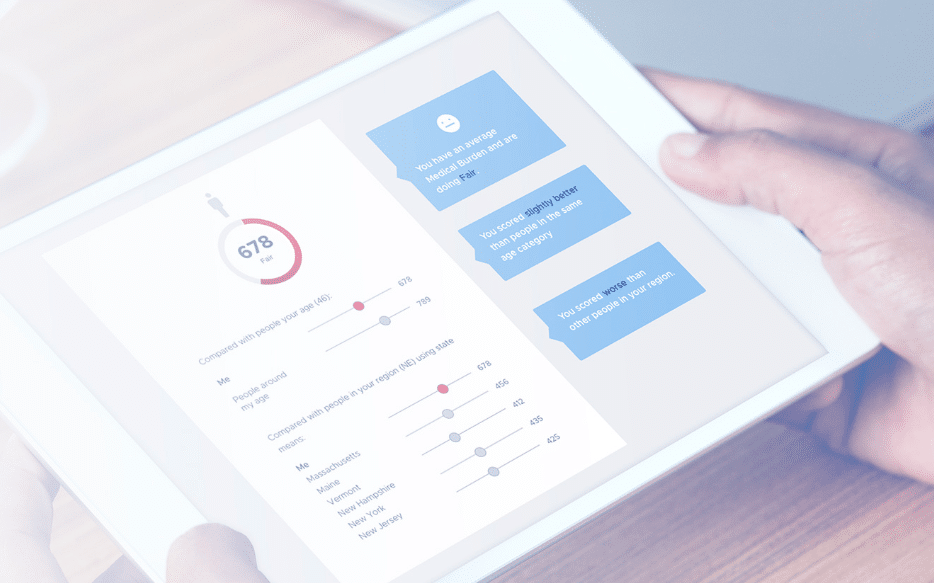

OM1 offers predictive analytics software they call OM1 Medical Burden Index (OMBI), which they claim helps providers predict the outcomes of surgeries. Their website also states the software can create a plan suggestion for those surgeries using predictive analytics. The OMBI calculates an estimate of how much a patient’s ailments and diseases are affecting their daily life.

The OMBI evaluation is a measurement of the combined burden on an individual patient from all of their medical problems, and is scored from 1 to 1000.

The OMBI scoring metric was generated from analyzing over 200 million patient profiles across the United States. OM1’s website states the OMBI score is a strong predictor of future patient resource utilization and mortality.

Below is an image from OM1’s website that shows what the OMBI software would look like on a tablet:

The machine learning model behind OMBI would have needed to be trained on tens of thousands of electronic medical and health records (EMRs/EHRs). Each factor that would debilitate a patient’s normal daily functions, such as being paralyzed or unable to speak, would be given a numerical value, and then run through the OMBI algorithm to provide the user with the OMBI score.
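As a rough illustration of the idea of assigning each debilitating factor a numerical value and combining the values into one score, consider the sketch below. OM1 does not publish its algorithm, so everything here is an assumption: the factor names, the weights in `FACTOR_WEIGHTS`, and the simple sum-and-clamp rule in `burden_score` are hypothetical stand-ins, not the OMBI method.

```python
# Hypothetical factor weights; OM1 does not publish the values it uses.
FACTOR_WEIGHTS = {
    "paralysis": 500,
    "unable_to_speak": 300,
    "chronic_pain": 200,
    "diabetes": 150,
}


def burden_score(factors: list[str]) -> int:
    """Sum the weights of a patient's distinct factors, clamped to 1-1000."""
    # set() ensures a factor listed twice is only counted once.
    total = sum(FACTOR_WEIGHTS.get(factor, 0) for factor in set(factors))
    return max(1, min(1000, total))
```

A trained model would learn how factors interact rather than simply adding fixed weights, but the clamp shows how any combination of inputs can be kept within the 1 to 1000 range described above.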

OM1’s software could then predict the complications that may accompany a patient’s disease, along with the volume of resources they will require in the future. This may require the user to include information about the patient’s health insurance into the software beforehand.

OM1 provides very few demonstration materials for its software. For example, we could not find an explanatory video about the software or any case studies on OM1’s website. That said, the company has raised $36 million in venture capital and is backed by General Catalyst and Polaris Partners.

The company’s website also states that the OMBI score performed better than a leading competitor called the LACE Index. This was reportedly determined at the American Heart Association Scientific Sessions in November 2017.

Analyzing Medical Images

With machine vision software becoming more widespread across the healthcare industry, it follows that medical imaging would be an applicable use case. Machine vision software for medical imaging typically consists of applications that scan medical images to extract more information from them, as we referred to in the previous section regarding orthopedics.

Some software may segment the medical images or 3D models it produces in order to highlight specific parts of the imaged area of the body or foreign or malignant bodies such as tumors or cancer cells.

RSIP Vision, for example, focuses primarily on orthopedic image scanning. Sigtuple, by contrast, developed software called Shonit that the company claims can extract information from images of blood. Sigtuple claims its solution can scan for blood cells in an image using microscopes programmed to upload pictures to the cloud.

By storing medical images in the cloud, Sigtuple says it helps facilitate telepathology for its client healthcare providers. The company also claims to offer remote access to blood smear information if a provider needs a more detailed understanding of a patient’s medical history, for example.

The existence of both of these applications could help mitigate the problem of non-digital data when working with AI. Because machine learning algorithms need to be trained on digital data, medical images must be digitized before being fed to an AI algorithm. RSIP Vision is meant to be used in conjunction with a medical imaging device such as an MRI scanner, and Shonit captures digital images natively on its microscopes.

We spoke to Zhigang Chen, Director of the Healthcare Big Data Lab at Tencent. When asked about what barriers have to be overcome to make AI work in healthcare, Chen said:

Without that foundation of data and the digitalization, it’s hard, or it’s almost impossible to get really good models out of it. So that’s the third point, which is, without digitalization, without this whole process being online and being digitalized, how are you going to realize or how are you going to bring the value of the AI back to the business?

Another AI medical image scanning software is the Netherlands-based SkinVision’s mobile app. The company claims the software checks skin lesions and moles for risk of cancer using machine vision.

The software purportedly categorizes photos of skin lesions by the amount of risk associated with the lesion. These risk thresholds are listed as low, medium, and high. Users who receive results that show high risk can also request their results be reviewed by the company’s dermatologists.

According to a 2017 study of the SkinVision app published in the journal of the American Telemedicine Association, the software scored lesions with 80% sensitivity. Additionally, the software detected malignant and premalignant conditions with 78% specificity. The study used a sample of 108 images and was conducted by the Department of Dermatology at Catharina Hospital Eindhoven in the Netherlands.
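For readers unfamiliar with the two metrics, sensitivity is the share of truly positive cases a test flags, and specificity is the share of truly negative cases it clears. The sketch below shows the standard formulas. The counts are made up to produce round figures and are not the study's data, which this article does not reproduce.

```python
def sensitivity(tp: int, fn: int) -> float:
    """Share of truly positive cases that the test flags as positive."""
    return tp / (tp + fn)


def specificity(tn: int, fp: int) -> float:
    """Share of truly negative cases that the test clears as negative."""
    return tn / (tn + fp)


# Made-up counts for illustration only; they are NOT the study's data.
tp, fn = 40, 10  # true positives, false negatives
tn, fp = 39, 11  # true negatives, false positives

print(f"sensitivity = {sensitivity(tp, fn):.0%}")
print(f"specificity = {specificity(tn, fp):.0%}")
```

The two numbers trade off against each other: a screening app tuned to miss fewer dangerous lesions (higher sensitivity) will generally raise more false alarms (lower specificity).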

ECG Testing and Emergency Management

In the case of an emergency, accuracy and speed are always the highest priority. This is especially true in the healthcare industry where medical professionals cannot wait for extended periods of time for computer systems to finish processing. This includes critical situations such as cardiac arrest or life-threatening injuries.

AI applications for these situations typically provide insights from the patient’s medical data using predictive analytics. A prescriptive analytics solution may also offer a recommendation for how to treat the patient.

For example, a patient’s ECG/EKG results could be run through a machine learning application to help doctors find abnormalities in the heartbeat.
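To make the idea of a heartbeat abnormality concrete, the sketch below flags beat-to-beat (RR) intervals that stray far from the typical interval. It is a simple fixed-threshold rule standing in for a trained model, not a machine learning application and not a clinical tool. It assumes R-peak timestamps have already been extracted from the ECG; the function name `irregular_beats` and the 20% tolerance are hypothetical choices.

```python
from statistics import median


def irregular_beats(r_peak_times: list[float], tolerance: float = 0.2) -> list[int]:
    """Return indices of RR intervals that differ from the median interval
    by more than `tolerance` (expressed as a fraction of the median)."""
    # RR interval = time between consecutive R peaks, in seconds.
    rr = [later - earlier for earlier, later in zip(r_peak_times, r_peak_times[1:])]
    if not rr:
        return []
    typical = median(rr)
    return [
        index
        for index, interval in enumerate(rr)
        if abs(interval - typical) > tolerance * typical
    ]
```

A machine learning approach would instead learn patterns from labeled recordings and could pick up abnormalities in waveform shape that a timing rule like this one cannot see.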

If the software in question is a prescriptive analytics application, it may provide recommendations for how to treat the patient listed in order of priority. It follows that an AI application could extract insights and make recommendations based on other types of medical data, such as blood sugar or alcohol levels, blood pressure, or white blood cell count.

Some applications might need to leverage machine vision technology in order to properly assess the patient’s situation and recommend the best treatments. This could be imperative for determining blood cell counts and lesions or other marks on the outside of the body.

Medical machine vision applications face the challenge of finding the best hardware to run them on. For some applications, an iPad is chosen for its portability and ease of use. Otherwise, a patient may need to be laid underneath a larger camera during care so that the doctors and the camera have a clear view of the patient.

Providing Information That Could Lead to Diagnosis

AI breakthroughs in assisting medical diagnostics continue to push the technology forward with innovations for each use case. We spoke with Dr. Alexandre Le Bouthillier, COO and Co-founder of AI and medical imaging company Imagia, about the possibilities for machine vision in medical diagnostics in the future. When asked about what breakthroughs healthcare leaders might expect from it in the future, Le Bouthillier said:

The breakthrough actually occurred a few years ago with deep learning with regards to what you can see with patient outcomes and even genetic mutations. Just by looking at an image, the computer can see if there’s any genetic mutations, so we wouldn’t need to do a blood sample or biopsy. The ultimate end goal would be to, just by looking at an image, be able to accelerate the standard of care. By combining all of the available data, we will be able to find the best treatment for each patient.

Perhaps one of the most prominent use cases for AI and ML applications in healthcare is in helping doctors with medical diagnostics. Currently, there are AI vendors offering mobile apps for information on patients’ symptoms, as well as chatbots that can be accessed through apps or company websites that offer a similar function.

Some smartphone apps are also built to process internet of things (IoT) sensor data through machine learning algorithms, which would allow the software to track heart rate. The IoT sensor could be attached to the smartphone itself, a smartwatch, or another wearable device along the lines of a Fitbit. The results would then be displayed in the patient’s smartphone app, which could include recommendations or reminders such as medication times.

Patients could use chatbot applications to quickly find information about their symptoms by typing them into the chat window. A machine learning model trained to recognize symptoms in typed text would then be able to find information on illnesses that correlate with the input symptoms.

It is important to note that the integration of these types of diagnostic applications may take some deeper consideration than is immediately obvious. We spoke to Yufeng Deng, Chief Scientist and Director of the North America Division of Infervision. Deng’s company is a machine vision firm with a specialty in medical imaging and diagnostics. When asked about what their most successful clients had in common, Deng said:

I think the first point is workflow integration because if you have a good AI model, that’s not enough. In the medical world, you have to integrate your solution seamlessly into the workflow of the doctors and the physicians, so that they could really use your tool to improve their efficiency, rather your tool is an extra step for them, and that could really decrease their efficiency. We have worked with a lot of hospitals and collaborators, and the first thing we talk to them is how do we integrate our tool into their medical system.

It is clear that for business leaders in healthcare to reliably see success with diagnostic AI applications, they will need to consider how those applications will affect the workflow at each level.

For example, a research and development team may need to adapt to a new user dashboard so that their research data can be easily accessed by other departments. If the process proved difficult for the research and development team, it could cause physicians to be less informed of critical details regarding their diagnoses.

Some AI diagnostic applications include machine vision software to assist doctors in diagnosing physical abnormalities in the body that could be signs of disease. Medical fields with AI research and applications for machine vision software include oncology and radiology.

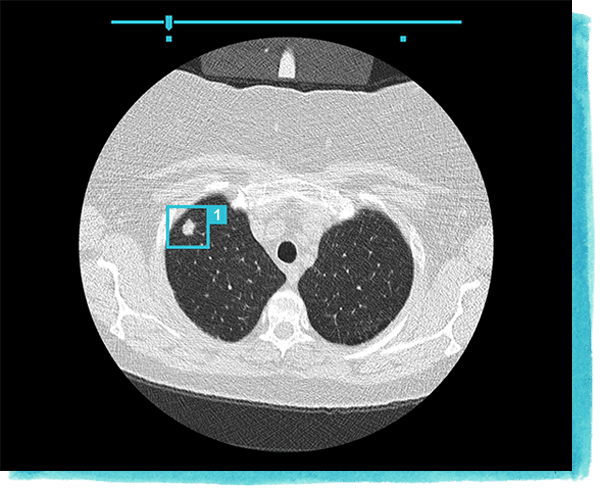

Aidence claims their machine vision software Veye Chest can help radiologists report on detected pulmonary nodules, or small growths in the lungs. They also claim the training dataset for the software was initially only 45,000 labeled scans.

The company claims the Veye Chest software can be integrated with a hospital’s existing reading and reporting software. We can infer this means an EMR/EHR system or database, though it is unclear whether the software is compatible with every vendor’s system, such as Epic’s EHR.

The software can purportedly detect the presence or signs of pulmonary nodules in CT scans of a patient’s lungs. This may help radiologists discern if the nodules are a threat to the patient’s health, and possibly if they are malignant.

Veye Chest displays a text description of its findings along with an annotated image of the lungs. Radiologists could use the annotations and text to diagnose the patient more accurately and to record the size of the pulmonary nodules throughout treatment.

Below is an image from Aidence that shows what the software could display on a doctor’s computer screen:

The Veye Chest software has received the CE marking, which is a certification indicating conformity with the standards of the European Economic Area. The company does not list any case studies regarding the software’s performance with clients, but the CE certification does approve the software’s use across the European Union.

Aidence’s listed clientele consists largely of hospitals in the Netherlands, including Albert Schweitzer Hospital and Tergooi Hospital.

Mark-Jan Harte is co-founder and CEO at Aidence. He holds a master’s degree in Computer Science from Eindhoven University of Technology. Prior to working at Aidence, Harte served as co-founder and CTO for smaller Dutch marketing and tech firms.

Monitoring Surgery

Monitoring surgery is a use case for which several machine vision applications may be applicable. As with oncology and radiology, machine vision software could be used to track the progress of surgery.

Some vendors may offer software that follows a step-by-step surgical procedure and recommends the next step when it detects that the surgeon has completed the current one. Others may monitor the surgery for patient vital signs or adherence to the hospital’s best practices. Monitored conditions could include blood loss, blood sugar levels, heart rate, and blood oxygen levels.

Gauss offers their iPad software Triton, which they claim can help physicians keep track of patient blood loss using machine vision.

Physicians can purportedly point the iPad’s camera at used surgical sponges so that it can measure the patient’s blood loss and the rate at which they are losing blood. In order to determine these factors accurately, Gauss likely trained the machine learning model behind Triton on millions of images of surgical sponges with various amounts of blood on them. The algorithm could then discern the images that correlate to higher rates of blood loss.

A surgeon could then hold up a used sponge to the Triton-enabled iPad, and the software would determine how much blood is present on the sponge. The software purportedly uses this information to determine how much blood the patient has lost before or during a surgery. It is unclear how Gauss’ machine learning model determines blood loss amount and blood loss rate.
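Because Gauss does not describe its method, the following is only a sketch of the bookkeeping that could follow once a per-sponge estimate exists. It assumes some upstream model has already estimated the milliliters of blood on each sponge; the function name `blood_loss_summary` and the input format are hypothetical, and the averaging rule is an assumption rather than Triton's approach.

```python
def blood_loss_summary(readings: list[tuple[float, float]]) -> tuple[float, float]:
    """Summarize blood loss from per-sponge estimates.

    readings: (minutes_since_surgery_start, estimated_ml_on_sponge) pairs.
    Returns (total_ml, average_ml_per_minute up to the latest reading).
    """
    if not readings:
        return (0.0, 0.0)
    total_ml = float(sum(ml for _, ml in readings))
    # Average over the time from the start of surgery to the latest scan.
    elapsed_minutes = max(minutes for minutes, _ in readings)
    rate = total_ml / elapsed_minutes if elapsed_minutes > 0 else 0.0
    return (total_ml, rate)
```

For example, sponges scanned at 10, 20, and 30 minutes with estimates of 50, 70, and 30 ml would give a running total of 150 ml and an average rate of 5 ml per minute.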

Below is a short demonstrational video on how Triton works:

Gauss does not display its marquee clients on its website, but the Triton software has been approved by the FDA. The company raised $51.5 million in venture capital and is backed by Softbank Ventures Korea and Polaris Partners.

Gauss’ website cites an independent study on the Triton software as reported in the American Journal of Perinatology. The study compared the software’s measurements with surgeons’ own estimates of how much blood loss occurred in C-section patients across 2,781 participants. The study states that Triton identified significant hemorrhages in the patients more frequently than the surgeons’ estimates did.

Surgeons used less blood product on C-section patients when Triton was involved than when it was not. C-section patients whose physicians used Triton were also able to leave the hospital earlier than those who underwent traditional C-section procedures.

Siddarth Satish is the CEO and a co-founder of Gauss. He holds a master’s degree in Bioengineering from Berkeley. Satish was also part of a fellowship at Stanford for surgical simulations.