This article is based on a presentation at a joint United Nations / INTERPOL conference for law enforcement agencies held in Singapore, entitled “Machine Learning and Machine Vision Cutting-Edge Trends and Implications in Law Enforcement,” and delivered by Emerj CEO Daniel Faggella.

Enforcing the law is an expensive business. According to a 2012 report by the Justice Policy Institute (the most recent report we could find on their site), the US spends $100 billion per year on policing.

Today, many companies are using artificial intelligence technology to develop various methods to prevent and detect crime in both the private and public sectors. Some AI software identifies locations where crimes are most likely to happen based on data gathered from previous events. Others use machine vision-based algorithms to solve crime using facial recognition and validation software.

AI-related tools are beginning to emerge in law enforcement, but many of the applications that will make their way into policing weren’t intended for policing at all. AI tools for advertisers, retailers, or building managers will be re-purposed for security applications, as will many novel academic AI experiments (as we’ll explore in the article below).

These applications open up new vistas of security potential, but they also allow for new criminal uses and new ways to deceive both the public and law enforcement.

Machine vision is ripe for applications in medicine, heavy industries, advertising, and of course, law enforcement, where video surveillance data (at the level of a county or a country) can be massive.

This article will focus on two different types of visual machine learning applications:

- Detection Technologies – Applications that detect patterns in images or videos, locating items, identifying behaviors, identifying people, and more.

- Creation Technologies – Applications that generate new visual information – either as video, static images, or augmented reality imagery.

At the end of this article, I’ll discuss the policymaking implications of these technologies in the years ahead.

See the presentation deck from this original presentation directly below and the full article further down:

Visual Detection Technologies for Law Enforcement

1. Facial Recognition

Perhaps the most recognizable of machine vision technologies is facial recognition. Some businesses use types of facial recognition software to help improve the customer experience as well as prevent fraud.

In law enforcement, however, the value of facial recognition is for surveillance, apprehension, and crime prevention, and quite a few companies are developing software to make it more effective.

Recognizing a wide range of emotions can be very helpful in detecting when a person may have in some way been involved in a crime either as a perpetrator or an eyewitness.

A face recognition system may be able to detect certain expressions in people’s faces that may indicate guilt or that a person is keeping secret knowledge that can help identify persons of interest for a given crime. Having that on record may prove to be a valuable tool in solving crimes or detecting possible criminal activities.

Another way that facial recognition software can help law enforcement is to help find a person of interest. This is not always easy, especially when that person does not want to be found.

One company that works to make this possible is Kairos. When presented with an image or video of a person, such as a mug shot or video footage, they claim their facial recognition software does not only train itself on that particular face as it sees it.

It also performs a series of experiments by placing that face at different angles, under various lighting, and wearing a variety of things that may cover the face, such as glasses, hats, or beards. The system extracts all these data points and associates them with a particular face.
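The kind of variation described above can be illustrated with a minimal data-augmentation sketch. This is not Kairos’s method (the company’s pipeline is not described in this article); it simply shows, on a toy grayscale image stored as nested lists, how one face image can be turned into several training variants. The function names and the toy image are assumptions made for illustration, and the horizontal flip is only a crude stand-in for a change of viewing angle.

```python
import random

# A grayscale image is a list of rows; each pixel is an int from 0 to 255.

def adjust_brightness(img, factor):
    """Simulate different lighting by scaling every pixel, clamped to 0-255."""
    return [[max(0, min(255, round(p * factor))) for p in row] for row in img]

def flip_horizontal(img):
    """Mirror the image left to right (a crude proxy for a different angle)."""
    return [row[::-1] for row in img]

def occlude(img, top, left, height, width, fill=0):
    """Cover a rectangle with a flat value, imitating glasses, a hat, or a beard."""
    out = [row[:] for row in img]  # copy so the original image is untouched
    for r in range(top, min(top + height, len(out))):
        for c in range(left, min(left + width, len(out[r]))):
            out[r][c] = fill
    return out

def augment(img, rng):
    """Return four synthetic variants of a single face image."""
    h, w = len(img), len(img[0])
    band = max(1, h // 4)
    return [
        adjust_brightness(img, rng.uniform(0.5, 1.5)),          # lighting change
        flip_horizontal(img),                                    # mirrored view
        occlude(img, top=h // 4, left=0, height=band, width=w),  # band across the eyes
        occlude(img, top=0, left=0, height=band, width=w),       # band across the forehead
    ]

# Toy 4x4 "face" used only for demonstration.
face = [[10 * (r + c) for c in range(4)] for r in range(4)]
variants = augment(face, random.Random(0))
print(len(variants), "variants generated from one image")
```

A recognition model trained on all of the variants, each labeled with the same identity, has a better chance of matching that identity when the real-world footage is darker, mirrored, or partly covered.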

By doing this, the software can identify the same face moving in a crowd even under less-than-perfect lighting conditions. Below is a demonstration of Kairos Emotion Analysis:

2. Facial Validation

On the flip side of the facial recognition coin is facial validation. Instead of finding people who may be in disguise, facial validation software detects when a person is not who they seem. It is possible for people to try to get around facial recognition systems to commit a crime.

The goal may be to unlock a phone, get into a banking app, or gain access to a secure facility. They may use a gadget like an iPad with the face of another person on it, or a printout of another person’s face, to trick a facial recognition system into granting them access.

Companies like TrueFace.ai are working on technology that detects whether the image in front of it is a digital or physical picture or an actual human face. The technology is purportedly simple to use. The TrueFace.ai system claims to work not only with still images, but also with videos and with many types of cameras. It can also be embedded in existing facial recognition systems.

In fact, in the future, it’s possible many facial recognition technologies will have this type of fake-image detection built into them. Below is a video demonstration of TrueFace.ai’s facial validation software:

3. Movement and Occupancy

Movement and occupancy detection technology has obvious uses in real estate and retail industries. It could determine the occupancy level of different rooms within a building or it could help in managing the traffic flow on the premises.

One company that focuses on using machine vision to do this in physical premises is PointGrab. Based in Israel, the company installs physical sensors in different rooms and hallways to figure out, in real time, how many people are in a given room, where they go, and where they are coming from. The company is still in the early phases of selling the technology, but because it only involves sensors that are not complicated to install, it may become commonplace in the near future.

The 1-minute marketing video below shows some of the purported capabilities of PointGrab’s CogniPoint product, along with what appear to be screenshots of its user interface:

Of course, the current issue with this type of technology is privacy. Companies selling this software may have to guarantee the anonymity of individuals – but this anonymity seems somewhat hard to maintain.

For example, if Bill Stevens (a hypothetical employee) works in Room 206, it may be reasonable to assume that whoever walks out of Room 206 is probably Bill Stevens, and that the red dot in that room is Bill himself.

The user only sees Bill Stevens as a red dot on their screen that shows the direction he is moving and whether he is stationary or in motion. The user can thus track Bill Stevens’ movement patterns without technically breaking any privacy protection laws, because all the user sees is a red dot.
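The Bill Stevens example above can be made concrete with a short sketch of how an “anonymous” dot might be re-identified. Everything here is hypothetical: the log format, the persistent track IDs, and the seating chart are assumptions made for illustration, not a description of PointGrab’s product or data.

```python
from collections import Counter

# Hypothetical anonymized sensor log: (track_id, minute_of_day, room).
sightings = [
    ("dot-1", 540, "206"), ("dot-1", 600, "206"),
    ("dot-1", 660, "kitchen"), ("dot-1", 720, "206"),
    ("dot-2", 540, "210"), ("dot-2", 600, "210"), ("dot-2", 660, "210"),
]

# Hypothetical seating chart that many people in the building would know.
room_assignments = {"206": "Bill Stevens", "210": "Employee B"}

def guess_identities(sightings, room_assignments):
    """Guess who each dot is from the room where it is seen most often."""
    rooms_by_track = {}
    for track_id, _minute, room in sightings:
        rooms_by_track.setdefault(track_id, Counter())[room] += 1

    guesses = {}
    for track_id, counts in rooms_by_track.items():
        most_common_room, _count = counts.most_common(1)[0]
        # None means the room has no single known occupant.
        guesses[track_id] = room_assignments.get(most_common_room)
    return guesses

guesses = guess_identities(sightings, room_assignments)
for track_id, name in guesses.items():
    print(track_id, "is probably", name)
```

No face or name is ever recorded by the sensors in this sketch, yet a simple join against outside knowledge is enough to attach a likely name to each dot.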

In terms of law enforcement, the ability to detect movement and occupancy may have a profound impact on carrying out certain activities.

For example, if law enforcement was executing an arrest warrant, it would be useful to know where an individual is at a given time. Such technology may also be useful in reconstructing the movements of an individual at the time of a crime.

4. Behavior Detection

Behavior detection is another interesting application of machine vision. It can help monitor the health of certain people, for example. Companies such as AiCure claim to be able to monitor if a senior citizen is taking the pill they are supposed to take every Thursday at 5 PM using a camera. The senior citizen will show the camera the pill and swallow it. The system is trained to detect that kind of behavior.

Another company, StopLift, uses behavior detection to prevent shoplifting at the counter. The StopLift system detects when somebody does not scan an item, moves the item over the scanners, or engages in any behavior that suggests shoplifting.

These are both discrete behaviors, and detecting them is actually a challenging task. It requires many data points to describe specific behavior, and many behavior data sets would have to be integrated into the system.

Swallowing a pill is a relatively simple and discrete action, and it is just one scenario. With the second example, however, there are many ways to shoplift at the counter, and the StopLift software must have separate data sets for each one. That is not to mention the efforts of competitors working on detecting theft down the aisles, which would require completely different sets of data for the machine to process, such as people putting something under their shirts or hiding merchandise in a bag.

Many companies are already using tools like StopLift today to minimize their losses to petty theft. Below is a video showing footage of StopLift’s software in use at an actual store:

However, the use of behavior detection technology in law enforcement is still in development. Hypothetically, it can be used to detect potential or ongoing criminal behavior. For example, a system may detect a man acting suspiciously, such as reaching into his jacket as he gains rapidly on someone with a handbag.

It may be a potential holdup, or he may simply be in a rush and reaching for his phone to call a cab. An effective behavior detection system has the potential to have a big impact on crime prevention, but it will take massive amounts of time and resources to put it in place.

5. Non-Visual Proxies for Behavior

It is also possible to make use of behavior detection technology with non-visual data. This would still use machine vision algorithms, but the visual data would be proxied.

One way to do this is through heat signatures. MIT’s Computer Science and AI Lab (MIT CSAIL) is working on collecting heat detection data and turning that into a kind of proxy for human movement. Using heat as a proxy for visual data, the lab can track the location and movement of people in a given area. It is possible to do this across certain barriers, or literally “look through walls,” as shown in the video below:

The advantage of using non-visual proxies is two-fold. One is that the data-capturing equipment does not need to be in the actual location of the subjects under observation. The people inside a room will have no idea they are being observed or that their movements are being recorded.

The other advantage is that it does not require the usual factors needed for visual data, such as light and a direct line of sight. The recorded persons can be anywhere in the room, and the heat-sensing equipment would be able to detect them.

These two features of non-visual proxies are particularly useful for law enforcement. This is the beginning of a phase when all kinds of proxy data will be used in place of visual data. Aside from heat, it is possible to gather behavior and movement data using vibration and sound.

The collected data will allow the system to make solid estimations of the number of people in the room, their location, and their movements or lack thereof. This is all useful information a SWAT team may want to know before commencing a raid on a closely guarded room or building, for example.

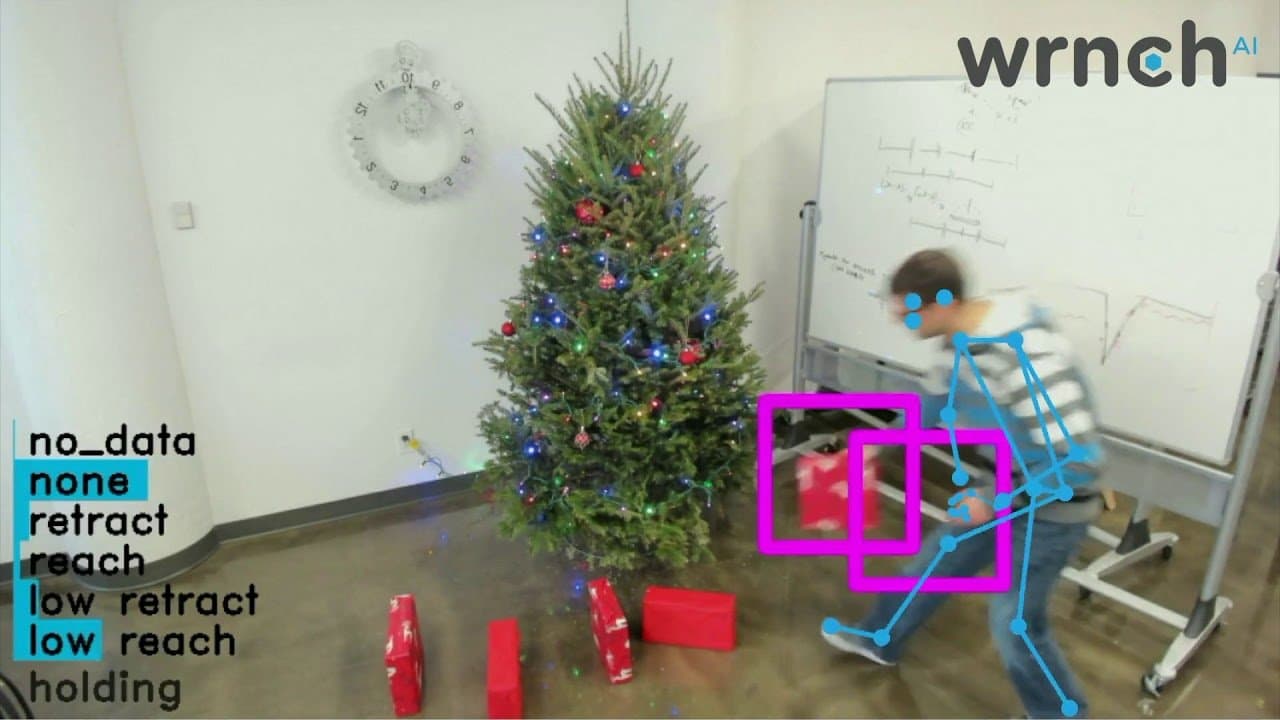

6. Skeletal Construction

A type of detection technology that seems to go a bit into the realm of science fiction is skeletal construction and behavior detection. The purpose of this type of detection technology is to predict future behavior based on a person’s movement triggers. This allows the software to make accurate predictions about a person’s movements and behavior in a given situation.

This can be a useful feature in law enforcement to gather information about how certain movements by an individual may indicate criminal intent. It may also help them track criminals as well as prevent accidents.

Such software may help inform decisions for law enforcement. It can also be used for what I’ve referred to here as “creation technologies”, as we’ll see in the next example below.

Creation Technologies

1. Augmenting Human Movement

The whole category of visual creative applications of AI has not seen much coverage in security circles, but it deserves attention. We interviewed Wrnch CEO Paul Kruszewski back in December of last year.

Wrnch uses the same skeletons used for behavior detection to create a layer over a person’s physical body on their camera. By doing this, a user can hold up an iPad and make that person seem to wear a winter jacket, take on the guise of a Stormtrooper from Star Wars, or anything else they want, and make it look realistic. If this person waves, for example, it would look like the Stormtrooper is actually waving.

Below is a 12-second demo video of what this augmented human movement looks like:

This is an interesting application of machine vision for special effects in movies or gaming graphics. The software can create realistic, programmatically-generated human movements and poses, making them indistinguishable from the real thing.

This could also hypothetically be a way to veil people in a video or image. The technology can allow a user to alter a person’s skeleton to look different, and it would be difficult to notice the difference even when that person is moving – possibly by imposing onto them a hat, jacket, or other clothing that they weren’t actually wearing at all.

While the technology still needs some time to mature (as the demo above illustrates), it is reasonable to suspect that this kind of technology will be able to be used for tampering with surveillance footage or other video and image data.

Criminals can use the technology to hide their presence in security footage or to provide themselves with an alibi (either by putting someone else in their place in a video, or putting themselves somewhere where they never were). Law enforcement can use it to hide witnesses or to recreate a crime scene to convince a suspect that the police have proof of participation in a crime during an interrogation.

In other words, both opposing parties could doctor video evidence or claim it isn’t evidence of anything. I’ll address this in greater depth at the end of this article in the policy considerations section.

2. Future-Casting Videos

The whole concept of The Minority Report may seem far-fetched, but it may not be for much longer. One application of creation technologies is the ability to predict the future. While future-casting videos are not actually the projections of people who can see the future (precogs), they do take you a few seconds ahead in time through predictive analysis. In law enforcement, this is a long way from a pre-crime division, but it has interesting possibilities.

Who is driving this type of technology? MIT CSAIL is in the early stages of developing ways to predict the next 5 or 10 seconds of video. They take a video of a beach with waves lapping up on the shore and plot the data points of the movement of water.

At the end of the video, the system can predict what the next 5 or 10 seconds of that video will look like. The technology is not yet perfect, but it can already predict if a certain wave will crash and splash and if another one is small enough to simply fade into the water. That is impressive enough.

The 1-minute CSAIL video below demonstrates the technology in action:

Hypothetically, this can be used to make people believe that something happened when it did not. It can show them an AI-generated view of events. For example, the system can programmatically create a video showing a person turning right, when in reality, the person turned left. This would have obvious ramifications for law enforcement, and could be used by law enforcement or by criminals.

For example, law enforcement can use it to convince a suspect they have video proof of a crime, when in fact the act itself was not recorded. On the other hand, the criminal can present video proof that no crime was committed.

Another way future-casting videos can help law enforcement is in combination with behavior detection data sets. The system can predict the potential for a crime or accident. For example, it could predict, based on body language, whether a person observed in front of a store is likely to break in.

There would be ethical issues with this kind of pre-crime technology, and the potential for biased decisions (based on race, neighborhood, gender, etc.) is not to be overlooked.

While the initial experiments of these technologies are impressive, it’s unlikely that the technology will be used in police surveillance in the near future. It is somewhat probable that technology of this kind will be used in self-driving cars – predicting collisions or common traffic incidents.

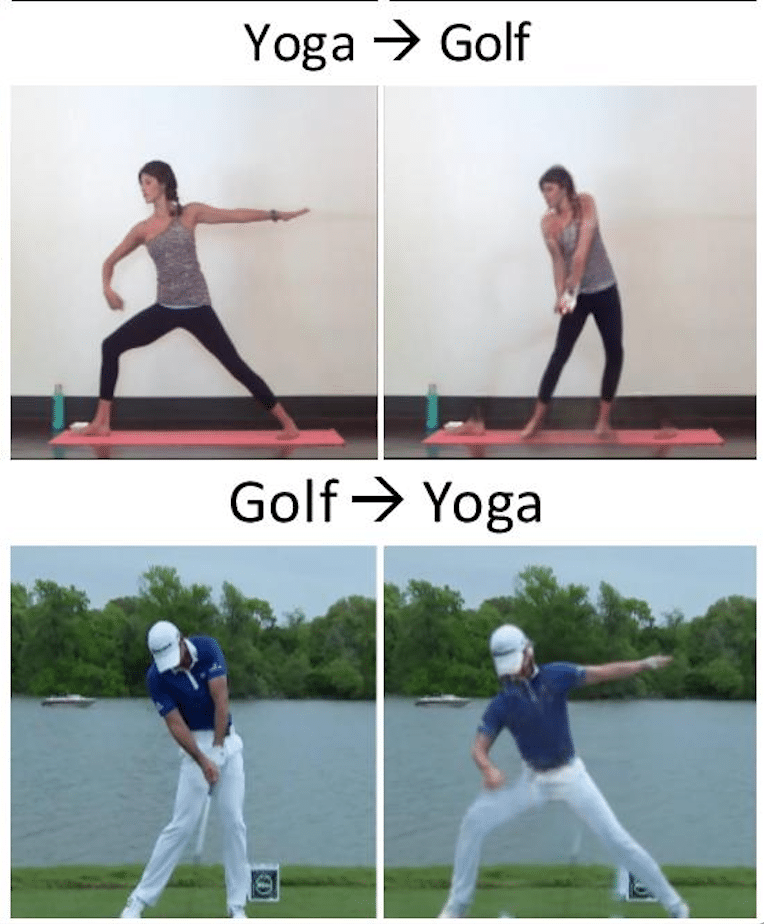

3. Manipulate Body Movement in Video

Another instance of how creation technology can programmatically generate visual content is by manipulating body movement.

MIT CSAIL’s news page features a June 2018 post called Put any person in any pose. They claim their software can take images or a video of an individual executing certain behaviors, such as playing a sport, walking, or talking, and then, using that same person’s body in the same environment, have them do something else (as seen in the image above). It will take the same body with the same dimensions and move it in a different way.

4. Deep Fakes

According to Wikipedia, the term “deepfakes” seems to have originated with its applications to pornography. Videos of pornography were overlaid with the faces of various celebrities to create a particularly vile class of entertainment which was banned from Reddit, Twitter, and other platforms.

This technology is already out there for anyone to use. There are small, affordable apps that allow a user to wear somebody else’s face. They do this by mapping the points of the user’s face to the points of a target face. When the user talks or makes any movement, the app will show the target person’s face doing the facial movements on top of the user’s face.
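The point-mapping idea described above can be sketched, in heavily simplified form, as a geometric alignment problem. The sketch below fits the scale, rotation, and shift that best move one set of 2D facial landmarks onto another in the least-squares sense. It is an assumption-laden illustration only: real face-swap apps rely on far more elaborate models, and the landmark coordinates and function names here are made up for the example.

```python
import cmath
import math

def fit_similarity(src, dst):
    """Least-squares fit of w = a*z + b, with 2D points treated as complex numbers.

    abs(a) is the scale, cmath.phase(a) is the rotation, and b is the shift.
    """
    if len(src) != len(dst) or len(src) < 2:
        raise ValueError("need two equally sized lists of at least two points")
    z = [complex(x, y) for x, y in src]
    w = [complex(x, y) for x, y in dst]
    z_mean = sum(z) / len(z)
    w_mean = sum(w) / len(w)
    numerator = sum((wi - w_mean) * (zi - z_mean).conjugate() for zi, wi in zip(z, w))
    denominator = sum(abs(zi - z_mean) ** 2 for zi in z)
    if denominator == 0:
        raise ValueError("source points must not all coincide")
    a = numerator / denominator
    b = w_mean - a * z_mean
    return a, b

def apply_similarity(a, b, points):
    """Move points with the fitted transform and return (x, y) tuples."""
    moved = [a * complex(x, y) + b for x, y in points]
    return [(p.real, p.imag) for p in moved]

# Made-up landmarks: two eyes, nose tip, and mouth on the user's face...
user_face = [(0, 0), (2, 0), (1, 1), (1, 2)]
# ...and the same four landmarks on a target face that is larger, rotated, and shifted.
target_face = [(5, 5), (5, 9), (3, 7), (1, 7)]

a, b = fit_similarity(user_face, target_face)
scale = abs(a)
rotation_deg = math.degrees(cmath.phase(a))
aligned = apply_similarity(a, b, user_face)
print("scale:", scale, "rotation (degrees):", rotation_deg)
```

Once the two sets of landmarks are aligned, every movement of the user’s landmarks can be carried over to the corresponding points on the target face, which is the basic intuition behind the overlay the apps produce.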

The BBC explored and explained the technology pretty well in a “BBC Click” video episode from earlier this year:

The implications are immense. Imagine a president giving a speech. Now imagine someone changing two or three sentences of that speech using voice and face replication. It would not be necessary to manipulate the whole video. All it takes is to change just a small part of the speech to say something different from what was actually said, and that could have serious consequences in terms of the reaction of people hearing it.

This would be the epitome of fake news, and unfortunately, it will be extremely easy to do convincingly in the near future as these creation technologies become more sophisticated and widely available.

Two Concluding Concerns for Public Policy

Through my analysis of machine vision-related technologies in law enforcement, and in one-to-one interviews with the founders behind them, it is clear that they pose opportunities both for crime prevention and for crime itself.

For the sake of brevity, I’ve chosen to avoid analyzing a dozen individual hypothetical uses of these technologies (I’ll save that for another article). Rather, I’ll address what I consider to be the two biggest overarching “themes” of ethical considerations for detection and creation technologies, respectively:

Detection Technologies: Place a Priority on Sensors and Data Collection

The Gist: Settling laws around the ethical use and storage of data is important – but governments and businesses will do whatever they please with data behind closed doors.

Our initial conversations should be – first and foremost – about where sensors can be placed in public places or places of business. Data collection should be the first debate – as it sets the stage for the use of that data – and it can be more clearly enforced.

People have a right to expect a certain amount of privacy. However, the consensus seems to be that the concept of privacy outside the home may not be around for much longer. While governments, people, and companies should focus on the policies they want to put in place for these technologies, it may be increasingly impossible to enforce them.

For one thing, it would be extremely difficult to keep governments from using them. In the US, privacy is a major issue. The concept of a Big Brother type of surveillance has met with heavy criticism from civil rights activists as well as the general population. However, this may not hold true for other cultures.

Many countries may be more accepting than the US of this increased scrutiny in the spirit of increased safety and security. They may agree with a famed quote from Confucius:

The virtue of a noble ruler is like the wind; the virtue of his subjects is like grass. If the wind sweeps across the grass, the grass will bend.

Some societies are more likely to be the grass, and I believe that as societies like China continue to implement over-the-moon-level Big Brother-like surveillance, this will shift the global perception of normal, and perpetuate more of the same technologies. It is entirely possible that when more societies accept granular surveillance under all circumstances, other societies will follow suit.

Another factor working against the regulation of the technology is that it’s difficult to regulate the use of collected data. Even if there were laws preventing companies or governments from gathering certain types of data without a license, enforcing them would be difficult. There is no easy way to monitor data usage. A company can simply port and duplicate the data in another location and do what they want with it.

For privacy-protection policies to have any chance of enforcement, the focus should be on the physical components of data collection. Policies can specify zones and spaces where companies can legally put monitoring equipment. This will at least limit the kind of data the company can collect. It is not going to be easy with cameras and sensors becoming progressively smaller, but there is a possibility it will work in many cases.

Creation Technologies: Prepare for a Future Where Visual Media is Divorced from Facts

The Gist: What was once a reliable representative “artifact of a past reality” – such as videos, images, and audio recordings – will no longer be so. We need to develop solutions to allow law enforcement, citizens, and media to separate fact from indistinguishably “real”-looking fiction.

Creation technology has the same dynamic as cybersecurity: people on opposite sides are racing to get ahead of each other. Law enforcement has to be ready to counteract any deceptive practices that may result from the spread of creation technology. Within the next half-decade, and possibly in as little as two to four years, any high school student will be able to generate a video programmatically, creating almost anything for $10.

There is no one way to fix this potential problem. Law enforcement will have to continually find ways to detect increasingly sophisticated altered images and videos. It will be an ongoing battle that few people in security circles even discuss. The potential for damage is great, yet most people do not appreciate the dangers and give them little attention. The consequences of this inattention will be apparent soon enough.

No one will be able to trust his or her own eyes (or ears) any longer. That time has not yet come, but imagining future implications can go a long way towards managing the new technologies more effectively. I hope that in future INTERPOL and United Nations events, these issues will be grappled with further.

Header Image Credit: straitstimes.com