Picking first AI projects is challenging, and leadership is right to be wary of making the wrong investment. The challenge lies in both (a) identifying candidate projects, and (b) ranking them to determine which ones to pursue.

Leaders might presume that an AI project could fail because the right data doesn’t exist, or in-house talent isn’t experienced enough to handle the project.

But many more projects fail long before data or talent concerns even factor in. Projects often fail by being outright misinformed in terms of what the technology can achieve, and when/how an ROI might be achieved.

These projects are predicated on obtaining unrealistic results, or achieving an unrealistic level of capability. This almost always involves an over-emphasis on short-term AI results without any consideration for long-term strategic benefits, and no ties to an existing strategic mandate.

In this article, we break down Emerj’s 4S “Bullseye” model for determining initial AI projects. This framework is intended to be a set of criteria for ranking projects that helps to eliminate many of the most critical causes of AI project failure, while helping teams discover early opportunities for a realistic AI ROI.

Picking AI Projects – Using the 4S “Bullseye” Model

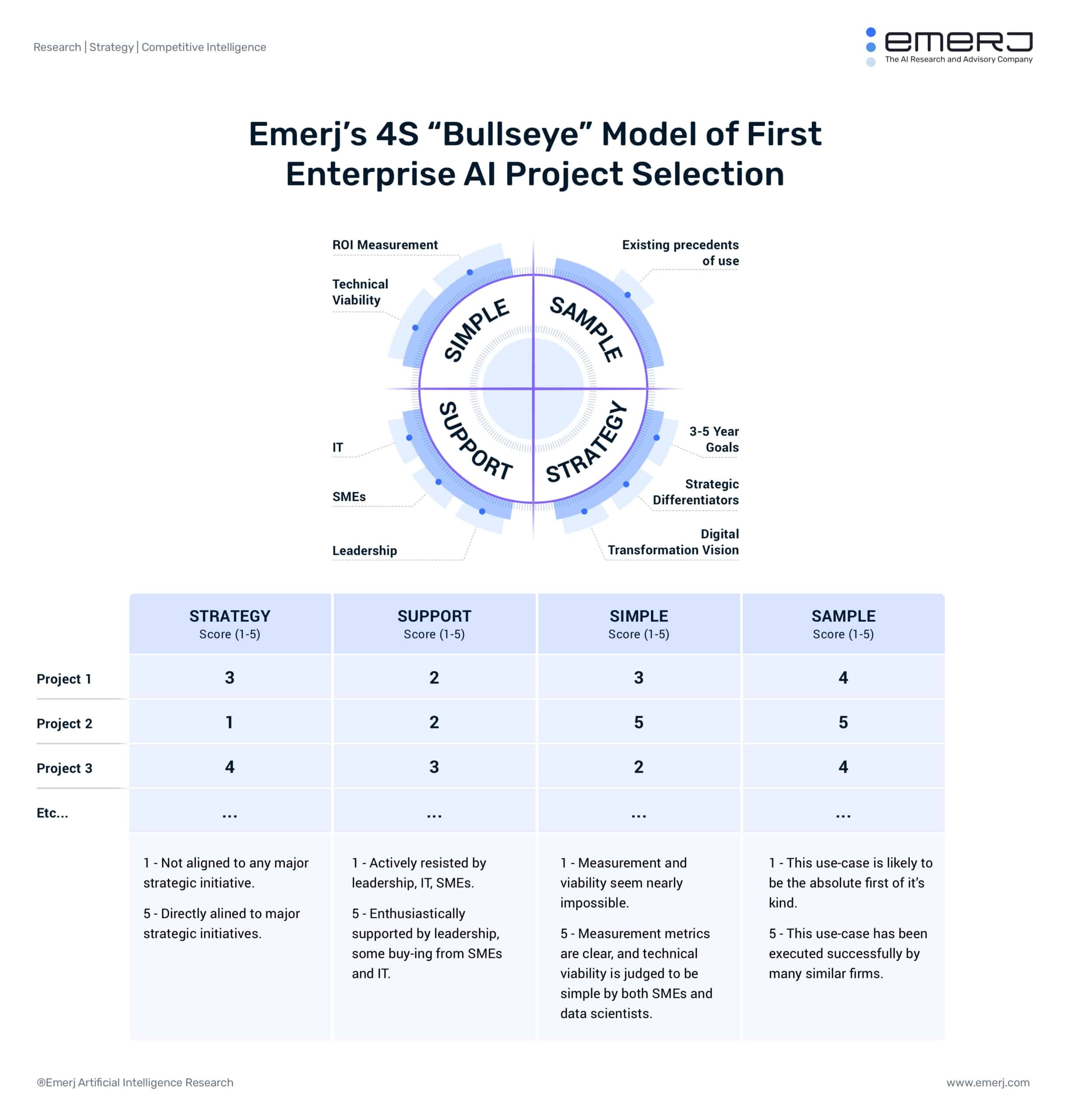

The “Bullseye” model relies on four distinct scoring factors, each of which serves not only to inform the potential ROI of a project, but also to screen out potential causes of project failure.

We’ll discuss each of the four factors in depth, and then put the pieces together and walk through how to use this framework.

Strategy

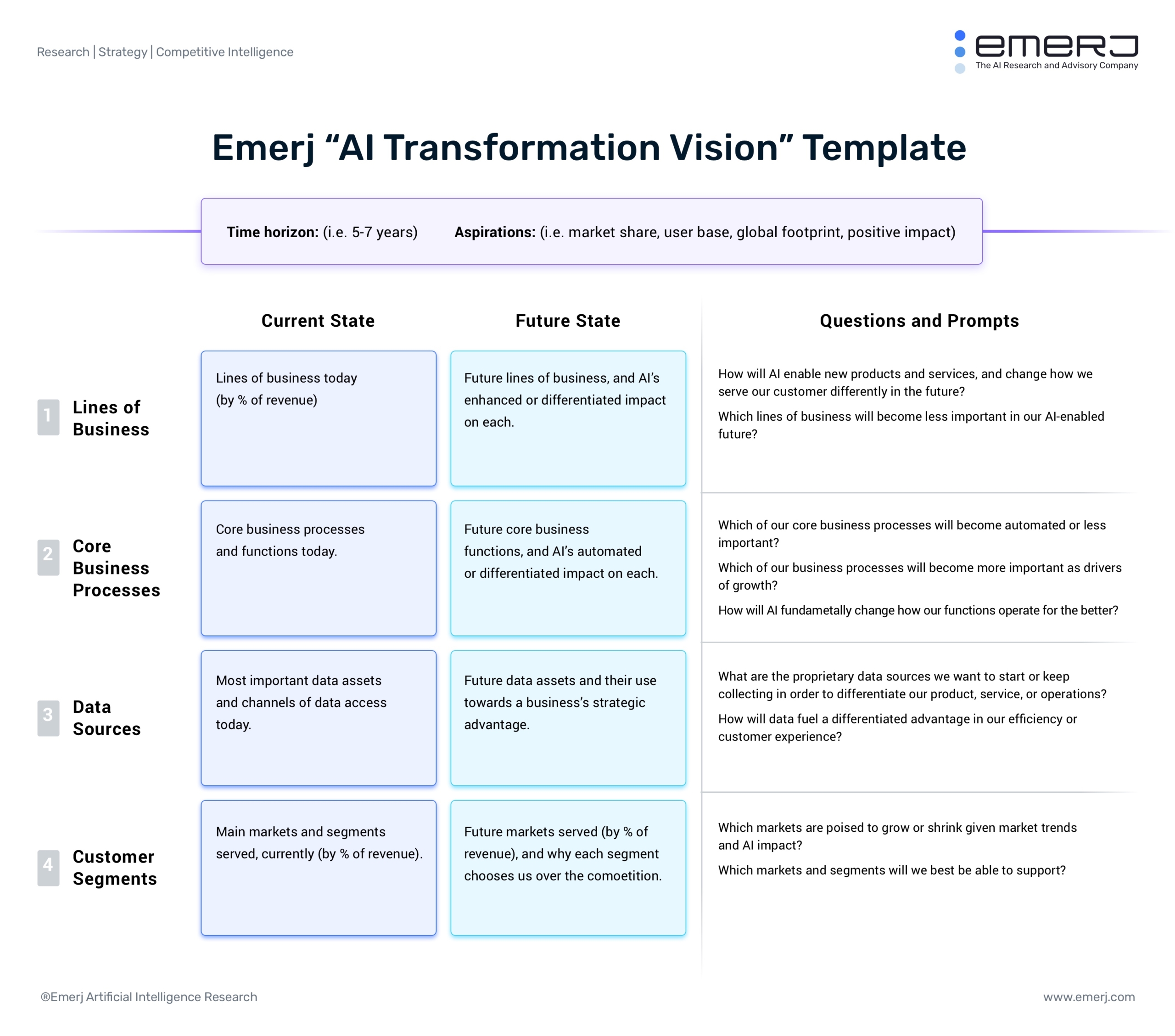

-

Early AI projects should ideally be aligned with a great AI Transformation Vision. Source: Emerj Plus AI Best Practice Guides. Description: Strategy refers to the degree to which a project ties directly to long-term strategic outcomes for the organization or department (or what we often refer to as “strategic anchors”).

- Why it Matters: A project wholly disconnected from strategic objectives won’t get the kind of executive support it needs. Overly short-term projects without any tie to long-term advantage are often destined to fail (read our article on Setting AI Expectations), so it’s important to find projects that have true alignment to strategy, and can serve as the first step along a coherent AI roadmap – building AI maturity in line with long-term aims.

- Scoring (1-5):

- 1 – A project with no real alignment to any strategic outcomes or long-term transformation vision.

- 5 – A project with direct alignment to specific strategic outcomes – a logical first step in building long-term advantage for the organization.

Support

-

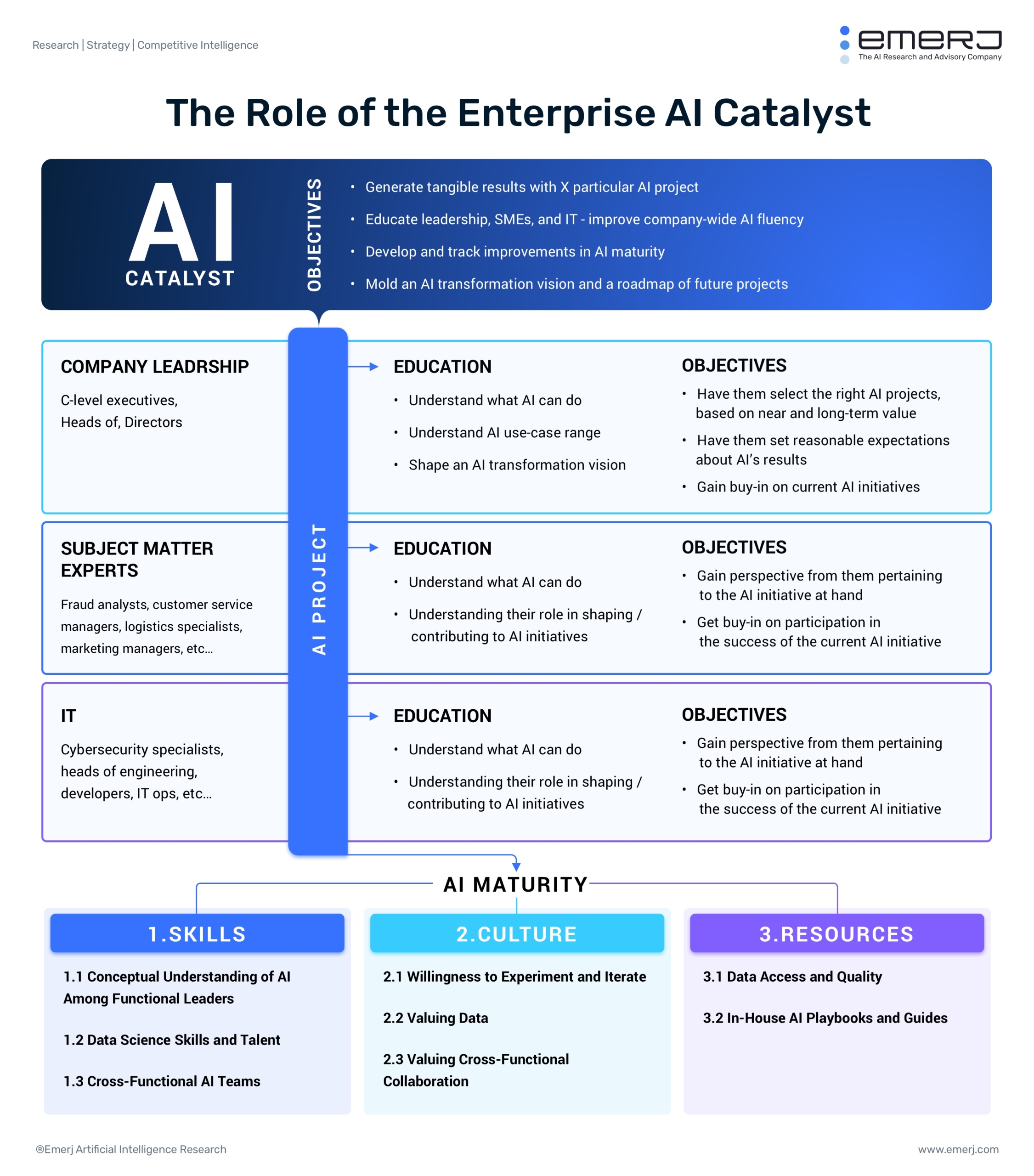

Whether you’re an internal or external AI catalyst, involving and informing stakeholders is one of the most critical elements in achieving sustained ROI. Source: Emerj Plus AI Best Practice Guides. Description: Support refers to the degree to which a project has buy-in from leadership, subject matter experts, and IT.

- Why it Matters: A project won’t get off the ground if it doesn’t have support. Often, a project with high strategic value will have strong support from leadership, but may garner resistance from SMEs and IT, who are wary of changes to their workflows and perceived threats to their jobs. The job of an AI Catalyst is to inform and involve stakeholders through the delivery process, not merely to deliver short-term results with algorithms and data.

- Scoring (1-5):

- 1 – A project with no direct support from leadership, SMEs, or IT.

- 5 – A project with enthusiastic support from leadership, and direct support from SMEs and IT.

Note: When scoring for support, consider the collective amount of support that a project has across the three stakeholder groups we’ve identified.

Leadership is the most important stakeholder group: sufficient leadership support will often lead to support from the other groups, and the check-cutting stakeholder’s opinion naturally carries the most weight. Exactly how much more important it is than the other two groups will vary by project and organization.
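One way to turn the three stakeholder groups into a single Support score is a weighted average. The sketch below is only an illustration: the function name `support_score` and the default leadership weight of 2.0 are our own assumptions, not part of the 4S model, and the weight should be adjusted to fit your project and organization.

```python
def support_score(leadership, smes, it, leadership_weight=2.0):
    """Combine per-group support ratings (each 1-5) into one Support score.

    leadership_weight is a hypothetical setting: it says how many times more
    the leadership rating counts than the SME or IT rating.
    """
    for label, value in (("leadership", leadership), ("smes", smes), ("it", it)):
        if not 1 <= value <= 5:
            raise ValueError(f"{label} rating must be between 1 and 5, got {value}")

    # SMEs and IT each carry a weight of 1; leadership carries leadership_weight.
    weighted_sum = leadership * leadership_weight + smes + it
    return weighted_sum / (leadership_weight + 2)


# Enthusiastic leadership, skeptical SMEs, lukewarm IT.
print(support_score(leadership=5, smes=2, it=3))  # 3.75
```

Because the result is a weighted average, it stays on the same 1-5 scale as the other three factors and can be rounded or used as-is when comparing projects.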

Simple

- Description: Simple refers to the degree to which a project is achievable given the data, infrastructure, and talent available to us.

- Why it Matters: A project that is remarkably complex is almost never the best first choice for an AI project. While we do advise clients to select projects that relate to and align with big, important mandates (Strategy), we also advise them not to eat the whole elephant, but to find near-term “wins” that are clearly in line with those larger strategic mandates. If we need 18 months to clean, harmonize, or collect the data, it isn’t simple. If a project requires talent and experience vastly outside of what we have in-house, it likely isn’t the best first step forward.

- Scoring (1-5):

- 1 – A project that would be extremely difficult to achieve given the experience and data available to us.

- 5 – A project that would be relatively simple to achieve given the experience and data available to us. We have the data assets necessary, and we have the subject matter expertise and in-house data science talent necessary to potentially succeed.

Sample

- Description: Sample refers to the degree to which a project has existing precedents of success for other companies (a) similar in size, and, preferably (b) in a similar or adjacent industry.

- Why it Matters: Here’s a rule of thumb: If the largest, most powerful, tech-savvy firms haven’t been able to use AI to achieve the outcome you desire, then it’s likely not the best first project for your firm. AI already involves a lot of R&D and iteration. By leaning into truly “blue ocean” novel AI use-cases, we risk vastly longer periods of iteration, and requirements for much more in-house expertise to have any chance of success. Firms new to AI should not try to build their first few “wins” with unrealistic, novel projects, but with relatively well-trodden projects that help to deliver valuable near-term results for the firm. If we cannot find sample projects completed by a company similar to ours, we’re setting ourselves up for more complexity than we’re probably ready to handle.

- Scoring (1-5):

- 1 – A project that has absolutely no precedent of use in any other firm. A completely novel application of AI in business.

- 5 – A project that has been executed (identically or near-identically) by one or more firms around our size, in our industry or an adjacent industry.

Note: Be wary of what evidence you use when collecting precedents of use. The claims of both vendors and enterprises are often exaggerated. Look for use-cases that multiple vendors claim to have success with, and look for vendor claims that reference known clients (not “a large American financial services firm” but “Citizens Bank”). For enterprise press releases, ignore announcements that merely state an intention, and look only for claims of actual roll-out within an organization, and the impact of the technology.

This rough 1-5 score isn’t intended to be precise, but rather to serve as a means of relative ranking. Use your sample score to compare potential initiatives side-by-side and rank them as best you can.

Putting This Framework in Action

First, collect all of the AI project ideas that you’ve developed by examining your workflows, data, and the existing precedents of AI use-cases in your industry. For helpful methodologies on how to collect AI project ideas, refer to the following resources:

- Article: Picking a First AI Project – A 3-Step Guide for Leaders

- Article: How to Discover the AI Initiatives of Fortune 500 Companies

- Interview: Assess Your Data to Find AI ROI Opportunity – With Adam Bonnifield of Airbus

- Interview: Best-Practices for Discovering Valuable AI Opportunities – with Adam Oliner of Slack

Second, put the assorted projects into the 4S “Bullseye” model, and score them to the best of your ability based on the 1-5 scoring criteria provided above. Note that these scores are at best a rule of thumb. But this rule of thumb, applied across all four criteria and side-by-side across various, often related applications, will lead to clarity on which clusters of projects do or do not deserve to be considered.

Third, assess the sorted AI projects and determine which application(s) deserve to make the final cut and be considered for piloting. Some scores (e.g. Simple) should be weighted more heavily in your decision-making, given the AI readiness of your organization and the mandates of your leadership. Regardless, showing your scoring process will help leadership to understand that your AI project recommendations are grounded in the right success criteria.
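For teams that prefer a script to a spreadsheet, the scoring and ranking steps can be sketched in a few lines of Python. Everything specific here is an assumption for illustration: the project names and scores are made up, the names `Project`, `weighted_score`, and `rank_projects` are our own, and the weights (Simple counted double) are just one possible choice for an organization with low AI readiness.

```python
from dataclasses import dataclass

FACTORS = ("strategy", "support", "simple", "sample")


@dataclass(frozen=True)
class Project:
    name: str
    strategy: int
    support: int
    simple: int
    sample: int

    def __post_init__(self):
        # Every 4S factor is scored on the 1-5 scale described above.
        for factor in FACTORS:
            score = getattr(self, factor)
            if not 1 <= score <= 5:
                raise ValueError(f"{factor} score must be 1-5, got {score}")


def weighted_score(project, weights):
    """Weighted average of the four factor scores (result stays on the 1-5 scale)."""
    total_weight = sum(weights[f] for f in FACTORS)
    return sum(getattr(project, f) * weights[f] for f in FACTORS) / total_weight


def rank_projects(projects, weights):
    """Return projects sorted from highest to lowest weighted score."""
    return sorted(projects, key=lambda p: weighted_score(p, weights), reverse=True)


# Hypothetical candidates and scores, for illustration only.
projects = [
    Project("Invoice data extraction", strategy=3, support=4, simple=5, sample=5),
    Project("Customer churn prediction", strategy=5, support=4, simple=3, sample=4),
    Project("Fully autonomous pricing", strategy=5, support=2, simple=1, sample=1),
]

# Assumed weights: Simple counts double, the other factors count once.
weights = {"strategy": 1.0, "support": 1.0, "simple": 2.0, "sample": 1.0}

ranked = rank_projects(projects, weights)
for p in ranked:
    print(f"{p.name}: {weighted_score(p, weights):.2f}")
```

The output is a relative ranking, not a verdict. As with the scores themselves, treat the numbers as a rule of thumb and a way to show leadership how the shortlist was reached.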

Use your own judgment to decide whether a further round of deeper ROI and cost estimates should be done on the final 2-4 initiatives you’re considering.