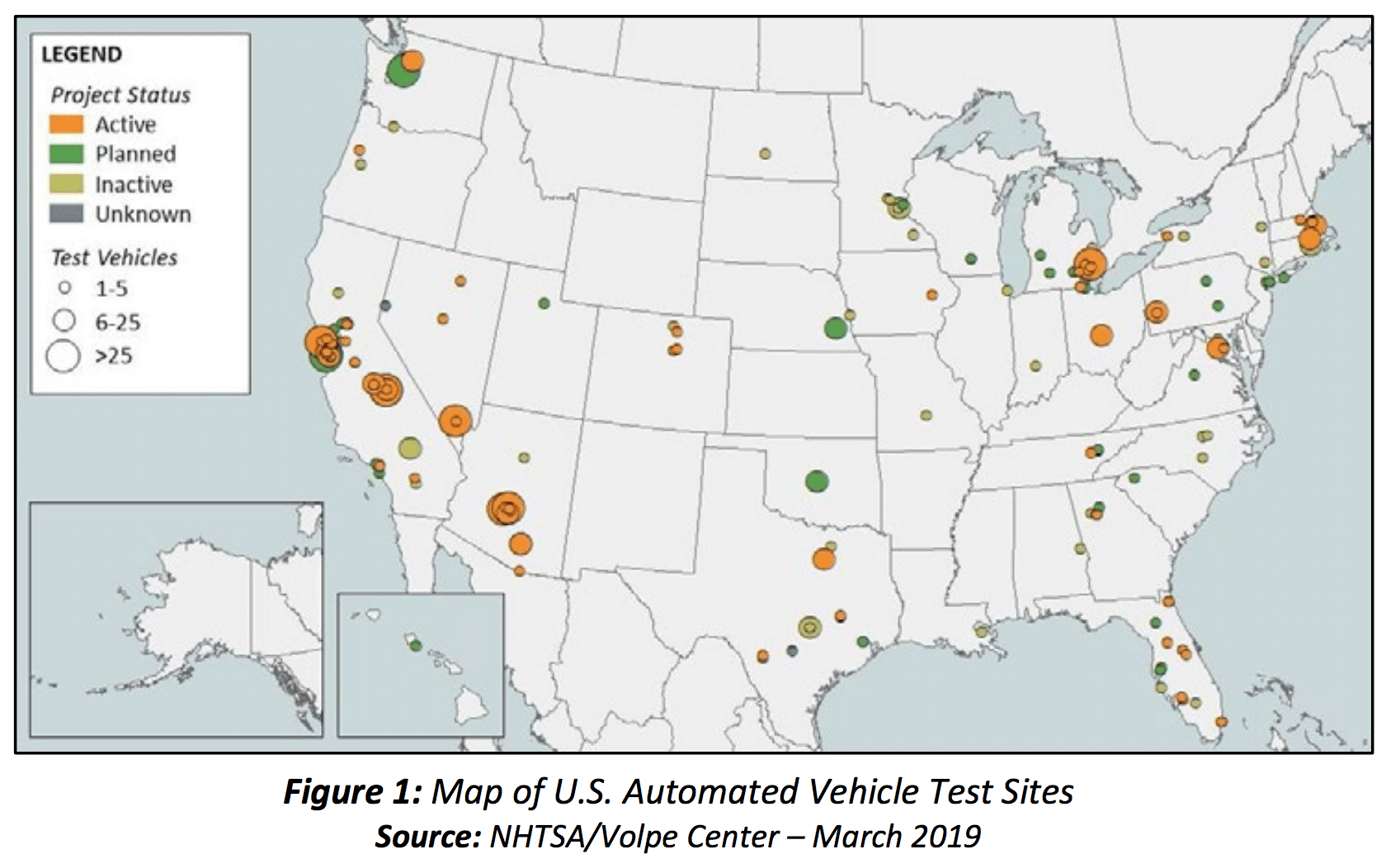

The National Highway Traffic Safety Administration (NHTSA) of the U.S. Department of Transportation recently released an overview report on the current state of self-driving technology.

According to the report, automated vehicle technologies are still in the research and development phase. The map below depicts the controlled test sites in the U.S. where self-driving car components and systems are tested using modeling, simulation, and on-road testing.

This article aims to provide fundamental, introductory information on how self-driving and autonomous cars currently operate in the real world, from hardware to software.

We’ve broken this article out into three sections:

- What constitutes a self-driving car (levels of autonomy)

- How self-driving cars interact with each other and their surroundings

- Current examples of how Google’s Waymo and Tesla’s Autopilot operate

Readers looking for more information on current self-driving car applications can refer to our previous in-depth articles, such as The Self-Driving Car Timeline – Predictions from the Top 11 Global Automakers and AI for Self-Driving Car Safety – Current Applications.

We’ll begin by examining levels of autonomy:

Levels of Autonomy in a Self-Driving Car

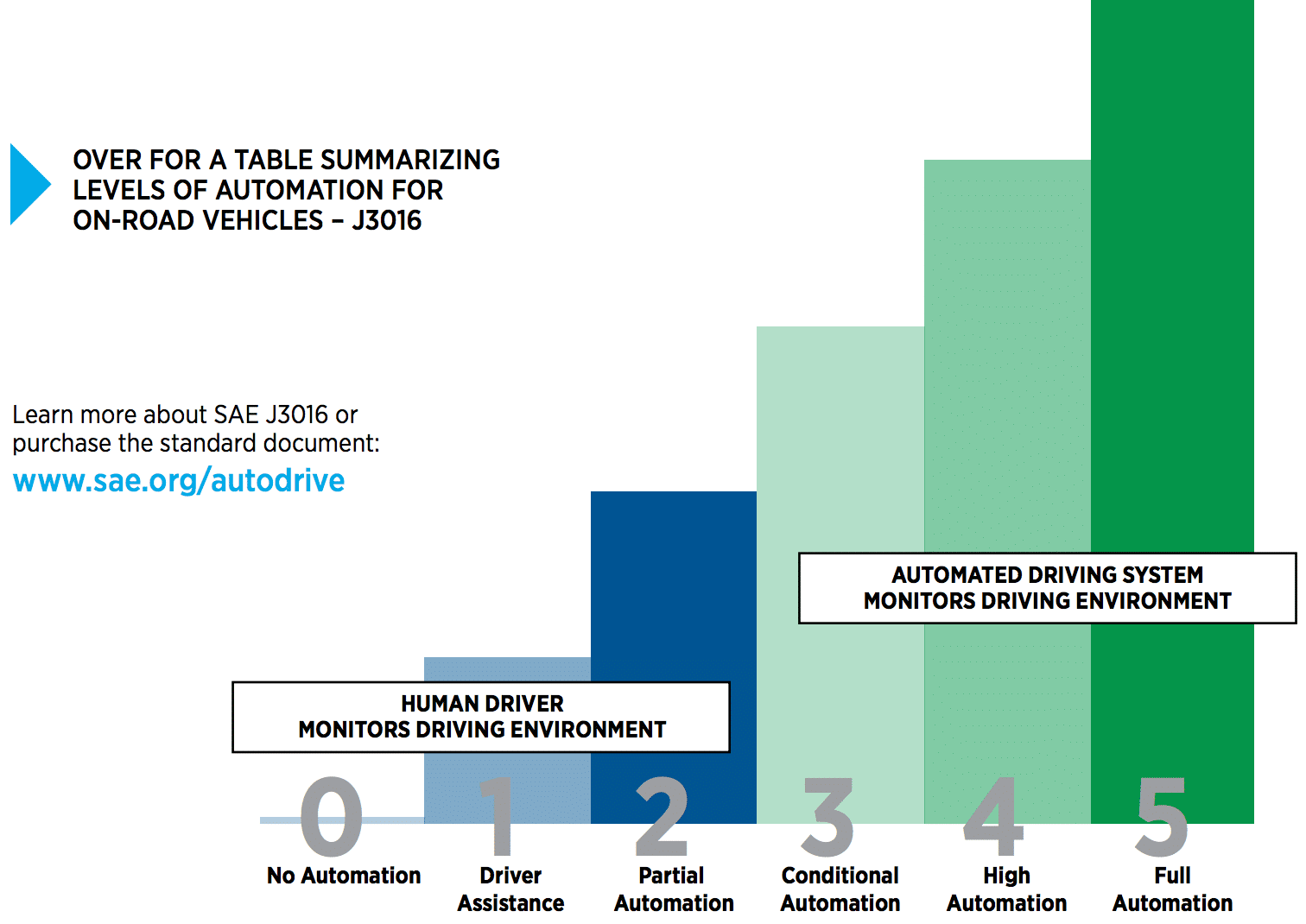

SAE International defines six levels of driving automation, from Level 0 (no automation) to Level 5. The five levels that involve automation are:

Level 1: This is low-level automation in which the system and the human driver share control. For instance, adaptive cruise control manages engine and brake power to maintain and vary speed while the driver controls the steering. Level 1 systems may require the driver to take full control at any time.

Level 2: At this level, the system controls acceleration, braking, and steering, but the driver must constantly monitor it. Many Level 2 vehicles require the driver to keep a hand on the steering wheel for the system to keep operating.

Level 3: Vehicles in this category allow the driver to perform other tasks (like texting or watching a movie) while the system controls most vehicle operations. However, for some operations the system requires driver intervention within a limited time, as specified by the vehicle manufacturer.

Level 4: This level supports self-driving with minimal driver intervention, but only in select, mapped locations called geofenced areas.

Level 5: No human intervention is required.

BMW’s self-driving car introductory video demonstrates what each of the five levels might be like for riders and drivers, with an overview of some of the technologies that enable autonomy:

While Level 5 autonomy is the shared dream of many self-driving car firms, their paths to it differ considerably. Some firms believe that Level 3 and Level 4 autonomy are too dangerous because the hand-off from machine to human could be unpredictable: expecting a driver to switch from texting or watching a movie to swerving away from an accident may be unrealistic.

How a Self-Driving Car Interacts with Its Surroundings

According to the United States Department of Transportation, connected and autonomous vehicles communicate with each other and their surroundings in three ways:

Vehicle to Vehicle (V2V) Interaction

The V2V interaction between autonomous cars allows information exchange on routes, congestion, obstacles, and hazards.

For example, if a self-driving car encounters an accident or high-volume but slow-moving traffic, it is capable of relaying the information to other self-driving cars, which can then adjust their routes according to the received data and potentially avoid accidents and traffic.
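The rerouting idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a real V2V protocol: the `HazardMessage` format, the toy road graph, and the choice of Dijkstra's shortest-path algorithm for rerouting are all hypothetical choices made for the example.

```python
import heapq
from dataclasses import dataclass

@dataclass(frozen=True)
class HazardMessage:
    """Hypothetical V2V broadcast: a road segment another car reports as blocked."""
    sender_id: str
    from_node: str
    to_node: str
    kind: str  # e.g. "accident" or "congestion"

def shortest_route(graph, start, goal, blocked=frozenset()):
    """Dijkstra's algorithm over a dict-of-dicts road graph, skipping blocked edges."""
    queue = [(0.0, start, [start])]
    visited = set()
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if node in visited:
            continue
        visited.add(node)
        for neighbor, minutes in graph.get(node, {}).items():
            if (node, neighbor) not in blocked and neighbor not in visited:
                heapq.heappush(queue, (cost + minutes, neighbor, path + [neighbor]))
    return float("inf"), []  # goal unreachable

# Travel times in minutes on a toy road network (hypothetical data).
roads = {
    "A": {"B": 5, "C": 9},
    "B": {"D": 4},
    "C": {"D": 3},
    "D": {},
}

print(shortest_route(roads, "A", "D"))  # (9.0, ['A', 'B', 'D'])

# Another car reports an accident on segment B -> D; the receiving car reroutes.
msg = HazardMessage("car-42", "B", "D", "accident")
print(shortest_route(roads, "A", "D", {(msg.from_node, msg.to_node)}))  # (12.0, ['A', 'C', 'D'])
```

The receiving car never needs to see the accident itself; it only removes the reported segment from its own map before planning.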

Vehicle to Infrastructure (V2I) Interaction

Self-driving cars can communicate with infrastructure components such as intelligent parking systems to plan routes and reserve parking spaces well ahead of the journey.

This information is especially useful when the autonomous car has to decide how to park once it reaches its destination: parallel, perpendicular, or angular. In addition, other driverless cars would “know” in advance whether a particular parking space is already reserved or still open.
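A shared reservation service of the kind described above might look roughly like the Python sketch below. The `ParkingRegistry` class, its methods, and the space IDs are hypothetical; the sketch only shows the two ideas in the text: a reservation carries the space's layout, and a reserved space is no longer offered to other cars.

```python
from dataclasses import dataclass, field
from typing import Optional

@dataclass
class ParkingSpace:
    space_id: str
    layout: str  # "parallel", "perpendicular", or "angular"
    reserved_by: Optional[str] = None

@dataclass
class ParkingRegistry:
    """Hypothetical V2I parking service shared by all connected cars."""
    spaces: dict = field(default_factory=dict)

    def add(self, space: ParkingSpace) -> None:
        self.spaces[space.space_id] = space

    def open_spaces(self):
        """Spaces no car has reserved yet."""
        return [s for s in self.spaces.values() if s.reserved_by is None]

    def reserve(self, space_id: str, vehicle_id: str) -> Optional[ParkingSpace]:
        """Reserve a space if it exists and is open; return it (with its layout) or None."""
        space = self.spaces.get(space_id)
        if space is None or space.reserved_by is not None:
            return None
        space.reserved_by = vehicle_id
        return space

registry = ParkingRegistry()
registry.add(ParkingSpace("P1", "parallel"))
registry.add(ParkingSpace("P2", "angular"))

spot = registry.reserve("P1", "car-7")
print(spot.layout)                                # parallel
print(registry.reserve("P1", "car-9"))            # None: already taken
print([s.space_id for s in registry.open_spaces()])  # ['P2']
```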

Vehicle to Pedestrian (V2P) Interaction

The V2P interaction is primarily carried out between a self-driving car and a pedestrian’s smartphone application.

The University of Minnesota reports that it funded a V2P prototype called Mobile Accessible Pedestrian Signal (MAPS). A visually impaired pedestrian can use MAPS to receive information about the intersection and to provide information about their own location. Self-driving cars would then use such data, in addition to data from their own sensors and LiDARs, to locate pedestrians more accurately and potentially avoid collisions.
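One simple way to combine a pedestrian's phone-reported position with the car's own sensor estimate is an inverse-variance weighted average, which trusts the more precise source more. This is a swapped-in textbook technique, not something MAPS or any carmaker is documented as using; the coordinate frame and variance figures below are assumptions.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class PositionEstimate:
    x: float         # metres east of a reference point (hypothetical frame)
    y: float         # metres north of the reference point
    variance: float  # assumed isotropic variance in m^2; smaller = more trusted

def fuse(estimates):
    """Inverse-variance weighted average of independent position estimates."""
    weights = [1.0 / e.variance for e in estimates]
    total = sum(weights)
    x = sum(w * e.x for w, e in zip(weights, estimates)) / total
    y = sum(w * e.y for w, e in zip(weights, estimates)) / total
    # The fused variance is smaller than any single input's variance.
    return PositionEstimate(x, y, 1.0 / total)

phone = PositionEstimate(10.0, 4.0, variance=4.0)   # assumed GPS-grade, a few metres
lidar = PositionEstimate(11.0, 5.0, variance=0.25)  # assumed LiDAR track, decimetre-grade
print(fuse([phone, lidar]))
```

The fused point lands close to the LiDAR estimate, but the phone report still helps, for example when the pedestrian is partly hidden from the car's sensors.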

Examples and Use-Cases

Bosch explains some simple use-cases of V2V and V2I technology in the following two-minute video:

China’s now-embattled hardware manufacturer Huawei demonstrates a variety of potential “V2” use-cases in this 2018 demo video:

While the technology is still in its infancy, “V2” technologies are helping to pave the path to more complete autonomy – and hopefully – to vastly safer roads for drivers and pedestrians. It is unclear whether different countries will develop entirely different V2 use-cases and standards, and it’s too early to tell which V2 applications will become standard in the coming years, and which will be abandoned.

Current Examples of Self-Driving Cars and Components at Work

Google and Tesla are the biggest players in the current autonomous vehicle space. To better illustrate how self-driving cars work in practice, this section details the workings and operations of Google’s Waymo and Tesla’s Autopilot.

Google’s Waymo

According to Google, Waymo is a Level 4 autonomous system, requiring minimal human intervention.

Waymo’s Hardware Infrastructure

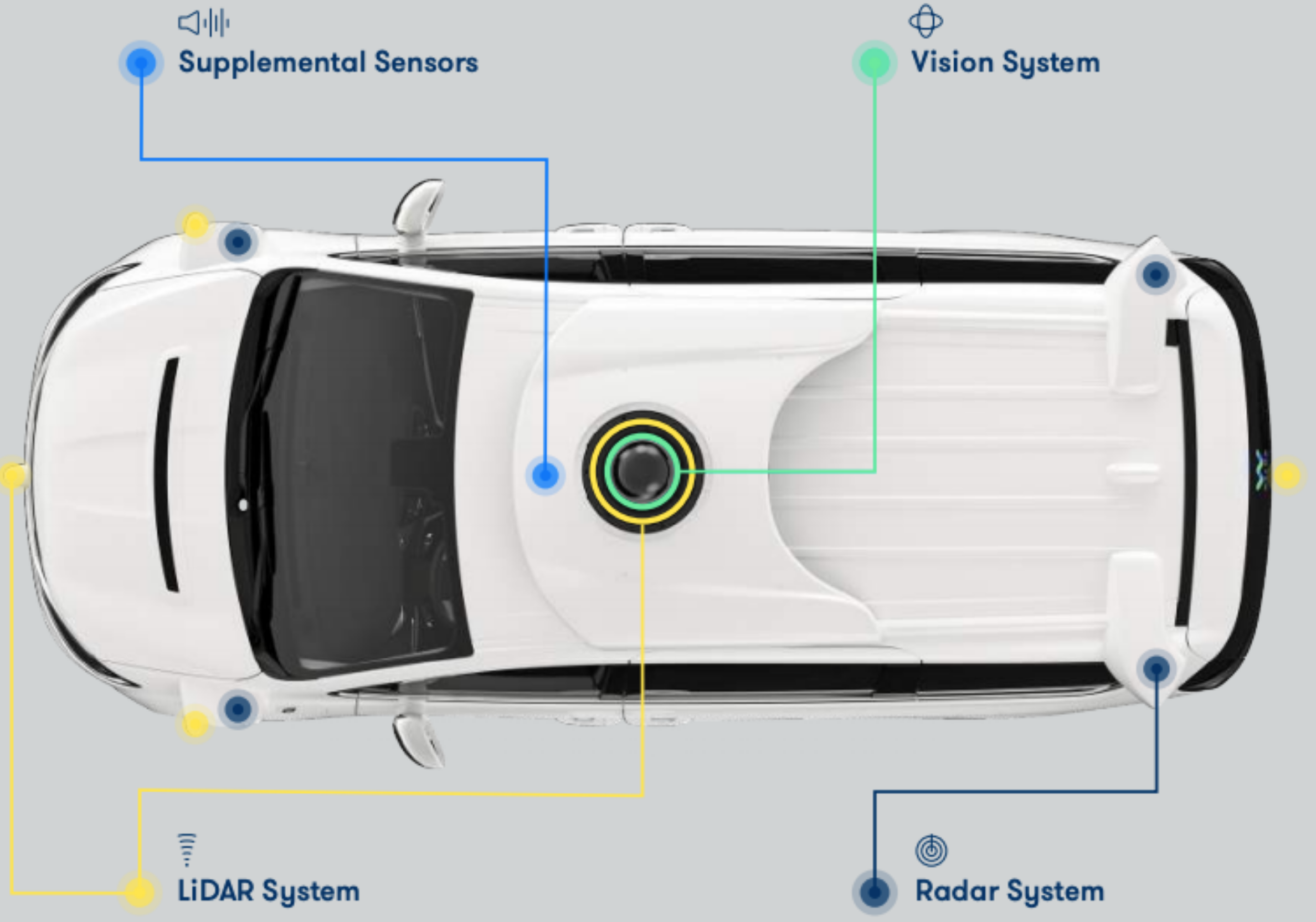

A descriptive image of Waymo’s hardware is provided below:

Waymo’s hardware includes a variety of LiDAR, radar, camera, and supplemental sensor systems.

LiDAR Sensors

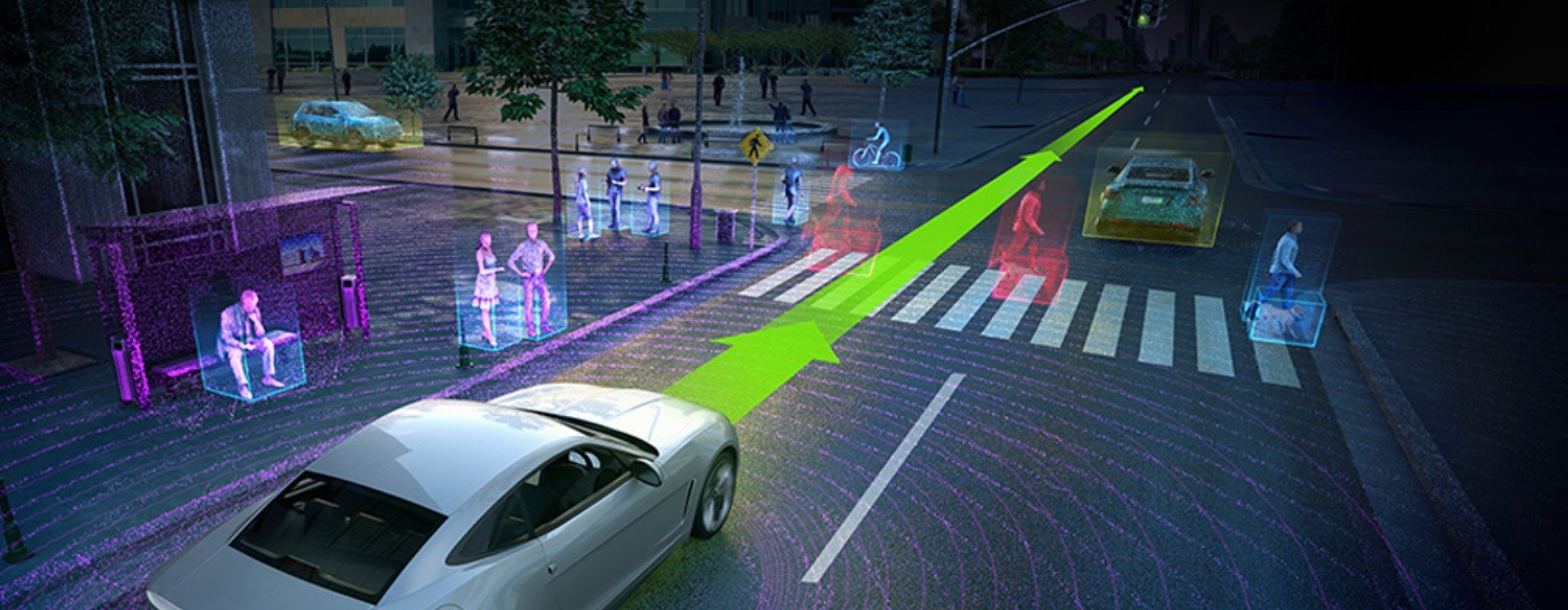

According to Google, Waymo has a multi-layered sensor suite capable of operating in different lighting conditions. This sensor suite is essentially an omnidirectional LiDAR system comprising a short-range, a high-resolution mid-range and a long-range LiDAR. These LiDARs project millions of laser pulses per second and calculate the time taken for the beams to reflect off a surface or a person and return to the self-driving car.

Based on the data received from the LiDAR beams, Waymo reportedly creates a 3D map of the surroundings, identifying mobile and immobile objects, including other vehicles, cyclists, pedestrians, traffic lights, and a variety of road features.
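The time-of-flight principle above reduces to simple arithmetic: a pulse travels to the surface and back, so the range is the speed of light times the round-trip time, divided by two. The Python sketch below shows that calculation and how one return, combined with the beam's angles, becomes a 3D point of the kind that makes up a map of the surroundings. The function names and the sensor-frame convention are illustrative assumptions, not Waymo's software.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # metres per second

def tof_range(round_trip_seconds: float) -> float:
    """Distance to the reflecting surface; the pulse travels out and back, hence / 2."""
    return SPEED_OF_LIGHT * round_trip_seconds / 2.0

def to_point(range_m: float, azimuth_deg: float, elevation_deg: float):
    """Convert one return (range plus beam angles) into (x, y, z) in an assumed sensor frame."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    horizontal = range_m * math.cos(el)  # projection onto the ground plane
    return (horizontal * math.cos(az), horizontal * math.sin(az), range_m * math.sin(el))

# A return arriving 200 nanoseconds after the pulse left is roughly 30 m away.
r = tof_range(200e-9)
print(round(r, 2))  # 29.98
print(tuple(round(c, 2) for c in to_point(r, azimuth_deg=90.0, elevation_deg=0.0)))  # (0.0, 29.98, 0.0)
```

Repeating this for millions of pulses per second yields the point cloud from which objects are then identified.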

Vision

Waymo’s vision system is yet another omnidirectional, high-resolution camera suite purportedly capable of identifying color in low-light conditions. This helps in detecting different traffic lights, other vehicles, construction zones and emergency lights.

Radar

Google claims that Waymo uses a radar system to perceive objects and motion through wavelengths “travelling around” different light and weather conditions, such as rain, snow and fog. This radar system is also omnidirectional and can track the speed of pedestrians and other vehicles 360 degrees around the self-driving car.
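Radar typically measures a target's speed along the line of sight from the Doppler shift of the reflected wave: for a two-way reflection, the radial speed is the frequency shift times the speed of light divided by twice the carrier frequency. The Python sketch below assumes a 77 GHz carrier, a common automotive radar band; the text does not state Waymo's actual radar parameters.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second
CARRIER_HZ = 77e9  # assumption: 77 GHz is a common automotive radar band

def radial_speed(doppler_shift_hz: float, carrier_hz: float = CARRIER_HZ) -> float:
    """Radial speed (m/s) of a target from its two-way Doppler shift.
    Positive means the target is approaching the radar."""
    return doppler_shift_hz * SPEED_OF_LIGHT / (2.0 * carrier_hz)

# A 770 Hz shift on a 77 GHz echo corresponds to walking pace.
print(round(radial_speed(770.0), 2))  # 1.5
```

Because the measurement relies on radio waves rather than visible light, it keeps working in the rain, snow, and fog mentioned above.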

Supplemental Sensors

Waymo’s sensor suite is also supplemented with an audio detection system to detect emergency sirens and a GPS to track the vehicle’s physical location.

Waymo’s Self-Driving Software

Google claims that Waymo’s self-driving software has been trained and tested based on “5 billion miles of simulated driving and 5 million miles of on-road driving experience.” It is powered by machine-learning algorithms.

The below video elaborates on how Waymo operates on the road:

According to Google, Waymo’s Level 4 technology detects and “understands” objects and their behavior, and adjusts the autonomous vehicle’s behavior accordingly, in a three-step process.

Perception

Waymo can reportedly detect, identify and classify objects on the road, including pedestrians and other vehicles, while simultaneously measuring their speed, direction and acceleration over time.

For example, Waymo’s perception software collects the data from the sensors and radars and creates a simulated “view” of the surroundings. Owing to this capability, Waymo is able to determine whether it can proceed through the traffic when the light turns green or adjust its route due to a blocked lane indicated by traffic cones.
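The speed, direction, and acceleration measurements described under Perception can be approximated from a tracked object's recent positions using finite differences. The Python sketch below is a simplified stand-in; production trackers usually smooth noisy detections (for example with Kalman filters), and the function name and sample track are hypothetical.

```python
import math

def motion_from_track(positions, dt):
    """Speed (m/s), heading (degrees, 0 = +x axis) and change in speed (m/s^2) of a
    tracked object, from its last three (x, y) positions sampled every dt seconds."""
    (x0, y0), (x1, y1), (x2, y2) = positions[-3:]
    prev_speed = math.hypot(x1 - x0, y1 - y0) / dt
    vx, vy = (x2 - x1) / dt, (y2 - y1) / dt
    speed = math.hypot(vx, vy)
    heading = math.degrees(math.atan2(vy, vx))
    accel = (speed - prev_speed) / dt
    return speed, heading, accel

# Hypothetical cyclist track at 10 Hz, moving along +y and speeding up slightly.
track = [(0.0, 0.0), (0.0, 0.5), (0.0, 1.01)]
print(tuple(round(v, 2) for v in motion_from_track(track, dt=0.1)))  # (5.1, 90.0, 1.0)
```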

Behavior prediction

According to Google, Waymo can predict the behavior of an object on the road based on its classification, drawing on models trained on “millions of miles of driving experience.”

For example, the self-driving software “understands” that while pedestrians may look similar to cyclists, they move slower than the latter and exhibit more abrupt directional changes.
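To make the pedestrian-versus-cyclist example concrete, the Python sketch below attaches a simple motion prior to each class and fans out the positions an object could plausibly reach. The prior values and the "constant speed, bounded turn" model are illustrative assumptions, far simpler than the learned models described above.

```python
import math

# Hypothetical per-class motion priors; illustrative values only.
PRIORS = {
    "pedestrian": {"max_speed": 2.5, "max_turn_deg": 90.0},  # slow, can turn sharply
    "cyclist": {"max_speed": 10.0, "max_turn_deg": 20.0},    # faster, smoother turns
}

def predict_positions(obj_class, x, y, speed, heading_deg, horizon_s, n_samples=5):
    """Fan out n_samples plausible positions after horizon_s seconds: the object keeps
    its speed (capped by the class prior) but may turn up to max_turn_deg either way."""
    if n_samples < 2:
        raise ValueError("n_samples must be at least 2")
    prior = PRIORS[obj_class]
    distance = min(speed, prior["max_speed"]) * horizon_s
    spread = prior["max_turn_deg"]
    positions = []
    for i in range(n_samples):
        # Evenly spaced headings from (heading - spread) to (heading + spread).
        h = math.radians(heading_deg - spread + 2 * spread * i / (n_samples - 1))
        positions.append((x + distance * math.cos(h), y + distance * math.sin(h)))
    return positions

def lateral_extent(points):
    return max(p[1] for p in points) - min(p[1] for p in points)

# Same speed and heading, different class: the pedestrian's predicted area is wider.
ped = predict_positions("pedestrian", 0.0, 0.0, speed=1.5, heading_deg=0.0, horizon_s=2.0)
cyc = predict_positions("cyclist", 0.0, 0.0, speed=1.5, heading_deg=0.0, horizon_s=2.0)
print(round(lateral_extent(ped), 2), round(lateral_extent(cyc), 2))  # 6.0 2.05
```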

Planner

The planner software reportedly uses the information captured by the perception and behavior prediction software to plan appropriate routes for Waymo. Google claims that Waymo’s planner operates like a “defensive driver,” who chooses to stay away from blind spots and gives leeway to cyclists and pedestrians.
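A "defensive" preference like giving leeway to cyclists can be expressed as a cost function that the planner minimizes. The Python sketch below picks a lateral position in the lane by trading off staying near the lane center against keeping a minimum clearance from a cyclist. The cost terms, the 1.5 m buffer, and the penalty weight are assumptions for illustration; Google has not published Waymo's planner in this form.

```python
def plan_lateral_offset(candidates, cyclist_y, min_clearance=1.5, weight=10.0):
    """Choose a lateral offset (metres, left positive, 0 = lane centre) that stays near
    the centre but heavily penalizes coming within min_clearance of a cyclist at cyclist_y."""
    def cost(offset):
        clearance = abs(offset - cyclist_y)               # lateral gap to the cyclist
        shortfall = max(0.0, min_clearance - clearance)   # how far inside the buffer
        return abs(offset) + weight * shortfall           # prefer centre, punish crowding
    return min(candidates, key=cost)

offsets = [-0.5, 0.0, 0.5, 1.0]
print(plan_lateral_offset(offsets, cyclist_y=-1.0))  # 0.5: shift away from a nearby cyclist
print(plan_lateral_offset(offsets, cyclist_y=-5.0))  # 0.0: cyclist far away, stay centred
```

Raising `weight` or `min_clearance` makes the sketch behave more cautiously, which is one simple way to tune how "defensive" a planner is.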

Tesla’s Autopilot

Autopilot, according to Tesla, is a Level 2 autonomous vehicle feature. Like most Level 2 systems, Autopilot requires that the driver hold the steering wheel at all times, poised to take over control.

Tesla also warns that the driver must remain fully alert and attentive while Autopilot is engaged.

Autopilot Hardware

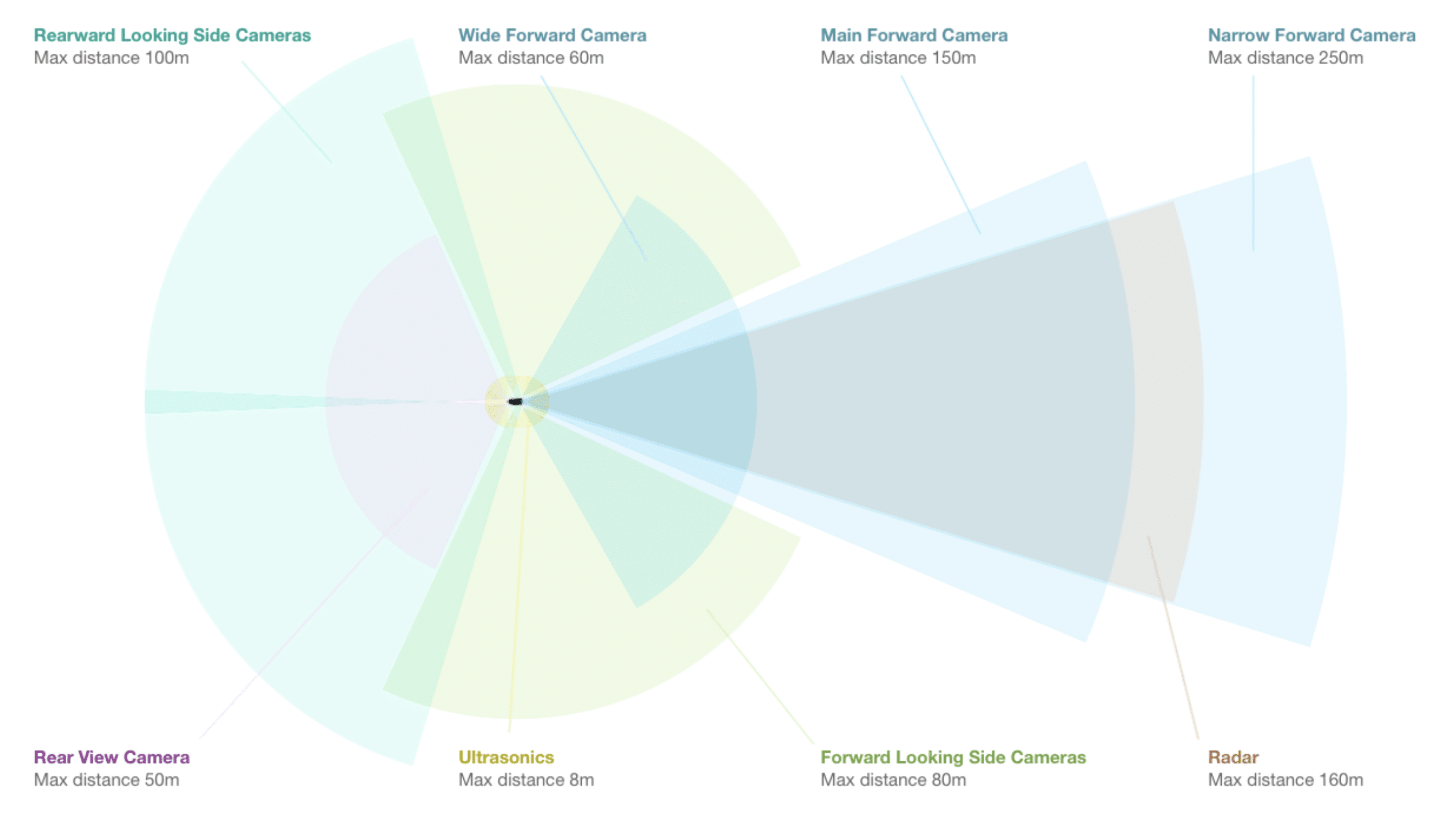

The picture below shows the hardware components of the Autopilot.

According to Tesla, its self-driving cars manufactured between 2014 and October 2016 included limited ultrasonic sensors, low-power radar and only one camera.

Those built since October 2016 include 12 ultrasonic sensors for identifying nearby objects and pedestrians, a forward-facing radar capable of “sensing through” different weather conditions, eight external cameras that feed Tesla’s in-house neural net, and a computer system that processes inputs in milliseconds.

The Tesla connected car software is continually updated “over the air.”

Autopilot Software

- Traffic-Aware Cruise Control for maintaining speed in response to the surrounding traffic.

- Driver-assisted “Autosteer” within the boundaries of a well-marked lane

- Auto Lane Change for transitioning between lanes

- Driver-assisted “Navigate on Autopilot” for guiding the vehicle from a highway’s on-ramp to off-ramp, including suggesting and making lane changes, navigating highway interchanges, and taking exits.

- Autopark for automatic parallel or perpendicular parking

- Summon for “calling” the car from its parking space

The workings of the above-mentioned features are briefly explained below:

Navigate on Autopilot

The Navigate on Autopilot feature allows the driver to input a destination into the vehicle’s navigation system, which starts a “360-degree visualization” showing the planned route. According to Tesla, this feature must be enabled for each trip for safety reasons and is not active by default.

Auto Lane Change

The Navigate on Autopilot feature includes two types of lane changes: route-based and speed-based. Route-based changes keep the vehicle on the navigation route regardless of speed. Speed-based changes, depending on a few driver settings, suggest moving into lanes where traffic is moving faster or slower, relative to the set cruise speed.

Lane changes become automatic when the driver opts out of the lane-change confirmation notification. However, Tesla warns drivers that this feature is not fully autonomous and that it requires their complete attention and hands on the steering wheel. Tesla states that the driver can manually override this feature at any time.
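The decision logic described above (route-based changes take priority, speed-based changes depend on the set cruise speed, and execution depends on the confirmation setting) can be sketched in Python. Every name, the lane-numbering convention, and the 5 km/h minimum improvement are hypothetical; Tesla does not publish its thresholds.

```python
from dataclasses import dataclass
from typing import Optional

MIN_IMPROVEMENT_KMH = 5.0  # hypothetical hysteresis so the car does not weave between lanes

@dataclass
class LaneOption:
    lane: int             # lane index (hypothetical convention: 0 = rightmost)
    traffic_speed: float  # average speed of traffic in that lane, km/h
    on_route: bool        # whether this lane leads toward the next navigation step

def suggest_lane_change(current, neighbors, cruise_speed, route_requires_change) -> Optional[int]:
    """Return the lane to move into, or None to stay put."""
    if route_requires_change:
        # Route-based: follow the navigation route regardless of speed.
        for option in neighbors:
            if option.on_route:
                return option.lane
    # Speed-based: prefer the neighbor whose traffic is closest to the cruise speed.
    gap_now = abs(cruise_speed - current.traffic_speed)
    best = min(neighbors, key=lambda o: abs(cruise_speed - o.traffic_speed), default=None)
    if best is not None and abs(cruise_speed - best.traffic_speed) + MIN_IMPROVEMENT_KMH < gap_now:
        return best.lane
    return None

def execute(suggested_lane, require_confirmation, driver_confirmed) -> Optional[int]:
    """Change lanes automatically only if the driver opted out of confirmations."""
    if suggested_lane is None or (require_confirmation and not driver_confirmed):
        return None
    return suggested_lane

current = LaneOption(1, 90.0, on_route=True)
neighbors = [LaneOption(0, 85.0, on_route=True), LaneOption(2, 108.0, on_route=False)]
suggestion = suggest_lane_change(current, neighbors, cruise_speed=110.0, route_requires_change=False)
print(suggestion)                            # 2: faster lane, closer to the cruise speed
print(execute(suggestion, True, False))      # None: waiting for driver confirmation
print(execute(suggestion, False, False))     # 2: confirmations turned off
```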

Autopark and Summon

The driver can initiate Autopark while the car is driving at low speed and searching for a suitable parking spot. Before the car independently controls speed, gear changes, and steering angle, however, the driver must intervene manually by putting the car in reverse and pressing Start.

Autopilot also includes Summon, triggered through an app, which lets the user “call” the car and direct it forward or in reverse through a series of button presses.
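The Autopark sequence, with its required manual step, maps naturally onto a small state machine. The Python sketch below is a simplified model under stated assumptions: the state names, the event flags, and the 25 km/h search-speed limit are hypothetical, not Tesla's implementation.

```python
from enum import Enum, auto

class ParkState(Enum):
    SEARCHING = auto()    # driving slowly while scanning for a suitable spot
    SPOT_FOUND = auto()   # spot detected; waiting for the driver's manual step
    MANEUVERING = auto()  # car controls speed, gear changes, and steering angle
    PARKED = auto()
    CANCELLED = auto()    # driver took over

MAX_SEARCH_SPEED_KMH = 25.0  # hypothetical threshold, not a Tesla figure

def step(state, *, speed_kmh=0.0, spot_detected=False, in_reverse=False,
         start_pressed=False, maneuver_done=False, driver_override=False):
    """Advance the simplified Autopark state machine by one sensor or driver event."""
    if driver_override and state is not ParkState.PARKED:
        return ParkState.CANCELLED
    if state is ParkState.SEARCHING and spot_detected and speed_kmh <= MAX_SEARCH_SPEED_KMH:
        return ParkState.SPOT_FOUND
    if state is ParkState.SPOT_FOUND and in_reverse and start_pressed:
        return ParkState.MANEUVERING
    if state is ParkState.MANEUVERING and maneuver_done:
        return ParkState.PARKED
    return state  # no valid transition: stay in the current state

s = ParkState.SEARCHING
s = step(s, speed_kmh=12.0, spot_detected=True)   # SPOT_FOUND
s = step(s, start_pressed=True)                   # still SPOT_FOUND: not in reverse yet
s = step(s, in_reverse=True, start_pressed=True)  # MANEUVERING
s = step(s, maneuver_done=True)                   # PARKED
print(s)  # ParkState.PARKED
```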

The video below shows a Tesla Autopilot live demonstration on the road in real time:

Barriers to Autonomy

While self-driving vehicle investments have skyrocketed in the last five years – there are still a number of important challenges keeping level 5 autonomy from becoming a reality:

- Road Rules in the Developing World – California highways are a different driving environment than the traffic of Cairo or Bangalore. The developing world may be left behind in terms of autonomous vehicle adoption (and thus, in terms of safety, lower emissions, and increased worker productivity) unless autonomous systems are developed to handle their unique circumstances and roadway norms. This may involve significant changes to driving habits and norms in these countries, or “test areas” where different roadway rules apply and where self-driving technologies can be tested.

- Unified Standards – In order for vehicles to communicate with each other or with infrastructure, new communication channels will have to be developed. These channels should allow vehicles of different makes and models to communicate, and they should be as safe as possible from hacking and deception. While the US and other nations are working on furthering these standards, there is much work to be done to ensure safety and to create a unified intelligent layer between vehicles and infrastructure.

- Safety Thresholds – The number of deaths per passenger-mile on commercial airlines in the United States between 2000 and 2010 was about 0.2 deaths per 10 billion passenger-miles (Wikipedia). It seems safe to say that standards for self-driving vehicles will be more strict, but it isn’t clear where the cut-off will lie. Governments of different nations will have to determine acceptable mortality rates, and safety standards and guidelines for different kinds of autonomous vehicles.

- Weather and Disasters – Blizzards, floods, or damage to street signs and “V2” technologies could put self-driving cars at risk of serious error and mortal danger. Building road infrastructure to handle disasters, and building vehicles to handle abnormal or less-than-ideal conditions (visibility, tire traction, etc.), is much more challenging than simply putting a car on the road on a sunny day in Mountain View, CA.
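The airline benchmark in the Safety Thresholds point shows how hard such thresholds are to verify. The Python sketch below converts the quoted 0.2 deaths per 10 billion passenger-miles into a per-mile rate. It then applies a standard zero-failure calculation, which assumes fatalities follow a Poisson process, to estimate how many fatality-free miles a fleet would need to drive before it could claim, at 95% confidence, to be at least that safe. The Poisson assumption and the one-trillion-mile fleet figure are illustrative choices, not figures from any regulator.

```python
import math

AIRLINE_RATE = 0.2 / 10e9  # deaths per passenger-mile (US commercial airlines, 2000 to 2010, as quoted)

def expected_deaths(rate_per_mile, miles):
    """Expected fatalities over a given distance at a constant per-mile rate."""
    return rate_per_mile * miles

def miles_to_demonstrate(rate_per_mile, confidence=0.95):
    """Fatality-free miles needed so that, under a Poisson model, zero observed deaths
    rules out any true rate above rate_per_mile: exp(-rate * miles) = 1 - confidence."""
    return -math.log(1.0 - confidence) / rate_per_mile

# A hypothetical fleet covering one trillion passenger-miles at the airline rate.
print(f"{expected_deaths(AIRLINE_RATE, 1e12):.1f}")      # 20.0
print(f"{miles_to_demonstrate(AIRLINE_RATE):.3e}")      # 1.498e+11
```

Roughly 150 billion fatality-free miles would be needed to match the airline benchmark statistically, which helps explain why governments may need to set thresholds before such evidence can exist.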

Header image credit: NVIDIA