Twitter is an American communications company based in San Francisco, California, best known for the microblogging and social networking site of the same name. As of January 2022, the company had over 229 million daily active users.

In their annual report [pdf], Twitter cites a total revenue of $5.08 billion in 2021, with revenue from advertising of $4.51 billion. The company employed 7,500 full-time staff as of December 31, 2021. Twitter is traded on the NYSE (symbol: TWTR) and has a market cap of $31.94 billion as of this article’s writing.

The company is relatively quiet about its AI endeavors. The exception is Twitter’s announcements regarding its use of machine learning algorithms in determining what content to publish — and not publish — on its platform.

A recent LinkedIn search for data scientists at Twitter yielded 751 results.

In this brief primer, we will examine how AI initiatives currently support Twitter’s business goals through the following two use cases:

- Automatic image cropping: Twitter uses machine learning and neural networks that streamline the image auto-cropping process for users and enhance engagement on the platform.

- Increase platform accessibility and inclusion: Twitter uses speech recognition in its Twitter Spaces platform to deliver a speech-to-text presentation to deaf or hard-of-hearing users and expand its user base.

We’ll begin by examining how Twitter uses AI to crop images automatically.

Use Case #1: Image Cropping

According to Twitter’s engineering blog, users upload millions of images to the platform daily. The varying dimensions of these images make it challenging to deliver a consistent user interface (UI) experience.

To understand the business problem at hand and its impact, we must grasp how Twitter makes money and the effect of UI on revenue.

First, the bulk of Twitter’s revenue comes from advertising: According to Twitter’s 2021 annual report [pdf], over $4.5 billion of the company’s $5.1 billion revenue is from “advertising services.” Per Google’s own “Advertising Revenue Playbook,” user experience “maximizes” advertising revenue. In short, social media companies like Twitter need to deliver on user experience to earn money and scale their business.

The blog also claims Twitter’s machine learning engineers encountered shortcomings with its previous face detector. For example, the prior solution would miss faces or mistakenly detect faces when none were present.

Twitter claims to have overcome these obstacles with a deep learning approach based on neural networks. To build and speed up its model, Twitter asserts it used a combination of techniques on user images:

- Saliency prediction: A model that predicts eye fixations in an image.

- Knowledge distillation: Model “compression” whereby a bigger, already-trained model “teaches” a smaller model what to do step-by-step.

- Pruning: The removal of neural network components that are costly to compute but contribute little to model performance.

Machine learning engineers claim to have used saliency prediction to detect objects of interest, such as faces, text, pets, environment, etc.

However, the neural networks used were too slow, given the enormous number of image uploads to the platform. The team used knowledge distillation and pruning to reduce computational requirements and model size.
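To make knowledge distillation more concrete, here is a minimal sketch in plain Python. It is an illustration under stated assumptions, not Twitter's implementation: `teacher_predict` is a hypothetical stand-in for a large trained network, and the "student" is a deliberately tiny linear model trained only on the teacher's outputs.

```python
import random


def teacher_predict(features):
    """Hypothetical stand-in for a large, already-trained model."""
    x0, x1 = features
    return 0.8 * x0 + 0.2 * x1 + 0.05 * x0 * x1


def student_predict(weights, features):
    """A much simpler model: a weighted sum of the input features."""
    return sum(w * x for w, x in zip(weights, features))


def mean_squared_error(weights, inputs, targets):
    errors = [(student_predict(weights, x) - t) ** 2 for x, t in zip(inputs, targets)]
    return sum(errors) / len(errors)


def train_student(inputs, targets, steps=200, learning_rate=0.5):
    """Fit the student to the teacher's predictions with gradient descent."""
    weights = [0.0] * len(inputs[0])
    n = len(inputs)
    for _ in range(steps):
        gradients = [0.0] * len(weights)
        for x, t in zip(inputs, targets):
            error = student_predict(weights, x) - t
            for i, xi in enumerate(x):
                gradients[i] += 2 * error * xi / n
        weights = [w - learning_rate * g for w, g in zip(weights, gradients)]
    return weights


# The teacher labels a set of unlabeled inputs; no human labels are needed.
rng = random.Random(0)
unlabeled_inputs = [[rng.random(), rng.random()] for _ in range(50)]
teacher_targets = [teacher_predict(x) for x in unlabeled_inputs]

student_weights = train_student(unlabeled_inputs, teacher_targets)
```

The student ends up approximating the teacher at a fraction of the compute cost per prediction, which is the trade-off distillation aims for.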

According to Twitter’s marketing documentation, knowledge distillation uses the larger, already-trained network to generate predictions on a set of images. These predictions and third-party saliency data are then used to train a smaller and, thus, faster model. Pruning removed components of the model that were costly and failed to improve model performance relative to cost.
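The pruning idea can be sketched as a greedy loop that drops whichever component offers the least benefit per unit of cost until the model fits a compute budget. This is a simplified illustration, not Twitter's procedure: the component names, costs, contribution scores, and the `max_cost` budget are all hypothetical.

```python
def prune(components, max_cost):
    """Greedily remove the component with the lowest contribution per unit cost.

    `components` maps a name to a (cost, contribution) pair. Removal stops
    once the total cost of the remaining components is within `max_cost`.
    """
    kept = dict(components)
    while kept and sum(cost for cost, _ in kept.values()) > max_cost:
        worst = min(kept, key=lambda name: kept[name][1] / kept[name][0])
        del kept[worst]
    return kept


# Hypothetical feature maps: (compute cost, contribution to accuracy).
feature_maps = {
    "fm_a": (4.0, 0.40),
    "fm_b": (3.0, 0.03),
    "fm_c": (2.0, 0.30),
    "fm_d": (5.0, 0.10),
}

remaining = prune(feature_maps, max_cost=7.0)
```

In practice, pruning a neural network usually involves retraining between removals; this sketch only shows the selection logic.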

Saliency algorithms are designed to focus on the more interesting elements of an image by mimicking what the human eye is attracted to using neural networks. The company claims that the new algorithm was more effective at locating and capturing these elements.
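Once a saliency map exists, one straightforward way to turn it into a crop is to center a fixed-size window on the highest-scoring point and clamp it to the image edges. The sketch below assumes the map is a 2D grid of scores, one per pixel or region; the function names are illustrative and this is not Twitter's production code.

```python
def most_salient_point(saliency):
    """Return the (row, col) of the highest score in a 2D saliency map."""
    best = (0, 0)
    best_value = saliency[0][0]
    for r, row in enumerate(saliency):
        for c, value in enumerate(row):
            if value > best_value:
                best_value = value
                best = (r, c)
    return best


def crop_box(saliency, crop_height, crop_width):
    """Return (top, left, bottom, right) for a crop centered on the peak.

    The box is shifted as needed so it never extends past the image edges.
    """
    height, width = len(saliency), len(saliency[0])
    if crop_height > height or crop_width > width:
        raise ValueError("crop is larger than the image")
    r, c = most_salient_point(saliency)
    top = min(max(r - crop_height // 2, 0), height - crop_height)
    left = min(max(c - crop_width // 2, 0), width - crop_width)
    return top, left, top + crop_height, left + crop_width


# A toy 4x6 saliency map with its peak at row 1, column 4.
saliency_map = [
    [0.0, 0.1, 0.1, 0.2, 0.3, 0.1],
    [0.0, 0.1, 0.2, 0.4, 0.9, 0.2],
    [0.0, 0.0, 0.1, 0.2, 0.3, 0.1],
    [0.0, 0.0, 0.0, 0.1, 0.1, 0.0],
]
box = crop_box(saliency_map, crop_height=2, crop_width=2)
```

Because the crop follows a single peak, any systematic skew in the saliency scores carries directly into which part of the image users see.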

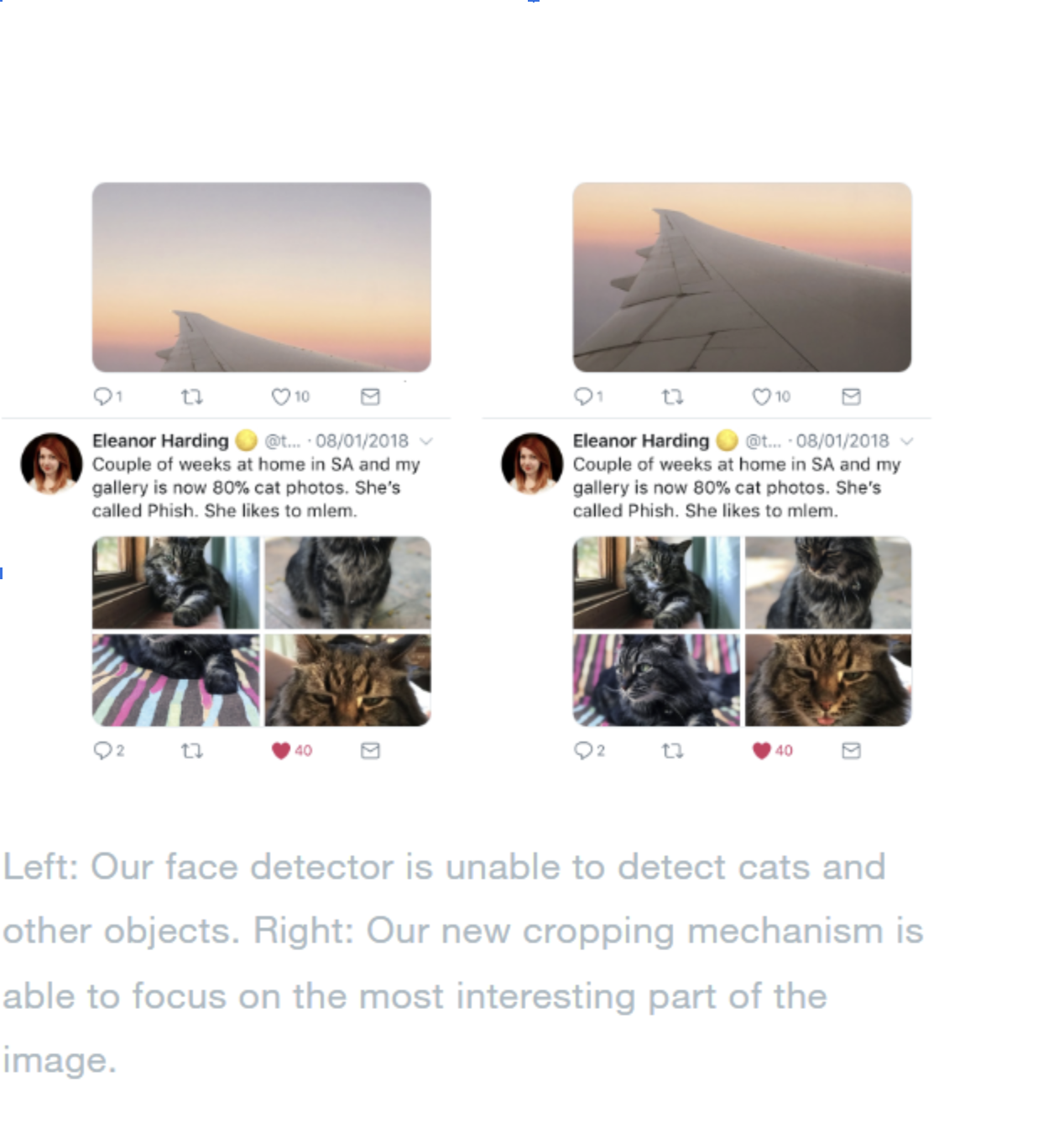

On its blog, the company released some examples of before-and-after auto-cropping:

A screenshot-cropped image showing how Twitter face detector operates with the AI-driven cropping mechanism. (Source: Twitter)

The company claims that its newest image-cropping algorithm allows users to crop images 10 times faster than previously. The model performs saliency detection on all images as soon as they are uploaded. The images are then cropped in real time.

The software was rolled out as a feature to twitter.com, iOS, and Android users.

Shortly after its release, the company was assailed with complaints of racial bias after users noted that people of color were cropped out of images in favor of white people. There were also concerns about a “male gaze” bias, resulting in images overly focused on a woman’s chest or leg area.

At the time, Twitter stated it would analyze its saliency algorithm for bias. In a May 2021 blog post, the company revealed the findings of its quantitative analysis. Under demographic parity, meaning no bias, each of the two groups being compared would have a 50% chance of being the one the algorithm selects as salient. Here’s what their investigation found:

- An 8% difference in favor of women over men

- A 7% difference in favor of white women over black women

- A 4% difference in favor of white people over black people

- A 2% difference in favor of white men over black men
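To show how a figure like those above could be derived, the sketch below measures how far one group's selection rate sits from the 50% expected under demographic parity. The exact metric definition is an assumption here, since the article does not spell it out, and the counts in the example are made up for illustration.

```python
def parity_difference(times_group_chosen, total_comparisons):
    """Return the gap, in percentage points, between a group's selection
    rate and the 50% rate expected under demographic parity.

    A positive value means the group was favored; a negative value means
    the other group was favored.
    """
    if total_comparisons <= 0:
        raise ValueError("total_comparisons must be positive")
    rate = times_group_chosen / total_comparisons
    return (rate - 0.5) * 100


# Hypothetical counts: one group's face was kept in 58 of 100 paired images.
gap = round(parity_difference(58, 100), 1)
```

A gap of zero would indicate parity for that pairing; the larger the absolute value, the stronger the skew toward one group.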

Twitter deactivated the photo-cropping feature nine months after launch.

Use Case #2: Platform Accessibility and Inclusion

Twitter Spaces is the company’s live audio and conversation platform. According to the use case report from their partner Microsoft, Twitter wanted to make the platform accessible to deaf or hard-of-hearing individuals.

As a business objective, accessibility also means more users and, therefore, more advertising interest in the platform. Twitter also sought to improve the accuracy and synchronization of captions and to support more languages.

To accomplish these goals, the company pursued extensive feedback across its ecosystem, which may have included suggestions to implement captions for users and potential users on Spaces.

Twitter also partnered with Azure Cognitive Services (ACS), a suite of AI services from Microsoft. ACS recommended its speech-to-text solution, which the company states provides real-time audio transcription into text using natural language processing.

For listeners, the speech-to-text feature is embedded into the Spaces platform and is activated by the listener via the “Show Captions” option under “Settings.”

Audio producers use the Azure platform to create a project, similar to other Azure services. Speech-to-text is available in various programming languages and tools. Microsoft also has a product called “Speech Studio,” which offers the same capabilities in a no-code interface.

Audio production workflows consist of the following:

- Create a custom speech project in Azure

- Upload test dataset (user-recorded audio or text data)

- Test data quality (i.e., playback and quality analysis of uploaded audio, or inspection and quality analysis of speech recognition test data)

- Test the model quantitatively (i.e., use a word error rate [WER] to determine if the model requires additional training)

- Train model (i.e., upload written transcripts, caption text, and the corresponding audio data)

- Deploy model
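The word error rate mentioned in the testing step is a standard metric: the number of word substitutions, insertions, and deletions needed to turn the recognized text into the reference transcript, divided by the number of words in the reference. Below is a minimal sketch of that calculation in plain Python. It is a generic implementation, not Azure's own tooling, and the lowercase, whitespace-only normalization is a simplifying assumption.

```python
def word_error_rate(reference, hypothesis):
    """Compute WER as word-level edit distance divided by reference length."""
    ref_words = reference.lower().split()
    hyp_words = hypothesis.lower().split()
    if not ref_words:
        raise ValueError("reference transcript must not be empty")

    # Dynamic-programming edit distance, keeping only the previous row.
    previous = list(range(len(hyp_words) + 1))
    for i, ref_word in enumerate(ref_words, start=1):
        current = [i]
        for j, hyp_word in enumerate(hyp_words, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            current.append(min(
                previous[j] + 1,                      # deletion
                current[j - 1] + 1,                   # insertion
                previous[j - 1] + substitution_cost,  # match or substitution
            ))
        previous = current
    return previous[-1] / len(ref_words)


# One substituted word out of four in the reference.
wer = word_error_rate("welcome to the space", "welcome to this space")
```

A lower WER means the captions track the spoken words more closely; a high WER on domain-specific audio is the usual signal that additional training is worthwhile.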

The term “transcripts” above refers to a user-downloadable text made available after the episode. However, “captions” in Twitter Spaces are similar to “closed captions” on live television, where the text corresponds to the current speaker’s words.

Azure speech-to-text uses a “pre-trained” universal language model out of the box. In other words, the model is trained on data covering various domains, dialects, and phonetics before the customer first uses it.

According to the product literature, Microsoft recommends that users further train their model using a “custom speech” option that augments the pre-trained model with domain-specific terminology.

Microsoft further provides a website where users can test the speech-to-text capabilities using a demo application. According to the Microsoft use case report, Twitter overcame the accessibility barriers of an audio-only platform with real-time captioning and transcription through the Azure platform.

Twitter also expanded its caption support to over 100 languages and dialects as a result of leveraging Microsoft’s speech-to-text solution. Microsoft’s 2022 annual report attested that revenue from Azure cloud services – of which the speech-to-text platform is a part – increased 45 percent year-over-year.