Hearing loss is a condition affecting an estimated 48 million individuals in the U.S. While the degree of severity may vary across age groups, the Hearing Loss Association of America reports that hearing loss ranks third after arthritis and heart disease among the most common physical ailments.

Many efforts have been made to improve quality of life for the hearing impaired and the effectiveness of hearing aid technology. Today, researchers are exploring how artificial intelligence can help personalize hearing aid technology and predict the risk of deafness early in life. We set out to determine the answers to the following important questions:

- What types of AI applications are emerging to improve quality of life for the deaf and hearing impaired?

- How is the healthcare market implementing these AI applications?

Overview of Hearing Loss AI Applications

The majority of AI use-cases and emerging applications for the hearing impaired appear to fall into three main categories:

- Closed Captioning Personalization: Companies are using natural language processing to personalize closed captioning by transcribing live conversations in real-time and to automatically translate sign language to text.

- Auditory AI Assistants: Companies are developing AI assistants to predict the best fit for an individual’s cochlear implant to help improve patient outcomes and to assist the hearing impaired with daily tasks.

- Prediction of Language Ability in Cochlear Implant Recipients: Researchers are developing machine learning algorithms that analyze brain scans to predict language development in children who receive cochlear implants.

In the article below, we present representative examples from each category, as well as the current progress (funds raised, pilot applications, etc.) of each example.

Closed Captioning Personalization

Cochlear

Founded in 1982, Cochlear is an Australia-based medical device company focused on developing hearing technology. The company claims to be integrating AI into its suite of implantable hearing solutions.

In May 2017, Cochlear announced its five-year “exclusive licensing and development agreement” with Otoconsult NV for use of its AI assistant called FOX (Fitting to Outcomes eXpert). The AI assistant reportedly aids in the process of adjusting the sound processor to a comfortable and effective fit for the cochlear implant recipient. Specifically, this process is known as “fitting” or “programming” and is essential for establishing and balancing loudness and softness of sound. Essentially, FOX helps to predict the best fit.

Otoconsult claims that FOX is based on an optimization algorithm that is trained on a large database of patient performance data and predicts the best cochlear implant fit. Comparative research studies suggest that FOX performs 20 percent better than manual fitting procedures alone.
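The idea of suggesting a fit from past patient data can be illustrated with a minimal sketch. This is not Otoconsult's algorithm, which is not described here; it swaps in a simple nearest-neighbor lookup as a stand-in. The record fields, the `suggest_settings` function, and all numbers are hypothetical and invented purely for illustration.

```python
from dataclasses import dataclass
from math import dist
from typing import Dict, List, Tuple


@dataclass
class PastFitting:
    profile: Tuple[float, ...]   # hypothetical patient measurements
    settings: Dict[str, float]   # hypothetical sound processor settings
    outcome: float               # hypothetical outcome score, higher is better


def suggest_settings(profile: Tuple[float, ...],
                     history: List[PastFitting],
                     k: int = 3) -> Dict[str, float]:
    """Among the k most similar past patients, return the settings
    of the record with the best outcome score."""
    if not history:
        raise ValueError("history must not be empty")
    nearest = sorted(history, key=lambda rec: dist(rec.profile, profile))[:k]
    best = max(nearest, key=lambda rec: rec.outcome)
    return dict(best.settings)


# Toy records, invented for illustration only (not real patient data).
HISTORY = [
    PastFitting((30.0, 45.0), {"gain_db": 10.0}, 0.62),
    PastFitting((32.0, 47.0), {"gain_db": 12.0}, 0.81),
    PastFitting((70.0, 80.0), {"gain_db": 25.0}, 0.90),
    PastFitting((31.0, 44.0), {"gain_db": 11.0}, 0.74),
]

suggestion = suggest_settings((31.0, 46.0), HISTORY, k=3)
```

A production system would presumably model many more parameters and validate each suggestion against measured patient performance; the sketch only shows the general shape of a data-driven starting point that an audiologist could then refine.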

“The FOX technology will change how we program cochlear implants…The audiologist can perform a set of simple, yet critical tasks, where the patient is an active participant, to provide the evidence for target-based fitting much like hearing aid verifications today.

Additionally, it allows the clinician to take the patient out of the audiometric booth providing a better patient experience.”

-William H. Shapiro, AuD, CCC-A, Clinical Associate Professor in Otolaryngology and Supervising Audiologist, NYU Cochlear Implant Center, NYU School of Medicine

According to its website, Cochlear claims its products have reached over 450,000 individuals across the globe and that it invests AUD 100 million (approximately USD 75.5 million) in R&D each year. It is not specified how many AI-related solutions the company has sold. It is important to note that the integration of FOX is currently in the pilot phase.

SignAll

Founded in 2016 and based in Budapest, Hungary, SignAll claims that its platform automatically translates sign language into text using computer vision and natural language processing.

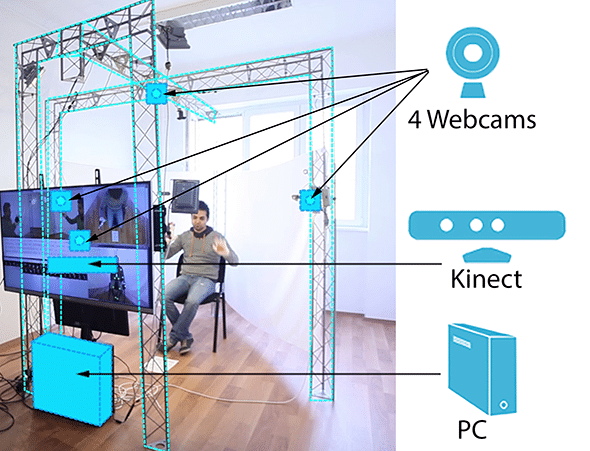

Currently in pilot mode, the prototype is composed of three main components: web cameras, a depth sensor, and a PC. As depicted in the image above, the translation process begins with the sign language user seated in front of the depth sensor. Web cameras positioned around the signer capture their movements, which are processed as a collection of detailed images, as demonstrated in the company's 42-second demo video.

The company claims that its natural language processing module is being trained to identify signs from the images and then translate them into complete sentences, which appear on the computer screen. The system’s interpretation of movement reportedly includes arm movements, body posture, facial expressions, and the rhythm and speed of the signing.

Ideally, this process will result in grammatically correct text which is understandable to anyone who reads it. As the system improves, the startup plans to replace its current hardware with smaller, faster and more portable technology. SignAll’s team is composed of 15 researchers including professionals with training and experience in data science, computer vision and American Sign Language (ASL).

According to the company’s website, SignAll has raised EUR 1.5 million or approximately $1.78 million USD in seed capital from an international consortium of funders including Deloitte. Partners and sponsors include Microsoft BizSpark and Gallaudet University. The startup is reportedly collaborating with Gallaudet University with a goal to develop the largest database of sign language sentences.

KinTrans

Founded in 2013 with headquarters in Dallas, Texas, KinTrans claims that its platform leverages machine learning to automate the translation of sign language to text. The platform shares some commonalities with competitor SignAll but there are also distinctions.

For example, KinTrans aims for one machine learning algorithm to translate signs to text, while a separate algorithm converts the recognized signs into complete, grammatically accurate sentences. The developers also aim to display an avatar that can recreate signs to communicate with a deaf user. The goal is to provide a more natural flow of communication between hearing and deaf people.
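The two-stage design described above (one model recognizes signs, a separate one builds sentences) can be sketched roughly as follows. This is a structural illustration only, not KinTrans' implementation: the function names, the lookup-table "classifier", and the trivial sentence composer are hypothetical placeholders for trained models.

```python
from typing import Callable, Iterable, List, Optional


def recognize_signs(frames: Iterable[str],
                    classify: Callable[[str], Optional[str]]) -> List[str]:
    """Stage 1: map each captured frame to a sign label.
    Unrecognized frames are skipped and consecutive repeats are collapsed."""
    glosses: List[str] = []
    for frame in frames:
        label = classify(frame)
        if label is not None and (not glosses or glosses[-1] != label):
            glosses.append(label)
    return glosses


def glosses_to_sentence(glosses: List[str],
                        compose: Callable[[List[str]], str]) -> str:
    """Stage 2: hand the sign labels to a separate sentence-building model."""
    return compose(glosses)


# Placeholder stand-ins for trained models (hypothetical, for illustration).
TOY_CLASSIFIER = {"f_me": "I", "f_want": "WANT", "f_coffee": "COFFEE"}


def toy_compose(glosses: List[str]) -> str:
    # A real composer would handle grammar and word order; this one
    # only joins the labels and adds capitalization and punctuation.
    return " ".join(g.lower() for g in glosses).capitalize() + "."


frames = ["f_me", "f_me", "f_want", "f_coffee", "f_noise"]
glosses = recognize_signs(frames, TOY_CLASSIFIER.get)
sentence = glosses_to_sentence(glosses, toy_compose)
```

Keeping the two stages behind separate callables mirrors the separation the company describes: the sign recognizer and the sentence composer can be trained and replaced independently.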

The platform is marketed as an annual subscription-based model. Service providers pay a fee to license KinTrans online software and accompanying equipment which includes a touch-screen monitor, speaker, and a mic. Currently, there is no charge to deaf customers for using KinTrans.

The company's 1-minute demo video shows how the platform captures signs for translation and depicts communication between a deaf customer and a service provider.

According to the company’s website, KinTrans appears to be accepting pre-orders and sign-ups prior to an official launch of the product and there doesn’t appear to be evidence of paying customers.

The platform will initially translate American Sign Language (ASL) and Arabic Union Sign Language. KinTrans Founder & CEO, Mohamed Elwazer, is a computer system engineer by training with experience in image processing and AI.

Auditory AI Assistants

Ava

Founded in 2014 in San Francisco, CA, Ava claims that its mobile app uses natural language processing to transcribe conversations in real time so that deaf and hearing-impaired people can participate in any spoken communication.

For example, all participants in a conversation would begin by downloading the app to their smartphones. The app picks up dialogue through each device’s microphone and uses natural language processing software to transcribe it for all participants to read.

The deaf or hearing-impaired participant can also type text, which is visible to the others in real time. The company's 4:16-minute demo video provides a detailed walkthrough of how the app works for multiple conversation participants and its general features.

Details on the data used to train Ava’s natural language processing algorithm are not currently available on the company’s website. While product details are limited, the company’s head of R&D seems to have robust AI skills, and this gives us more confidence that AI is, in fact, being used in this application. Ava’s head of R&D, Alexandre Hannebelle, earned a Master’s degree in data science in Paris and leads the company’s integration of machine learning.
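As a rough illustration of the multi-participant flow described above, the sketch below merges per-device streams of transcribed or typed messages into one shared transcript ordered by time. It is not Ava's implementation: the `Utterance` type, its fields, and the sample messages are assumptions made for the example, and the speech-to-text step itself is left out.

```python
import heapq
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Utterance:
    timestamp: float      # seconds since the conversation started
    speaker: str
    text: str
    typed: bool = False   # True when the message was typed, not spoken


def merge_transcripts(*streams: Iterable[Utterance]) -> List[Utterance]:
    """Merge per-device streams (each already sorted by time)
    into a single list ordered by timestamp."""
    return list(heapq.merge(*streams, key=lambda u: u.timestamp))


def render(transcript: Iterable[Utterance]) -> List[str]:
    """Format the shared transcript as display lines."""
    lines = []
    for u in transcript:
        tag = " (typed)" if u.typed else ""
        lines.append(f"[{u.timestamp:.1f}s] {u.speaker}{tag}: {u.text}")
    return lines


# Sample messages, invented for illustration.
alice = [Utterance(0.5, "Alice", "Hi"), Utterance(4.0, "Alice", "Lunch?")]
sam = [Utterance(2.0, "Sam", "Hello", typed=True)]

shared = merge_transcripts(alice, sam)
lines = render(shared)
```

Treating typed and spoken messages as the same record type keeps the shared view simple: every participant reads one timeline regardless of how each message was entered.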

Ava is accessible for free for up to 5 hours per month. Unlimited monthly use is available for a $29 monthly charge. To date, the startup has raised a total of $1.8 million in seed funding.

As of May 2018, Ava had an estimated 50,000-plus downloads on Android.

Prediction of Language Ability in Cochlear Implant Recipients

In January 2018, a new international collaborative study was announced between the Brain and Mind Institute (BMI) at The Chinese University of Hong Kong (CUHK) and Ann & Robert H. Lurie Children’s Hospital of Chicago. The researchers claim that they successfully developed a machine learning algorithm which predicts language ability in deaf children who have received a cochlear implant.

“Using an advanced machine learning algorithm, we can predict individual children’s future language development based on their brain images before surgery. Such prediction ability is a key step in achieving personalized therapy, which could transform many lives.”

– Dr. Gangyi Feng, first author and research assistant professor, Department of Linguistics and Modern Languages at CUHK

The algorithm is trained on a high volume of images captured using magnetic resonance imaging (MRI). Images were obtained from pediatric cochlear implant (CI) candidates implanted before 3.5 years of age. The machine learning algorithm is trained to identify atypical patterns that are characteristic of hearing loss early in life.
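The general workflow of training a model on image-derived features and checking how well it predicts held-out children can be sketched as follows. The study's actual algorithm and features are not described here, so this example substitutes a simple nearest-centroid classifier with leave-one-out evaluation; the two-number feature vectors and the "lower"/"higher" labels are synthetic and hypothetical, not study data.

```python
from math import dist
from typing import Dict, List, Sequence, Tuple

Vector = Tuple[float, ...]


def centroid(rows: Sequence[Vector]) -> Vector:
    """Mean of a group of feature vectors, computed column by column."""
    n = len(rows)
    return tuple(sum(col) / n for col in zip(*rows))


def fit(features: List[Vector], labels: List[str]) -> Dict[str, Vector]:
    """Compute one mean feature vector per outcome label."""
    groups: Dict[str, List[Vector]] = {}
    for x, y in zip(features, labels):
        groups.setdefault(y, []).append(x)
    return {y: centroid(rows) for y, rows in groups.items()}


def predict(model: Dict[str, Vector], x: Vector) -> str:
    """Return the label whose centroid is closest to x."""
    return min(model, key=lambda y: dist(model[y], x))


def leave_one_out_accuracy(features: List[Vector], labels: List[str]) -> float:
    """Hold out each sample in turn, train on the rest, and score the prediction."""
    hits = 0
    for i in range(len(features)):
        model = fit(features[:i] + features[i + 1:], labels[:i] + labels[i + 1:])
        hits += predict(model, features[i]) == labels[i]
    return hits / len(features)


# Synthetic stand-ins for pre-surgery image features (illustration only).
X = [(0.1, 0.2), (0.2, 0.3), (0.3, 0.2), (0.8, 0.7), (0.9, 0.8), (0.7, 0.9)]
y = ["lower", "lower", "lower", "higher", "higher", "higher"]

accuracy = leave_one_out_accuracy(X, y)
new_child = predict(fit(X, y), (0.75, 0.8))
```

Evaluating on held-out samples matters for a clinical claim like this one, because a model is only useful if it predicts outcomes for children it has not seen during training.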

“So far, we have not had a reliable way to predict which children are at risk of developing poorer language. Our study is the first to provide clinicians and caregivers with concrete information about how much language improvement can be expected, given the child’s brain development immediately before surgery…The ability to forecast children at risk is the critical first step to improving their outcome. It will lay the groundwork for future development and testing of customized therapies.”

– Dr. Nancy M. Young, Medical Director, Audiology and Cochlear Implant Programs at Lurie Children’s Hospital

The research team hopes to expand algorithm capabilities to predict language development in other pediatric conditions in the future. The study emphasizes that the findings reflect emerging research and does not specify next steps for clinical applications based on the findings.

Concluding Thoughts

Among current and emerging AI applications in the hearing technology market, the dominant trend appears to be the use of computer vision, natural language processing, audio transcription and machine learning to translate sign language to text.

The companies profiled in this article are still in their early stages; therefore, the most sustainable business model for delivering these services has yet to be proven. University partnerships are common among startups seeking robust sources of training data. SignAll’s partnership with Gallaudet University, one of the oldest institutions for the deaf and hearing impaired, is an example of this approach.

However, Software as a Service (SaaS) cloud-based platforms, as in the example of KinTrans, have proven to be a strong business model in other sectors. Therefore, there could be potential for traction in the hearing aid market.

Additionally, KinTrans’ focus on appealing to businesses could be a smart client acquisition strategy if the value of investment in the service can be proven. Based on these factors, it is plausible that within 5 years we could see an increase in automated closed-captioning and sign language translation services and their integration into both business and home environments.

The application of machine learning to improve the fit and function of cochlear implants is an emerging area that should continue to be monitored. We should anticipate more clinical and commercial applications in the coming years.

Header image credit: Adobe Stock