AI is not IT, and adopting artificial intelligence is almost nothing like adopting traditional software solutions.

While traditional software is deterministic, AI is probabilistic.

Coaxing value from data with algorithms is a challenging and often time-consuming process. Non-technical AI project leaders and executives don’t need to know how to clean data, write Python, or adjust for algorithmic drift, but they do need to understand the experimental process that subject-matter experts and data scientists go through to find value in data.

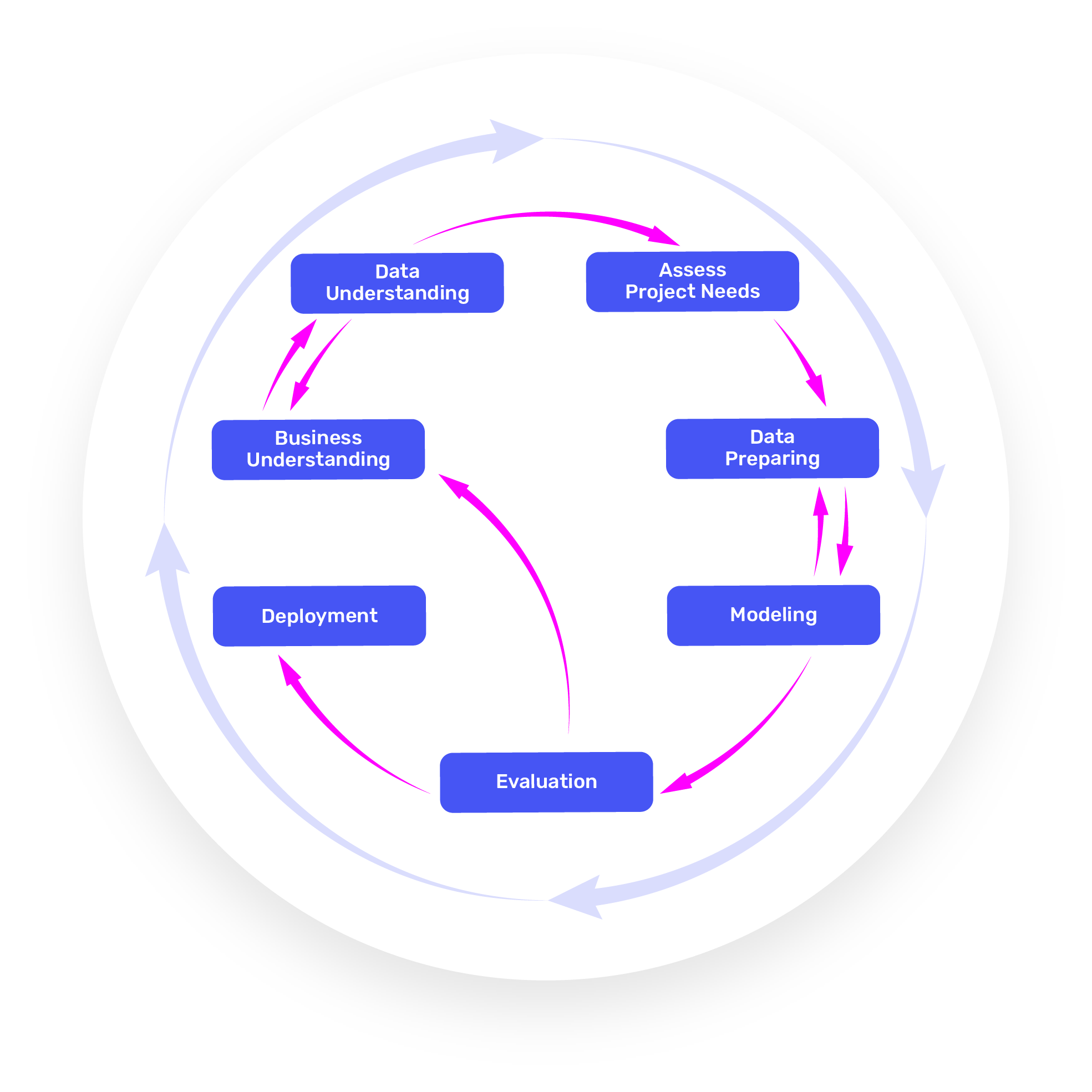

Last week we covered the three phases of AI deployment; this week we’ll dive deeper into the seven steps of the data science lifecycle itself, and the aspects of the process that non-technical project leaders should understand. The model we’ll use to explore the data science lifecycle (below) is directly inspired by the Cross-Industry Standard Process for Data Mining (CRISP-DM) model, as presented by IBM. Our model differs only slightly, placing less emphasis on technical nuance and more emphasis on business context. We’ll refer to the steps outlined in the image below throughout the remainder of this article:

Unlike the more linear three phases of deployment (pilot, incubation, deployment), the steps of the data science lifecycle repeat rather quickly, and teams often jump from one step to another to iterate on a model or work toward a successful result. Steps 1 and 2 (Business Understanding and Data Understanding) and steps 4 and 5 (Data Preparation and Modeling) often happen concurrently, and so are not listed strictly linearly.

The data science lifecycle has steps that can be considered in order – but that rough order is not always followed precisely in a real deployment.

For example, in the midst of data preparation, a team may decide to go “backwards” to business understanding to address additional budget needs (i.e., the data requires intensive and time-consuming cleaning, and more staff are needed), or to clarify a business outcome. Similarly, a team in the evaluation step might return to data understanding, or to assessing project needs, before being able to actually deploy a solution.

As with the three phases of deployment, we’ll illustrate the steps below with two example companies:

Example 1 – An eCommerce Firm Adopting a Product Recommendation Engine. The eCommerce firm sees promise in increasing its cart value and improving the on-site user experience, particularly for existing customers with a history of purchases and activity.

Example 2 – A Manufacturing Firm Adopting a Predictive Analytics Application. The manufacturing firm has a strong digital infrastructure and aims to leverage its existing data streams to detect breakdowns and errors in the manufacturing process before they happen.

1. Business Understanding

- Goal – Determine the business aim for the project, along with the resources allocated to its achievement. Ask: “What is the result that we are after?” Ask: “Is AI really the right tool for the job?” Ask: “What is the measurable and strategic value of this potential AI initiative?”

- Challenges – Finding opportunities that are realistic and within the company’s reach, without overreaching in assumptions about what AI can do. Accepting the long iteration times, and the critical skills and competencies that a company must develop, in order to bring AI to life in an enterprise.

- Likely Persons Involved –

- Senior leadership

- Lead data scientist

- Project manager

- Functional subject-matter experts

Example 1 – An eCommerce Firm Adopting a Product Recommendation Engine. Discuss the various options that the company has for growth and profitability – is a recommendation engine a priority when compared to the other options? What is understood about our customers and their purchase behavior that should be taken into account with this kind of marketing project?

Example 2 – A Manufacturing Firm Adopting a Predictive Analytics Application. Determine how a predictive model would be measured. Think through which machines require this kind of predictive maintenance – which risks and breakdowns are most costly for the company to endure, and can we focus on those first?

2. Data Understanding

- Goal – Determine the accessibility and potential value of your data. Ask: “Can we achieve our business aims with our present data assets?” Ask: “Are there challenges with this data, or opportunities to use this data in new ways to achieve our desired business outcomes?”

- Challenges – Assessing the value of data, and getting subject-matter experts and data scientists to look at data together to determine how it should be accessed, how it should be improved, and which features are likely of the highest value for the business outcomes.

- Likely Persons Involved –

- Lead data scientist

- Project manager

- Functional subject-matter experts

Example 1 – An eCommerce Firm Adopting a Product Recommendation Engine. Assess the quality of customer purchase behavior data. Does this data tell a coherent story? Do we feel confident that one customer account is one person, or do multiple family members (different ages, priorities, genders, preferences) shop on one account, making things more complicated?

Example 2 – A Manufacturing Firm Adopting a Predictive Analytics Application. Look at existing data sources from manufacturing equipment. Is the time-series and telemetry data from similar machines stored in the same way and in the same format? Can we ensure that the data is reliable? Where has it been least reliable, and can we reduce the factors that made it unreliable?

3. Assess Project Needs

- Goal – Determine the requirements and resources to continue forward with the project. This might include additional budget, additional training for staff, additional subject-matter experts to join the cross-functional project team, or access to new data systems.

- Challenges – Getting senior leadership to accept the inevitably complex and changing needs of real AI projects (especially for firms that lack previous practical data science experience).

- Likely Persons Involved –

- Senior leadership

- Lead data scientist

- Project manager

- Functional subject-matter experts

Example 1 – An eCommerce Firm Adopting a Product Recommendation Engine. The cross-functional team assigned to the project may decide that they need access to more historical data, and the resources to clean and organize it. They may also determine that – given the ROI opportunities in different parts of the business – they will want to apply the recommendation engine to two very specific product categories (as opposed to all products on file), and the team might request access to a dedicated subject-matter expert from that part of the business.

Example 2 – A Manufacturing Firm Adopting a Predictive Analytics Application. The team determines the number and type of sensors that they plan to put on their various devices, and the specific subject-matter experts they would need to properly set up, interpret, and understand these new data streams and run a successful proof of concept (PoC).

4. Data Preparation

- Goal – Accessing, cleaning, and harmonizing data. Feature engineering to determine and distill meaningful aspects of the data corpus. Determining the feasibility of the project given the data available.

- Challenges – Data scientists speaking frankly with business leadership about the challenges and costs of organizing data, which are often substantial (particularly in older firms, or firms with little or no practical data science experience). Admitting that a project is not feasible if the amount or quality of the data is insufficient.

- Likely Persons Involved –

- Senior leadership

- Lead data scientist

- Data science team

- Functional subject-matter experts

Example 1 – An eCommerce Firm Adopting a Product Recommendation Engine. The team cleans and harmonizes historical data, and determines the specific format that new data will need to take in order to feed the recommendation engine. The data scientists and subject-matter experts work together to determine the features within the purchase and user behavior data that they believe to be most important for initially training their models.
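Agreeing on “the specific format that new data will need to take” is easier when that format is written down as something a pipeline can check. The sketch below shows one hypothetical way to do that in Python; the `PurchaseEvent` fields and the validation rules are illustrative assumptions, not a prescribed schema for recommendation data.

```python
from dataclasses import dataclass
from datetime import datetime

# Hypothetical schema: the field names and rules are illustrative assumptions.
REQUIRED_FIELDS = ("customer_id", "product_id", "category", "price", "timestamp")


@dataclass(frozen=True)
class PurchaseEvent:
    customer_id: str
    product_id: str
    category: str
    price: float
    timestamp: datetime


def validate_event(raw: dict) -> PurchaseEvent:
    """Convert a raw record into a PurchaseEvent, raising ValueError on bad data."""
    # Treat absent, None, or blank fields as missing.
    missing = [key for key in REQUIRED_FIELDS
               if raw.get(key) is None or str(raw[key]).strip() == ""]
    if missing:
        raise ValueError(f"missing fields: {missing}")

    price = float(raw["price"])  # also raises ValueError for non-numeric text
    if price < 0:
        raise ValueError("price must be non-negative")

    # Expect ISO 8601 timestamps such as "2021-03-01T14:05:00".
    timestamp = datetime.fromisoformat(str(raw["timestamp"]))

    return PurchaseEvent(
        customer_id=str(raw["customer_id"]).strip(),
        product_id=str(raw["product_id"]).strip(),
        category=str(raw["category"]).strip().lower(),  # normalize category labels
        price=price,
        timestamp=timestamp,
    )
```

Rejecting malformed records at ingestion keeps bad data from silently reaching model training, and the rejected records themselves show where cleaning effort is needed.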

Example 2 – A Manufacturing Firm Adopting a Predictive Analytics Application. The data science team works closely with engineers and machinists to determine the most important telemetry signals (heat, vibration) of the equipment that they are aiming to place sensors on. Then, initial sets of data are collected, analyzed, and combined in a time series with existing data streams coming from central manufacturing software. Sensor and core system data is reformatted or reorganized so that it can be used to train models.
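Combining sensor telemetry with records from central manufacturing software usually means aligning events that were logged at different times. One common technique is an “as-of” join: each sensor reading is paired with the most recent status record at or before it. The sketch below uses only Python’s standard library; the record layouts and the `asof_join` name are hypothetical, and pandas offers the same idea as `pandas.merge_asof`.

```python
from bisect import bisect_right
from typing import List, Optional, Tuple

# Hypothetical record layouts. Real data would also carry machine IDs,
# units, and time zones; those are omitted to keep the sketch small.
SensorReading = Tuple[int, float]  # (time in seconds, vibration level)
StatusRecord = Tuple[int, str]     # (time in seconds, status from core software)


def asof_join(readings: List[SensorReading],
              statuses: List[StatusRecord]) -> List[Tuple[int, float, Optional[str]]]:
    """Attach to each sensor reading the latest status logged at or before it."""
    statuses = sorted(statuses)
    status_times = [t for t, _ in statuses]
    joined = []
    for t, value in sorted(readings):
        # Index of the last status whose time is <= t, or -1 if none exists yet.
        i = bisect_right(status_times, t) - 1
        status = statuses[i][1] if i >= 0 else None
        joined.append((t, value, status))
    return joined
```

For example, `asof_join([(5, 0.2), (15, 0.9), (1, 0.1)], [(0, "idle"), (10, "running")])` pairs the readings at times 1 and 5 with `"idle"` and the reading at time 15 with `"running"`.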

5. Modeling

- Goal – Establish a relationship between inputs and outputs, iterating on the data and algorithm to reach business value.

- Challenges – Cycling back to data preparation, data understanding, and business understanding during iteration. Pulling in subject-matter experts to contribute to the hypotheses and practical training of the models.

- Likely Persons Involved –

- Lead data scientist

- Data science team

- Functional subject-matter experts

- Project manager

Example 1 – An eCommerce Firm Adopting a Product Recommendation Engine. Bearing in mind the success metrics that the team has decided upon, the data science team tests new product recommendations within the specific product categories of focus. Feedback from team members, and potentially from a small cohort of users, is used to calibrate toward improved cart values and conversion rates. New features in the data are added, or weighted differently, to dial in on the desired outcomes.

Example 2 – A Manufacturing Firm Adopting a Predictive Analytics Application. The team would work together, using past repair and breakdown data along with new telemetry data, to predict which machines are most likely to break down. This may require a relatively long time horizon, or a relatively large number of machines to test with initially, in order to capture enough instances of machines in need of repair, since only these events inform the model’s predictive ability.
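Why a longer time horizon or a larger fleet helps can be seen by counting learning examples. One common framing, assumed here for illustration, labels each telemetry reading 1 if a breakdown follows within a chosen horizon and 0 otherwise; the functions `label_readings` and `positive_share` are hypothetical names, and real projects would label per machine rather than against a single timeline.

```python
from typing import List


def label_readings(reading_times: List[int], breakdown_times: List[int],
                   horizon: int) -> List[int]:
    """Label a reading 1 if a breakdown occurs within `horizon` time units after it."""
    labels = []
    for t in reading_times:
        # A breakdown counts if it happens strictly after t and no later than t + horizon.
        labels.append(int(any(t < b <= t + horizon for b in breakdown_times)))
    return labels


def positive_share(labels: List[int]) -> float:
    """Fraction of readings labeled as preceding a breakdown."""
    return sum(labels) / len(labels) if labels else 0.0
```

With readings every 10 time units from 0 to 90 and one breakdown at time 55, a horizon of 20 yields two positive readings, while a horizon of 5 yields only one. Rare breakdowns leave the model very few positive examples to learn from, which is why more machines or a longer observation period may be needed.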

6. Evaluation

- Goal – Determining whether or not our data assets and models are capable of delivering the desired business result. This often requires many cycles back to steps 1, 2, 3, 4 or 5 – as hypotheses are refuted, and new ideas surface.

- Challenges – Determining strong, quantifiable criteria for measuring success, particularly where benchmarks are hard to establish. Involving senior leadership and subject-matter experts in a robust evaluation that allows for a confident deployment.

- Likely Persons Involved –

- Senior leadership

- Lead data scientist

- Project manager

- Functional subject-matter experts

Example 1 – An eCommerce Firm Adopting a Product Recommendation Engine. Over time, the team would compare the performance of the new product recommendations against previous product listings or recommendation methods. In this evaluation step, data scientists and subject-matter experts come together to determine what seems to be working, what is not, and how to adjust the models, the data, or the user experience of the recommendation engine to better drive toward the desired outcomes (higher cart value, higher conversion rate of users to customers).
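A minimal sketch of the two outcome measures named above, assuming the team has cart values for a control group (previous recommendations) and a treatment group (new recommendations), plus visitor and buyer counts. The function names are hypothetical, and these are point estimates only; a real evaluation would also check whether a difference is statistically meaningful before acting on it.

```python
from statistics import mean
from typing import List


def cart_value_lift(control: List[float], treatment: List[float]) -> float:
    """Relative change in average cart value, treatment versus control."""
    baseline = mean(control)
    if baseline == 0:
        raise ValueError("control average is zero; lift is undefined")
    return (mean(treatment) - baseline) / baseline


def conversion_rate(buyers: int, visitors: int) -> float:
    """Share of visitors who became customers."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return buyers / visitors
```

For example, control carts of 40 and 60 against treatment carts of 55 and 65 give a lift of 0.2, i.e. a 20% higher average cart value.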

Example 2 – A Manufacturing Firm Adopting a Predictive Analytics Application. The cross-functional team assesses the predictive model’s suggestions, determining whether they are tangibly better or worse than previous methods. In an early proof of concept or incubation phase, this assessment may be more qualitative (i.e., do we believe that our previous methods would have detected this equipment malfunction?), while in a real deployment, it would be quantitative (e.g., How many breakdowns occur per month? How much uptime is lost across X category of machines per month? What is the false positive rate of the predictive maintenance system?).
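The quantitative questions above can be answered by comparing the machines the system flagged with the machines that actually broke down over a period. The sketch below assumes machine IDs are available as sets; `alert_metrics` is a hypothetical name. Note that “false positive rate” is sometimes used loosely to mean the share of alerts that turned out to be unnecessary, which corresponds to 1 minus the precision computed here.

```python
from typing import Dict, Set


def alert_metrics(alerted: Set[str], broke_down: Set[str],
                  fleet: Set[str]) -> Dict[str, float]:
    """Compare predictive maintenance alerts with actual breakdowns for one period.

    alerted:    machine IDs the system flagged
    broke_down: machine IDs that actually broke down
    fleet:      all machine IDs monitored during the period
    """
    tp = len(alerted & broke_down)          # flagged and did break down
    fp = len(alerted - broke_down)          # flagged but did not break down
    fn = len(broke_down - alerted)          # broke down without being flagged
    tn = len(fleet - alerted - broke_down)  # neither flagged nor broke down
    return {
        # Share of healthy machines that were wrongly flagged.
        "false_positive_rate": fp / (fp + tn) if fp + tn else 0.0,
        # Share of alerts that corresponded to a real breakdown.
        "precision": tp / (tp + fp) if tp + fp else 0.0,
        # Share of breakdowns that the system caught in advance.
        "recall": tp / (tp + fn) if tp + fn else 0.0,
    }
```

With a fleet of 10 machines, alerts on 3 of them, and 2 breakdowns (one flagged, one missed), the false positive rate is 2/8 = 0.25, precision is 1/3, and recall is 0.5.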

7. Deployment

- Goal – To successfully integrate the AI model or application into existing business processes. Ultimately, to deliver a business outcome.

- Challenges – Training staff to use the new AI application. Providing the ongoing upkeep required to keep the model working and adapting to change.

- Likely Persons Involved –

- Lead data scientist

- Data science team

- Project manager

Example 1 – An eCommerce Firm Adopting a Product Recommendation Engine.

- Phase 2: Incubation Deployment: The recommendation engine, which has been tested sufficiently in a sandbox environment with feedback from internal team members, is integrated into part of the eCommerce website, and 15% of users are shown AI-generated recommendations instead of the previous recommendations.

- Phase 3: Full Deployment: The recommendation system is fully integrated into the website, becoming the default experience on all web interfaces where the team believes it will deliver value. A monitoring system is established to calibrate the results and findings from the new system, with regular meetings and diagnostics to ensure that the system is performing and improving.
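Exposing a fixed share of users, as in the 15% incubation rollout, is commonly done with deterministic bucketing: hash each user’s ID so the same user always lands in the same group across visits. The sketch below assumes users have stable string IDs; the `in_treatment` function and the experiment name `"recs"` are hypothetical.

```python
import hashlib


def in_treatment(user_id: str, experiment: str, percent: float = 15.0) -> bool:
    """Deterministically decide whether a user sees the new recommendations."""
    # Including the experiment name keeps bucket assignments independent across experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) % 10000  # roughly uniform bucket in 0..9999
    return bucket < percent * 100         # e.g. 15% -> buckets 0..1499
```

Because assignment depends only on the user ID and experiment name, a returning customer keeps the same experience, and the team can widen the rollout by raising `percent` without reshuffling users who were already exposed.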

Example 2 – A Manufacturing Firm Adopting a Predictive Analytics Application.

- Phase 2: Incubation Deployment: The predictive maintenance system is integrated into a part of the workflow on the manufacturing floor. Now, a small cohort of machinists and engineers, some of whom were likely not part of the cross-functional AI team, are able to use and respond to this new system, under the guidance of the AI team.

- Phase 3: Full Deployment: The predictive maintenance system is fully integrated into the manufacturing workflow, becoming the default process in all machining functions where the AI team believes it can deliver value (areas that have already been tested in the PoC and incubation phases). A monitoring system is established to calibrate the results and findings from the new system, with regular meetings and diagnostics to ensure that the system is performing and improving.