Data is essential when it comes to building machine learning models for business applications. A strong AI strategy is predicated on data that is specific to the business problem a company is trying to solve, as outlined in our recent article: Data Collection and Enhancement Strategies for AI Initiatives in Business. When it comes to executing on that strategy, oftentimes the first thing a company needs to do is collect some or all of the data it will need to build the right machine learning model for its use case.

Crowdsourcing may help businesses looking to build their own machine learning models collect and label the data on which they intend to train those models. Machine vision systems in particular are predicated on volumes and volumes of data. Unlike training a natural language processing model, a crowdsourcing program is more apt for labeling machine vision data collected by other means. That said, there are machine vision use cases for which crowdsourced data collection might be viable.

We spoke with Mark Brayan, CEO of Appen, a firm that offers crowdsourced training data for machine learning applications. In our interview, Brayan discusses when a company might take advantage of the crowd to acquire the data it needs to train a machine learning model. Listen to our full interview with Mark Brayan below:

Brayan covers two use cases where companies might consider working with data collection vendors to collect and label data for machine vision systems: autonomous vehicles and checkout-less shopping.

Use Case: Autonomous Vehicles

Brayan gives an autonomous vehicle example. A self-driving car company looking to train the machine learning models behind their cars would need to collect a variety of data at scale. Brayan points out:

You only have to go and stand out in the street, and all of a sudden you sense all the things you have to know before you cross the road, let alone drive down the street. You have to be able to see people moving and where they are and whether or not they’re going to cross the street, look at someone on the other side of a parked vehicle and who they may be and where they may be going, sense what animals are going to do. All of that takes an enormous amount of data so that every one of those use cases are covered.

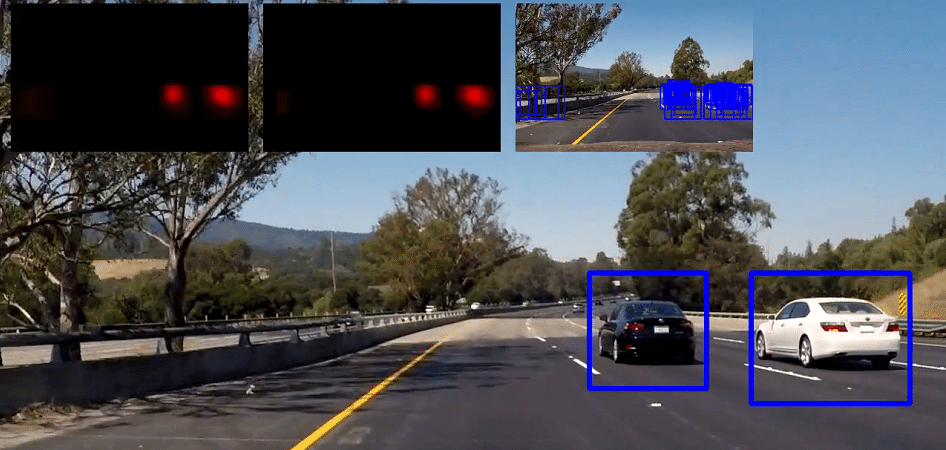

In order to train a machine vision system for use in a fleet of self-driving cars, the company first needs to identity the geolocation into which it intends to sell. If the company intends to sell its cars to buyers in major cities across the US, it will need to train its machine vision system to recognize all of the obstacles one could possibly encounter while driving in the city, the most basic of which include stop signs, yield signs, road work signs, handicap parking zones, traffic lane signs, and other cars. Obstacles more specific to the city might include people crossing the street, baby strollers, dogs, cyclists, emergency vehicles with and without their lights on, construction blockades, and traffic cops.

Each of these obstacles of various sizes and in various lightings and states of motion is visual data. This data first has to be collected. To do this, the company needs to collect hundreds of thousands if not millions of images or minutes of footage showing these obstacles in various states. In the case of self-driving cars, it might be possible to purchase a database of these images or this footage given the precedence of training machine visions systems for self-driving cars.

Another way to collect the data is by working with a crowdsourcing company or similar data collection vendor. These vendors have access to a network of people in various locations who can be placed on data collection projects. Theoretically, a company could pay a crowdsourcing company to have hundreds of humans living in the cities into which it plans to sell drive around in the company’s cars. These cars would be equipped with cameras all around the car that would collect footage showing all of the different obstacles that a car might encounter as it drives through the city. At great enough scale, this could allow the company to collect the data it needs to train its machine learning model in a relatively short period of time.

Training Machine Vision Systems for Different Locations

A self-driving car’s machine vision system would ideally function in many different locations, but this kind of broad capability requires a specific kind of training. A self-driving car trained on the objects and density of people one might find in New York City may have a difficult time navigating on a dirt road in northern Canada, for example. This is due to a few reasons.

A self-driving car company may not see a reason to train its machine vision system to recognize deer and moose that might cross the road if they’re selling into cities like Boston, New York, or San Francisco. If they intend to sell into rural Vermont, Maine, or Quebec, moose and deer crossings are all of a sudden much more common obstacles. A machine vision system that is not trained to deal with these obstacles may have trouble stopping the vehicle in the event that a moose walks across the road. In this case, the company would be wise to collect data from areas where moose crossings are more frequent if it intends to sell into those regions.

Similarly, a machine vision system trained on the streets of Seattle will have difficulty recognizing obstacles in Afghanistan. It follows that autonomous military vehicles that need to work in desert terrain should be trained on data collected from that terrain.

Labeling Data for Machine Vision Systems – Driving Footage

Once the data is collected, it must first be labeled before it is fed into a machine learning algorithm. A machine is not going to know that a few seconds of footage showing a woman crossing the street is in fact showing a woman crossing the street. Machines do not actually see in the way humans do; all they “see” is 1s and 0s. When a machine learning algorithm is fed an image or frame of footage, it interprets that image as pixels and not as the content a human might interpret. In order for a machine to determine that an image or frame shows one piece of content over another (a woman, and not a stop sign, for example), that image or frame needs to be labeled as that piece of content. The crowd can help here, too.

Data collection vendors may also provide data labeling services. These vendors develop programs where people manually label images and footage to describe their content. In this example, the self-driving car company has already collected hundreds of thousands of seconds of dashcam footage. It can then pay a crowdsourcing company to find people to label that footage.

For example, a data collection firm could find people to sit down at a computer and label many frames of footage showing a black dog crossing the street in broad daylight as “animal on the road.” They could then put the same label on frames of footage showing a black dog crossing the street at night. A machine won’t know that the pixels of an outline of a black dog, two bright eyes somewhere lower in the frame, and a band of red pixels somewhere lower are in fact a black dog with a red collar crossing the street at night.

The machine only “sees” pixels without any context. A human needs to label visual data showing the same content in different lightings as the same content if a machine is to know to take the same action regardless of the lighting situation in a real world setting: in this case, stop the car.

Use Case: Checkout-less Shopping

Brayan briefly describes another use case for machine vision in the form of checkout-less shopping:

Shops…want to have cameras…enable people to walk into the shop, take a product off the shelf, and walk out. [They want] that transaction done in the background by recognizing who that person is, recognizing what product they just picked up, and then checking the price of that product as the person leaves [the store] and charging their account. A human could do that pretty easily, but to build AI to do that is incredibly complex.

Still, Brayan says that his company is “doing a lot of work with really large data sets and tagging and labeling that data in a variety of ways.” His example of checkout-less shopping is one that is as of right now far-out in terms of viability. Although checkout-less shopping applications such as Amazon Go are extremely nascent in terms of traction, we can infer how their machine vision systems might be trained as a representative example of how data collection vendors like Appen might come into play.

A machine vision system trained for a checkout-less shopping application might require a greater variety of data when compared to the self-driving car application. First, the retail company would need to log a baseline amount of nominal data of the items on their shelves into a database, including the product’s name, price, and location on a given shelf.

Amazon Go currently requires customers to download an app and enter their credit card information into it. Then, customers scan an app on their phones on a device as they walk into one of its stores.

Cameras set up on shelves would record customers pulling an item from them. The machine learning model behind the cameras would both detect that a customer has pulled an item from the shelf and which item they pulled. The item would then be added to the customer’s cart on the app. When the customer leaves the store, the app would bill the credit card it has on file.

For this to be possible, the machine vision system needs to be trained on hours and hours of footage of every item in the store being pulled from the shelf in various ways, at various angles, and in the lighting of each store in which the company intends to deploy the system. Fortunately, a retail company will likely sell the same items and install the same light fixtures across all of its stores. In theory, a machine vision system built for use in one store might be deployable across all its stores.

Labeling Data for Machine Vision Systems – Shelf Camera Footage

Although they could, it’s unlikely a data collection vendor would find people to enter the store and pull various items off the shelf as the shelf cameras are running. That said, crowdsourcing could come into play as far as data labeling is concerned.

Crowdsourcing companies could have people sit down at a computer and review all the footage collected by a shelf camera. They could then label each frame of that footage with the name of an item the store sells. This gets more complicated when the store sells multiple brands of the same product, which it likely will. People might need to label the frame of a customer pulling a 2-liter soda bottle from a shelf as either Coca-Cola or Pepsi, for example. This makes the system difficult to scale because every time the company adds new products to its inventory, it would need to make sure to train its machine vision system to recognize those products.

Additionally, people would have to label footage as instances of a customer either pulling an item from a shelf or putting it back on the shelf. Without this step, a machine vision system may keep an item in a customer’s digital cart that they decided not to buy. Thus, they would be charged for that item when they left the store despite walking out without the item.

Only once the data is labeled could it be fed into the machine learning algorithm behind the vision system. This would in theory be the way checkout-less shopping systems could work.

Labeling Data for Machine Vision Systems – Facial Recognition

Brayan suggests an even more complex example, however. He suggests a scenario in which a person can purchase items from a store by walking in and out of that store without scanning an app on their phone. This not only requires even more data labeling, but an additional machine vision application: facial recognition.

In this example, a person would theoretically upload many images of their face at various angles and in various lightings to a retail company’s website. There they would also enter their credit card information.

Then, a customer could enter the store and look into a camera at eye-level that scans their face and pulls up their profile on a computer screen. The customer would then confirm that the system pulled up the right profile, and they would enter the store.

A camera system would follow them through the store as they pulled different items from the shelf. The cameras placed throughout the shelves would again determine which items the customer pulled. The camera system following them throughout the store would detect when the customer leaves and then charge the customer’s card for the items they pulled from the store shelves.

To train the machine vision system that follows the customer from when they enter the store to when they leave, the algorithm behind the system would first need to have been trained on human faces in a general sense. This data could theoretically be purchased from vendors with a database of images of human faces at various angles and in various lightings, but if the company intended to train the algorithm from the ground up, they could again turn to data collection vendors that offer crowdsourcing services.

People could manually label all of the parts of a face within images of human faces at various angles and in various lightings, allowing a machine vision system to detect where a person’s eyes, ears, mouth, and nose are located.

Once the groundwork is laid, the algorithm would need to be trained on the face of each customer that uploads images of their face to the company’s website. When customers upload images of their face to the website, they are in effect feeding labeled data to the machine learning algorithm behind the vision system; their face is labeled as their name and credit card information. This would in theory allow the machine vision system to detect when a particular person enters and leaves the company’s store and then charge them accordingly.

Brayan points out that such an application can get even more complex: “Two people walk in but one person walk out. How does that happen?” He asks. One answer? “It’s a mother and a child, and she walks in with the child next to her and carries the child out.” A machine would not be able to detect the difference, but a human could easily. People gathered by a data collection vendor could potentially help train the model for this common scenario, among others.

The Future of Machine Vision

Machine vision systems require deep learning, a complex expansion of machine learning which we discuss in one of our most popular articles, What is Machine Learning? Although sight—or the computational proxy for it—is a relatively difficult task for machines, Brayan expresses excitement about the possibilities of the technology. He says, “The exciting thing about image data is the same data can be used over and over again because that data can be labeled differently. So I think there’s a real endless set of possibilities with vision-based AI.”

What this means is that as companies and researchers continue to collect image and video data with which to train machine learning models, that data can in turn be used by other companies and researchers looking to train machine learning models to do even more complex or even completely different visual tasks than those that used the data before them. This opens up room for innovation that could drive more business value in finance, healthcare, and heavy industry. Crowdsourcing is one way to label this data for a variety of use cases.

This article was sponsored by Appen, and was written, edited, and published in alignment with our transparent Emerj sponsored content guidelines. Learn more about reaching our AI-focused executive audience on our Emerj advertising page.

Header Image Credit: Towards Data Science