The idea of using artificial intelligence (AI) in the military scares many people in the US, especially when it comes to the Army. The US Army typically operates on the ground, so its use of AI may feel uncomfortably close to home for some people.

The US government largely ignored this widespread fear by pursuing an aggressive campaign to incorporate AI into the Army as part of its $717 billion budget under the National Defense Authorization Act. Despite civil protests against Project Maven, the project received a six-fold increase in funding for 2019, and the Defense Advanced Research Projects Agency (DARPA) is likely to spend about $2 billion on AI.

More recently, the Combat Capabilities Development Command Army Research Laboratory (ARL) scored $72 million for AI research under the Artificial Intelligence Task Force in collaboration with Carnegie Mellon University and other top research universities.

The reason for this seemingly cavalier attitude is simple: the US government has no choice. Notwithstanding privacy and individual rights concerns, the age of AI is already here. Many countries are aggressively selling or acquiring AI-powered autonomous vehicles, weapon systems, surveillance, logistics, and training for security and defense. Falling behind in the AI race could have disastrous consequences.

According to Secretary of the Army Mark Esper, the future battlefield requires mastery of AI. He stated:

“…if we can master AI … then I think it will just really position us better to make sure we protect the American people.

Winning on the future battlefield requires us to act faster than our enemies while placing our troops and resources at a lower risk….Whoever gets there first will maintain a decisive edge on the battlefield for years to come.”

That said, military AI does have legitimate issues. It pays to be cautious about turning over military operations and decisions to non-human intelligences, which is the reason the Department of Defense emphasizes the role of AI as augmentation rather than as the replacement of humans. In particular, AI could help in non-lethal systems to improve situational awareness, assist in decision-making, and increase efficiencies in logistics and maintenance.

This is not to say that AI has no place in weapons systems. AI can be particularly helpful in making the Army more formidable. For example, there is a plan to use AI, machine learning, and computer vision to increase the accuracy and capabilities of ground combat vehicles. While this is still in the exploratory stage, the Advanced Targeting and Lethality Automated System (ATLAS) program is looking to put the plan into action as soon as possible.

This article will discuss some recent projects involving artificial intelligence in the US Army, specifically:

- Black Hornet

- Advanced Targeting and Lethality Automated System (ATLAS)

- Integrated Visual Augmentation System (IVAS)

- TRAuma Care In a Rucksack (TRACIR)

- Palantir Defense

- Libratus

Implementation of all the above projects is pending, but given the current gung-ho attitude in the defense sector, most of them are likely to be implemented soon.

Black Hornet

Autonomous vehicles tend to be rather large, but the Army is aiming to equip soldiers on the ground with palm-sized drones to serve as their personal spies on the battlefield. The contract went to FLIR Systems to supply Black Hornet PRS (Personal Reconnaissance System) for $39.6 million. According to the press release, the drone is a “highly capable nano-unmanned aerial vehicle (UAV) system,” 8,000 of which the company has supplied to other clients in the world.

The drone is quite small, measuring just 6.6 inches in length and weighing 33 grams. It is purportedly capable of scouting terrain up to 1.24 miles away at about 13 mph. This may help soldiers achieve situational awareness without attracting the attention of hostile forces or exposing themselves to risk in areas beyond line of sight.

The product page describes it thus:

Extremely light, nearly silent, and with a flight time up to 25 minutes, the combat-proven, pocket-sized Black Hornet PRS transmits live video and HD still images back to the operator.

Below is a 2-minute video showing the uses of PRS in the Soldier Borne Sensors program:

Below is a 2-minute video introducing the Black Hornet PRS:

Advanced Targeting and Lethality Automated System (ATLAS)

The Army has invited all takers to propose a way to build a fully automated ground vehicle to fight alongside human soldiers on future battlefields. It calls this the Advanced Targeting and Lethality Automated System (ATLAS) program, and it is a carryover from previous attempts to use robots in combat. The latest attempt was in 2017 during a live-fire exercise as part of the TARDEC Northern Strike B-roll in Michigan. While this was an unmanned rather than autonomous vehicle, it was the first demonstration of its type.

Below is a 2-minute video giving a brief glimpse of the exercise:

As a next step, the Army plans to roll out an AI-powered autonomous combat vehicle that would “acquire, identify, and engage targets at least 3X faster than the current manual process.” The Campaign to Stop Killer Robots, a coalition of non-governmental organizations, is calling for a ban on such systems, arguing that “Fully autonomous weapons would decide who lives and dies, without further human intervention, which crosses a moral threshold.”

However, a large number of autonomous weapon systems already operate globally. In fact, automated target recognition systems have been around since the 1970s, although with limited intelligence, and most are defensive in nature. The delayed reaction to the existence of autonomous weapons might be attributable to the use of AI in these systems, which makes little sense as this does not change the concept of autonomy.

At any rate, the Army clarified that ATLAS algorithms will leave the final decision on whether to pull the trigger to humans. According to Army engineer Don Reago:

The algorithm isn’t really making the judgment about whether something is hostile or not hostile. It’s simply alerting the soldier, [and] they have to use their training and their understanding to make that final determination.

Integrated Visual Augmentation System (IVAS)

The US military, in general, has made it a recent policy to go with commercially available products for its needs, and the Army is no exception. Among the many forays it has made into the commercial sector is its use of a modified version of a HoloLens 2 headset from tech giant Microsoft, a product originally marketed for gamers looking for the augmented reality experience.

Microsoft and 12 other companies accepted the $480 million contract to produce 2,500 of these modified headsets for the Army within the next two years despite protests from employees.

The modified military version, the Integrated Visual Augmentation System (IVAS), works much like the original does for gamers. It places digital images on top of what the wearer actually sees through the visor. Added features to the IVAS include phosphorous and thermal imaging, which help the wearer see in conditions of limited visibility, such as at night or when smoke is present. It will also be able to gather data on the stress levels and reactions of soldiers during training and combat to help make them better fighters.

The IVAS is still in development and still too large to work with standard helmets. However, the feedback from soldiers using the test units can help make it better.

Below is a 2:12-minute demo video of 365 Remote Assist, one of the features of an unmodified HoloLens 2 headset.

TRAuma Care In a Rucksack (TRACIR)

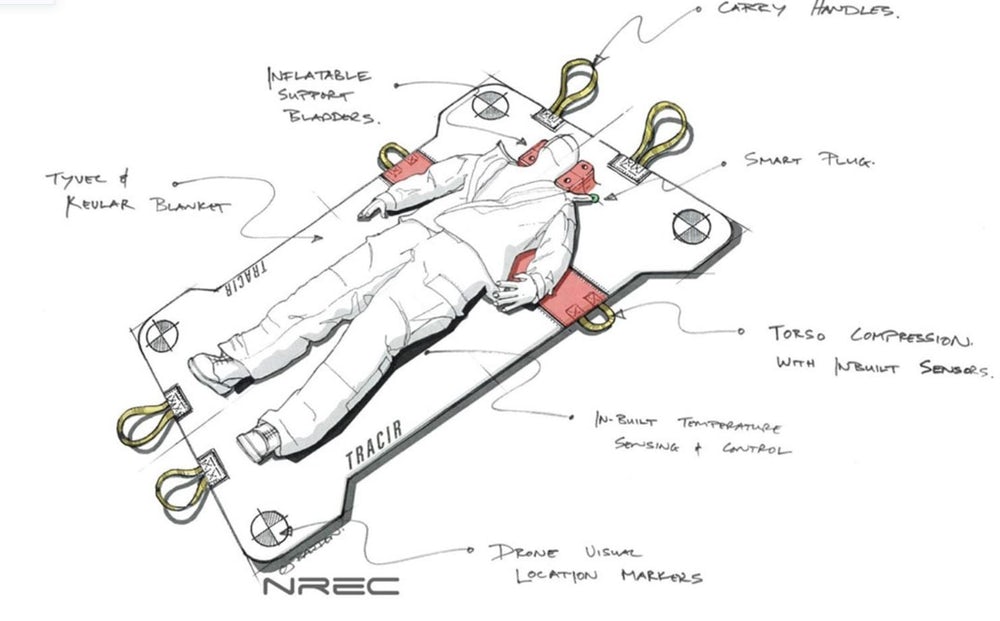

Notwithstanding the awkward acronym, the TRAuma Care In a Rucksack or TRACIR is definitely a military application of AI-powered technology that is bound to find favor with everyone. Primarily a high-tech first aid system, the aim is to provide soldiers on the battlefield access to autonomous emergency medical treatment within the “golden hour” when immediate medical attention is not possible.

The challenge is to design TRACIR so that it is small and light enough for soldiers to carry with them into battle, yet has AI technology that can stabilize a critically wounded person effectively. The Army awarded contracts to Carnegie Mellon University and The University of Pittsburgh School of Medicine, tasking them to come up with a trauma care system in four years for a total of $7.2 million.

The idea is to bring together a slew of medical professionals in the surgery, emergency medicine, pulmonary, and critical care fields to provide data on the treatment of traumatic injuries. This will enable data engineers to create the algorithms that will be the moving spirit behind TRACIR.

No one has yet come up with the physical form of TRACIR, or the form of autonomy that would make it possible for the trauma care system to dress wounds or insert a breathing tube autonomously. It would take much more development in technology than currently available to enable the latter.

As to the former, CMU’s Robotics Institute research professor Artur Dubrawski said:

…we see this as being an autonomous or nearly autonomous system – a backpack containing an inflatable vest or perhaps a collapsed stretcher that you might toss toward a wounded soldier….Whatever human assistance it might need could be provided by someone without medical training.

The system would have a series of sensors to feed data to the algorithms, which would then assess and treat the patient. The treatment might take the form of resuscitation, surgery, medicine, intravenous drips, and other methods of stabilization to keep the patient alive until he or she can get better medical care. When human intervention is required, it should be possible for people with no medical training to perform the necessary tasks.

Palantir Defense

Palantir Technologies snagged an Army contract worth a possible $800 million over 10 years to develop intelligence data analytics software to improve on the existing Distributed Common Ground System-Army (DCGS-A). The DCGS-A focuses on analyzing different data streams about enemy movements, terrain, and other issues of concern to produce real-time reports.

Palantir won the contract over long-time defense contractor Raytheon, and the data analytics company is confident that, with some tweaking, its Palantir Defense product, using the Nexus Peering module of the Palantir Gotham Platform, is the perfect fit for the data analysis needs of the Army. The Marine Corps had already used the product on the battlefield, where it proved fast and accurate.

Below is a 5:27-minute demonstration of the Palantir Gotham Platform integrating intelligence data using an open-source view of the Afghan Conflict:

Libratus

Most people would probably think twice about asking a poker player to make decisions about military matters, but it actually makes sense. Poker is a game of skill, not chance. Poker players have to weigh the odds of a certain card coming up given the known and unknown cards and the play as it progresses. Expert players do this automatically and can make successful calls based on a large number of variables.
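To make the idea of weighing the odds concrete, here is a minimal Python sketch of the kind of calculation a player does in their head. The function name `prob_at_least_one_out` and the flush-draw scenario are illustrative assumptions, not something taken from the Army's or Libratus' work.

```python
from math import comb


def prob_at_least_one_out(outs: int, unseen: int, draws: int) -> float:
    """Chance that at least one helpful card ("out") shows up.

    outs   -- number of unseen cards that would complete the hand
    unseen -- number of cards the player cannot see
    draws  -- number of cards still to be dealt
    (Hypothetical helper written for this illustration.)
    """
    if not (0 <= outs <= unseen and 0 <= draws <= unseen):
        raise ValueError("outs and draws must be between 0 and unseen")
    # Probability that every drawn card misses, then take the complement.
    miss_all = comb(unseen - outs, draws) / comb(unseen, draws)
    return 1.0 - miss_all


if __name__ == "__main__":
    # Texas Hold'em flush draw after the flop: 9 cards of the suit remain
    # among 47 unseen cards (52 minus 2 hole cards and 3 flop cards),
    # with 2 cards still to come.
    chance = prob_at_least_one_out(outs=9, unseen=47, draws=2)
    print(f"Chance of completing the flush: {chance:.1%}")  # 35.0%
```

A real player, or a bot, has to fold this kind of estimate into many other variables, such as bet sizes and what opponents might be holding.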

This weighing of odds under uncertainty is perhaps the reasoning behind the Army’s decision to mine the possibilities around the AI-powered bot Libratus, which won nearly $700,000 from expert human players in a Texas Hold’em poker match in 2017. Below is a 2:41-minute video of the feedback of human players pitting their skills against Libratus in that match:

The Army hired CMU professor Tuomas Sandholm, who developed Libratus together with PhD student Noam Brown, to adapt it to help the Army make strategic decisions.

Libratus uses computational game theory, and the Pentagon is betting $10 million that the technology has the potential to “tackle simulations that involve making decisions in a simulated physical space.”
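For readers curious what computational game theory looks like in practice, the sketch below shows regret matching, a simple textbook technique, applied to rock-paper-scissors against a fixed opponent who overplays rock. It is not taken from Libratus, which is far more sophisticated; the payoff table, the opponent's mix, and all function names are assumptions made for this illustration.

```python
ACTIONS = ("rock", "paper", "scissors")

# PAYOFF[a][b]: payoff to a player choosing action a against action b.
PAYOFF = [
    [0, -1, 1],   # rock
    [1, 0, -1],   # paper
    [-1, 1, 0],   # scissors
]


def strategy_from_regrets(regrets):
    """Play each action in proportion to its positive accumulated regret."""
    positive = [max(r, 0.0) for r in regrets]
    total = sum(positive)
    if total == 0:
        # No action is regretted yet, so mix uniformly.
        return [1.0 / len(regrets)] * len(regrets)
    return [p / total for p in positive]


def train(opponent, iterations=1000):
    """Return the learner's average strategy against a fixed opponent mix."""
    regrets = [0.0] * len(ACTIONS)
    strategy_sum = [0.0] * len(ACTIONS)
    for _ in range(iterations):
        strategy = strategy_from_regrets(regrets)
        # Expected payoff of each pure action against the opponent's mix.
        values = [
            sum(PAYOFF[a][b] * opponent[b] for b in range(len(ACTIONS)))
            for a in range(len(ACTIONS))
        ]
        # Expected payoff of the mixed strategy actually played this round.
        played = sum(s * v for s, v in zip(strategy, values))
        for a in range(len(ACTIONS)):
            # Regret: how much better action a would have done.
            regrets[a] += values[a] - played
            strategy_sum[a] += strategy[a]
    total = sum(strategy_sum)
    return [s / total for s in strategy_sum]


if __name__ == "__main__":
    # Hypothetical opponent who plays rock half of the time.
    average = train(opponent=[0.5, 0.25, 0.25])
    best = ACTIONS[average.index(max(average))]
    print(best)  # paper
```

Against an opponent who favors rock, the learner's average strategy shifts almost entirely to paper. Systems built for poker face the much harder problem of hidden cards and opponents who adapt.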

Not much is known yet about how the Army will use the Libratus-based technology, and Dr. Sandholm is not forthcoming, either. However, he does express his opinion about the ethical concerns and potential dangers of using artificial intelligence in the US Army:

I think AI’s going to make the world a much safer place.

Header Image Credit: Defense News