Many of today’s most popular digital products — from video games to social media and beyond — owe their popularity (and profitability) directly to their addictive potential.

These addictions have become more commonplace as screen time has skyrocketed across the developed world.

They’ve been described as “hijacking” our reward circuits.

And that’s a pretty accurate way to put it. We are driven by our reward circuits: our drive for a career, prestige, a new house, a romantic partner, or a good meal all ties back to some fundamental evolutionary drive that served our ancestors well more than a hundred thousand years ago.

Compared to the AI-augmented virtual experiences of the future, today’s technologies hardly scratch the surface of “hijacking.” Humanity is in for a wild ride.

As generative AI and virtual reality gain in popularity, the feedback loops of our virtual lives will create experiences (of entertainment, education, relaxation, etc.) that are vastly more appealing than anything the physical world has to offer.

In this article, I’ll explore how future AI-augmented digital experiences will be fundamentally appealing to our reward circuits — in ways that may lead to vastly less individual productivity and less societal power. From there, I’ll explore ways we might work with (or change) our reward circuits to counter some of the unproductive, tantalizingly distracting forces ahead.

This article is broken out into the following sections:

- TL;DR — A bulleted summary of the critical arguments of this article.

- Addictive Digital Stimuli: Today and Tomorrow — A review of today’s highly addictive stimuli (social media, gaming, pornography) and an overview of how AI-augmented near-future versions of these experiences will be vastly more addictive.

- Reward Circuits: Steering Our Future — An overview of how we might avoid suffering and keep society productive by working with our existing reward circuitry.

- Altering Our Reward Systems — Exploring the reasons why cognitive enhancement and brain-computer interface are inevitable, and why they will be crucial for our collective future survival.

- Policy Considerations — Questions for policymakers concerned about the future of society in (increasingly addictive) virtual worlds.

1. TL;DR — Closing the Human Reward Circuit

If you don’t have the time or patience to read this article, a summary is provided in bullet points below:

- Current virtual stimuli (social media, pornography, video games, etc.) can be addicting but not genuinely fulfilling.

- Soon, AI-augmented VR will almost entirely cater to the vast majority of human needs (for relaxation, entertainment, community, etc.). Most humans will live most of their lives in such VR worlds. The ability of these experiences to fulfill our needs will make them irresistible.

- Technology geared towards immediately satisfying human drives may have a natural gravitational pull away from productive tasks and towards an “audience of one.”

- Humanity should create a vision of how to wield our reward circuits along with our technologies in ways that strengthen our abilities to survive and persist. That vision should include actively guarding our reward circuits against being hijacked and steering away from situations that would harm our ability to survive and persist.

- Generative AI adoption and VR worlds will likely lead directly to cognitive enhancement (e.g., via brain-computer interfaces) and radical changes to the human condition and species. This brain modification will be necessary to avoid the traps of addictive reward circuits and to compete economically, militarily, and otherwise.

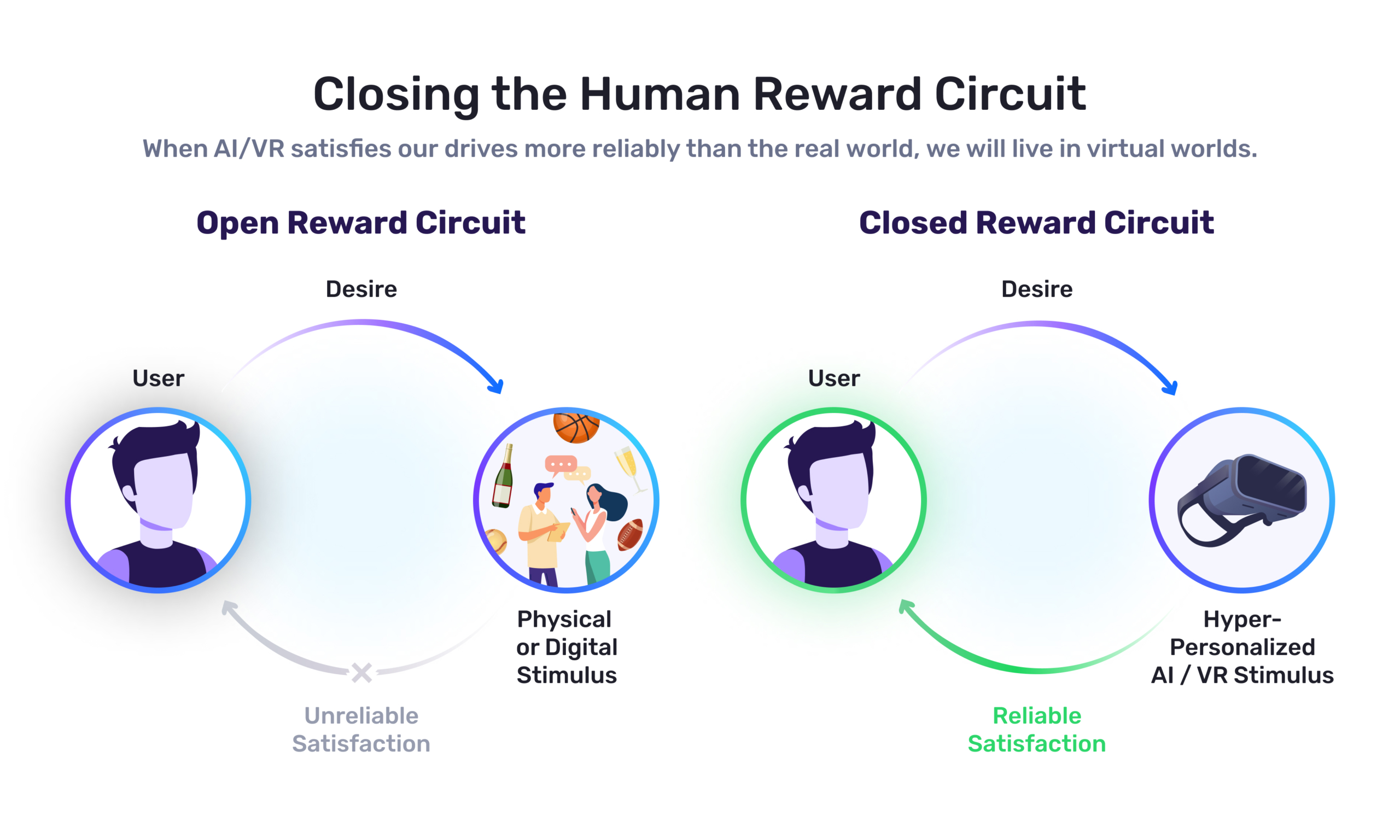

When AI/VR experiences satisfy human drives more reliably (and more deeply) than the “real” world, virtual experiences will come close to closing the human reward circuit.

Here’s how I frame it in a shorter reward circuit article on my personal blog:

Today, Fulfilling Our Reward Circuits is “Hit or Miss”:

Our daily lives are an amble from one reward circuit to the next (from hunger to boredom, etc.); what we constantly desire is a change in our emotional state.

Very frequently, the actions we take to satisfy our desires (to feel relaxed, entertained, enthusiastic, confident, etc.) don’t work. The TV show doesn’t entertain us, listening to music doesn’t calm our mental chatter before bed, pornography doesn’t really excite us. We amble on regardless.

Closing the Human Reward Circuit:

Hyper-personalized AI-generated experiences (visuals, audio, haptics, etc.) will allow you to feel what you want to feel (relaxed, enthusiastic, confident, sexually desired, etc.), when you want to feel it, and will draw more and more people to live almost entirely in virtual worlds. (see: Husk)

To understand the transhuman conclusion of this article, we’ll have to start at the beginning with the technologies that are already “hijacking” human reward systems:

2. Addictive Stimuli: Today and Tomorrow

The Australian jewel beetle came close to being endangered because males were trying to mate with discarded brown beer bottles instead of actual female beetles. The bottles closely matched the color and texture of a female jewel beetle, and to the male beetle, a beer bottle was a supernormal stimulus: a stimulus that elicits a response more potent than the stimulus for which the response mechanism evolved.

Today, we humans are surrounded by supernormal stimuli. For the purposes of this article, we’ll leave out things like junk food and focus exclusively on digital technologies.

Today’s Addictive Digital Stimuli:

- Reward circuit: Sexual desire.

- Product: Video pornography.

- Experience: A 13-year-old boy today can see more sexual acts with more women (and more attractive and varied women) than his ancestors might have seen in 10 lifetimes.

- Addiction stats: According to the Institute for Family Studies, 27 percent of men aged 30 to 49 reported in a poll that they watched pornography on a weekly basis (by comparison, 44 percent of women in a similar age group reported that they had never watched pornography in their lives). In the same survey, men who reported having watched pornography in the previous 24 hours also reported drastically higher rates of loneliness and insecurity. According to other research, 17 percent of pornography users use it compulsively.

- Reward circuit: Novelty, social approval.

- Product: Social media.

- Experience: We didn’t evolve to have a running dashboard highlighting our “cool-ness” and the cool-ness of a thousand of our loose social connections.

- Addiction stats: According to Common Sense Media, 62% of US teens use social media every day, spending an average of an hour and 27 minutes daily. During the pandemic, social media use grew 17% among tweens between 2019 and 2021, the sharpest increase in any age demographic since record-keeping began. According to researchers like Jonathan Haidt, there is a direct correlation between teenage social media use and an increase in mood disorders since 2009.

- Reward circuit: Achievement.

- Product: Video Games.

- Experience: People worldwide (especially young men) are increasingly channeling their need for achievement into virtual places of competition, void of physical or economic risk — and void of any opportunity to advance a meaningful career.

- Addiction stats: According to researchers from Lund University, 7-12% of teens experience disordered behavior with gaming, with a direct correlation to exacerbating existing mental health issues, including anxiety and depression.

While there is no discrete set of neurons we could tie to “achievement” or “novelty,” these categories will suffice for our purposes. Their pull is evident from the fact that a significant percentage of the American population is addicted to one or more of the three supernormal stimuli above.

Tomorrow’s Addictive Digital Stimuli:

- Reward circuit: Sexual desire.

- Experience: AI-generated VR pornography.

- Description:

- Imagine a romantic “partner” whose physical form, voice tone, and personality change in real time to whatever suits your arousal.

- Imagine a platform that allows people to act out any fantasy they desire, including those which are physically impossible in the “real” world or downright illegal. Whether or not such content is moral in depiction or sourcing is beside the point of this article. Still, for our purposes here, it’s important to point out that such content would be popular and appealing – especially if it could be consumed anonymously.

- It is somewhat evident that without haptic technology — some of which is already in development — the physical act of sex will be limited in pure VR. But arousal and fulfillment are more a matter of the mind than the loins.

- Reward circuit: Novelty, entertainment.

- Experience: AI-generated VR video media

- Description:

- Imagine a version of Netflix or TikTok where you can “sample” almost any kind of experience possible — or where optimally entertaining media (humorous skits, attractive women, horror jump-scares, etc.) are rotated before you, immersing you in a vastly more entertaining experience than anything the “real” world could possibly provide.

- In our interview, DALL-E 2 creator Aditya Ramesh voiced his fear of an AI-generated, TikTok-like platform optimized for engagement and time in the app. I believe that the creation of such a platform is inevitable.

- Reward circuit: Achievement.

- Experience: AI-generated virtual worlds.

- Description:

- Imagine a virtual world where you could achieve anything, from becoming President of the United States to creating a new field of science. Imagine an ecosystem of AI-generated “persons” who laud and reward your activities, providing praise and accolades in a way that feels unbelievably rich and genuine, beyond any fulfillment or affirmation you’ve experienced in the “real” world.

- Imagine every experience being tailored to keep you in a flow state, balanced between challenge and competence, optimally engaging and pushing your skills, and leaving you feeling more capable and self-confident every single time you engage with the platform. You may not be building a real talent or achieving anything in the aforementioned “real” world. But if you are more fulfilled in that VR space than anywhere in the real world, would you care?

The reward circuits above are those we’ve already addressed in the present. What about those human needs that today’s technologies can’t touch?

- Reward circuit: Community.

- Experience: AI-generated VR “personalities” calibrated to our community and relationship needs.

- Description:

- Imagine an AI-generated group of “friends” with unique personalities, backstories, skills, and experience. Unlike real friends, however, they have no genuine motives of their own other than enriching your experience as a user (entertaining you, educating you, or helping you with your goals or self-development).

- Instead of being limited to what you can do in the physical world with “real” friends, your digital “friends” can slay dragons, establish trillion-dollar enterprises, make scientific discoveries, and more — all in the course of a few immersive VR hours.

- Reward circuit: Romantic love.

- Experience: AI-generated VR “personalities” calibrated to our romantic needs.

- Description:

- Imagine this personality being the epitome of a supportive partner, providing deep emotional support, fun, conversation, and more, all without any agenda and with no risk of infidelity or a messy breakup or divorce.

- Imagine combining this with the powers of AI-generated VR pornography.

Given what Mark Zuckerberg has advertised for the “metaverse,” it’s not hard to imagine these possibilities in the very near future.

Two key differences will make tomorrow’s addictive virtual stimuli much more potent than today’s:

- First, they will cover a broader range of human needs and reward circuits.

- Second, they will fulfill those reward circuits much more thoroughly and more reliably (see the image above: Closing the Human Reward Circuit).

Today, Japan has the hikikomori phenomenon: people (almost always men) who stay in their parents’ homes well into adulthood and escape into online games and porn. Predictably, hikikomori are often depressed — bereft of connection or love.

Yet people who “virtually escape” in the future will have a far richer and more immersive experience, one that may transcend not only what the “real” world can provide but also the very human circuitry that results in depression.

The world of bits will be hyper-customized to the individual user in ways the world of atoms never can.

Today, 19 times out of 20, scratching the itch of “entertainment” implies something digital (Netflix, YouTube, etc.). It’s simply so much more convenient, and sometimes rewarding, than the alternatives — like seeing a play at the theater, taking a drive with the radio blasting, or whatever else people used to do before the internet.

While we see niche sectors and economies built around nostalgic “analog” technologies – the resurgence of vinyl records in the music industry comes to mind – there’s no denying that, for the mainstream, the pull of the “audience of one” will be too strong to resist.

Tomorrow, 19 times out of 20, scratching almost any reward circuit itch (from relaxation to entertainment to education and beyond) will imply something hyper-personalized, conjured precisely for you, the individual user. Because each person’s reward circuits are different, an isolated, personal experience will be the norm.

Some might argue that existing in this immersive AI-generated world of bits is beneficial. You and I today spend most of our time online, typing on a QWERTY keyboard and speaking on Zoom calls – with little ability to grow our own food or write in cursive.

But cursive skills aren’t needed to be productive in today’s world. Typing speed and the ability to schedule online meetings are.

An increasingly wide swath of skills may become counterproductive to develop. In the 1800s, having a strong back to drive a plough or load hay bales was pretty darn useful. Today it’s patently not, and generative AI is quickly shifting skillsets again. In our interview with Tom Davenport, which focused mostly on the business implications of generative AI, he brought up some of the currently shifting dynamics of skill and value in the workplace:

People are going to do a lot more editing and less writing of first drafts… in journalism, editor skills are different than putting together the news article. With generative AI this will be more different; we’ll have to check for accuracy – at least for now, these systems aren’t all that factually accurate.

So is it (the work of editing) boring or commonplace? We might have to liven it up some. I think the age of the essay in school, in work, in publishing… is pretty much over.

In our interview with Dr. Francesca Rossi, esteemed IBM Fellow and President of the Association for the Advancement of Artificial Intelligence (AAAI), she raised an important question: Is there a line where generative AI automates so much that it weakens us in substantial ways and makes life fundamentally worse?

In her own words:

I wouldn’t want humans to be de-skilled unless it was a conscious decision. For example, compared to the previous generations, I might be worse at doing complex arithmetical operations.

Today, we don’t do them with our hands, or with our mind. So clearly there are some things that we don’t do now – but we still have to understand where de-skilling is a risk, and try to avoid it.

While it’s clear that norms and skills will change (your grandparents didn’t need to know how to use Slack or order an Uber), many people question whether even those new skills might separate us from some core elements of our agency.

3. Reward Circuits: Steering Our Future

Ultimately, what we seek as a society is the same as what we seek as individuals: To survive and persist. A civilization where most human beings fulfill their drives by spending all their waking hours in isolated, pleasure-optimizing virtual worlds is unlikely to be a civilization that can sustain itself.

The circumstances look beyond our control: We almost certainly can’t stop generative AI experiences from becoming immersive and ubiquitous, and powerless pleasure may be the gravitational pull of AI-augmented VR. However, what we can do is find ways to wield the technology to allow us to survive and persist even better than we could before.

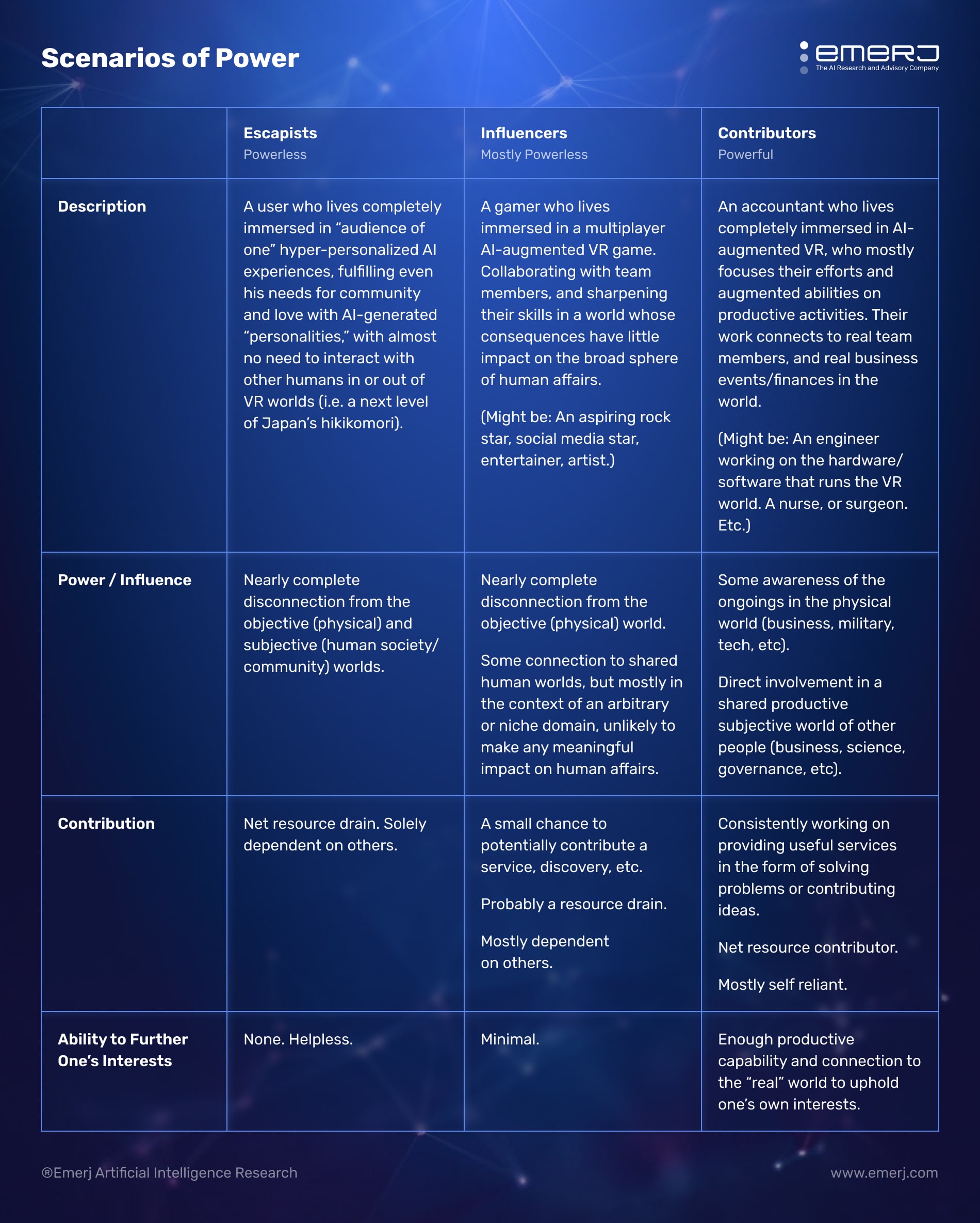

Scenarios of Power/Agency

I’m operating on the assumption that a nation or civilization that can further its own interests is composed of citizens who can do the same.

Not only do citizens who lock themselves away in AI-generated pleasure experiences contribute nothing economically, scientifically, or otherwise — they cannot further their own interests. They are utterly beholden to those who manage the computational substrate (hardware and software) they have selected as the means for their escape from reality.

We might consider a kind of gradient for how citizens use generative AI worlds — from a condition of not contributing or wielding any power, to a position of productive contribution and actually wielding power.

Any future society that exists primarily in virtual worlds (which I’m arguing is inevitable) will have some set of individuals or groups who will be “Substrate Controllers.” These individuals or groups will wield the most power because they control the computing substrate that houses the experience of most people in the virtual world. Analyzing this class of persons will have to be a topic for another essay (see: Substrate Monopoly).

Already, most developed societies are drifting in the direction of fewer and fewer Contributors.

A 2017 poll of a thousand UK children aged 6-17 found that over 50% aspired to be a blogger or YouTube star as their primary career path — and Danish toy maker Lego recently found that around one-third of children aged 8-12 aspired to be YouTube stars. With the tremendous popularity of TikTok, we should expect those numbers to continue rising. Influencers, it seems, will proliferate immensely in the near future.

Japan’s hikikomori fit the mold of the Escapists today. Even in the Western world, there is reason to believe that video games are contributing to a substantial loss in workforce participation among younger men.

The complex set of fulfilling experiences gained by building a business, winning a political race, or making a scientific breakthrough (including the accolades and appreciation of AI-generated “others”) may soon be available with no real work or contribution needed. Other simple pleasures (entertainment, humor, sex) will be magnified and calibrated to be more sustaining within AI interfaces.

The future will have much more robust and complete ways to fulfill human needs — potentially driving up the number of Escapists.

That said, there may be some natural counter-balancing forces that encourage companies to bear the agency of their users in mind. In our full interview on this topic, WSJ Bestselling author Nir Eyal explains the “selfish” capitalist motive behind preventing the negative impacts of overly habit-forming products:

The traditional news companies are not going to put up a banner that says ‘You’ve been watching way too much news you should spend time with your family.’ That’s never happened. But if you look at Apple or Google – they have features that help you use the product less…

…now why do companies do that – because of political pressures? Absolutely not. Because they’re altruistic? You’re dreaming. They did it because these types of features make the product better.

Seat belts appeared in cars 17 years before regulation said that they had to. Why did they do it? Because guess what – people want to drive cars that keep them alive. And so that’s exactly what’s happening with our technology.

4. Altering Our Reward Systems

Due for an Upgrade:

Humans used domesticated horses for transportation for at least 5,000 years.

Eventually, this gave way to boats, trains, and automobiles. The undeniable advancements in trade/profit, personal productivity, and military power of these new modes of transportation made adoption compulsory. A person or nation on horseback would be left behind.

Humans have expanded their power and productivity by working with their bungled, naturally evolved reward systems for a few hundred thousand years.

Eventually, this will give way to working around our reward systems, or fundamentally altering them. There will be undeniable advantages for individuals with augmented or enhanced motivations or drives, and nations that can produce such citizens in abundance would likely be much stronger.

Most of this essay has dealt with the near-term considerations of working with our existing reward system — but eventually, this reward system will have to evolve, likely with humanity steering those changes.

We started off this essay with the example of the Australian jewel beetle, whose reward circuits put it at risk by enticing males to mate continuously with brown beer bottles. We’re likely to invent our own beer bottles, and I would argue that, sooner rather than later, humanity should shift towards editing its outdated reward system, not simply coping with it.

It might easily be argued that:

- Given the rapid pace of change in our human conditions, we are “due for an upgrade” — in order to encourage us to avoid the unbounded number of future distractions we might face and to work better with technology in a noisier, more addictive future.

- Given the arms races still going on in technology, mainly between the West and China, imbuing society with superhuman drives for work and focus (with fewer drives geared towards mostly useless evolutionary leftovers) would be undeniably advantageous.

In our recent AI Futures interview series, we spoke with the United Nations Head of Data and Emerging Technologies, Lambert Hogenhout:

How do we, as humans, keep improving things for ourselves, improving our own lives, our own bodies, our own physical health and our interaction with computers?

And brain-computer interfaces are part of that course.

I think what AI is doing for biomedical sciences at the moment is also very exciting and important. And it can help people, you know, stay healthier, live longer, and root out diseases, etc. But that means we’re also going to be dependent on AI. So it’s not just that AI is used for some research and fixes it, no. We’re going to go to a stage where we have AI, personalized AI, to monitor our health constantly, and we depend on them to stay healthy.

So I think it’s going to be a very intimate connection between us and the AIs, and I think that’s a more interesting question than whether the AI will think for itself.

The Objectives of Cognitive Enhancement

We can’t even locate memories or understand our connectome very well — how can we expect to “untangle” one drive and implant another one? I do not believe that cognitive enhancement is likely in the next five years.

That said, in light of the progress of organizations like BrainGate and the efforts behind firms like Kernel and Neuralink, it seems likely that it will be viable, and possibly commonplace, in the coming two decades. Meadow voles, which are normally promiscuous, have been induced to form monogamous pair bonds by changing the expression of a single gene. Humans likely won’t be so simple, but the example is still telling.

China has been much more overt about genetically tinkering with human embryos and using brain-computer interfaces to augment and improve human performance. The West cannot rest on its laurels.

Cognitive enhancement can imply many things — from memory augmentation, to the addition of new or heightened senses, to so-called “moral enhancement” and beyond. For the sake of this article, we’ll focus on adjusting human drives and motivations.

There might be a few reasons to alter our reward circuits:

- Maintain consistent bliss:

- Disconnecting well-being from the fulfillment of goals. Human beings do everything they do in order to pursue pleasure and avoid pain. Once humans have entered virtual worlds and still aren’t happy, they’ll realize that the mental hardware itself is at fault, and they may demand well-being as a fundamental human right.

- That said, a society of continuously fulfilled people may become complacent, unable to uphold their own interests in the world. Given human hardware today, bliss generally leads to a lack of motivation. For this reason, human drives might be altered to both increase sustained motivation for work while improving and maintaining a very high baseline of happiness.

- Removing or re-routing drives:

- Some humans may prefer not to feel jealousy or envy at all and may wish to opt out entirely of some of the default drives with which they were born.

- Some humans may prefer not to experience sexual urges and instead be able to route that psychological arousal to pursuing other goals — or more generally, towards expanding their skills.

- Some humans may prefer to remove or re-route drives related to human relationships, deriving the same rich fulfillment that humans naturally receive from time with loved ones, but instead from some new activity — whether it be video games, scientific discovery, or whatever else.

- Militaries may wish for soldiers who rarely get bored and can stay alert for long periods or who place group loyalty and valor over their own safety, etc. The US military, in particular, has already made direct investments in such technology.

- Adding additional drives:

- In order to escape the “audience of one” ecosystem of virtual escapism, humans may want to develop abstract drives related to control and understanding — forces that might consistently bend them away from virtual dead-ends and towards real wielding of their power and development of their capabilities.

- At present, we could be seen as slaves to avoiding pain and pursuing pleasure, but there might be new continuums of sentient experience beyond pain and pleasure that would permit us more autonomy in our actions and choices. Such a discovery could open up more possibilities for how to survive and thrive in the future.

Short of rewiring our circuitry and overriding hundreds of thousands of years of evolution, however, our next best option is social reform.

5. Policy Considerations

Will Durant was right: civilizations are born stoic and die epicurean, often at the hand of a stoic rival. Today we’re up against the most potent epicurean technologies our species has ever faced.

The purposes of tech policy can be almost endless — from improving equity to reducing suffering to enhancing the welfare of specific underprivileged groups, etc. For the sake of this essay, however, we’ll take the survival and persistence of civilization as the objective of our policymaking.

We should not be thinking about fighting our reward circuitry but using it, or rewiring it, to ensure that more of society can contribute meaningfully to their individual and collective power and avoid the gravitational pull of hyper-personalized reward fulfillment in their own individual worlds.

Scenarios to Explore

As far as I can tell — a society will be better off when its individual members are contributing and cooperating.

Some conflict is inevitable and even productive. Yet a system rigged towards ongoing conflict is not only dangerous, but probably vastly less productive: I can only afford the time to write this article because I have running water, and nobody is actively trying to kill me.

With that rough premise in mind, I’ll aim to lay out potentially preferable and less preferable future scenarios that might be worth considering for policymakers and business leaders.

Considerations for Productivity:

- Scenarios that Increase Productivity

- A rich career ecosystem for AI-augmented VR tools exists, not merely for leisure activities but for every possible productive kind of work (see: Ambitious AI).

- Technology companies compete actively to show that their products are healthy and promote users’ well-being and productive goals (not merely entertainment and distraction). As of 2023, Apple offers features that help iPhone users limit their screen time. In the future, people may want to adopt technologies that actively promise to serve the end user’s interests.

- Scenarios that Decrease Productivity

- Most people live in virtual worlds so appealing and personalized that human relationships are no longer interesting or even relevant.

- Many ambitious people prefer to satisfy their drive for personal accomplishment and social accolades in rich achievement-related simulations. They can discover scientific laws, become James Bond, take their startup public, etc., all in the same day, with simulated people praising and respecting their actions.

Considerations for Cooperation:

- Sustained Alignment

- A platform that is not permeable to adversarial forces (unlike today’s social media platforms, which are blatantly open to influence campaigns from China and Russia).

- Many aspects of human collaboration are bolstered by AI and VR — not only preventing people from escaping wholly into isolated, atomized virtual worlds, but encouraging regular human interaction for work tasks, entertainment, etc.

- Decreased Alignment

- Virtual reality platforms create incentives for dissent, outrage, and conflict (today’s social media platforms are argued to have already fallen prey to this dynamic).

- Directly adversarial powers (China) control the virtual ecosystems, bending the user’s experience towards discord or unproductive habits (concerns that already exist with TikTok).

- Escapists may be oppressed or abused by Substrate Controllers who can simply unplug them or milk them for data, because these Escapists have no way to defend themselves. Just as today’s poor are inundated with advertising, tomorrow’s poor may be forced to play games or perform activities that harvest data to train algorithms.

Potential Regulatory Steps:

Even if we could agree on which futures were “preferable” (we cannot; at best, we can approximate), the devil is in the details of how policy might actually bring about those futures.

The world is rife with policy measures that did vastly more harm than good, or that encouraged the exact opposite of what was intended.

I don’t see policy as the only, or the most important, lever to change the future — but it will be one of them.

Below are a handful of ideas to consider in encouraging the productivity and health of societies as augmented reality technologies and other digital superstimuli become more popular:

- Ban or Tax Purely Escapist Tech — Isolating virtual experiences (completely lotus-eater-like Escapist tech) are taxed heavily, banned, or subjected to time limits, in the same way that drugs and gambling are regulated today.

- Fund Productive AI/VR Tech — Governments might provide grants and funding for productivity-encouraging AI and VR applications. Just as the US DoD invests in AI and brain-computer interface technology to improve its military strength, it might invest in immersive technologies that will keep private citizens productive — strengthening the US’s relative position of technology leadership.

- Develop New National / International Governing Bodies — Just as the US FDA and FTC ensure that consumers aren’t misled into eating or investing in something that will harm them, new bodies might emerge with a focus on improving citizen health and well-being with AI and VR tech — while discouraging escapism.

- Moratorium on Cognitive Enhancement — Intergovernmental organizations might create an international steering and transparency committee (an idea we’ve explored in this longer AI Power article) to oversee and approve projects related to altering human mental function and reward systems. It seems somewhat obvious that humans have a hard time staving off conflict already and that wildly diverging human “variants” with different drives and values will be even more prone to conflict.

Policymakers may have little chance to determine what technologies become popular — and even innovators will find themselves riding unpredictable waves of new technologies and breakthroughs.

Nonetheless, there is a chance to ensure that these technologies add to the net strength (their ability to survive and persist) of individuals and the state.

We are at a point in the stream of evolutionary life where new challenges to our reward systems arrive. Namely: Hyper-personalized experiences that fulfill our desires almost entirely — while potentially drastically diminishing our strength or power as individuals and as a society.

Many people will get caught up in eddies on the side of the stream of life, spinning in AI-generated pleasurable experiences, but wholly at the mercy of those controlling the technologies they live in — and almost entirely unproductive for the rest of society.

As individuals, innovators, and policymakers, we must volitionally move towards futures that involve healthier and happier human lives, that keep us grounded in objective and subjective reality, and that maintain our strength. This may require us to rewire our reward systems and drives, editing the brain itself to be better equipped for the fast-moving, infinitely distracting technological future we’re entering.

It behooves us to stay in the stream of life and brace ourselves to overcome the eddies ahead.
