The Walt Disney Company began in 1923 as the Disney Brothers Cartoon Studio. By 1940, Walt Disney Productions issued its first stock. Today, the multinational family entertainment and media conglomerate is one of the Big 6 media companies.

As of 2021, Disney trades on the NYSE with a market cap that exceeds $300 billion. For the fiscal year ended October 3, 2020, Disney reported revenues of $65.3 billion, according to its Fiscal Year 2020 10-K.

Twelve years ago, Disney established DisneyResearch|Studios, its Swiss-based research arm, which applies its researchers' expertise in machine learning, artificial intelligence, and visual computing to the company's movies and media content. Disney also operates the Disney Accelerator program, which supports venture-backed, growth-stage startups with capital, workspace, and guidance from entertainment and technology leaders, including top Disney executives.

In this article, we will look at how Disney has explored AI applications for its business and industry through two use cases:

- Creating Clouds that Look Real — Disney uses machine learning and deep learning to create realistic clouds that model directional light and are influenced by the sun and sky around them.

- Analyzing and Acting upon Audience Reactions — Disney uses computer vision and machine learning to determine, predict, and influence an audience’s reaction to target content.

We will begin by examining how Disney has turned to deep scattering, which uses neural networks to add realistic, lifelike clouds to its animated films.

Creating Clouds that Look Real

Creating realistic, immersive 3D worlds that convince and entertain today’s audiences pushes the limits of technology and the imagination. Simulating the effect of light on these virtual environments—a critical process for making them realistic—is a massive technical challenge.

Light transport, part of the larger production rendering process, takes time and adds to the cost of films that feature these 3D worlds. When creating clouds for digital scenes, for example, the “characteristic silver lining and the ‘whiteness’ of the inner body” are difficult to reproduce when relying on Monte Carlo simulation or diffusion theory alone, writes a team of Disney Research/ETH Zürich authors in a 2017 publication.
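
To see why Monte Carlo light transport is expensive, consider its simplest ingredient: estimating how much light passes straight through a uniform block of cloud without being scattered or absorbed. The sketch below is our own illustration, not Disney's code; the homogeneous medium, the extinction coefficient `sigma_t`, and the function names are assumptions. It samples random free-flight distances and compares the estimate with the exact Beer-Lambert value. Its standard error falls only as 1/sqrt(N), so cutting the noise tenfold takes a hundred times more samples, and real clouds add many scattering bounces on top of this.

```python
import math
import random

def mc_transmittance(sigma_t, distance, n_samples, seed=0):
    """Hypothetical helper: Monte Carlo estimate of the fraction of light that
    crosses a homogeneous slab of thickness `distance` without interacting."""
    rng = random.Random(seed)
    survived = 0
    for _ in range(n_samples):
        # Free-flight distance to the first interaction is exponential with rate sigma_t.
        flight = rng.expovariate(sigma_t)
        if flight > distance:
            survived += 1
    estimate = survived / n_samples
    # Standard error of a Bernoulli mean: shrinks only as 1 / sqrt(n_samples).
    std_err = math.sqrt(estimate * (1.0 - estimate) / n_samples)
    return estimate, std_err

def exact_transmittance(sigma_t, distance):
    # Beer-Lambert law for a homogeneous medium.
    return math.exp(-sigma_t * distance)

if __name__ == "__main__":
    exact = exact_transmittance(2.0, 1.0)
    for n in (100, 10_000, 100_000):
        est, err = mc_transmittance(sigma_t=2.0, distance=1.0, n_samples=n)
        print(f"N={n:>7}: estimate={est:.4f} +/- {err:.4f} (exact {exact:.4f})")
```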

Seeking faster, more resource-efficient methods to create these clouds has clear business value, because such technologies allow both human and computational resources to be deployed more efficiently.

In their 2017 paper, the Disney/ETH Zürich research team points out that rendering a realistic sunlit cloud can take some 30 hours using traditional light-tracing methods. When neural networks instead predict how the sun will light the cloud, the new deep scattering process can produce the result in a matter of minutes.

To accomplish this, Disney Research Studios brings machine learning to production rendering and light transport. To speed up these stages, the researchers set out to develop new machine learning and deep learning algorithms that could provide:

- Path guiding for efficient simulation of light paths

- Production-ready denoising

- Deep scattering in atmospheric clouds
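
Path guiding, the first item in that list, means learning which directions have carried the most light on earlier paths and sending new paths that way more often. The toy sketch below works on a single angle instead of full 3D directions and uses a fixed histogram; the class name, bin count, and 50/50 uniform mix are assumptions for illustration, and production path guiding uses far richer spatial and directional data structures. Mixing in uniform sampling keeps every direction's probability above zero, which a Monte Carlo estimator needs in order to stay unbiased.

```python
import math
import random

class DirectionalGuide:
    """Hypothetical toy path-guiding distribution over one angle in [0, 2*pi).

    Radiance observed along earlier light paths is accumulated in a histogram;
    new directions are drawn from that histogram, mixed with uniform sampling
    so that no direction ever gets zero probability.
    """

    def __init__(self, bins=16, uniform_mix=0.5):
        self.bins = bins
        self.bin_width = 2.0 * math.pi / bins
        self.weights = [0.0] * bins
        self.uniform_mix = uniform_mix  # share of samples drawn uniformly

    def _bin(self, angle):
        return int((angle % (2.0 * math.pi)) / self.bin_width) % self.bins

    def record(self, angle, radiance):
        # Learn from a finished path: more radiance from a direction means
        # that direction will be sampled more often later.
        self.weights[self._bin(angle)] += radiance

    def pdf(self, angle):
        # Probability density of sample() returning this angle.
        uniform = 1.0 / (2.0 * math.pi)
        total = sum(self.weights)
        if total == 0.0:
            return uniform
        guided = self.weights[self._bin(angle)] / (total * self.bin_width)
        return self.uniform_mix * uniform + (1.0 - self.uniform_mix) * guided

    def sample(self, rng):
        if sum(self.weights) == 0.0 or rng.random() < self.uniform_mix:
            return rng.uniform(0.0, 2.0 * math.pi)
        # Choose a bin in proportion to its learned weight, then a point inside it.
        i = rng.choices(range(self.bins), weights=self.weights)[0]
        return (i + rng.random()) * self.bin_width

if __name__ == "__main__":
    rng = random.Random(0)
    guide = DirectionalGuide(bins=8)
    guide.record(0.3, 5.0)  # e.g. a bright sun direction found by earlier paths
    d = guide.sample(rng)
    print(d, guide.pdf(d))  # a renderer would weight the path's contribution by 1 / pdf
```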

To train their algorithms, the researchers studied “the spatial and directional distribution of radiant flux” across dozens of sample cloud formations. With that information, they claim, clouds in new scenes can then be rendered by sampling the cloud's geometry and density around each point and predicting realistic lighting and shading from it, a process they call deep scattering.
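
As we understand the published approach, the network looks at the cloud's density around a shading point at several scales and predicts the radiance arriving there. The sketch below shows only the shape of that pipeline under our own assumptions: the analytic `cloud_density` field, the 3x3x3 stencil with three levels, appending the light direction as raw inputs, and the untrained `TinyMLP` are all hypothetical simplifications, not Disney's architecture.

```python
import math
import random

def cloud_density(x, y, z):
    # Hypothetical cloud: density falls off linearly from the center of a unit sphere.
    return max(0.0, 1.0 - math.sqrt(x * x + y * y + z * z))

def stencil_descriptor(point, light_dir, levels=3, base_step=0.05):
    """Sample density on a 3x3x3 grid around `point`, doubling the grid spacing
    at each level so later levels cover more of the cloud, then append the
    light direction (an assumed input encoding)."""
    px, py, pz = point
    features = []
    for level in range(levels):
        step = base_step * 2 ** level
        for dx in (-1, 0, 1):
            for dy in (-1, 0, 1):
                for dz in (-1, 0, 1):
                    features.append(
                        cloud_density(px + dx * step, py + dy * step, pz + dz * step))
    features.extend(light_dir)
    return features

class TinyMLP:
    """Fully connected network with ReLU hidden layers. Weights are random
    placeholders; a real system would train them on path-traced radiance."""

    def __init__(self, sizes, seed=0):
        rng = random.Random(seed)
        self.layers = []
        for n_in, n_out in zip(sizes[:-1], sizes[1:]):
            scale = math.sqrt(2.0 / n_in)
            weights = [[rng.gauss(0.0, scale) for _ in range(n_in)] for _ in range(n_out)]
            self.layers.append((weights, [0.0] * n_out))

    def forward(self, x):
        for i, (weights, bias) in enumerate(self.layers):
            x = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]
            if i < len(self.layers) - 1:
                x = [max(0.0, v) for v in x]  # ReLU on hidden layers only
        # Softplus keeps the predicted radiance non-negative.
        return [v if v > 30.0 else math.log1p(math.exp(v)) for v in x]

if __name__ == "__main__":
    desc = stencil_descriptor((0.2, 0.0, 0.1), light_dir=(0.0, 0.0, 1.0))
    net = TinyMLP([len(desc), 32, 32, 1])
    print(len(desc), net.forward(desc))  # one radiance value per query point
```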


The deep neural networks then output the predicted radiance, and, according to the research team, these predictions improve over time. Disney Research claims the deep learning technology can be used to create clouds in a variety of situations, including:

- Modeling directional light

- Modeling sun and sky

- Sunrise/sunset scenarios
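
Much of why sun position matters comes from how cloud droplets scatter light: strongly forward, which is also what produces the bright silver lining when the sun sits behind a cloud. A common textbook model for this is the Henyey-Greenstein phase function; we use it here as a stand-in, since the sources do not say which phase function Disney's renderers use. The asymmetry parameter `g = 0.85` is an assumed value of the kind often quoted for cloud droplets.

```python
import math

def henyey_greenstein(cos_theta, g):
    """Henyey-Greenstein phase function: density (per steradian) of light
    scattered at angle theta from its incoming direction.
    g in (-1, 1); g > 0 favors forward scattering."""
    denom = 1.0 + g * g - 2.0 * g * cos_theta
    return (1.0 - g * g) / (4.0 * math.pi * denom ** 1.5)

def integrate_over_sphere(g, steps=20000):
    # Midpoint rule over theta; integrating over phi contributes a factor of 2*pi.
    total = 0.0
    d_theta = math.pi / steps
    for i in range(steps):
        theta = (i + 0.5) * d_theta
        total += henyey_greenstein(math.cos(theta), g) * 2.0 * math.pi * math.sin(theta) * d_theta
    return total

if __name__ == "__main__":
    g = 0.85  # assumed, strongly forward-scattering
    print("straight ahead (sun behind the cloud):", henyey_greenstein(1.0, g))
    print("straight back (sun behind the viewer):", henyey_greenstein(-1.0, g))
    print("integral over the sphere:", integrate_over_sphere(g))
```

The integral check confirms the function is a proper probability density, so a renderer can sample it without adding or losing energy.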

Our research was unable to locate any financial information specific to this technology. However, Disney Research Studios says its researchers help deploy tools into production that support Pixar and Disney Animation in RenderMan and Hyperion, the studios' respective proprietary 3D rendering solutions.

Analyzing and Acting upon Audience Reactions

To succeed in entertainment, you have to know your audience—sometimes even better than the audience knows itself. In the past, entertainment companies like Disney had to sample their intended population using focus groups and ask them what they thought about target content as they tested market fit.

Data scientists then had to correct for sampling error when extrapolating their findings to estimate the reaction they could expect from larger audiences. This process cost Disney time, money, and resources, and it left uncertainty around confidence levels.
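
The sampling-error problem is easy to quantify. As a textbook illustration (not Disney's methodology, which the sources do not describe), the normal-approximation interval below estimates the share of a full audience that would react positively, given a focus-group result. The margin of error shrinks only with the square root of the group size, so quadrupling the group merely halves the margin. The function name and the example counts are assumptions.

```python
import math

def proportion_confidence_interval(positive, n, z=1.96):
    """Hypothetical helper: normal-approximation (Wald) interval for the share
    of an audience that reacts positively, given `positive` out of `n`
    focus-group members. z = 1.96 corresponds to roughly 95% confidence."""
    if n <= 0 or not 0 <= positive <= n:
        raise ValueError("need n > 0 and 0 <= positive <= n")
    p = positive / n
    margin = z * math.sqrt(p * (1.0 - p) / n)
    return max(0.0, p - margin), min(1.0, p + margin)

if __name__ == "__main__":
    # The same 60% positive share, measured on groups of different sizes.
    for positive, n in ((15, 25), (60, 100), (240, 400)):
        low, high = proportion_confidence_interval(positive, n)
        print(f"n={n:>3}: {low:.3f} to {high:.3f}")
```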

Today, Disney is developing tools that can bring accuracy, confidence, and efficiency to the process. Disney is creating technologies that use computer vision to observe an audience and machine learning to gauge their reaction to content, based on visual cues like:

- Facial expressions

- Body movements

- Eye movements

Disney is exploring Affective AI, an emerging technology designed to detect and analyze human emotional states. With Affective AI, Disney could model and predict audience reactions and then use those predictions to shape a character or plotline, or even to make content decisions on a regional or demographic basis.

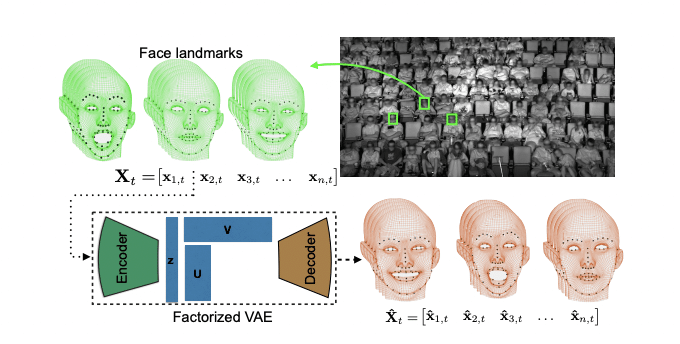

In a recent research paper, researchers from Disney Research, Queensland University of Technology, and Carnegie Mellon University describe using infrared cameras to record the subtle movements of moviegoers to determine their reactions to content. To validate those measurements, the researchers say, they compared them with ratings recorded on commercial sites such as rottentomatoes.com.
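
The article does not say exactly how the camera-based measurements were compared with the ratings. One standard way to check whether a measured signal tracks an external score is a correlation coefficient; the sketch below computes Pearson's r, and the per-film numbers in its usage are invented placeholders, not data from the study.

```python
import math

def pearson(xs, ys):
    """Pearson correlation between two equal-length sequences:
    +1 means they rise together, -1 means one falls as the other rises."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    if sx == 0.0 or sy == 0.0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / (sx * sy)

if __name__ == "__main__":
    # Hypothetical per-film values: mean measured engagement vs. a review score.
    engagement = [0.42, 0.55, 0.31, 0.68, 0.50]
    review_score = [71, 80, 58, 92, 77]
    print(pearson(engagement, review_score))
```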

In another application of the technology, described in a 2017 phys.org article about Disney Research's work, researchers used four infrared cameras in a 400-seat theater to observe the faces of audience members.

After gathering a dataset of 16 million “facial landmarks” from more than 3,000 individuals, the team applied deep learning models that could predict, with high accuracy, the facial expressions an audience member would show over an entire movie after watching them for just a few moments.
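
The study's deep models are not reproduced here. To illustrate the underlying idea, predicting a whole viewing from a short observation, the sketch below swaps in a much simpler linear matrix factorization: each frame has a pattern vector shared across viewers (`time_factors`, assumed already fitted on other audience members), each viewer has a small weight vector `u` fitted from the first few frames by least squares, and their product predicts the rest of the movie. The single expression-intensity value per frame and all function names are our assumptions.

```python
def solve_linear(a, b):
    """Solve the square system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("singular system")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def fit_viewer(time_factors, observed, ridge=1e-6):
    """Hypothetical helper: least-squares fit of one viewer's latent weights u
    from the first len(observed) frames, under the model x_t ~= time_factors[t] . u."""
    k = len(time_factors[0])
    rows = time_factors[: len(observed)]
    # Normal equations (V^T V + ridge * I) u = V^T x; the small ridge term keeps
    # the system solvable when only a few frames have been observed.
    ata = [[sum(r[i] * r[j] for r in rows) + (ridge if i == j else 0.0) for j in range(k)]
           for i in range(k)]
    atb = [sum(r[i] * x for r, x in zip(rows, observed)) for i in range(k)]
    return solve_linear(ata, atb)

def predict_rest(time_factors, u):
    # Predicted expression intensity for every frame of the movie.
    return [sum(v * w for v, w in zip(row, u)) for row in time_factors]

if __name__ == "__main__":
    V = [[1.0, 0.0], [0.5, 1.0], [0.0, 2.0], [1.0, 1.0], [2.0, -1.0]]  # hypothetical factors
    u = fit_viewer(V, observed=[0.8, 0.1])  # only the first two frames were seen
    print(predict_rest(V, u))
```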

Disney hopes that by gathering this data and gaining confidence in its conclusions, it can determine which emotions its content evokes and to what extent. Through its Audience Understanding effort, Disney claims, the company can test whether its content produces the desired effect and even use machine learning to predict which emotions the content will evoke, “moment-to-moment.”
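
A moment-to-moment view of emotional response can be pictured as a per-frame curve. As a hypothetical sketch (the function names, the per-frame intensity scores, and the smoothing scheme are our assumptions, not Disney's pipeline), the code below averages viewers' reaction scores frame by frame, smooths the result with a moving average of `half_width` frames on each side, and reports the strongest moment.

```python
def moment_to_moment(reactions, half_width=1):
    """Average per-frame reaction intensity across viewers (one list per viewer,
    all the same length), then smooth with a moving average that spans
    `half_width` frames on each side, truncated at the start and end."""
    if not reactions or half_width < 0:
        raise ValueError("need at least one viewer and half_width >= 0")
    n_frames = len(reactions[0])
    mean = [sum(v[t] for v in reactions) / len(reactions) for t in range(n_frames)]
    smoothed = []
    for t in range(n_frames):
        lo, hi = max(0, t - half_width), min(n_frames, t + half_width + 1)
        smoothed.append(sum(mean[lo:hi]) / (hi - lo))
    return smoothed

def peak_moment(curve):
    # Index of the frame with the strongest average reaction.
    return max(range(len(curve)), key=curve.__getitem__)

if __name__ == "__main__":
    # Hypothetical smile-intensity scores for three viewers over six frames.
    scores = [[0.1, 0.2, 0.9, 0.7, 0.2, 0.1],
              [0.0, 0.3, 0.8, 0.9, 0.3, 0.0],
              [0.2, 0.1, 0.6, 0.8, 0.1, 0.1]]
    curve = moment_to_moment(scores)
    print(curve, peak_moment(curve))
```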

While Disney does not share specific financial details related to its Audience Understanding effort, the company does disclose that insights from the project are “used by the Disney-ABC Television Group to inform content decisions.”