The focus of the National Aeronautics and Space Administration (NASA) is to provide information to civilian institutions to help them solve scientific problems at home and in space. This requires a continuous stream of raw data in a constantly shifting environment. According to a 2017 interview with Kevin Murphy, Earth Science Data Systems Program Executive at NASA, the biggest challenge now is not going where no man has gone before, but managing the data.

Murphy states that, as of 2016, 12.1TB of data streamed in daily from observation posts and sensors on terra firma and in space, a figure projected to rise to 24TB per day as better tools come to the fore. In addition, the archives already contain 24PB of data (1 petabyte = 1,000TB), projected to grow to 150PB by 2023. These data streams carry information of significant importance to the earth sciences and space exploration, and to identifying potential resources and threats.
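To put these figures in perspective, the short sketch below works through the arithmetic. It assumes a constant daily ingest rate, which is a simplification, and the function names are illustrative rather than anything NASA uses.

```python
TB_PER_PB = 1_000  # the article's convention: 1 PB = 1,000 TB


def archive_growth_pb(daily_tb: float, days: int) -> float:
    """Petabytes added to the archive after `days` at a constant daily rate."""
    return daily_tb * days / TB_PER_PB


def years_to_reach(current_pb: float, target_pb: float, daily_tb: float) -> float:
    """Years needed to grow from current_pb to target_pb at a constant daily rate."""
    remaining_tb = (target_pb - current_pb) * TB_PER_PB
    return remaining_tb / daily_tb / 365


if __name__ == "__main__":
    print(archive_growth_pb(12.1, 365))   # yearly growth at the 2016 rate
    print(years_to_reach(24, 150, 24.0))  # time to reach 150PB at 24TB per day
```

At a constant 12.1TB per day the archive grows by about 4.4PB a year. At a constant 24TB per day, going from 24PB to 150PB would take roughly 14 years, so the 2023 projection presumably assumes considerably higher ingest rates. That is an inference from the arithmetic, not a statement from the interview.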

This article focuses on the latest artificial intelligence and machine learning technologies that have enabled scientists to gather and use data better and faster. The goal of these applications is to make sure NASA does not miss anything of potential value for civilian and commercial use as well as space exploration.

Machine Vision and Earth Sciences

A significant amount of data gathered by NASA comes from satellites, which have a bird’s eye view of what is happening on the earth below. Satellite imagery provides millions of bytes of data on ocean currents, ice states, and volcanic activity, all of which goes to data centers back on earth, where it is organized and processed into data sets. This seems simple enough until one considers the sheer volume of this raw data.

The challenge of processing big data into usable form falls on Advancing Collaborative Connections for Earth System Science (ACCESS) researchers working closely with the Earth Observing System Data and Information System (EOSDIS). The ACCESS 2017 project teams are working on different aspects of data management using the latest machine learning and data transmission technologies, but they all have to ensure the data is in a usable and accessible form as well as “tied directly to specific issues facing Earth science and applied science users interacting with EOSDIS.”

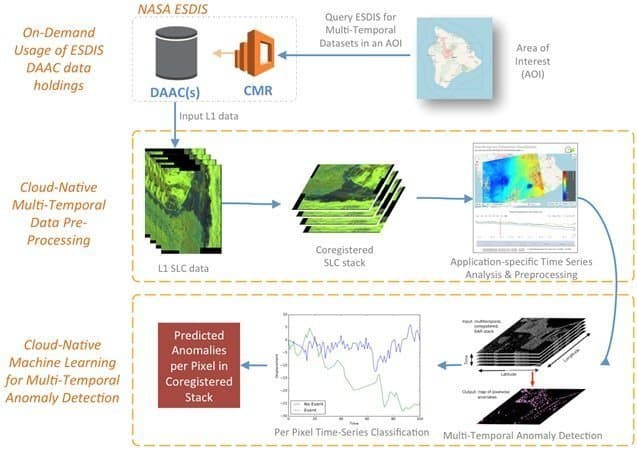

One team under the ACCESS program is working on developing a form of machine vision to automate the process of managing high volumes of geodetic data using synthetic aperture radar (SAR) images.

Multi-Temporal Anomaly Detection for SAR Earth Observations uses machine learning to help researchers draw on long-term, time-dependent historical data to detect anomalies in images, such as whether or not they depict damage. This helps make predictions more accurate and simplifies decision support compared to previous data systems, such as the Advanced Rapid Imaging and Analysis (ARIA).
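The idea of comparing a new image against its own history can be illustrated with a simple statistical baseline. The sketch below is not the ACCESS team's method. It swaps in a per-pixel z-score test, and the function name, the flat-list image layout, and the threshold are all assumptions made for illustration.

```python
from statistics import mean, stdev


def temporal_anomalies(history, latest, z_threshold=3.0):
    """Flag pixels whose latest value departs from their own history.

    history: list of past images, each a flat list of pixel values
             (at least two images are needed to estimate a spread)
    latest:  the newest image, same length as each history image
    Returns the indices of pixels whose z-score exceeds the threshold.
    """
    flagged = []
    for i, value in enumerate(latest):
        series = [image[i] for image in history]
        mu = mean(series)
        sigma = stdev(series)
        if sigma == 0:
            # A pixel that never varied: any change at all is unusual.
            if value != mu:
                flagged.append(i)
            continue
        if abs(value - mu) / sigma > z_threshold:
            flagged.append(i)
    return flagged


if __name__ == "__main__":
    past = [[1.0, 5.0, 2.0], [1.1, 5.1, 2.0], [0.9, 4.9, 2.0], [1.0, 5.0, 2.0]]
    print(temporal_anomalies(past, [1.0, 9.0, 2.0]))
```

A learned model would replace the fixed threshold with patterns drawn from labeled examples, but the input is the same: a stack of observations of one place over time.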

TESS and Exoplanets

Machine learning systems typically require quite a bit of data on which to train, but there is no difficulty in this when it comes to planet-hunting. One of the goals of NASA that most excites the public is finding planets outside the Solar System, or exoplanets, that might possibly support life as it exists on Earth. Scientists do this with the use of ground-based and space-based telescopes.

Scientists have been able to observe in excess of 2,500 exoplanets with the use of the Kepler Space Telescope, launched a decade ago and since pointed at 18 sectors of space to get a better look at these far-off celestial bodies. They detect the presence of these exoplanets by observing “blips” or “dips” in the brightness of stars visible from the Earth, a technique known as transit photometry. The record of a star's brightness over time is called a light curve.

Scientists believe these dips represent planets as they orbit around these stars on the side within the Earth’s line of sight. They note several factors about these dips to find out something about each exoplanet, such as its size and the size and shape of its orbit, as well as whether the signal is a false positive (in other words, not a planet).
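The link between the depth of a dip and the size of a planet follows from simple geometry: the fraction of starlight blocked is roughly the ratio of the two disks' areas, so depth ≈ (planet radius / star radius)². The sketch below applies that relation. It ignores effects such as limb darkening and grazing transits, and the function name and the constants chosen are illustrative.

```python
import math

SUN_RADIUS_KM = 695_700   # nominal solar radius
EARTH_RADIUS_KM = 6_371   # mean Earth radius


def planet_radius_km(transit_depth: float, star_radius_km: float = SUN_RADIUS_KM) -> float:
    """Estimate a planet's radius from the fractional dip in starlight.

    transit_depth is the fraction of light blocked, e.g. 0.01 for a 1% dip.
    """
    if not 0 <= transit_depth < 1:
        raise ValueError("transit_depth must be a fraction between 0 and 1")
    return star_radius_km * math.sqrt(transit_depth)


if __name__ == "__main__":
    # A 1% dip around a Sun-like star implies a planet about a tenth of the star's radius.
    print(planet_radius_km(0.01))
    # The dip an Earth-sized planet would cause around a Sun-sized star.
    print((EARTH_RADIUS_KM / SUN_RADIUS_KM) ** 2)
```

An Earth-sized planet crossing a Sun-sized star dims it by less than one part in ten thousand, which is why small planets are so much harder to find than giants.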

This has yielded copious data on these exoplanets, as well as 2,000 candidate planets still awaiting confirmation. However, Kepler is running low on fuel, and researchers are unsure whether it will be able to complete the 20 campaigns as planned.

This is not the end of the road for exoplanet discovery, however. NASA launched TESS, the Transiting Exoplanet Survey Satellite, on April 18, 2018. TESS will look more closely at bigger, brighter stars to identify and catalog more exoplanets and find out more details about them. TESS will cover an area roughly 400 times larger than that covered by Kepler.

TESS has already sent back the first images of its initial sweep in August, and these indicate the wealth of data the satellite will send back in its two-year sojourn in space. TESS will gather data using transit photometry, the same as Kepler, and scientists will eventually confirm its findings using space-based missions and ground-based telescope observations. These follow-ups will determine the nature, atmosphere, and composition of these exoplanets.

The challenge is to narrow down the number of candidates to the best ones for follow-up. The Kepler data has provided excellent material for researchers at MIT and Google to develop and train machine learning algorithms. These machine vision algorithms search rapidly through these data sets and identify the dips that warrant a closer look.

Speed is of the essence in this undertaking, as TESS sweeps through each sector in 27 to 30 days. Quick identification of these candidates will give scientists the opportunity for a closer look before TESS moves on to the next sector. In one example, MIT scientists were able to identify 1,000 stars out of 20,647 by searching through the light curves. From these stars, researchers were able to identify 30 “high-quality” candidate exoplanets, that is, planets likely to harbor life. This search took a few weeks instead of the usual months.
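A first pass over a light curve can be as simple as flagging points that fall well below the star's usual brightness. The sketch below is a threshold baseline, not the MIT or Google models, which are trained classifiers. The function name and the default cutoff are assumptions, and the noise estimate uses the median absolute deviation so that the dips themselves barely distort it.

```python
from statistics import median


def find_dips(flux, n_sigma=5.0):
    """Return indices where brightness falls well below the star's typical level.

    flux: brightness measurements in time order (any consistent unit)
    """
    baseline = median(flux)
    # Median absolute deviation: a spread estimate that outliers barely affect.
    mad = median([abs(f - baseline) for f in flux])
    noise = 1.4826 * mad  # rescales MAD to a standard deviation for Gaussian noise
    if noise == 0:
        # A perfectly flat curve: anything below the baseline counts as a dip.
        return [i for i, f in enumerate(flux) if f < baseline]
    return [i for i, f in enumerate(flux) if baseline - f > n_sigma * noise]


if __name__ == "__main__":
    curve = [1.000, 1.001, 0.999, 1.000, 0.990, 0.990, 1.001, 0.999, 1.000, 1.000]
    print(find_dips(curve))
```

In practice a dip must also repeat at a regular interval before it counts as a planet candidate, which is part of what the trained models learn to recognize.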

AI and Aliens

Transit photometry is not just for finding exoplanets, however. Together with spectroscopy, it may also be useful for finding aliens.

The Fermi Paradox and the Drake Equation continue to spark debate among scientists, but the basis for both is incontrovertible: the universe is vast. Despite there being no direct proof of intelligent life on other planets, the sheer number of stars with potentially habitable planets indicates it is highly probable that there is, and the Drake Equation suggests as many as 10,000 life forms in the Milky Way galaxy alone, although perhaps not life as humans know it.

Researchers at NASA’s Frontier Development Lab (FDL) used generative adversarial networks, or GANs, to create 3.5 million possible permutations of alien life based on signals from Kepler and the European Space Agency’s Gaia telescope. With these permutations, scientists have a better idea of the type of conditions that are most likely to sustain some form of life, such as gases present, temperature, density, and biological structure, whether carbon-based, such as that on Earth, or something more exotic.

A GAN is a set of two deep learning networks that work off each other to create new data based on a given data set. One network generates new data, and the other judges whether it is plausible (as opposed to implausible) or real (as opposed to fake), depending on the form of the ideal data fed into the system at the outset.

In simple terms, the two machines in a GAN act like two sides of an argument. One tries to convince the other, and by degrees they come to a compromise. GANs can “fill in the gaps” in data sets to present a complete picture, provided they have enough time to run enough iterations for the algorithm to create “realistic” results.

For example, if the first machine produces a stick figure as a representation of a human, the second machine will point out the flaws in the stick figure. The first machine then produces another image for further critique. At the end, the image should be a good approximation of a human.
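The back-and-forth described above can be sketched with a deliberately tiny pair of models. This is a toy under stated assumptions, not the FDL system: the "real" data is just numbers drawn around a chosen mean, the generator is a straight line applied to random noise, the critic is a single logistic unit, and every name and setting here is illustrative.

```python
import math
import random


def sigmoid(s):
    """Numerically stable logistic function."""
    if s >= 0:
        return 1.0 / (1.0 + math.exp(-s))
    e = math.exp(s)
    return e / (1.0 + e)


def train_toy_gan(steps=2000, lr=0.01, real_mean=4.0, seed=0):
    """Alternate critic and generator updates; return (a, b, w, c)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # generator: fake = a * noise + b
    w, c = 0.0, 0.0  # critic: D(x) = sigmoid(w * x + c), the "looks real" score
    for _ in range(steps):
        real = rng.gauss(real_mean, 1.0)
        z = rng.gauss(0.0, 1.0)
        fake = a * z + b

        # Critic step: push D(real) up and D(fake) down
        # (gradient of -log D(real) - log(1 - D(fake))).
        d_real = sigmoid(w * real + c)
        d_fake = sigmoid(w * fake + c)
        w -= lr * ((d_real - 1.0) * real + d_fake * fake)
        c -= lr * ((d_real - 1.0) + d_fake)

        # Generator step: adjust a and b so the updated critic
        # rates the same fake higher (gradient of -log D(fake)).
        d_fake = sigmoid(w * fake + c)
        grad_fake = (d_fake - 1.0) * w
        a -= lr * grad_fake * z
        b -= lr * grad_fake
    return a, b, w, c


if __name__ == "__main__":
    print(train_toy_gan())
```

Each pass through the loop is one round of the argument: the critic sharpens its sense of what real data looks like, then the generator shifts its output toward whatever the critic currently favors. A pair this small may keep circling rather than settle, so no convergence is claimed. Real GANs use deep networks on both sides.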

One of these GANs is Atmos, simulation software created by Google Cloud developers in response to NASA’s FDL astrobiology challenge to simulate alien atmospheres. The software is open source.

Another neural network at play is a convolutional neural network, or CNN. Embodied in a machine-learning tool called Intelligent Exoplanet Atmosphere Retrieval or INARA, it trains on spectral signatures from millions of exoplanets. It is able to assign values for each spectral signature and differentiate one from the other. When INARA encounters spectral signatures in an image, it can generate the possible chemical composition and the probability of life in seconds.
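The building block of a CNN is a small filter slid along the input, producing a strong response wherever the input matches the filter's shape. The sketch below shows that operation on a made-up one-dimensional spectrum. It is not INARA's code: the filter is hand-picked to respond to a narrow absorption dip, whereas a trained network learns its filters from data, and all names and values are illustrative.

```python
def conv1d(signal, kernel):
    """Slide the kernel along the signal, taking a dot product at each position.

    This is the 'valid' cross-correlation that CNN layers compute.
    """
    k = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(k))
        for i in range(len(signal) - k + 1)
    ]


def relu(values):
    """Keep positive responses and zero out the rest."""
    return [max(0.0, v) for v in values]


if __name__ == "__main__":
    # Hypothetical spectrum: flat continuum with one absorption dip at index 2.
    spectrum = [1.0, 1.0, 0.6, 1.0, 1.0, 1.0]
    dip_filter = [0.5, -1.0, 0.5]  # responds when the middle sample is lower than its neighbors
    print(relu(conv1d(spectrum, dip_filter)))
```

The response peaks at position 1, where the filter is centered on the dip, and is zero elsewhere. Stacking many such learned filters lets a network tell one spectral signature from another.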

Wearable AI and Instant Robots

Most people accept robots as an integral part of space exploration, but those who work closely with them would be the first to concede that robots need to be more flexible. Traditional robots are expensive to design and build, and they are typically limited to specific functions. This can be a problem in an unpredictable environment such as space.

One solution is to create something more general purpose, which can serve several functions in a pinch. NASA sent this out as a challenge to developers, and the result was a soft robot called OmniSkins: a wearable, moldable “skin” with pneumatic actuators and sensors.

Developed by Rebecca Kramer-Bottiglio and Yale University associates in partnership with NASA, the wearable tech consists of soft, lightweight, and reusable sheets. When attached to a deformable object, the skins can make it move and perform different functions adequately in a pinch. Kramer-Bottiglio demonstrates it in a brief two-minute video.

Scientists can also use the sensors in the skins to gather data about a person’s vitals and the physical demands of space. The artificial intelligence component of the tech is still in development, but in the future, it should be able to execute specific actions if trained to do so, such as wriggle to correct the wearer’s posture. It should also be able to learn from historical data.

Robotics and Mars Habitats

Mars, the fourth planet from the Sun, is the only one of Earth’s planetary neighbors that won’t instantly kill humans. NASA has explored it more extensively than any other extraterrestrial body, primarily because it is currently the most likely surrogate for Earth. In fact, two non-governmental organizations are promoting the idea of sending humans to Mars, and it may happen as soon as 2024. This is the plan for Elon Musk and his SpaceX, at least. Another ongoing initiative is Mars One.

NASA has actually expressed pessimism about the feasibility of terraforming Mars enough to make it habitable for humans, citing insufficient carbon dioxide on the planet to produce enough greenhouse warming. It is therefore surprising that it continues to encourage the development of technology to build habitats on Mars with its 3D-Printed Habitat Challenge, also known as MARSHA (Mars habitat).

The challenge is currently in its third phase, which requires the onsite autonomous building of habitats using 3D printers and the Building Information Modeling (BIM) software at one-third scale. Four teams have so far made it to the seal test stage of the competition, in order of ranking: SEArch+/Apis Cor.; AI SpaceFactory; Pennsylvania State University; and Colorado School of Mines and ICON. The schedule for the final stage is between April 29 and May 4, 2019.

Below is a video showing AI SpaceFactory’s 3D printer building the foundation of a cylindrical tower for holding water:

NASA provides guidelines for building information modeling but does not specify a particular software. None of the companies participating in the MARSHA challenge specify the type of machine learning they use to automate the 3D printing process. However, it is likely that machine vision algorithms are involved.

The big problem with building components using 3D printers is flaws caused by anomalies in the printing process, such as uneven spreading or heating of the material. Machine vision algorithms can monitor the process and detect anomalies as they happen. This makes the 3D printer not only autonomous but also self-correcting, which would minimize, if not eliminate, flaws in the material.

It is likely that AI SpaceFactory used machine vision algorithms in its autonomous robotic technology to build a sealed cylindrical tower capable of holding 1,200 gallons of water. The company chose to use “Martian polymer” that it claimed was native to the Mars environment. This probably requires machine learning software that can adjust to unexpected problems that might arise with the atypical material during printing.

If all goes well, the only problem is finding liquid water on Mars.

Autonomous Vehicles and Rovers

Much of what researchers know about Mars comes from images taken and samples gathered by autonomous vehicles. The first two sample-gathering vehicles to land on Mars successfully were the Spirit and Opportunity rovers.

They were vehicles controlled by a ground crew, but the 20-minute or so lag in communications could lead to missed opportunities, such as the discovery of the Block Island meteorite. The Opportunity rover had nearly missed it, rolling 600 feet past it before someone noticed it.
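The lag is a consequence of distance: radio signals travel at the speed of light, and Mars is far away. The sketch below computes the one-way delay. The two distances used are approximate, commonly cited figures for Mars at its closest and farthest from Earth, included here as assumptions rather than taken from the article.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # exact by definition

# Approximate Earth-Mars distances (assumed round figures).
CLOSEST_KM = 54.6e6
FARTHEST_KM = 401e6


def one_way_delay_minutes(distance_km: float) -> float:
    """Minutes a radio signal needs to cover the given distance."""
    return distance_km / SPEED_OF_LIGHT_KM_S / 60


if __name__ == "__main__":
    print(one_way_delay_minutes(CLOSEST_KM))
    print(one_way_delay_minutes(FARTHEST_KM))
```

With these figures the one-way delay ranges from about 3 minutes to about 22 minutes. Seeing an image and sending a response takes a full round trip, which doubles the wait.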

The obvious answer was to give the rovers some autonomy using AI. However, the onboard computer of the Opportunity had very limited processing speed at 25MHz, much less than a smartphone today. It took some doing, but NASA’s Jet Propulsion Laboratory was able to develop and upload the AEGIS (Autonomous Exploration for Gathering Increased Science) software to Opportunity, enabling it to identify, measure, and take images of rocks based on the parameters set by scientists.

AEGIS is simple, but it works, which is why it was good enough for the Curiosity rover (which landed in 2012), and has earned a place in the Mars 2020 rover (slated to land in 2021). However, the AEGIS on the Mars 2020 rover will only serve as part of a much more sophisticated system.

The Onboard Autonomous Science Investigation System (OASIS) is an autonomous science-gathering module controlled by the Continuous Activity Scheduling Planning Execution and Replanning system, or CASPER. CASPER is the scheduling component, designed to respond to changing conditions and thus make the most of limited resources.

The system depends heavily on machine vision, as the cameras are in constant use, so it is the most practical way to gather data. When the cameras take a photo of a rock, the AI first makes an identification of the rock and checks the horizon and clouds.

If anything seems unusual, the system automatically marks it for further inspection. CASPER will determine if the target is worth the use of resources required for the extra attention. If it determines it is not, the rover will move on to the next target.
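The trade-off described here, weighing how interesting a target is against what it costs to examine, can be sketched as a simple greedy planner. This is not CASPER's actual algorithm. The `Target` fields, the novelty score, the energy units, and the selection rule are all hypothetical stand-ins chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Target:
    name: str
    novelty: float      # hypothetical score from the vision step, 0 to 1
    energy_cost: float  # hypothetical energy needed for a follow-up, must be > 0


def plan_followups(targets, energy_budget, min_novelty=0.5):
    """Pick follow-up targets greedily by novelty per unit of energy.

    Targets below min_novelty are skipped outright. The rest are taken
    in order of value for cost while the remaining budget allows.
    """
    candidates = [t for t in targets if t.novelty >= min_novelty]
    candidates.sort(key=lambda t: t.novelty / t.energy_cost, reverse=True)
    chosen = []
    for target in candidates:
        if target.energy_cost <= energy_budget:
            chosen.append(target.name)
            energy_budget -= target.energy_cost
    return chosen


if __name__ == "__main__":
    seen = [
        Target("layered rock", 0.9, 30),
        Target("plain pebble", 0.2, 5),
        Target("dust devil", 0.7, 10),
        Target("bright vein", 0.8, 50),
    ]
    print(plan_followups(seen, energy_budget=45))
```

With a budget of 45 units the planner picks the dust devil and the layered rock, passes over the pebble as uninteresting, and skips the bright vein because it costs more than what remains. A real onboard scheduler also has to account for time, data storage, and changing conditions.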

Header Image Credit: Xornimo News