The future of Artificial Intelligence (AI) holds the promise of a transformative era where machines evolve from mere tools to influential collaborators. As AI technologies advance, they are set to reshape industries, augment human capabilities, and unlock unprecedented insights from data.

With the potential to automate routine tasks, enable personalized experiences, and revolutionize decision-making processes, AI’s impact on society, the economy, and daily life is bound to be profound.

Perhaps the most recent example is how, seemingly overnight, large language models and generative AI went from esoteric forms of artificial intelligence to the most easily recognized and business-changing technological developments of the last century, paving the way for AI capabilities to be embraced by popular culture worldwide.

After OpenAI released its ChatGPT interface in November 2022, allowing millions of users to interact with its GPT-3.5 language model, public awareness exploded and the world was astonished by the system’s remarkable capabilities.

Instantly, educators decried the ease with which students could fabricate their assignments – often riddled with inaccuracies derived from ChatGPT itself. As the financial and legal industries revealed how the software could replicate sophisticated tasks in their workflows, a certain self-consciousness and fear for job security swept through both sectors.

Not all of the news coverage was as pessimistic, however. A widely cited McKinsey report published in June 2023 made huge ripples through the mainstream media, projecting that generative AI could add $2.6 trillion to $4.4 trillion in value to the economy each year.

The generative AI explosion marks the first time our species is forced to carefully consider the ethical, societal, and economic questions that the evolution of AI raises. Striking a balance between innovation, responsible development, and preserving human values will be essential as we venture into an AI-driven future.

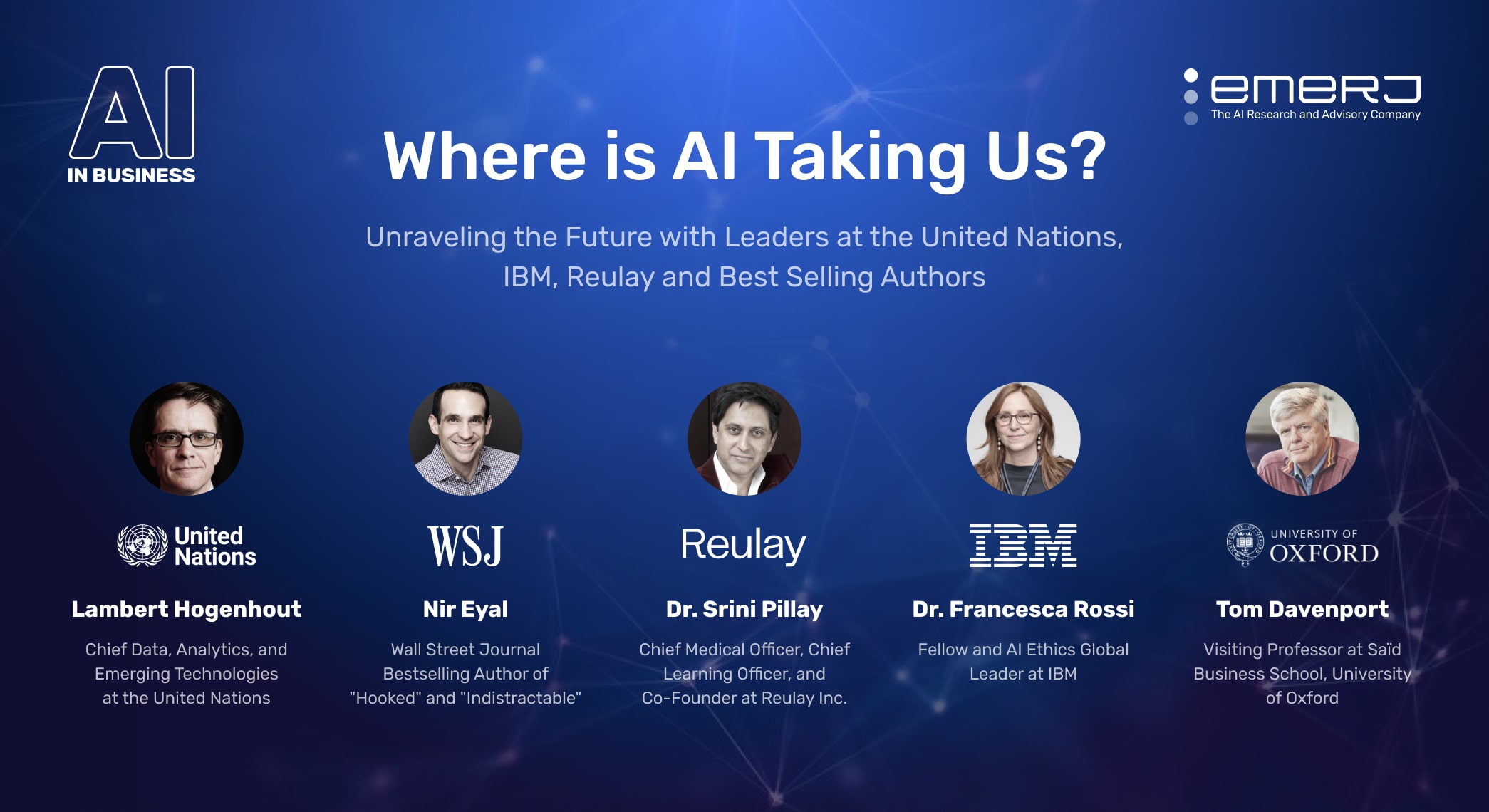

This article will explore the valuable insights shared by leaders who appeared in a special ‘AI Futures / Human Reward Systems’ episode series of Emerj’s ‘AI in Business’ podcast. Throughout the series, these leaders shed indispensable light on what the future of AI means for the global economy in the short term — and for the species in the long term.

In this analysis of their conversations, we examine five key insights:

- Intricacies of AI sentience: The potential advancements, impacts, and interdependence between humans and AI, including technological developments, virtual reality, and brain-computer interfaces.

- Addictive side of technology: The potentially addictive nature of these technologies, the importance of responsible policies, and the vested interests of companies in maintaining long-term user engagement.

- Exploring data, measurement, and technology for human well-being: The importance of collecting large and diverse datasets, exploring novel measurement approaches, and being aware of the potential impact of technology on human well-being.

- Potentials and challenges of generative AI: The potential of generative AI for attorneys and product designers, including legal risks and the uncertain value placed on human creations.

- Role of AI in creativity, business value, and ethics: The impact of relying on AI-generated tools for creativity and the importance of using AI ethically to enhance business value and societal progress.

Generative AI is a Waypoint to Brain-Computer Interface – with Lambert Hogenhout of the United Nations

Guest: Lambert Hogenhout, Chief Data, Analytics and Emerging Technologies, United Nations

Lambert discusses the rapid development of technology, particularly in virtual reality (VR), augmented reality (AR), and generative AI frameworks. He believes that within five to ten years, technological advances will open up possibilities that are hard to imagine today.

He explains the concepts of AR and VR in detail for the podcast audience: users can either enhance their real-world surroundings with additional elements or create entirely virtual worlds. With generative AI frameworks, generating images and 3D objects is becoming accessible outside of professional media production houses.

He predicts that this capability will soon extend to generating interactive 3D worlds and even customizable movies in VR, allowing users to personalize their virtual experiences according to their preferences.

Lambert suggests that robots or AI capabilities could take over some tasks. However, he believes humans will still be crucial in managing world affairs and the global economy. He emphasizes that – for the time being – humans are responsible for ensuring that the world continues functioning, including food production and maintaining international peace.

He goes on to address two main aspects of AI’s role in the future. The first is the potential sentience of AI, where AI systems develop their own thinking capabilities and consciousness. Lambert touches on science fiction parallels and dystopian scenarios that explore these concepts. This raises the question of whether AI will evolve to a point where it becomes self-aware and autonomous.

The second aspect is the potential for humans and AI to evolve together in ways that improve human lives and physical health and, eventually, lead to the development of brain-computer interfaces (BCIs). Lambert highlights AI’s exciting and vital role in biomedical sciences, such as helping people maintain better health, prolonging life expectancy, and preventing diseases.

He concludes that the question of AI’s sentience and independent thinking might be overshadowed by the more pressing concern of the intimate and interdependent relationship between humans and AI.

Future AI Products Might Be Habit-Forming, In a Good Way – with Nir Eyal

Guest: Nir Eyal, Stanford professor and author of Hooked: How to Build Habit-Forming Products

Nir begins his podcast appearance by drawing parallels between technology and alcohol addiction to make a point about the potentially addictive nature of certain technological products and the need for responsible policies to address it.

Nir recognizes that alcohol, known for its addictive properties, doesn’t turn everyone who consumes it into an alcoholic. Similarly, Nir suggests that, despite its prevalence, technology doesn’t inherently lead to addiction for all users.

To address this risk, he suggests that companies or creators of potentially addictive products implement an abuse policy. The policy would monitor usage patterns and send users a special message if they exceed a set threshold.

The message would probe for potential addiction in the user, but the decision to seek help would be up to the user. Additionally, users could be placed on a blacklist to limit their access to the addictive product as a proactive measure.

Nir continues to explain that companies like Apple and Google incorporate features related to user well-being and health into their products not due to political pressure or altruism but because these features enhance the overall quality of their products.

These attention economy companies have a vested interest in ensuring that users continue using their products for a lifetime rather than using them excessively and then abandoning them because of the disruption to their lives. To achieve this, these companies aim to create a value exchange with users, who perceive the product as valuable and beneficial to their lives.

The backlash against technology’s addictive or habit-forming nature reflects a growing awareness among rational individuals who recognize the need to adapt their practices to make the most of these technologies. The process of recalibration is seen as a healthy response. Ultimately, the goal of any enterprise is not to burn out users but to foster long-term usage and engagement with their products.

Controlling Human Emotions with Immersive AI/VR Experiences – with Dr. Srini Pillay

Guest: Dr. Srini Pillay, Chief Medical Officer, Chief Learning Officer, and Co-Founder at Reulay Inc

Dr. Pillay discusses the current gold standard for medical measurement: the double-blind, placebo-controlled trial. He explains that this type of trial provides group data and determines whether a treatment, such as a virtual reality (VR) experience, is effective compared to a placebo.

He also discusses the need for a large dataset to make reliable predictions in medicine. However, Dr. Pillay acknowledges that the challenge lies in confidentiality issues, making it difficult for entrepreneurial companies to access extensive medical data. To address this, he suggests the collection of large datasets and the creation of synthetic datasets using generative adversarial networks (GANs) to enhance predictive power.

Additionally, Dr. Pillay mentions two measurement approaches that are gaining interest. The first is integrated information theory, which focuses on measuring consciousness in any integrated system, not only the brain. The second is the exposome, which refers to all the environmental factors that affect an individual’s well-being. It includes elements such as sunrises or sunsets, climate, oxygen, color, and geometry, among other factors.

Dr. Pillay further suggests that measuring consciousness outside the human body and incorporating the exposome data into the dataset could significantly advance understanding of human well-being.

Lastly, Dr. Pillay warns against developing a mentality of instant gratification from machines and technology, as it can lead to frustration in real-life situations. He questions the impact of AI lacking emotions on human empathy as he says:

“Another point that I mentioned is that if AI does not have emotion – and you know me, and it’s debatable whether AI is sentient, then we’re going to be developing a relationship with something that is not going to be activating the mirror neurons. So we get less activation. And then the question becomes: will that make us more autistic? And is autism adaptive for the future human?”

Baking Human Values into a Radically Changing Future – with IBM’s Dr. Francesca Rossi

Guest: Dr. Francesca Rossi, AI Ethics Global Leader at IBM and President of the Association for the Advancement of Artificial Intelligence (AAAI)

Dr. Rossi begins her podcast appearance by describing a presentation she gave using visually captivating slides generated with AI. She expresses her concern about the ease of using such tools and the potential impact on creative individuals and society’s creativity if everyone relied on machines for creative tasks.

As an example, she describes a recent speech at a TEDx event in which every picture in her presentation was generated by the DALL-E text-to-image tool. It was an incredible experience, Dr. Rossi tells the Emerj podcast audience, but it left her slightly uneasy, with more questions than answers:

“Those were amazing pictures. They were really able to capture the concepts that I wanted to talk about. But of course, one can think, ‘Okay, but maybe that’s too easy. If everybody would do like I did, what would happen to creative people?'” she explains.

“What would happen to the people that make a living out of making good pictures?” she continues. “Or what would happen, not just to their business model, but what would happen to the creative part of the society, if we can be happy with the creativity that the machine can supply us with?”

Dr. Rossi goes on to explain that, while she believes that innovative individuals will benefit from using the tool, there is a risk that busy users, including students, may become lazy and settle for mediocre solutions instead of developing their creativity and passion through hard work.

Hence, she feels that as we dive deeper into exploring AI, we need to be more aware of which values we want to preserve, protect, and support.

Dr. Rossi also believes AI can provide even greater value for business and societal progress when used and implemented correctly. She acknowledges that some enterprises may view AI ethics and restrictions as conflicting with business objectives, but she ultimately sees these values as aligned in the long term.

She argues that the goal is not to limit what technology can do but to improve business deals and opportunities for those involved while benefiting society. Dr. Rossi hopes that more business leaders will come to understand this perspective.

Generative AI and the Future of Work Itself – with Tom Davenport

Guest: Tom Davenport, American academic and business leader

As a respected academic in artificial intelligence and technology, Tom begins his podcast appearance by stating that the only people who will lose their jobs to AI are those who refuse to work with AI.

He suggests that generative AI tools will revolutionize the legal profession, allowing attorneys to generate briefs more efficiently. As a result, attorneys will have to find new ways to charge for their services. He also highlights the use of generative AI in product design, citing the example of an SUV Corvette designed using these tools, which saved time in the design process.

He believes that any content, whether music, art, or literature, will be more valuable if it is created by humans rather than by generative AI.

Davenport expresses concerns about the legal risks associated with using generative AI technology. He mentions that the potential for intellectual property-related lawsuits significantly deters widespread adoption. Since generative AI models are trained on existing content, there is a risk of infringing upon copyrighted material.

He cites the example of GitHub Copilot, which has already faced a lawsuit related to this issue. Due to these concerns, Davenport suggests that organizations are hesitant to use generative AI tools except in experimental settings.