As the web grows and we spend more and more time online, moderation becomes a bigger and bigger challenge. Content influences buyers, and businesses eager to gain the trust of those customers are likely to do whatever they can to win.

Reviews, profiles, accounts, photos, and comments — all these categories of content require oversight so that they don’t become overrun with misleading advertising messages, or outright spam.

Machine learning is critical to moderation at scale — but it’s often human effort that helps train those algorithms. I interviewed Charly Walther, VP of Product and Growth at Gengo.ai, to learn more about the crowdsourced processes that underlie many content moderation algorithms – and to determine how and when human effort is required to help machines better filter spam or inappropriate content.

Why and When Human Moderation is Required

Filtering an image that isn’t inappropriate could lead to a poor user experience – or even a backlash from offended users. Facebook has seen it’s fair share of moderation issues, leading the company to employ armies of human beings to help filter content – a job that machines are often unfit to do.

Moderation at scale usually involves the following elements:

- A trained machine learning algorithm which is informed by user data or outside data. This algorithm is designed to determine appropriate or inappropriate content.

- A set of human reviewers who help to manually approve or disapprove content, which helps to train the algorithm to make better content moderation decisions in the future.

In Walther’s words:

“You want a human in the loop flow – you might have some kind of machine learning system that can detect that there is an issue, but you may not want to delete something without human intervention.”

Walther tells us that, broadly speaking, content moderation can go one of two ways:

- Filtered later: Some platforms or sites might decide that “questionable” content should be pushed live to users, and only examined later by human crowdsourced reviewers.

- For example: Facebook might allow users to post images that seem questionable, and only take them down if they are in fact found to break from the company’s policy. This decision might be made in order to keep users from being offended by immediately filtered comments (such as this example of a graphic Vietnam war image, initially filtered for nudity, but later allowed to go live after a protest from users).

- Walther tells us that some firms prefer this method because it allows them to — in his words “Put their hands in the fire for it,” stamping the approval of a real human being in order to show the care and attention given to moderation (as opposed to a cold and impersonal, entirely algorithmic decision).

- Filtered first: Other platforms or sites might decide that “questionable” content should be hidden from users, and only be pushed live when it is approved by human reviewers.

- For example: A large media presence like the New York Times website might filter all of it’s questionable comments manually before allowing them to be published on the company’s site.

Sometimes a content moderation algorithm will be able to discern a kind of gradient of certainty.

A sufficiently well-trained machine learning algorithm might be able to fully “filter out” images which it believes with 99% certainty to contain inappropriate nudity (in the case of image filtering), or spammy links (in the case of comment moderation).

The same algorithm might place all (or some) content of lower certainly into a “review bucket” for human reviewers. For example, images that the machines deems to have a 60% of nudity (in the case of image moderation) or a 35-percent chance of including spammy links (in the case of comment moderation) might be passed along to human reviewers.

The corrections to these “grey area” moderation decisions will hopefully improve the machine’s ability to make the right call on its own the next time.

Accounts / Profiles

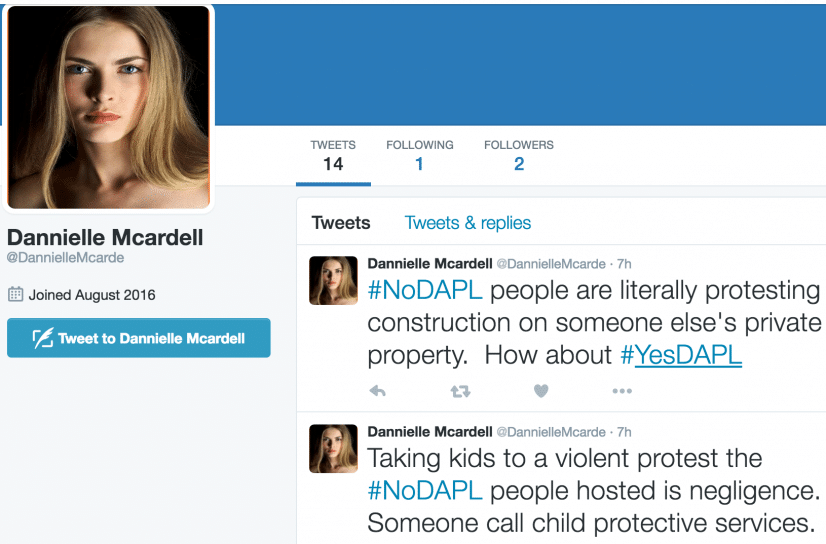

From social media networks to online forums to platforms like AirBnb, fake or inappropriate accounts are an issue that companies have to deal with. This might include false accounts pretending to be “real people,” used to ulterior aims:

- Fake social media accounts, used to artificially “like” and “share” information from advertisers by falsifying social proof

- Fake social media accounts, used to push a particular political opinion or agenda without disclosing the party behind the agenda itself

- Accounts that are secretly being used to push sexually explicit or unwanted promotional content

Machine learning systems might make two kinds of errors. “False positives” are situations when a system thinks content is inappropriate, when it is in fact appropriate (i.e. incorrectly filtering out content). “False negatives” are situations when a system appropriate, when it is, in fact, inappropriate (i.e. incorrectly allowing poor content through the filter).

In Walther’s words:

“A platform can have a button saying ‘flag this content as inappropriate’ — allowing their users to detect false negatives to help train the algorithm. You need human reviewers for false positives, to review filtered content that doesn’t go live – that’s content which users can’t help with.”

It’s those situations of false positive-prevention where crowdsourcing often comes into play.

Listings

False or inappropriate listings might include:

- AirBnb accounts that break with the terms and conditions of the site (i.e. someone trying to tent a tent in their back yard, or the back of a pickup truck)

- Fake listings on room rental websites, intending to lure users to pay for something that isn’t real

- False Craigslist listings, claiming to be offering an appliance, but actually fishing for prepayment from gullible users (an academic report published in Financial Cryptography and Data Security 2016 estimates that 1.5% of all Craigslist posts are fake)

- A car on a social car rental site (like Turo.com) might be listed in blatant violation of safety standards (i.e. the car lacks a windshield or seatbelts)

- Real estate listings (on sites like Trulia or Zillow) that feature fake images or made up addresses

Some listings may simply be a joke, others an intentional attempt to commit fraud, still others may be inadvertently posted in a way that violates the terms of service. What these listings have in common is that they degrade the user experience of the site or platform.

Any sufficiently large platform will struggle to keep track of all listings on their site. Human judgement and filtering can be used to find more and more nuanced patterns to keep up with fraudsters or policy abusers and train a machine learning system to allow less spam to go live on the site.

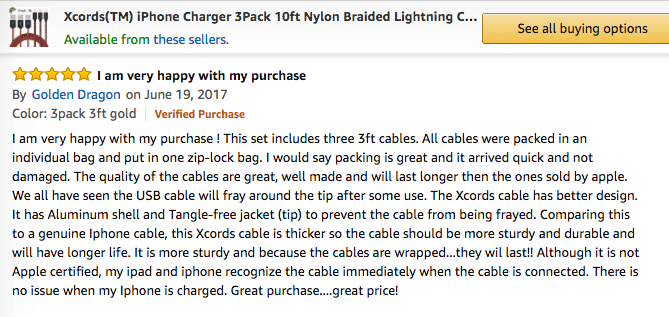

Reviews

An article in New York Magazine quotes the founder of Fakespot as stating that upwards of 40% of Amazon reviews are questionable (in terms of not being a verified purchase, or in terms of wording or repetitiveness). While this number may be inflated, it’s clear that certain product categories are awash with false reviews and sketchy accounts – notably consumer electronics.

The same can probably be said of almost any large website that allows for reviews. From eCommerce stores, product marketplaces, or service marketplaces (like Yelp or Houzz).

False reviews come in many forms, including:

- Poor reviews designed to decrease sales of a competitor, or encourage purchases from another brand, product, or service

- Positive reviews designed to provide social proof and encourage other unsuspecting customers to purchase

- Mixed reviews (say, 3 or 4 stars), designed to blend in with a larger batch of false 5-star reviews, with the aim of increasing the likelihood of the reviews appearing honest and truthful overall

All of these reviews have one thing in common — they’re posted as if they’re from genuine customers and users – but they are in fact executed by brands looking to influence the behavior of buyers.

While there are rules of thumb for identifying fake reviews (CNet has a useful article on this topic), it’s nearly impossible to train an algorithm to detect any and all fake reviews.

Large platform businesses and eCommerce business that offer reviews often already use machine learning-based systems to filter reviews, and they’re eager to see these systems improve over time. An Amazon spokesperson reportedly told DigiDay:

“We use a machine-learned algorithm that gives more weight to newer, more helpful reviews, apply strict criteria to qualify for the Amazon Verified Purchase badge and enforce a significant dollar amount requirement to participate, in addition to other mechanisms which prevent and detect inauthentic reviews.”

Clearly, it isn’t enough. We can’t blame Amazon, per say – any platform business at sufficient scale is destined to run into the same issues. Improving the machine learning models that detect fake reviews will involve high-level forethought from the engineers behind those models, but it will also involve something else: Input from human beings who can intuit the difference between fake and real reviews.

Here are some examples of how crowdsourced effort might help to refine machine learning models:

- Review spammers may adopt a new set of templates for their positive or negative reviews. Someone manually analyzing reviews might be able to pick up on this pattern quickly, and inform the algorithm that these new templates and formats are suspect, or may need to be.

- Machine learning models may be able to determine some reviews to be outright spam, but others might simply be marked as “questionable.” These questionable reviews might be sent to human reviewers who could determine the approval or deletion of the review, thus adding a bit more richness and context to the machine learning model for it’s next decision.

Reviews are important. An eCommerce study conducted by Northwestern University reports that nearly 95% of buyers will look at reviews (if available) before purchasing, and that sharing reviews can improve conversion rates by over 200%.

Comments

Comment spam is an annoyance that websites of all sizes have to deal with. Comment spam “bots” crawl the internet constantly, in an attempt to jam backlinks on unsuspecting sites. Sometimes this is done in an attempt to ruin the user experience on that site, but more often than not it’s done in an attempt to drive backlinks or traffic to a target website (often some kind of knock-off jewelry or handbags).

Blocking suspicious IP addresses and automatically filtering for specific URLs included in comments can be one way to be rid of useless spam comments – but it often isn’t enough.

Content that might be filtered immediately might include:

- Profanity

- Direct insults

- Racial slurs or references of any kind

- Spam, promotional, or unrelated links

As with other kinds of moderation, fake comments often have certain features in common (IP address, format of message, broken english, etc) which allow them to be filtered quickly – but with new spam and fake comment methods being developed by users every day, machine learning moderation algorithms are engaged in a constant game of catch-up.

As with reviews, crowdsourced comment moderation has the potential to provide valuable training data to filtering algorithms, to better catch future spam or false comment attempts. This training process is ongoing – and goes hand-in-hand with the ongoing and new efforts by users to fool the system.

Issues around filtering insults and “hate speech” aren’t easy to solve, and we interviewed a separate AI expert to explain some of the initial process required to properly train an algorithm to filter content.

Dr. Charles Martin is founder of Calculation Consulting, a machine learning consultancy firm based in Silicon Valley. Charles previously worked on machine learning-based content moderation projects at firms like Demand Media and eBay.

Charles mentions that if a large media platform (say, HuffPost or the New York Times) wants to train an algorithm to cut out hate speech, the first thing they need to do well is to define their terms and boundaries to be much more specific.

A uniform removal of hate speech needs to be based on more than the reactions and responses of people labeling the comments, it needs to be based on an agreed-upon set of boundaries around what constitutes a “curse word” or what constitutes and “insult.” Without these standards determined ahead of time, it would be impossible to train an algorithm reliably.

Charles also says that it’s possible overtrain these AI systems (probably a reference to overfitting the algorithm), and that it’s important to understand the process of designing and training algorithms in order to ensure that the system works as intended.

Photos

Images, like written content, need moderation – but are often more difficult to “filter” than written text. Machine vision is a relatively new science, and the interpretation of images requires huge amounts of human effort.

Image moderation might include:

- Filtering fake or misleading photos from hotel or destination listing websites

- Filtering inappropriate images or nudity from public social media platforms like Facebook

- Taking down listings on sites like eBay or Craigslist based on blatantly false or misleading images for a product being offered

While machine learning systems might be able to pick up on blatantly inappropriate or misleading images, Walther says: “It’s about finding edge cases. It’s about finding that 1% of cases that machine learning can’t pick up on, so that the system can improve.”

About Gengo.ai

Gengo.ai offers high-quality, multilingual, crowdsourced data services for training machine learning models. The firm boasts tens of thousands of crowdsourced workers around the globe, servicing the likes of technology giants such as Expedia, Facebook, Amazon, and more.

This article was sponsored by Gengo.ai, and was written, edited and published in alignment with our transparent Emerj sponsored content guidelines. Learn more about reaching our AI-focused executive audience on our Emerj advertising page.

Header Image Credit: Winning-Email