Earlier this month we released an article featuring all of the AI risk quotes from Elon Musk, and the article was well received.

If there’s another eminent modern businessperson whose AI quotes have landed all over the web, it’s Bill Gates. Many of the articles about Bill’s AI statements make scant mention of his actual words, and many such articles (including those in respected publications such as The Washington Post) simply rehash his brief mention of the topic in a Reddit AMA in late January of this year.

With that in mind, I figured I’d use this article as an ongoing log of Bill’s actual AI statements, separating hype from verifiable quotes and listing more than his overused Reddit blurb. I imagine I will update this piece on a number of occasions as I find new quotes, or as Emerj readers chime in with additional quotes and videos they’ve found (reach us at info [at] Emerj [dot] com).

As it turns out, there are far fewer verifiable mentions of Bill Gates’ AI fears (or of his thoughts about AI in general) than there are for Musk, but collecting them all in one place still seemed like a worthwhile idea for anyone interested in the subject.

Without further ado, here’s everything we could dig up, from oldest to most recent:

May 28, 2013 – ABC Q and A: An Audience With Bill Gates

In this little-known interview, Gates doesn’t seem all that intent on highlighting AI and robotics as particularly dangerous. At 2:08 into the video, Gates mentions:

I don’t see robots as something that will lead to more war, we have to be careful to avoid war… in general, the trend has been quite phenomenal in that regard… violence in general, particularly of that type, less genocide…

Shortly thereafter, another precocious youngster from the stands asks Gates if he believes artificial intelligence will ever surpass the human mind, and if so, how would we handle it?

His response begins playfully, “That would [be] humbling” (the audience chuckles), but he goes on to say the following:

I do believe that when computers take over certain tasks, that will be tough for us. It would be a looong time before you’re matching the type of judgement that humans exercise in many different areas… I do believe that it’s a solvable problem – now we’ll have to avoid climate change and other problems before we have this one to worry about…

When asked whether he believes such artificial intelligence will be possible, he responds in the affirmative, but he states that it will take “at least five times as long as Ray Kurzweil says.”

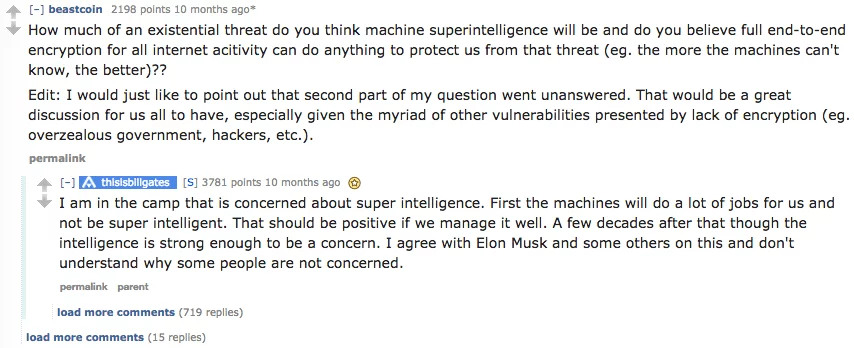

January 28, 2015 – Reddit AMA with Bill Gates

Bill’s January AMA appears to have been the “tipping point” for the flurry of attention his artificial intelligence concerns received. He made only one brief mention of AI in the entire AMA, but it was enough for the press to seize on (see the quote in the screenshot above).

The statement doesn’t seem all that heretical, but it’s certainly press-worthy.

March 29, 2015 – Boao Forum (with Robin Li and Elon Musk)

The entire video is worth a listen (the sound quality isn’t great, but it’s manageable). If you’d like to skip ahead to Gates’ statements about AI, they begin at around 19:20.

Gates begins by noting that his views on the risks of artificial intelligence aren’t all that different from Musk’s, and goes on to recommend Nick Bostrom’s book Superintelligence as a good read on the topic (for interested Emerj fans, Bostrom was interviewed on the Emerj podcast about AI and existential risk in August 2014). He then explains his reasoning:

We have a general purpose learning algorithm that evolution has endowed us with, and it’s running in an extremely slow computer. Very limited memory size, (very limited) ability to send data to other computers – we have to use this funny mouth thing here…

He mentions that once such an algorithm is replicated outside of biology, it has a reasonable likelihood of surpassing human intelligence almost immediately:

You’ll be at a superhuman level almost as soon as that algorithm is implanted in silicon.

Gates continues, and mentions that he’s terribly surprised when people say that artificial intelligence is not a problem.

How can they not see what a huge challenge this is?

Clearly, Gates’ own assessment of the issue is more severe than in his ABC interview in 2013. We all have ideas and opinions that evolve – that’s not blameworthy – but it’s interesting to see that Bill’s own shift has been towards more concern about AI, not less.

January 22, 2016 – World Economic Forum

Bill generally frames drones as a force for good. He mentions agricultural drone applications and uses for search and rescue.

He addresses Stephen Hawking’s statements about AI potentially ending humanity. He expresses concern that AI may not share human goals, and says the issue deserves to be on the risk radar even if it isn’t a pressing concern today.

January 25, 2018 – World Economic Forum

Bill likens AI to “simply better software.”

One area he finds exciting is the potential of machine learning in manufacturing and factory settings, particularly the use of computer vision to monitor high-value or high-risk operations.

“The purpose of humanity is not just to sit behind a counter and sell things,” says Gates, in response to questions about AI creating job loss (a topic we saw as an important theme in our AI risk researcher consensus).

Conclusion

Frankly, I hope we’ll have more good examples of Gates quotes about artificial intelligence in the coming year or two. His opinions on the subject seem to be getting stronger, but he has yet to engage in any in-depth dialogue about it.

Perhaps his views will continue to develop as he thinks more about the subject.