In July of 2018, Daniel Faggella spoke at the Interpol–United Nations (UNICRI) Global Meeting on the Opportunities and Risks of Artificial Intelligence and Robotics for Law Enforcement. It was the first event held by the UN and INTERPOL on the use of AI in policing, security, and law enforcement.

Since the event, Daniel has been called upon for a variety of other United Nations and INTERPOL events, and Emerj has continued to cover AI’s applications in defense and counterterrorism.

When UNICRI planned to put together a report on Artificial Intelligence and Robotics for Law Enforcement, they sought to create a primer for police leaders interested in AI’s near-term applications. Daniel and the Emerj team helped with fleshing out the use-cases section, but the complete report covers a wide variety of topics worth considering for anyone interested in the intersection of law enforcement and AI. This article summarizes the contents of the report and Emerj’s coverage of real-world AI use-cases in law enforcement.

The UNICRI report and this article address some of the important questions below:

- What are the benefits of integrating AI into law enforcement?

- What can law enforcement do to forecast and mitigate weaponization of AI and robotics?

- How can law enforcement successfully implement certain use cases of AI and robotics in the real world?

- How can AI systems used in policing strike a balance between privacy and security?

- Why should the ethical usage of AI be clearly categorized and enforced in law enforcement as a frame of reference for other domains and industries?

The following sections summarize the UNICRI report’s major points and look in detail at working AI applications in law enforcement, with linked references to our other use-case coverage on Emerj.

A Breakdown of AI Applications and Threats

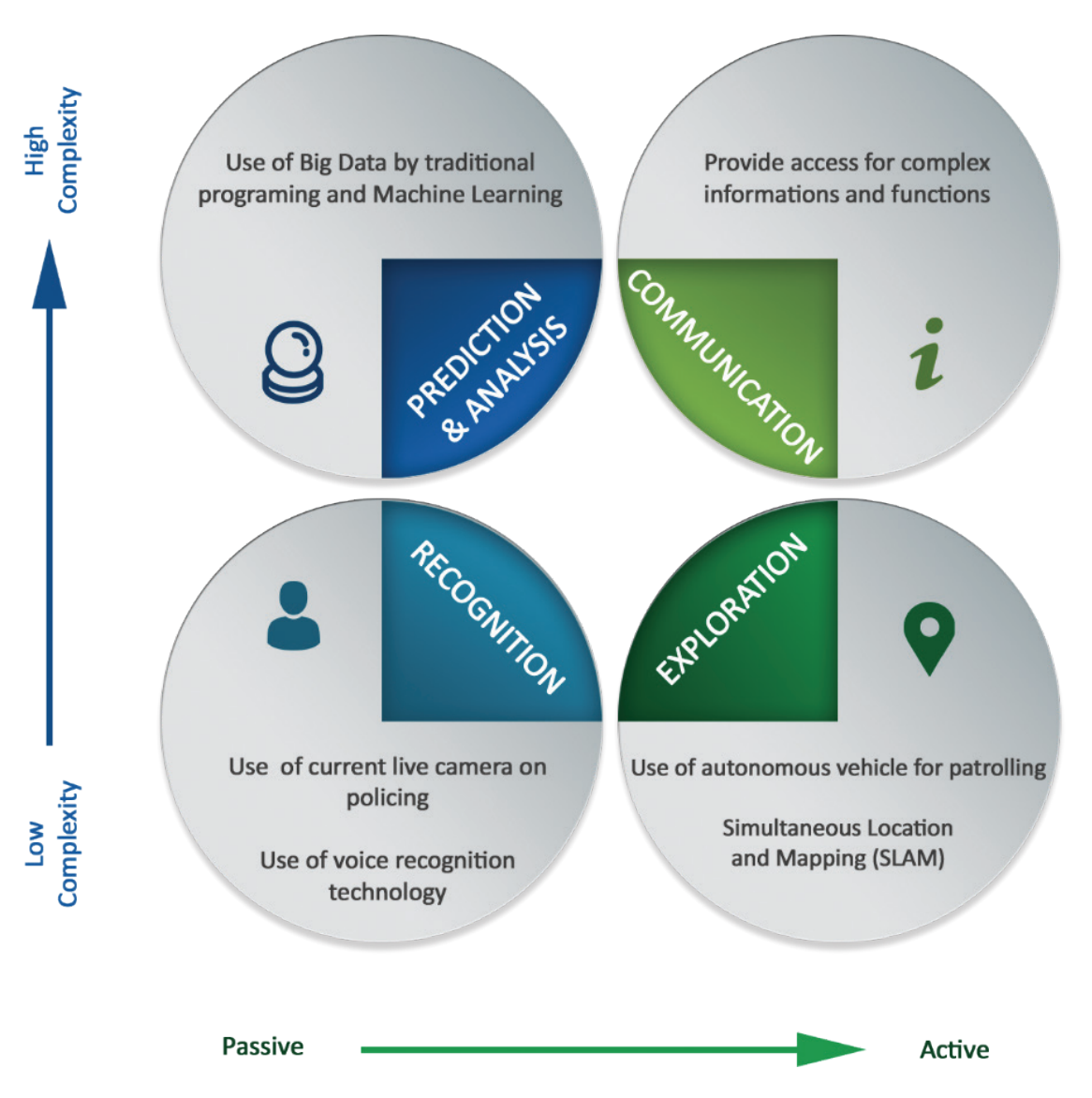

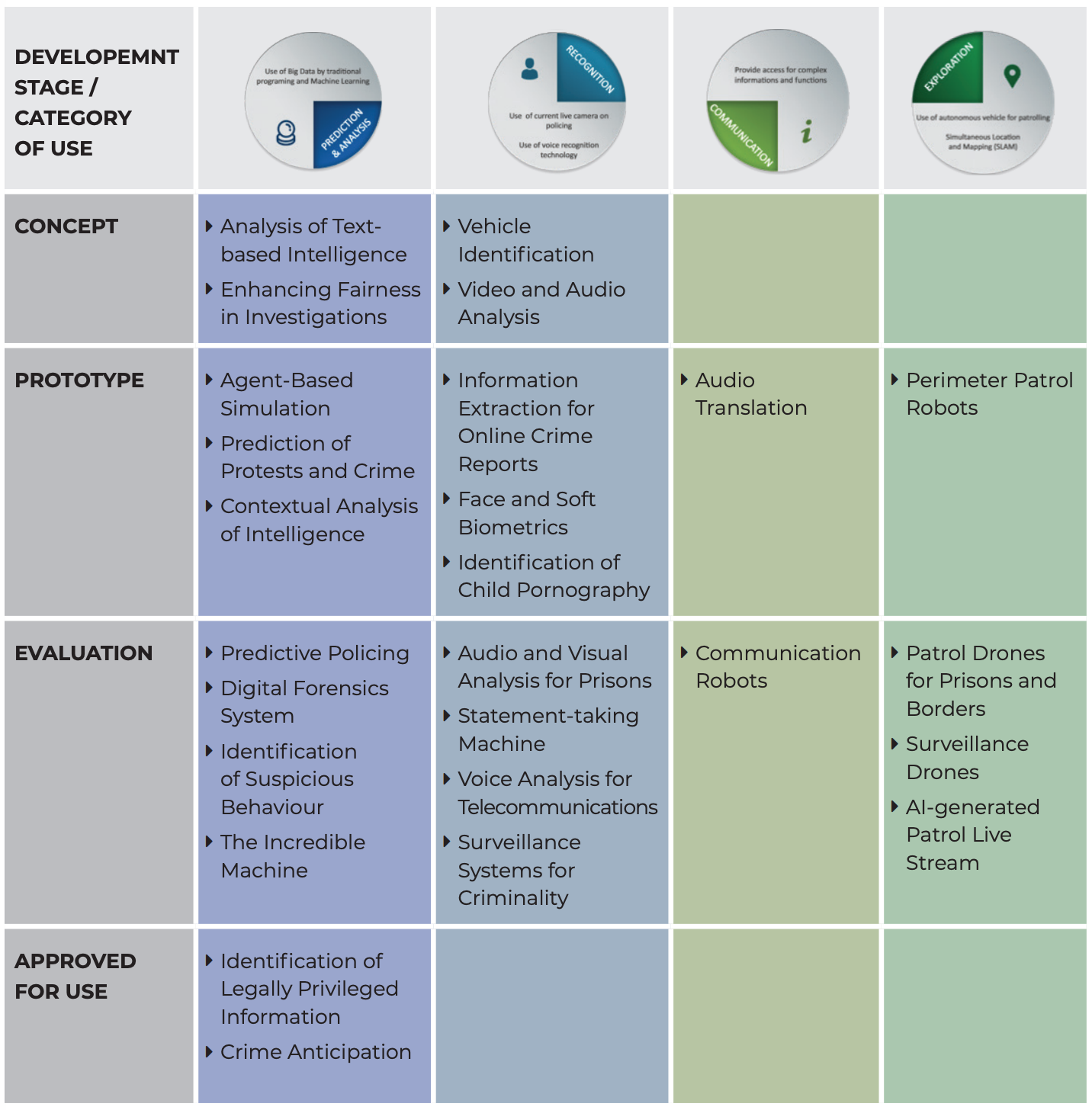

The report breaks law enforcement’s AI and robotics applications into four broad categories: Prediction and Analysis, Recognition, Exploration, and Communication – as represented in the graphic below:

Image source: Four Categories of AI Use Cases in Law Enforcement

(Report: Artificial Intelligence and Robotics for Law Enforcement)

The report says that, owing to current limitations of AI, law enforcement will for now focus only on narrow AI and semi-autonomous agents that augment law enforcement officials. Some of these use cases, which can be seen as benefits of AI in law enforcement, are discussed in detail in the next section.

Arguing that AI and robotics can be weaponized just as readily as they can be used for good in policing and crime prevention, the report states that “A recent report by 26 authors from 14 institutions (spanning academia, civil society, and industry) investigated the issue in depth and suggested that many of the same features that might make AI and robotics appealing for law enforcement (such as scale, speed, performance, distance) might make AI and robotics equally appealing for criminals and terrorist groups.”

That earlier report, according to the UNICRI report, identifies three main domains of attack:

- Digital attacks, such as automated spear phishing, automated discovery and exploitation of cyber-vulnerabilities.

- Political attacks, such as the proliferation of fake news or media to generate confusion or conflict or face-swapping and spoofing tools to manipulate video and endanger trust in political figures or even result in the validity of evidence being questioned in court.

- Physical attacks, such as face-recognizing armed drones or drones smuggling contraband.

In the context of digital attacks, the report further notes that AI could be used either to directly carry out a harmful act or to subvert another AI system by poisoning data sets.

The report warns that although the weaponization of AI and robotics has not yet spread through society as much as expected, the coming years will see a rise in the use of AI and robotics for criminal and terrorist purposes.

For instance, the report cites real-world malicious incidents, such as drones used to deliver a radioactive source to the Japanese Prime Minister’s office in Tokyo and drones used as unmanned aerial improvised explosive devices by the Islamic State of Iraq and the Levant (ISIL).

To combat this potential criminal use, the report advises law enforcement to act now by adopting a strategic approach and consciously investing effort and resources in AI for policing.

Robotics and AI in Policing – Use-Cases Exploration

Emerj was honored to help UNICRI with real-world examples of AI and robotics case studies and applications used in law enforcement – but the useful framework for categorizing the use-cases came from the authors of the report, and the folks at INTERPOL.

UNICRI and INTERPOL Use-Case Graphic

While there are a number of potentially useful “lenses” for analyzing AI applications in law enforcement, the report uses the same four broad categories mentioned above, combined with criteria about the relative maturity of each application. Here is a sample graphic from the full report:

Image source: Result of the Use Cases Session

(Report: Artificial Intelligence and Robotics for Law Enforcement)

In the coming two years, we expect to see many more novel computer vision applications make their way into the mainstream of first-world police forces – with predictive policing playing an increasing role in extremely well-funded cities of advanced nations. Some of this increase in adoption will come from a natural, slow upgrading of IT tools – but some will come from a maturing AI vendor ecosystem that is capable of building AI-enabled tools with simpler and more intuitive user interfaces.

Policing-Related AI Use-Case Coverage on Emerj

For more detail, we recommend that readers explore our in-depth use-case coverage of the following topics:

- Law Enforcement Robotics and Drones – 5 Current Applications, which covers:

  - Dubai’s Police Robot

  - South Korean Prison Surveillance

  - SGR A1 for Korean Border Patrol

  - Knightscope’s Airport Security Bot

  - DJI Drones in Law Enforcement

- Facial Recognition in Law Enforcement – 6 Current Applications

- AI and Machine Vision for Law Enforcement – Use-Cases and Policy Implications

- Artificial Intelligence for Government Surveillance – 7 Unique Use-Cases, which covers:

  - Robotic Bird Surveillance

  - Smart Glasses

  - AI-enabled Body Cameras

  - EEG Headbands

  - AI-enabled Uniforms

  - AI-enabled Wristbands

  - Chinese Social Credit Score

- Artificial Intelligence in the Chinese Military – Current Initiatives

- Artificial Intelligence at the FBI – 6 Current Initiatives and Projects, which covers:

  - Facial Recognition

  - Fingerprint Identification

  - DNA Matching

  - Cybersecurity

  - Insider Threat Identification

  - Business Process Management

Striking An Ethical Balance – Privacy and Security

The AI and Robotics for Law Enforcement report states that as use cases increasingly expand into enhanced surveillance capabilities, concerns over ethics and the human right to privacy inevitably arise.

Considering the several philosophical, contextual and situational complexities that go into determining the ethical use of AI and robotics in law enforcement, the report lays down four fundamental features to strike “a balance between security and privacy:”

- Fairness: Refraining from breaching rights such as freedom of expression, presumption of innocence, etc.

  - Example: If an AI system determines that men (or men of a specific race) are more likely to have been the perpetrators of a violent crime – should that pattern in the training data influence which potential perpetrators are followed up with?

- Accountability: Humans and machines must be accountable at an institutional and organizational level.

  - Example: If an AI system aids in a wrongful accusation, or if errors in an AI surveillance tool result in personal losses to an innocent individual – who will be accountable for the error and how will it be addressed? Will the creators of the system be called into question, will those in charge of the upkeep of the algorithms be in some way responsible to the public – and if so – how?

- Transparency: Decisions taken by an AI system must be transparent, not a “black box.”

  - Example: If an AI system instructs police as to where they should be patrolling in order to prevent violent crime – will the basis of these recommendations (possibly the factors weighed, and how they were weighed) be clear to human users?

- Explainability: Decisions taken by an AI system must be understandable by humans.

  - Example: If an AI system flags certain communication channels as high risk or worth investigating, can it lay out the reasons why one message (or person) was flagged – but not another? Can a user ask “why” – or prompt a breakdown of the machine’s conclusions?

These tenets will be interpreted differently in different countries. For almost all nations, however, it seems likely that essentially all modes of surveillance will be improved with the advent of AI – and this inevitable wave is something that each individual society will grapple with.*

The report references some of the larger international bodies involved in the development of AI ethical principles, including:

- “The Institute of Electrical and Electronics Engineers (IEEE), who have issued a global treatise regarding the Ethics of Autonomous and Intelligent Systems (Ethically Aligned Design), to align technologies to moral values and ethical principles…” (we have covered the IEEE’s Ethically Aligned Design work in a previous in-depth article).

- “The European Parliament has also proposed an advisory code of conduct for robotics engineers to guide the ethical design, production, and use of robots, as well as a legal framework considering legal status to robots to ensure certain rights and responsibilities.”

The authors of the report argue that law enforcement AI use cases can serve as important test cases that set the norms and bodies for the ethical use of AI and robotics in other industries and organizations, and that they are worth considering at this relatively nascent phase of adoption.

We’d like to give a special thanks to the folks at UNICRI and INTERPOL for creating a forum to explore AI in law enforcement, and for their efforts in compiling the original report (linked at the beginning of this document) that this article is based upon.

* For further details on this topic, listen to our podcast on How AI Ethics Impacts the Bottom Line – An Overview of Practical Concerns, or watch Daniel’s short YouTube video titled AI Ethics: Transparency, Accountability, and Moving Past Virtue Signalling.

Header image credit: CUInsight