This article was written in partnership with the IEEE. For more information about content partnerships, visit the Emerj Partnerships page.

Until somewhat recently, “moral concerns” about artificial intelligence would have been seen as absurd, something relegated to science fiction. To some AI researchers, it still seems far too early for “ethics” and “AI” to be used in the same sentence.

Today’s concerns aren’t just about Schwarzenegger movies and killer paper clip machines, however. Some members of the AI research community believe that newer concerns, such as AI bias (by race, gender, or other criteria), personal privacy, and algorithmic transparency (having clarity on the rules and methods by which machines make decisions), are the more pressing issues of the day.

The Institute of Electrical and Electronics Engineers (IEEE) has been developing technology standards for decades, both for popular technologies like Wi-Fi and for more niche ones. In 2016, it decided to do something new: to establish ethical standards around autonomous and intelligent technologies. In December 2016, IEEE published version 1 of a report titled “Ethically Aligned Design: A Vision for Prioritizing Human Well-being with Artificial Intelligence and Autonomous Systems.”

My interest was sparked when I heard about the new report (and when I joined the “Well-being” committee on a number of phone calls and brainstorms), but I realized that if I wanted to cover this research, I’d have to do so in a way that would bring insights and value to my business readers. So I asked the same question I always ask when I’m writing a piece for the Emerj audience:

“…why should business leaders care about this NOW?”

This isn’t because I think business people are cold and don’t care about ethics or moral concerns. Rather, it’s because business leaders have pressing current issues to solve, and they don’t have time to think about threats 30 years out. Their concerns have to do not only with abstract moral reasoning, but with keeping their employees paid and keeping their business alive by generating revenue and beating competitors in the marketplace.

So I decided to speak to the Chairs of the IEEE P7000™ Standards Working Groups responsible for putting these standards together, and I asked them just two simple questions:

- Which types of businesses should be considering your standard, and why? (i.e. Why are these standards relevant to business people now?)

- What would adherence to this standard look like in a real business setting?

My goal wasn’t to prove or disprove the usefulness of AI’s ethical concerns for businesspeople, but rather to provide a platform for our business leader readers here at Emerj to explore them. When it comes to the ultimate mission of Emerj, few tasks are more relevant than sparking moral discourse around the directions our technologies might be taking us.

Background on the IEEE’s Global Initiative on Ethics of Autonomous and Intelligent Systems (“The IEEE Global Initiative”)

The IEEE Global Initiative’s mission is as follows:

To ensure every stakeholder involved in the design and development of autonomous and intelligent systems is educated, trained, and empowered to prioritize ethical considerations so that these technologies are advanced for the benefit of humanity.

In my talks with IEEE leadership (particularly with John C. Havens, and in my interview with Konstantinos Karachalios – Managing Director of the IEEE Standards Association), it seemed clear that the first version of Ethically Aligned Design is intended to spark conversation and flesh out possibilities – as opposed to imposing hard boundaries around “soft” ethical issues right away. This seems to be the right way to start things off, and I recommend readers take on the same mentality of openness in reading the tenets laid out below.

In the sections of the article below we explore the eleven Standards Working Groups comprising the IEEE P7000 series of standards, all of which were inspired by members of The IEEE Global Initiative in writing Ethically Aligned Design. Each section includes a summary of the Standards Working Group (supplied by IEEE), and most sections also include the responses of one or more Chairs of these Working Groups to the two questions I mentioned above.

Note that I only mention the Chairs who replied and are responsible for the answers seen below, and note that while many of these Chairs have many distinctions and titles, I’ve chosen one that seems most pertinent to this article, and I’ve included links to learn more about the individual Chairs themselves.

Without further ado, we’ll dive into the “meat” of the P7000 Standards’ Working Groups and the Chairs’ respective statements about the potential business relevance of some of AI’s ethical concerns:

P7000 – Model Process for Addressing Ethical Concerns During System Design

Standard summary:

P7000 outlines an approach for identifying and analyzing potential ethical issues in a system or software program from the onset of the effort. The values-based system design method addresses ethical considerations at each stage of development to help avoid negative unintended consequences while increasing innovation.

(Source / contact info for the P7000 working group)

Responding chairs:

John Havens, Executive Director, The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems

Dr. Sarah Spiekermann-Hoff, Chair of the Institute for Management Information Systems at Vienna University of Economics and Business (WU Vienna)

Which types of businesses should consider using your standard, and why? (i.e. Why are these standards relevant to business people now?)

Any type of business that builds or deploys technical systems can use P7000. It will help these businesses sharpen their customer value proposition by focusing the entire system design process around eliciting the value qualities a system can have. Most importantly, by following the P7000 process, any human values at stake or potentially undermined by a system will be identified, and mechanisms to handle them will be proposed. In this way, companies have ethical or value-related challenges on their radar from the start of a project and can mitigate many risks early on at the technical level.

What would adherence to this standard look like in a real business setting?

Adherence to P7000 would mean that new tech projects undergo an early phase of envisioning the future, applying philosophically informed decision tools to understand their value landscape. This takes some time, but not too much. It is the outset of a rigorous, values-focused development phase.

P7001 – Transparency of Autonomous Systems

Standard summary:

P7001 provides a Standard for developing autonomous technologies that can assess their own actions and help users understand why a technology makes certain decisions in different situations. The project also offers ways to provide transparency and accountability for a system to help guide and improve it, such as incorporating an event data recorder in a self-driving car or accessing data from a device’s sensors.

(Source / contact info for the P7001 working group)

Responding chairs:

Alan Winfield, Professor of Robot Ethics, University of the West of England

Nell Watson, Artificial Intelligence & Robotics Faculty, Singularity University

Which types of businesses should consider using your standard, and why? (i.e. Why are these standards relevant to business people now?)

Any business developing autonomous systems (i.e. autonomous robots or AIs – including of course robots with embedded AIs) that – if they make a bad decision – could cause harm to humans. This includes physical harm (injury or death), financial or psychological harm, and covers a very wide range of systems and applications.

High profile examples include: Driverless cars, drones and assisted living (care) robots.

And soft AI systems that fall into the same category would include medical diagnosis AIs (even if they are only ‘recommender’ systems), loan/mortgage application recommender systems, or ‘companion’ chatbots.

A good test of whether a system might fall within the category I have in mind is to ask yourself if the developers might end up in court after their system goes wrong and causes some serious harm.

What would adherence to this standard look like in a real business setting?

For P7001 this is more difficult to answer since we’re still at an early stage of drafting, but the standard will define measurable, testable ‘levels’ of transparency for each of several ‘stakeholder’ groups. These groups include users, safety certification engineers and accident investigators (and others we’re still working on). It’s easy to see that the transparency needed by an elderly person so that she can understand what her care robot is doing is very different to what an engineer needs in order to certify its safety. Similarly, the transparency needed by a passenger in a driverless car is completely different to that needed by an accident investigator. Thus, we are effectively drafting several standards within P7001 – each one defines levels of transparency for a different stakeholder group.

A business developing an autonomous system would use P7001 to self-assess the transparency of their system against the levels we define in P7001, for each of the different stakeholder groups and – we hope – be encouraged to increase the level of transparency in their product using the higher levels of transparency (which we expect will be demanding for designers to achieve) as targets to aim for.

The benefit to them will be the ability to claim that their robot meets IEEE P7001 level 4 for users, level 5 for certification engineers, etc. We don’t expect P7001 to be mandatory, since it is intended as a generic, foundational standard. However, we hope there will be spin-out standards, i.e. P7001.1, P7001.2 … each for a specific application domain. Some of those, especially for safety-critical AI systems (like driverless car or drone autopilots), might become mandated within regulation.
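The self-assessment described above could be recorded in something as simple as the sketch below, which tracks an achieved and a target transparency level for each stakeholder group and reports the remaining gaps. The stakeholder names come from the answer above; the 0 to 5 scale, the example levels, and the `TransparencyAssessment` class are hypothetical, since P7001’s actual levels were still being drafted at the time of writing.

```python
from dataclasses import dataclass, field

# Hypothetical scale: P7001's actual transparency levels were still being drafted.
MAX_LEVEL = 5


@dataclass
class TransparencyAssessment:
    """Self-assessed transparency levels per stakeholder group (illustrative only)."""
    achieved: dict[str, int] = field(default_factory=dict)
    targets: dict[str, int] = field(default_factory=dict)

    def record(self, stakeholder: str, achieved: int, target: int) -> None:
        # Reject levels outside the assumed 0..MAX_LEVEL scale.
        for level in (achieved, target):
            if not 0 <= level <= MAX_LEVEL:
                raise ValueError(f"level {level} is outside 0..{MAX_LEVEL}")
        self.achieved[stakeholder] = achieved
        self.targets[stakeholder] = target

    def gaps(self) -> dict[str, int]:
        """Levels still missing for each group that is below its target."""
        return {
            group: self.targets[group] - level
            for group, level in self.achieved.items()
            if level < self.targets[group]
        }

    def claim(self) -> str:
        """Summarize achieved levels in the style 'level 4 for users'."""
        return ", ".join(f"level {level} for {group}" for group, level in self.achieved.items())


assessment = TransparencyAssessment()
assessment.record("users", achieved=4, target=5)
assessment.record("certification engineers", achieved=5, target=5)
assessment.record("accident investigators", achieved=2, target=4)
print(assessment.claim())
print(assessment.gaps())  # {'users': 1, 'accident investigators': 2}
```

Keeping targets alongside achieved levels reflects the hope expressed above that the higher levels serve as goals to aim for, not just labels to report.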

P7002 – Data Privacy Process

Standard summary:

P7002 specifies how to manage privacy issues for systems or software that collect personal data. It will do so by defining requirements that cover corporate data collection policies and quality assurance. It also includes a use case and data model for organizations developing applications involving personal information. The standard will help designers by providing ways to identify and measure privacy controls in their systems utilizing privacy impact assessments.

(Source / contact info for the P7002 working group)

Responding chairs:

Aurélie Pols, Chief Visionary and Co-founder, Mind Your Privacy

Which types of businesses should consider using your standard, and why? (i.e. Why are these standards relevant to business people now?)

“All data-driven organizations / businesses that at some point touch upon uniquely identifying an individual or identifiable group of individuals” is the straightforward answer to the first part of your question. And as data becomes the new electricity, in which companies increasingly put their faith to spur growth and defend their margins, the consequences of how data about individuals (or their proxies) is used increasingly influence our lives.

This data frenzy erodes and reshuffles our societies’ Fundamental Rights, through the choices that we, our families, children or loved ones signal, and the computed decisions that stem from such choices.

In Europe, “Dignity” is the first of the six titles of rights recognized within the Charter of Fundamental Rights of the European Union. Article 1 states that human dignity is inviolable, while Articles 7 and 8 cover, respectively, respect for private and family life and the protection of personal data.

These fundamental principles have influenced the reform of the data protection legislation dating back to 1995.

By May 2018, the General Data Protection Regulation (GDPR) will apply to all businesses that process personal data of data subjects who are in the Union, regardless of where the business is established (under specific circumstances).

To ensure accountability, the burden of proof is reversed, and the GDPR also introduces new fines of up to 4% of global annual turnover or 20 million euros, whichever is higher.
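The “whichever is higher” rule means the 20 million euro figure sets the ceiling for smaller companies, while the 4% figure takes over once global turnover exceeds 500 million euros. A minimal sketch of that arithmetic, using only the two figures quoted above (the function name is our own, and it computes the maximum possible fine, not what a regulator would actually impose in a given case):

```python
def gdpr_fine_ceiling_eur(global_annual_turnover_eur: float) -> float:
    """Maximum fine under the rule quoted above: 4% of global annual
    turnover or EUR 20 million, whichever is higher."""
    four_percent = global_annual_turnover_eur * 4 / 100
    return float(max(four_percent, 20_000_000))


print(gdpr_fine_ceiling_eur(100_000_000))    # 20000000.0 (the EUR 20M figure applies)
print(gdpr_fine_ceiling_eur(2_000_000_000))  # 80000000.0 (4% of turnover applies)
```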

What would adherence to this standard look like in a real business setting?

From the P7002 current outline:

“The objective is to define a process model that defines how best to perform a QA-oriented repeatable process regarding the use case and data model (including metadata) for data privacy oriented considerations regarding products, services and systems utilizing employee, customer or other personal data.

By providing specific steps, diagrams and checklists, users of this standard will be able to perform a requirements based conformity assessment on the specific privacy practices &/or mechanisms. Privacy Impact Assessments (PIAs) will also be presented as a tool for both identifying where privacy controls and measures are needed and for confirming they are in place. Elements of PIAs also act as a data system of record.“

Typically, and in line with the GDPR, this would mean at minimum having a process in place to determine when a (D)PIA is needed, as set out in Article 35 of the GDPR:

“Where a type of processing in particular using new technologies, and taking into account the nature, scope, context and purposes of the processing, is likely to result in a high risk to the rights and freedoms of natural persons, the controller shall, prior to the processing, carry out an assessment of the impact of the envisaged processing operations on the protection of personal data.”

Remedial measures would then be established to limit risk, and both the detection of high-risk processing and these measures would become part of a robust process.
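A process for deciding when a (D)PIA is needed often starts as a screening checklist. The sketch below encodes the three cases that Article 35(3) GDPR lists as requiring a DPIA in particular; that list is not exhaustive, so the hypothetical `other_high_risk_indicators` field stands in for whatever further criteria an organization or its supervisory authority applies. The `ProcessingActivity` fields and the `needs_dpia` function are illustrative assumptions, not part of P7002.

```python
from dataclasses import dataclass


@dataclass
class ProcessingActivity:
    """Hypothetical description of a planned processing operation."""
    name: str
    automated_evaluation_with_significant_effects: bool = False  # e.g. profiling-based loan decisions
    large_scale_special_category_data: bool = False  # e.g. health or criminal-offence data at scale
    large_scale_public_monitoring: bool = False  # e.g. CCTV over a publicly accessible area
    other_high_risk_indicators: tuple[str, ...] = ()  # further criteria from internal review


def needs_dpia(activity: ProcessingActivity) -> tuple[bool, list[str]]:
    """Screening aid only: return (needed, reasons) for a planned activity."""
    reasons: list[str] = []
    if activity.automated_evaluation_with_significant_effects:
        reasons.append("Art. 35(3)(a): systematic, extensive automated evaluation incl. profiling")
    if activity.large_scale_special_category_data:
        reasons.append("Art. 35(3)(b): large-scale special-category or criminal-offence data")
    if activity.large_scale_public_monitoring:
        reasons.append("Art. 35(3)(c): large-scale systematic monitoring of a public area")
    reasons.extend(activity.other_high_risk_indicators)
    return bool(reasons), reasons


needed, why = needs_dpia(
    ProcessingActivity("mortgage scoring", automated_evaluation_with_significant_effects=True)
)
print(needed, why)
```

Returning the reasons alongside the yes/no answer means the screening result can itself be kept as part of the record of processing decisions.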

P7003 – Algorithmic Bias Considerations

Standard summary:

P7003 provides developers of algorithms for autonomous or intelligent systems with protocols to avoid negative bias in their code. Bias could include the use of subjective or incorrect interpretations of data, like mistaking correlation for causation. The project offers specific steps to take for eliminating issues of negative bias in the creation of algorithms. The standard will also include benchmarking procedures and criteria for selecting validation data sets, establishing and communicating the application boundaries for which the algorithm has been designed, and guarding against unintended consequences.

(Source / contact info for the P7003 working group)

Responding chairs:

Ansgar Koene, Casma Senior Research Fellow, Faculty of Science, University of Nottingham

Paula Boddington, University of Oxford, Department of Computer Science, Department Member

Which types of businesses should consider using your standard, and why? (i.e. Why are these standards relevant to business people now?)

Businesses that should be considering P7003 are those that are using, or plan to use, automated decision (support) systems (which may or may not involve AI/machine learning) as part of a process that affects customer experience. Typical examples would include anything related to personalization or individual assessment. Any system that will produce different results for some people than for others is open to challenges of being biased. Examples could include:

- Security camera applications that detect theft or suspicious behavior

- Marketing automation applications that calibrate offers, prices, or content to an individual’s preferences and behavior

- etc…

Also included is any system that performs a filtering function, making some items easier for customers to find than others (e.g. search engines or recommendation systems).

The requirements specification provided by the P7003 standard will allow creators to communicate to users, and regulatory authorities, that up-to-date best practices were used in the design, testing and evaluation of the algorithm to attempt to avoid unintended, unjustified and inappropriate differential impact on users.

These are relevant NOW because of increasingly vocal concerns in the media (e.g. https://www.theguardian.com/news/datablog/2013/aug/14/problem-with-algorithms-magnifying-misbehaviour) and among legislators (e.g. https://ec.europa.eu/futurium/en/blog/open-call-tenders-algorithmic-awareness-building-study) about possible hidden biases created or worsened by algorithms.

What would adherence to this standard look like in a real business setting?

Since the standard aims to allow for the legitimate ends of different users, such as businesses, it should assist businesses in assuring customers that they have taken steps to ensure fairness appropriate to their stated business aims and practices. For example, it may help customers of insurance companies to feel more assured that they are not getting a worse deal because of the hidden operation of an algorithm.

As a practical example, an online retailer developing a new product recommendation system might use the P7003 standard as follows:

Early in the development cycle, after the intended functions of the new system have been outlined, P7003 guides the developer through a process of considering the likely customer groups to identify whether there are subgroups that will need special consideration (e.g. people with visual impairments). In the next phase of development, the developer establishes a testing dataset to validate whether the system is performing as desired. Referencing P7003, the developer is reminded of methods for checking whether all customer groups are sufficiently represented in the testing data, to avoid reduced quality of service for certain customer groups.

Throughout the development process P7003 challenges the developer to think explicitly about the criteria that are being used for the recommendation process and the rationale, i.e. justification, for why these criteria are relevant and why they are appropriate (legally and socially). Documenting these will help the business respond to possible future challenges from customers, competitors or regulators regarding the recommendations produced by this system. At the same time this process of analysis will help the business to be aware of the context for which this recommendation system can confidently be used and which uses would require additional testing (e.g. age ranges of customers, types of products).
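The representation check in the retailer example might look like the minimal sketch below: it compares each customer group’s share of the test set with its expected share of the customer base and flags groups that fall well short. P7003 does not prescribe this particular test; the `underrepresented_groups` function, the reference shares, and the 0.5 tolerance are assumptions chosen for illustration.

```python
from collections import Counter


def underrepresented_groups(test_labels, reference_shares, tolerance=0.5):
    """Flag customer groups whose share of the test set is below
    `tolerance` times their expected share of the customer base.

    test_labels: one group label per test example.
    reference_shares: mapping of group -> expected share (0 to 1).
    Returns {group: (observed_share, expected_share)} for flagged groups.
    """
    counts = Counter(test_labels)
    total = sum(counts.values())
    if total == 0:
        raise ValueError("test set is empty")
    flagged = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        if observed < tolerance * expected:
            flagged[group] = (observed, expected)
    return flagged


# Hypothetical test set: 97 examples from general customers, 3 from
# customers with visual impairments, who are assumed to be 10% of the base.
labels = ["general"] * 97 + ["visually_impaired"] * 3
print(underrepresented_groups(labels, {"general": 0.9, "visually_impaired": 0.1}))
# {'visually_impaired': (0.03, 0.1)}
```

A flagged group is a prompt to collect more test data or to document the limitation, which also feeds the record of application boundaries described above.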

P7004 – Standard on Child and Student Data Governance

Standard summary:

P7004 provides processes and certifications for transparency and accountability for educational institutions that handle student data, with the aim of ensuring students’ safety. The standard defines how to access, collect, share, and remove data related to children and students in any educational or institutional setting where their information will be accessed, stored, or shared.

(Source / contact info for the P7004 working group)

P7005 – Standard on Employer Data Governance

Standard summary:

P7005 provides guidelines and certifications on storing, protecting, and using employee data in an ethical and transparent way. The project recommends tools and services that help employees make informed decisions with their personal information. The standard will help provide clarity and recommendations both for how employees can share their information in a safe and trusted environment and for how employers can align with employees in this process while still utilizing information needed for regular workflows.

(Source / contact info for the P7005 working group)

Responding chairs:

Ulf Bengtsson, Manager at Sony Mobile Communications AB

Which types of businesses should consider using your standard, and why? (i.e. Why are these standards relevant to business people now?)

Every business with electronic information on their employees. The bigger the company, the more important it is. There are mainly two reasons for this:

- Legislation sets a minimum standard in more than 100 countries around the world. Following a standard that satisfies these different legislative criteria will save money in the long run. E.g. fines for not complying with the EU GDPR can be up to 4% of annual turnover, or €20,000,000, whichever is higher (http://www.eugdpr.org/).

- To maintain employee integrity, thus building a good company reputation that will facilitate the recruitment of top talent.

What would adherence to this standard look like in a real business setting?

I don’t see specific business cases based on adherence to this standard, unless potential customers require adherence as a condition for doing business, as with ISO 9000. This is more of a company infrastructure matter, ensuring that the business will be sustainable (see my answer to the first question).

P7006 – Standard on Personal Data AI Agent Working Group

Standard summary:

P7006 addresses concerns raised about machines making decisions without human input. This standard hopes to educate government and industry on why it is best to put mechanisms into place to enable the design of systems that will mitigate the ethical concerns when AI systems can organize and share personal information on their own. Designed as a tool to allow any individual to essentially create their own personal “terms and conditions” for their data, the AI Agent will provide a technological tool for individuals to manage and control their identity in the digital and virtual world.

(Source / contact info for the P7006 working group)

Chairs:

Gry Hasselbalch, Co-founder, DataEthics

Which types of businesses should consider using your standard, and why? (i.e. Why are these standards relevant to business people now?)

Innovation means meeting new requirements in new ways. The standard addresses a shift in the big data era that businesses must stay ahead of to keep a competitive edge. Consumers are starting to worry about the lack of control over their data and the lack of insight they have into the rationale and mechanisms that define their agency in the big data environment, and they’ve begun to act by seeking out services and businesses that provide them with control and agency.

Legislation is adapting similarly, with tough new requirements for businesses regarding e.g. data profiling, informed consent, transparency and accountability (the new EU data protection legislation effective May 2018 is an example of that). Companies that develop services and products with these changes in mind are not only dealing with upcoming formal requirements, but are also addressing future societal demands in general.
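To make the idea of personal “terms and conditions” from the P7006 summary more concrete, here is a minimal sketch of how an agent might check incoming data requests against an individual’s own rules. The `DataRequest` and `PersonalTerms` types, the purpose-per-category model, and the default-deny behavior are all hypothetical design choices, not anything the working group has specified.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DataRequest:
    """Hypothetical request from a service to use one category of personal data."""
    requester: str
    data_category: str  # e.g. "location", "purchase_history"
    purpose: str  # e.g. "delivery", "advertising"


@dataclass
class PersonalTerms:
    """An individual's own terms: the purposes each data category may be used for,
    plus requesters that are always refused."""
    allowed_purposes: dict[str, set[str]] = field(default_factory=dict)
    blocked_requesters: set[str] = field(default_factory=set)

    def evaluate(self, request: DataRequest) -> bool:
        if request.requester in self.blocked_requesters:
            return False
        # Default deny: a category the individual has not addressed is never shared.
        return request.purpose in self.allowed_purposes.get(request.data_category, set())


terms = PersonalTerms(
    allowed_purposes={"location": {"delivery"}, "purchase_history": {"recommendations"}},
    blocked_requesters={"broker.example"},
)
print(terms.evaluate(DataRequest("shop.example", "location", "delivery")))     # True
print(terms.evaluate(DataRequest("shop.example", "location", "advertising")))  # False
print(terms.evaluate(DataRequest("broker.example", "location", "delivery")))   # False
```

Default deny keeps control with the individual: a service has to match an explicit permission rather than rely on the absence of an objection.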

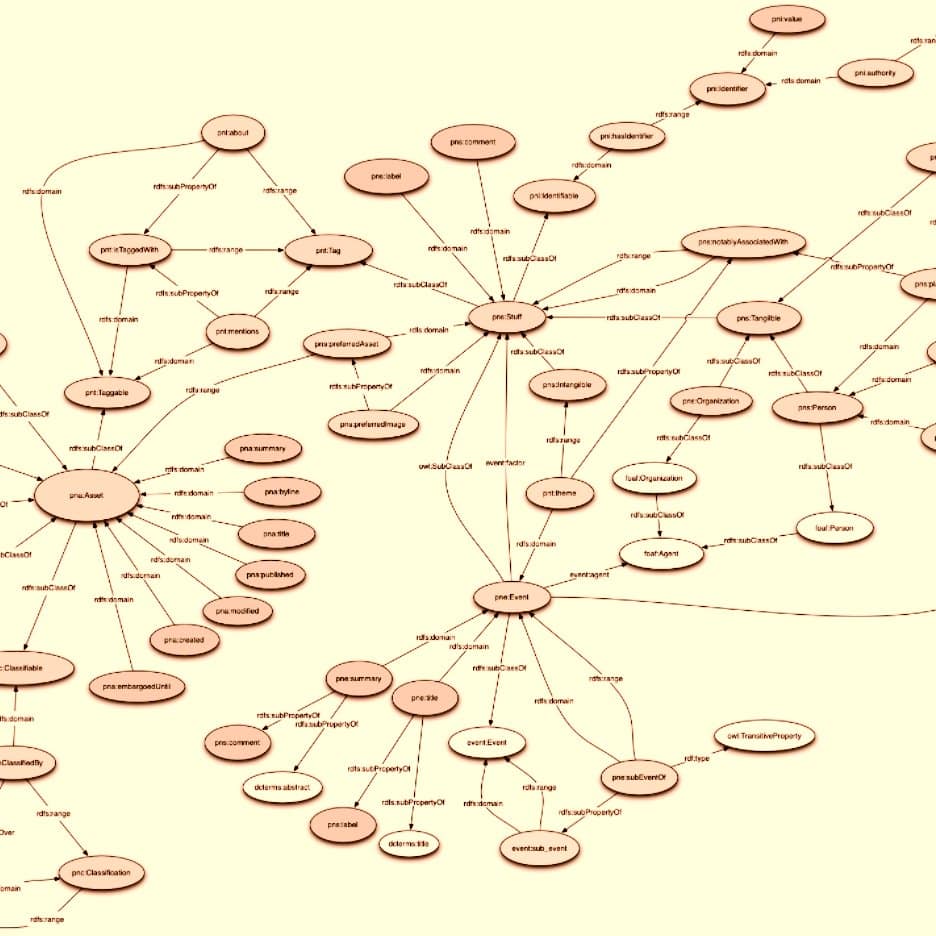

P7007 – Ontological Standard for Ethically Driven Robotics and Automation Systems

Standard summary:

P7007 establishes a set of ontologies with different abstraction levels that contain concepts, definitions and axioms that are necessary to establish ethically driven methodologies for the design of Robots and Automation Systems.

(Source / contact info for the P7007 working group)

Chairs:

Edson Prestes, Professor and Head of Phi-Robotics Research Group, UFRGS

Which types of businesses should consider using your standard, and why? (i.e. Why are these standards relevant to business people now?)

Any business that is involved, or plans to be involved, with Robotics & Automation (R&A). The general public and civil institutions are increasingly aware of the ethical implications of using AI and R&A systems in daily life. However, they do not have a clear terminology and guidelines for judging and addressing those implications. P7007 is aimed at filling this gap.

P7007 will certainly influence what stakeholders should expect from R&A systems, which in turn will affect how R&A products will be perceived from an ethical standpoint. We have no doubt that ethical considerations will add value to any R&A system in the near future. We believe that the companies joining P7007 will be one (or two) steps ahead of their competitors. By getting involved with this initiative right now, stakeholders will be able to make P7007 deeper, broader and stronger, and also be better prepared for the changes that are about to come. We also expect that P7007 will be relevant to companies that work in related domains, like machine learning, big data, IoT, etc.

What would adherence to this standard look like in a real business setting?

Several examples come immediately to mind. Government agencies will be pressured by the public to regulate the development of robots according to ethical standards. P7007 may be used as a starting point to create regulation, either as a set of mandatory requirements or as a reference document.

In such a scenario, companies would use P7007 as a manual to help them understand and comply with regulations. That could lead to new markets, as well as decrease business risk. On a more technical note, the ontologies in P7007 could be used as a framework in many scenarios, like defining the software structure for a specific ethical behavior or improving human-robot and robot-robot communications.

Regarding the first of these uses, a company could consider adding software modules to the robot to guarantee user data privacy and protection from external third-party manipulation.

Regarding the second, one can design interfaces to monitor and inform the user about any robot malfunction, abnormal situations or the robot’s daily activities. For instance, a robot nanny could inform parents about the time it spent with their kids and the activities performed, warning about unhealthy situations where the child lacks human contact, which can compromise their cognitive development.

P7008 – Standard for Ethically Driven Nudging for Robotic, Intelligent and Autonomous Systems

Standard summary:

P7008 establishes a delineation of typical nudges (currently in use or that could be created) that contains concepts, functions and benefits necessary to establish and ensure ethically driven methodologies for the design of the robotic, intelligent and autonomous systems that incorporate them. “Nudges” as exhibited by robotic, intelligent or autonomous systems are defined as overt or hidden suggestions or manipulations designed to influence the behavior or emotions of a user.

(Source / contact info for the P7008 working group)

Chairs:

Laurence Devillers, Professor of Computer Science, University of Paris-Sorbonne

Which types of businesses should consider using your standard, and why? (i.e. Why are these standards relevant to business people now?)

AI applications for nudging on computers, chatbots or robots are NOW increasing steadily around the world, within both the private and public sectors: for example in health, well-being (e.g. could AI tools make us drink less alcohol?) and education applications, in governmental public policy on taxes, in private-sector marketing, etc.

Why are the nudging standards relevant to business?

AI affords a tremendous opportunity not only to increase efficiencies and reduce costs, but to build nudging processes around responsible and ethical rules.

Deploying AI without anchoring it to robust compliance and core values may expose businesses to significant risks, including data privacy, health and safety issues. The potential fines and sanctions could be financially threatening.

Unintended consequences that emerge as the technology evolves can lead to reputational damage and, in the worst case, loss of customer trust and of shareholder value.

What would adherence to this standard look like in a real business setting?

Our intention is that this nudging standard will be used in multiple ways, including:

- During the development of robotic, intelligent and autonomous systems as a guideline, and

- As a reference of “types of positive nudge strategies” to enable a clear and precise communication among members from different communities that include robotics, intelligent and autonomous systems, ethics and correlated areas of expertise.

The use of this Standard will have several benefits that include:

- Formal definitions of nudges of a particular domain in a language independent representation;

- Tools for analyzing nudging functionality and benefits and their relationships with user acceptance and trust;

- Language to be used in the communication process among Robotic, Intelligent and Autonomous Systems from different manufacturers and users.

This standard is useful for REAL business use cases, such as these two real examples:

- ‘Nudge marketing’ as a highly effective strategy to push product sales (e.g. Nudge.ai)

- Home robot to nudge older people to stay social and active. Example: ElliQ, a robot companion for older people aims to promote activity and tackle loneliness by nudging them to take part in digital and physical activities.

Applications like these might benefit from more overt standards and practices around their intentions to influence human behavior. In the future, transparency about such intended behavior change (and the means by which it is encouraged) may be called into question, and companies with clear standards may be more likely to gain trust and avoid legal hiccups.

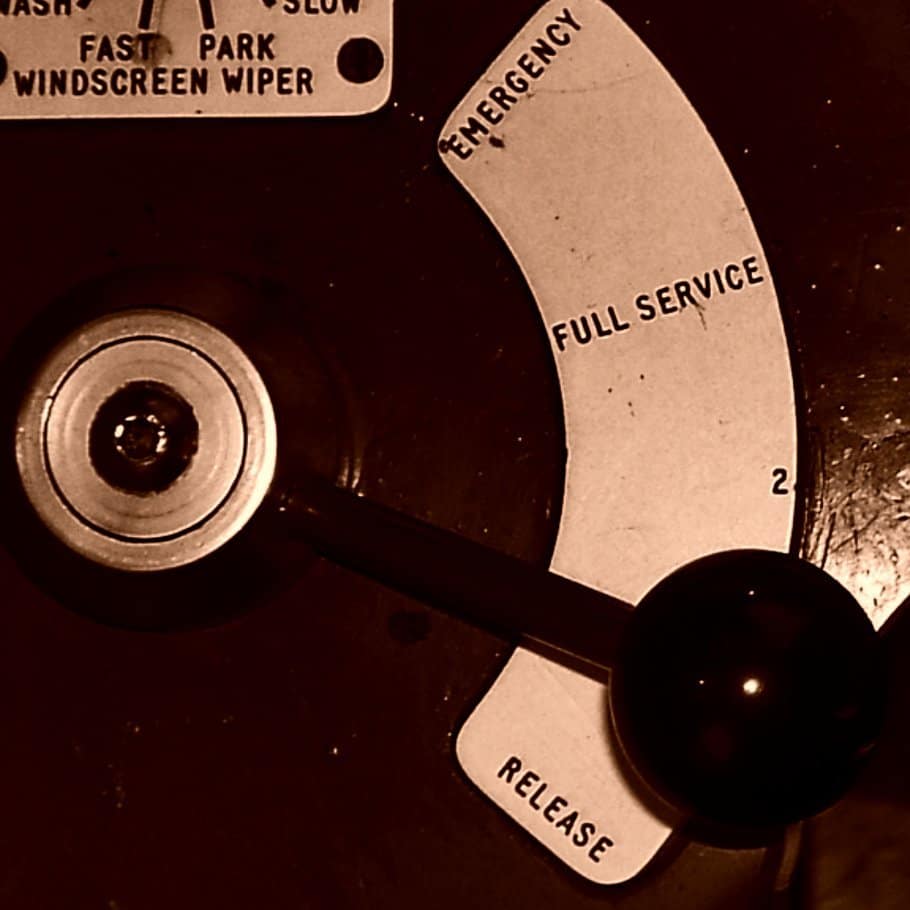

P7009 – Standard for Fail-Safe Design of Autonomous and Semi-Autonomous Systems

Standard summary:

P7009 establishes a practical, technical baseline of specific methodologies and tools for the development, implementation, and use of effective fail-safe mechanisms in autonomous and semi-autonomous systems. The standard includes (but is not limited to): clear procedures for measuring, testing, and certifying a system’s ability to fail safely on a scale from weak to strong, and instructions for improvement in the case of unsatisfactory performance. The standard serves as the basis for developers, as well as users and regulators, to design fail-safe mechanisms in a robust, transparent, and accountable manner.

(Source / contact info for the P7009 working group)

Chairs:

Danit Gal, Yenching Scholar at Peking University and Strategic Advisor to the iCenter, Tsinghua University

Alan Winfield, Professor of Robot Ethics, University of the West of England

Which types of businesses should consider using your standard, and why? (i.e. Why are these standards relevant to business people now?)

Every business, large, medium, small, or even a startup at its inception stage, designing, deploying, and using autonomous and semi-autonomous systems should be considering this standard. This is for the simple reason that nothing in this world is fail-proof. We design such systems to be as safe as possible, extending beyond commonplace human abilities to calculate, predict, and react to external threats in real time.

We excel in designing products to operate smoothly, yet often neglect to consider how they might cease operation in the case of a failure and what can and should be done then to ensure users’ safety. Being able to predict a wide variety of system failures and being equipped to manage and respond to them in real time is a core part of designing a safe system. Not having this key component as a part of our design means critically compromising on the quality of the product we deliver to our users.

What would adherence to this standard look like in a real business setting?

Adherence to this standard in a real business setting would mean making use of the technical baseline of specific methodologies developed to help engineers measure, test, and certify a system’s ability to fail safely. This gives developers the necessary tools to think about the different possibilities of system failure and devise methods of safely responding to them, at least until we can successfully prevent them.

The standard will be designed to be flexible enough to grow with evolving product specifications and user needs, but rigorous enough to provide robust fail-safe measurement tools. Some of the key applications we consider are autonomous vehicles, drones, companion robots, and autonomous weapon systems.

Imagine the following potential use-case:

- You sit in your autonomous car, driving your two kids to school and having your morning coffee before an important 9 AM meeting. Suddenly your car is hacked and you lose control over the autopilot.

- The hackers try to extort you: pay a million USD or be driven into a wall. What do you do?

- Perhaps you can press the kill-switch, but if you’re on a highway, stopping so suddenly puts you and others around you at risk.

- We need an alternative solution in which these intended or unintended malfunctions can be anticipated and properly addressed on the spot. For instance, a fail-safe protocol that overrides the compromised system, safely navigates the car to the side of the road, and shuts it down could save multiple lives and mitigate damage to the surrounding environment in the above scenario.

Systems fail. We must be ready for it when and where they do.
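The fail-safe response described in the scenario above can be sketched as a small state machine. The following is a minimal, hypothetical Python sketch, not part of P7009 or of any vehicle API: the mode names, the 5 km/h stopping threshold, and the one-step-per-tick speed model are illustrative assumptions. It shows the contrast the Chairs draw: instead of a kill-switch that halts the car in its lane, a supervisor switches to a controlled maneuver, slows down while moving to the shoulder, and only then stops and shuts down.

```python
from enum import Enum, auto

# Hypothetical operating modes for an illustrative fail-safe supervisor.
class Mode(Enum):
    NORMAL = auto()
    MINIMAL_RISK_MANEUVER = auto()  # slow down while steering to the shoulder
    SAFE_STOP = auto()              # vehicle halted off the travel lanes
    SHUT_DOWN = auto()              # autopilot disabled

STOP_SPEED_KPH = 5.0  # assumed threshold below which stopping is treated as safe


def next_mode(mode: Mode, compromised: bool, speed_kph: float, on_shoulder: bool) -> Mode:
    """Return the supervisor's next mode (hypothetical transition rules)."""
    if mode is Mode.NORMAL:
        # A detected compromise triggers a controlled maneuver, not an abrupt stop.
        return Mode.MINIMAL_RISK_MANEUVER if compromised else Mode.NORMAL
    if mode is Mode.MINIMAL_RISK_MANEUVER:
        # Stop only once the car is off the travel lanes and nearly stationary.
        if on_shoulder and speed_kph <= STOP_SPEED_KPH:
            return Mode.SAFE_STOP
        return Mode.MINIMAL_RISK_MANEUVER
    # SAFE_STOP is followed by shutdown; SHUT_DOWN is terminal.
    return Mode.SHUT_DOWN


def simulate(initial_speed_kph: float, decel_per_step_kph: float,
             steps_to_shoulder: int, attack_at_step: int, max_steps: int = 100):
    """Run a toy simulation and return a list of (step, mode, speed_kph)."""
    mode = Mode.NORMAL
    speed = initial_speed_kph
    maneuver_steps = 0  # steps spent moving toward the shoulder
    trace = []
    for step in range(max_steps):
        compromised = step >= attack_at_step
        on_shoulder = maneuver_steps >= steps_to_shoulder
        mode = next_mode(mode, compromised, speed, on_shoulder)
        if mode is Mode.MINIMAL_RISK_MANEUVER:
            speed = max(0.0, speed - decel_per_step_kph)
            maneuver_steps += 1
        elif mode in (Mode.SAFE_STOP, Mode.SHUT_DOWN):
            speed = 0.0
        trace.append((step, mode, speed))
        if mode is Mode.SHUT_DOWN:
            break
    return trace


if __name__ == "__main__":
    for step, mode, speed in simulate(100.0, 10.0, 3, 2):
        print(f"step {step:2d}: {mode.name:<22} {speed:5.1f} km/h")
```

Calling `simulate(100.0, 10.0, 3, 2)` models a compromise detected at step 2: the car keeps decelerating until it has reached the shoulder and dropped below the assumed threshold, and only then enters `SAFE_STOP` and `SHUT_DOWN`. A real system would rely on far richer sensing and certified control logic; the point here is only the ordering of "maneuver, then stop, then shut down."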

P7010 – Wellbeing Metrics Standard for Ethical Artificial Intelligence and Autonomous Systems

Standard summary:

P7010 will identify and highlight well-being metrics relating to human factors directly affected by intelligent and autonomous systems and establish a baseline for the types of objective and subjective data these systems should analyze and include (in their programming and functioning) to proactively increase human well-being.

(Source / contact info for the P7010 working group)

Chairs:

John Havens, Executive Director, The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems

Laura Musikanski, Executive Director at Happiness Alliance

Which types of businesses should consider using your standard, and why? (i.e. Why are these standards relevant to business people now?)

While organizations around the world are aware of the need to incorporate sustainability measures into their efforts, in practice the bottom line, driven by quarterly shareholder growth, remains the traditional metric that society at large prioritizes. As long as organizations exist in a larger societal ecosystem that equates exponential growth with success, as reflected in GDP or similar financial metrics, they will remain under pressure to deliver results that do not fully incorporate societal and environmental measures and goals alongside existing financial imperatives.

Companies are increasingly aware that going beyond compliance on sustainability can strengthen public perception of their brand. By prioritizing an increase in holistic well-being, they are also recognizing where they can save or make money and increase innovation in the process.

For instance, a companion robot designed to measure the emotions of seniors in assisted living might be launched under a typical “move fast and break things” manufacturing model that prioritizes largely fiscal metrics of success. Such a device might fail in the market because of limited adoption.

However, if its makers also factor in data aligned with uniform metrics for emotion, depression, or other factors (including life satisfaction, affect, and purpose), the device might score very high on a well-being scale comparable to the Net Promoter Score widely used today. If the device could be shown to significantly lower depression according to metrics from a trusted source like the World Health Organization, academic institutions testing early versions of such systems would be better able to attain the funding needed to advance the study of autonomous and intelligent systems for well-being overall.

What would adherence to this standard look like in a real business setting?

The goal of the IEEE P7010 Standard is not to create a new metric but to directly link AI design and manufacturing to existing, established Indicators. Other organizations are doing work along these lines, such as the OECD through its February 2017 workshop, “Measuring Business Impacts on People’s Well-Being.”

Similarly, the B-Corporation movement has created a unique legal status for “a new type of company that uses the power of business to solve social and environmental problems.” Focusing on increasing “stakeholder” value versus just shareholder returns, forward-thinking B-Corps are building trust and defining their brands by provably aligning their efforts to holistic metrics of well-being.

The World Economic Forum has launched a similar effort with its Inclusive Development Index, as outlined in its article “Toward a Human-Centered Model of Economic Growth.”

In terms of how IEEE P7010 fits into this picture, our goal is to create methodologies that connect data outputs from AI devices or systems to various economic Indicators, widening the lens of value beyond purely fiscal issues. Otherwise, all AI will be built to prioritize exponential growth, which risks accelerating automation primarily for fiscal reasons rather than making this amazing technology universally available and beneficial for all.

Here’s how the WEF article describes the overarching need facing business today:

The 21st century calls for a new kind of leadership to inspire confidence in the ability of technology to enhance human potential rather than substitute for it. This is precisely the leadership that is also required to maintain faith in the liberal international economic order’s ability to raise living standards in developed and emerging economies as they integrate, lifting us up rather than pulling us apart.

Take-Aways and Discussion Points

It’s clear from the Chairs’ comments that the P7000 Standards are in development, and are anticipating business and societal concerns that will (or may) arise from the proliferation of personal data and artificial intelligence in our daily lives.

The “business value” of the standards currently in development (as articulated by the Chairs themselves) seems to fall into the following categories:

1 – Staving off legal risk or PR disasters

As the EU introduces legislation and guidelines for personal data use, more regions may follow suit. And just as Facebook is taking heat for allowing Russia-linked operatives to use its platform to influence the 2016 US election, other companies may come under similar scrutiny.

The “wild west” of personal data may be coming to an end, and IEEE P7002’s Chairs believe that businesses should be a part of the standards development process because they’ll be the ones wrestling with authorities over how technologies are applied in the market.

2 – Anticipatory transparency

The Chairs of the IEEE’s Standards working groups seem to believe that more transparency will be demanded of our common technologies. IEEE P7001’s Chairs appear to argue that “baking in” transparency (in a clear, structured way) could help companies adjust to what may be inevitable demands for it.

Companies with no semblance of these standards may not only face trust issues with consumers but also have to make costlier updates to robust AI systems that weren’t built with transparency and standards in mind.

3 – Building trust and goodwill with consumers and regulators

Consumers may become increasingly wary of systems that use their data or influence their behavior via targeted algorithms, and the general demand for clarity and ethical standards may increase. For the most part, it seems to behoove companies to keep as much of their “secret sauce” (algorithms, decision-making processes, strategic uses of data) under wraps, but social pressure may lead companies to open up (or at least feign doing so, as many companies do today).

The working group Chairs seem to argue that companies that lead the pack in transparency efforts may be seen as more socially conscious and benevolent, in much the same way that progressive policies around maternity leave or transgender employees can frame companies as the “good guys.” The management of perception is important for any business (particularly publicly traded companies).

It may behoove business leaders to comb the sections above and determine areas of focus (such as algorithmic transparency or the use of data about children and students) that may become pressing for their business or important in the minds of their stakeholders. Reading the full version of Ethically Aligned Design, which inspired these Standards Working Groups, may be fruitful, as may brainstorming “if-then scenarios” for one’s business in the years ahead.

Involvement with and Thanks to the IEEE

This article took a good deal of editorial heavy lifting, as well as the participation of over a dozen IEEE members from various P7000 Working Groups. I’d like to thank The IEEE Global Initiative and Standards Working Group members for contributing their material, and the IEEE’s John C. Havens for reaching out to all the working groups in order to gather perspectives for Emerj.

Businesses that want to be part of the conversation around AI ethics are free to contact the IEEE’s working groups (see the links under the summary sections of the working groups above) and consider joining and informing the ongoing dialogue as these standards continue to be developed.

Header image credit: Worry Free Labs