Alternative Montaigne-like Article Title: “That the Meek Will Stand United for Only as Long as It Behooves Their Aims”

Today, the world of AI ethics is a harmonious ecosystem of organizations with uncontroversial, reasonable, and respectable aims.

They share conferences, include each other in thought leadership, and develop white papers together – in order to discover frameworks for governing AI and handling issues around privacy, security, bias, and individual rights.

This makes sense, for now – because AI ethics is a means to great power.

I predict that 2020 will be the year when AI ethics organizations show their fangs and fight among themselves – vying for political influence, and vying for control over technological and economic forces that will literally define the 21st century.

In this essay, I’ll do my best to explain this shift from cooperation to competition. First, here’s a brief summary of the past and future of “AI ethics,” in a few bullet points.

2012-ish:

- Where – The IEET, MIRI, and the blogs of Goertzel, Hugo de Garis, and Bostrom.

- Topics – Artificial general intelligence. Safe AGI. The Singularity.

- Everyone Getting Along Nicely? – Yes.

2015-ish:

- Where – A few conferences. A few “thought leaders” emerge.

- Topics – Privacy. Security. Individual Rights.

- Everyone Getting Along Nicely? – Yes.

2020-ish:

- Where – Every other AI conference is an AI ethics conference. Every other Twitter handle includes #AIethics.

- Topics – Privacy. Security. Individual Rights. Race and Gender Bias. Microaggression.

- Everyone Getting Along Nicely? – Fractures emerge. Early ideas give way to real regulatory power that cannot be shared among all groups. Mudslinging and “picking sides” begins.

20XX?:

- Where – Everywhere. The future of intelligence is all that anyone cares about.

- Topics – Whether or not to create vastly post-human general intelligence. Who will control that intelligence?

- Everyone Getting Along Nicely? – No. Possibly war (see “The Artilect War” by Hugo de Garis).

Below I’ll explore why these groups cooperate today, why I believe they will compete brutally in the future, and what we should do about the impending power struggles ahead.

We’ll begin on the bright side:

Cooperation

So, under what conditions will AI ethics and AI governance groups cooperate?

That question has a simple answer. An answer universal to relations of humans, as to relations of beasts or insects:

Whenever it behooves them to do so.

Cooperation – Contributing Factors

Relative Power – Low.

When groups are small, weak, and outnumbered, it makes sense for them to maintain cohesion. If early Christians in the time of Nero or Aurelius had decided to fight amongst themselves – it may well have been the end of Christianity. The schisms and in-fighting came only once the Church had established power.

The AI ethics community, whether it realizes it or not, is putting forth a relatively united effort toward supporting very reasonable aims. Individual rights. Privacy. Security. It might be said that over 50% of “AI ethics” conversations are merely liberal values or social justice issues wrapped in the colorful foil of “AI.” It isn’t radical; it resonates with common Western values in order to gain steam, gain influence, gain power.

Schisms in AI ethics will become most likely only once the field does gain power.

Path to Power – Unclear.

While groups like the Artificial Intelligence Committee of the UK Parliament are giving AI ethics thinkers a “seat at the table,” the overall path to power for AI ethics is unclear. Some groups and individuals have ideas about how to gain such influence, but the specific roles and positions have yet to emerge. AI ethics groups and thought leaders are simply trying to figure out when and how they can become more important.

In this current, foggy position, AI ethics groups have yet to feel out the specific narrow channels to power that they will have to fight to squeeze through.

Today, cooperation is the rule in AI ethics, as it always is with underdogs.

The French Revolution Analogy

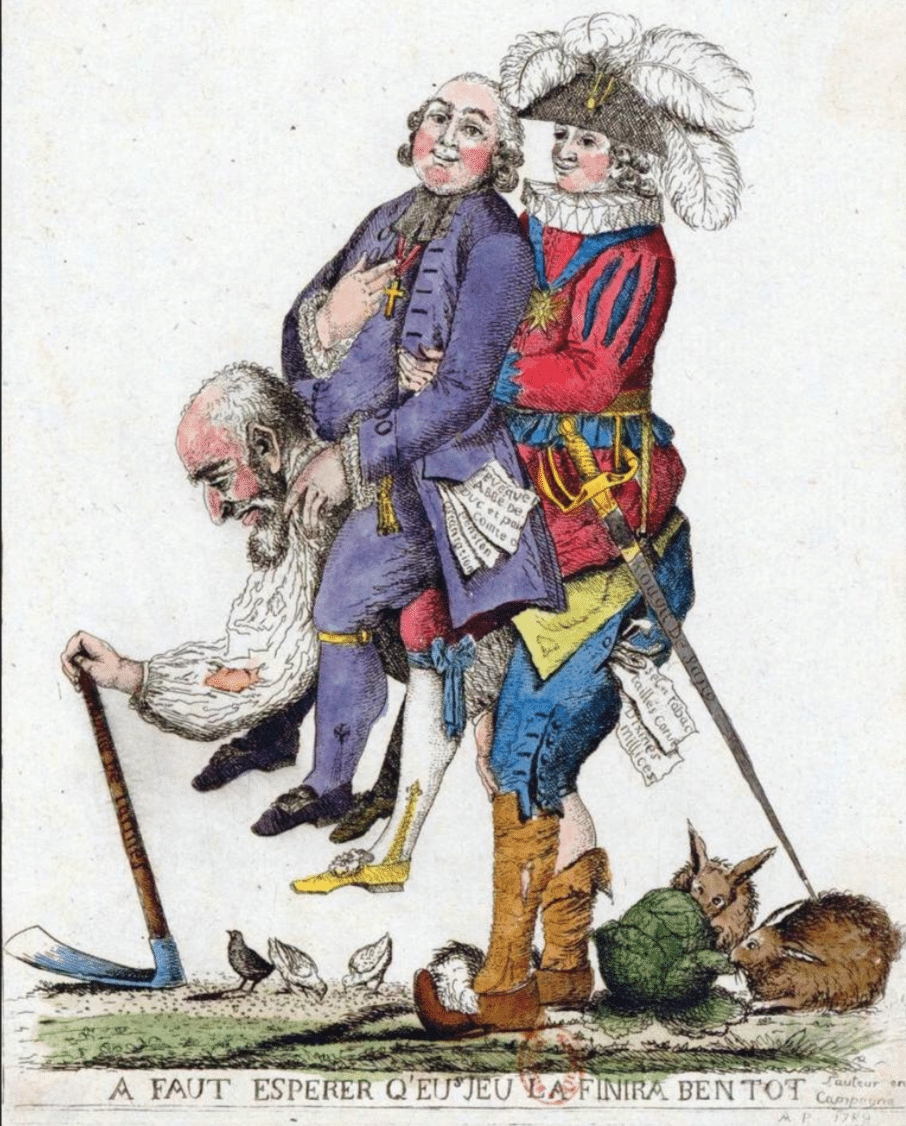

We have entered the era of the AI ethics Tennis Court Oath, a time before the AI ethics community wields real power, before the king is deposed, and certainly before anything close to the Reign of Terror.

We feel we are all underdogs (we are), that we are on the same team (we kind of are), and that we have a great and noble cause to represent (the cause of the weak always feels noble).

During the early days of the French Revolution, when the Jacobins (AI ethics groups) began to take their stance against the Ancien Régime of the monarchy (mostly Big Tech, but also large governments like the USA and China), they were cohesive, banded together, sharing a somewhat vague but presumably virtuous vision.

They believed that the monarchy (Big Tech) and clergy (global superpower governments) were unfairly treating the common people, and they set out to change the system, and to gain influence (AI ethics).

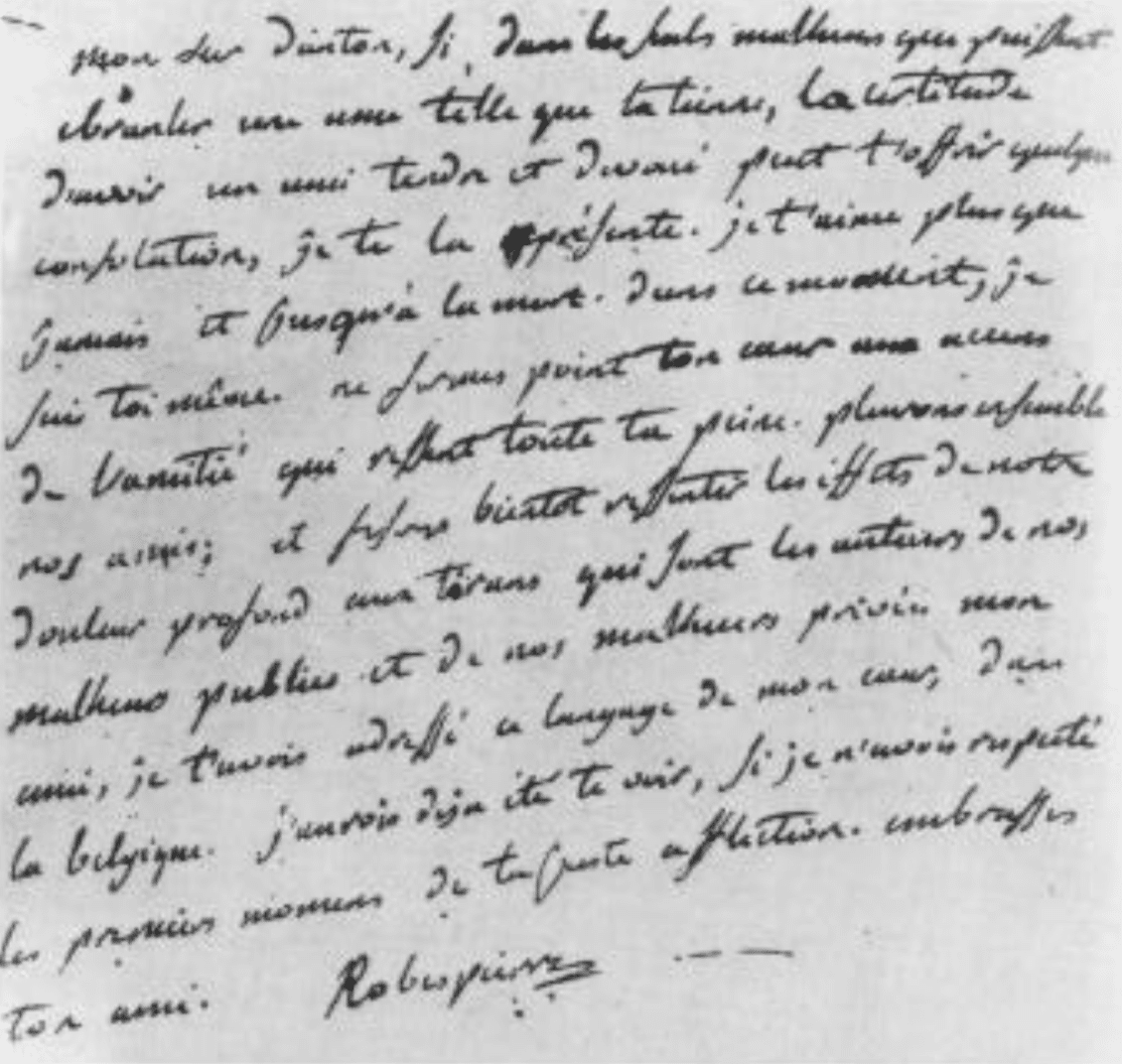

In the time of friendly relations between the revolutionaries, Robespierre wrote to Danton after the death of Danton’s wife. The letter is exceedingly tender and heartfelt, and ends with the words:

I love you more than ever, I love you until death. At this moment I am you.

But the tenderness would give way to other emotions, as the interests of the revolutionaries increasingly shifted – naturally – from cooperation to competition.

Competition

So when will AI ethics groups compete and mud-sling with each other?

That question has a simple answer. An answer universal to relations of humans, as to relations of beasts or insects:

Whenever it behooves them to do so.

Competition – Contributing Factors

Relative Power – High.

When AI influence implies real power – when AI regulation and “values” become part of formative national or international law – when organizations and individuals in the AI ethics world begin wielding real influence over economic and technological matters of state – then we should expect to see the harmonious AI ethics world splinter into factions.

There will be fighting when there is something to fight over. Namely: Power. Not all voices can be the same volume.

Path to Power – Clear.

At some point, countries may have “AI Ministers” that are more than figureheads. At some point, government AI ethics groups will have powerful members who wield influence over economic and technological affairs of the state. At some point, there will be an AI governance version of the World Economic Forum. At some point, the AI efforts of the United Nations will be more than experimental, and will involve positions and institutes that hold considerable international sway.

When these positions emerge, the AI ethics groups will fight to be king of the hill.

Soon, the well-intended “AI ethics” organizations will – overtly or covertly – begin undermining each other’s credibility and trustworthiness, and the genuine intention will become clear: Most AI ethics organizations are vying for power/influence over the future deity. https://t.co/jwhILS5HKp

— Daniel Faggella (@danfaggella) November 23, 2018

Today the AI ethics world is intact. They invite one another to events. They come together to publish papers. At the same time, they’re aware of the competition ahead – and some of it bubbles beneath the surface.

Competition in AI Ethics Exists Beneath the Surface

If you could hear what one AI ethics group says about the others behind closed doors, you’d sense that this is the case.

Having interviewed and been at forums, events, and conferences with AI ethics leaders since 2012 (from the United Nations to the World Government Summit to the IEEE and beyond) – I have had many experiences that have led me to believe that competition is brewing beneath the AI ethics harmony:

- Back in 2013 I remember hearing the leadership of an AI ethics group (which I won’t name here) ardently bash MIRI for being “autistic,” and bash Zoltan Istvan for being evil and “right wing.”

- The leaders of the few AI groups and initiatives within the United Nations are aware of the influence and initiatives of the other AI groups at the United Nations – and are wary of who among them will become influential.

- Back in 2016, I asked OpenAI’s Chief Scientist Ilya Sutskever about the ultimate aims of the organization, and didn’t get a straight answer – only that it would involve political influence. I got the sense that there was an end game that needed to be kept secret because others would be gunning for the same position.

- In my interviews with IEEE’s AI governance and standards leaders, it’s clear that they have to conceal a good deal of their work implementing ethical frameworks because they’re aware that others might steal these frameworks and so garner the political influence.

- I’ve chatted with the heads of an organization (which I won’t name here) that will compete with the Future of Life Institute for AI governance influence. It’s not surprising to see a kind of “sizing up” of opponents in an overt effort to wield more influence over government and military leaders – to compete for limited influence.

I could go on, but I won’t.

It’s worth noting that I do not blame organizations for playing their cards close to their chest. If only one organization can be the premier source for AI standards in government – or only one cohort of people will have the biggest impact on AI regulation – there is a natural (and not in-and-of-itself morally wrong) reasoning to try to be that winner.

Let me make two other things clear:

- I like almost everyone I’ve ever met from the AI ethics world

- I believe they’re essentially good people

Some of these people are friends, and people I both admire and enjoy being around. I support some of these organizations with donations, or with coverage or interviews here at Emerj. I believe that the AI governance and AI ethics conversation is an important one to proliferate – as this will eventually evolve in the decades ahead into a most important conversation about the future of intelligence itself.

All of that said, the factors that turn cooperation into conflict are outside the control of any person. They are embedded in the human condition, in our incentives, and in a world of limited power and resources. This is the fault not of our volition so much as our nature and our condition.

The French Revolution Analogy

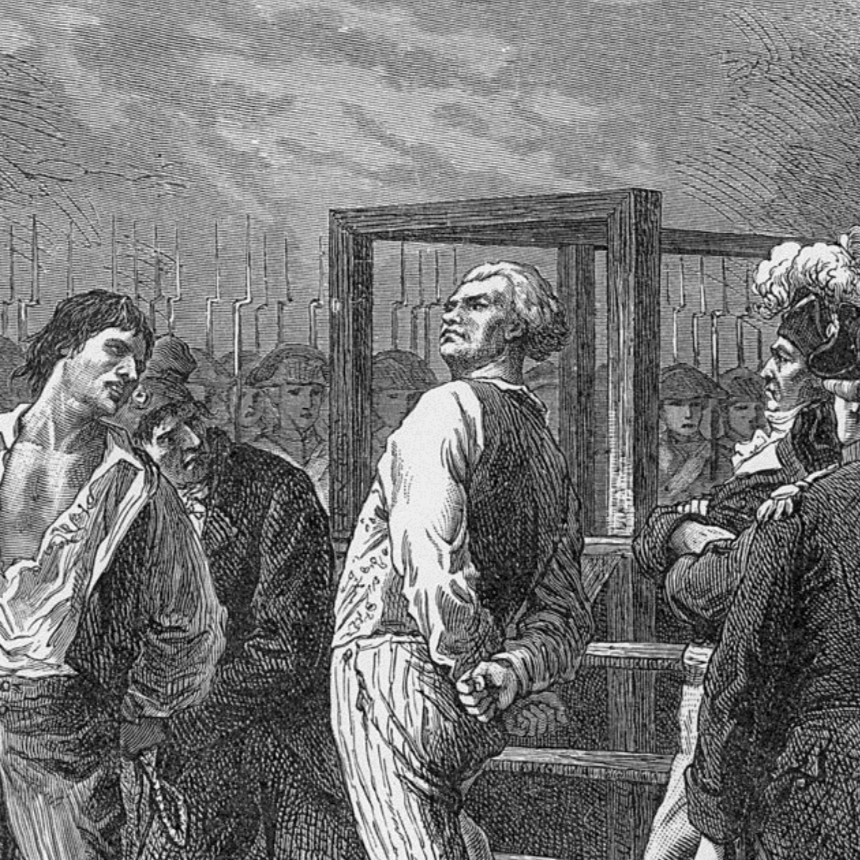

One year after sending Danton a letter of deep and sincere friendship and love, Robespierre sent Danton to the guillotine.

It is reported that as Danton passed Robespierre’s living quarters in the executioner’s cart, he yelled:

You will appear in this cart in your turn, Robespierre; and the soul of Danton will howl with joy!

The tides turn quickly, and when the position of king of the hill is available, those below will want to take it, and those on top will want to keep it.

Young and powerless groups who want to gain influence over the existing power structure will then want to become the existing power structure. As it always has been.

When we’re all Jacobins on the tennis court, with vaguely aligned goals and no real power to wield – it behooves us to collaborate and gain as much momentum as we can as a group.

Thomas Jefferson and John Adams were friends of a deep and true kind when America was but an idea, but as it became a reality, and there could be only one President, their friendship was no more and a fierce rivalry replaced it.

Once Ted Kennedy started speaking out against fellow Democrat Jimmy Carter, everyone knew he would run against Carter for president.

Similarly, at some point (I predict in the year ahead), AI ethics and governance groups will begin making stances against one another.

Today, AI ethics is a dream – and is quickly becoming more than a dream. Soon, it will be politics, and its leaders and advocates will do as politics does. They will create power bases, defeat rivals, and vie for limited power and influence, including:

- Who will hold the position of “AI [minister / etc]” in a given country or district

- Whose AI ethics standards should be used by governments, and whose should be left to obscurity

- Whose AI ethics principles should apply to international trade (and how will this affect the wealth and advantage of nations)

- Etc…

As the West embroils itself in the squabbling of a thousand AI ethics voices – as I suspect it will – China will be positioned to win.

Western AI Ethics In-Fighting is a Massive Benefit for China

China will probably just move forward with a top-down approach and much more unified force, aligning AI ethics with its international goals rather than hobbling innovation with concerns for privacy or risk, and rather than listening to squabbling and opposing political factions.

This advantage is significant, and nobody is talking about it. The national advantage and prominence of Western nations aren’t well represented in their myriad “AI ethics” groups (Black in AI, Women in AI, groups about AGI, groups about data privacy and data sovereignty).

Essentially none of these Western groups consider the international competitiveness (economic and technological) of their own country. I’m not advocating that national interests are most important – but it seems clear that national interests will be the sole priority of Chinese AI ethics (at least of whichever AI ethics principles are “official,” or CCP-approved). The West needs to consider how it will remain prominent in the face of this united technological front. In-fighting among a thousand groups won’t be the answer.

I’ve written at length here on AI Power about China’s potential advantage over the West in terms of AI prominence. This topic of the relative advantage of China’s top-down AI ethics approach will have to be the topic of its own essay in the relatively near future.

Two Paths to AI Power

In my essay “Substrate Monopoly,” I pose the following prediction:

In the remaining part of the 21st century, all competition between the world’s most powerful nations or organizations (whether economic competition, political competition, or military conflict) is about gaining control over the computational substrate that houses human experience and artificial intelligence.

In other words, controlling the hardware that houses the most powerful artificial intelligence, and controlling the hardware that houses the majority of human experience (as we enter a virtual world of programmatically generated everything) – will be the highest conceivable positions of power.

There are two ways to get there:

- Build the Artificial General Intelligence, and Wield It. This is the approach of Google, of Facebook… of some groups within the Chinese government, and probably some groups within the US government, and of DeepMind and OpenAI.

- Lead the National or (Preferably) International Organization That Governs the Powerful AI. This is the end game of “AI ethics” and “AI governance” groups – whether they’re aware of it now or not. All will crescendo towards this position of governance – as I’ve written about in AI Regulation as a Means to Power.

There is only one game: Control of the trajectory of intelligence.

Strategy of AI superpower (US, China, @OpenAI, @DeepMindAI) : Build it first, be in the position of initiative.

Strategy of tech weak (UK, EU, small nations/cos) : Feign the role of the benevolent regulator. https://t.co/1dxX2GfIjE

— Daniel Faggella (@danfaggella) May 31, 2019

The era in which AI makes or breaks the power standing of a nation – which may be many years ahead (but likely within our lifetimes) – is when political factions will compete most intensely. Possibly to the point of war (read: The Political Singularity).

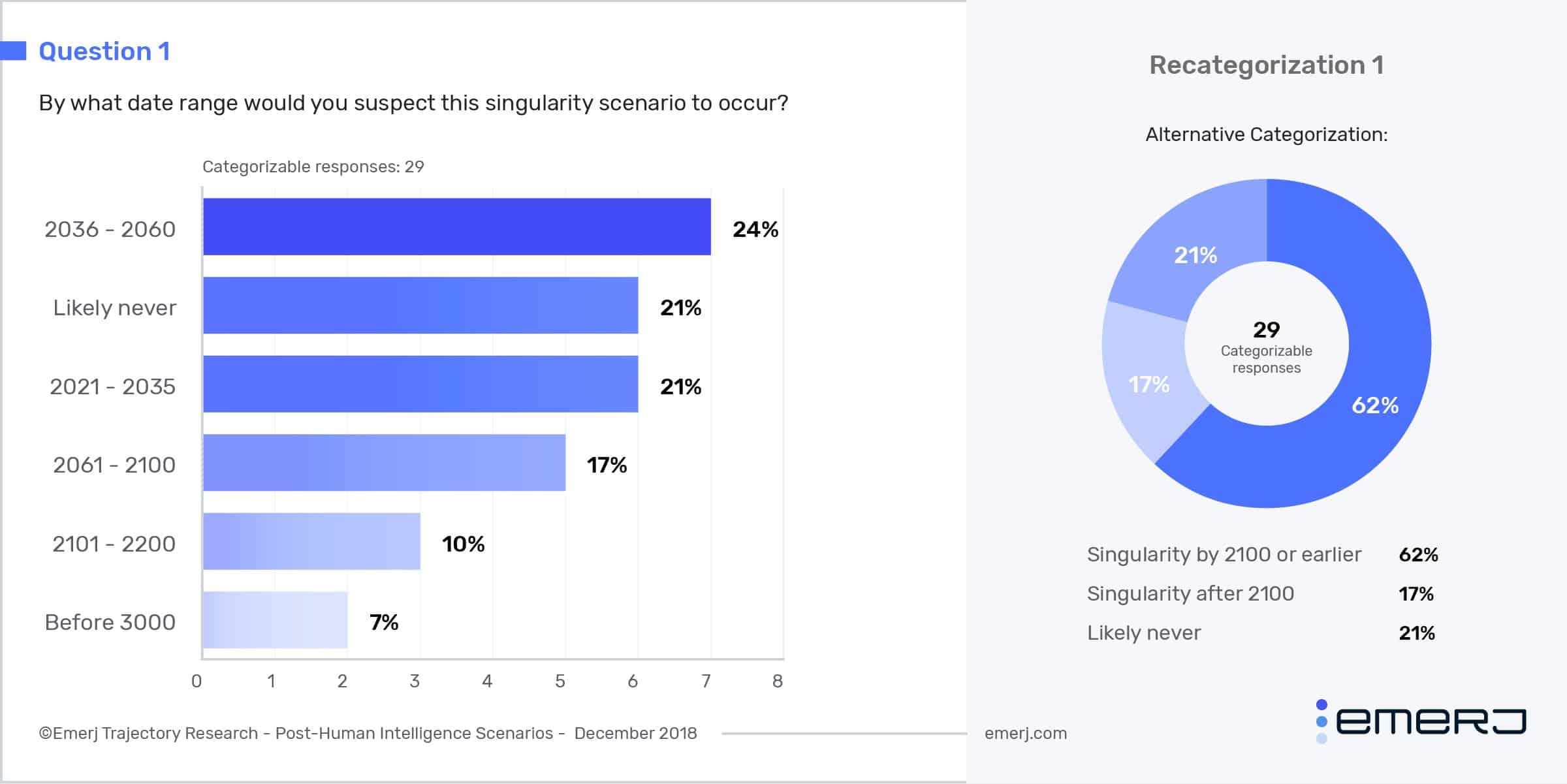

The PhDs we’ve polled were on both sides of the fence on the topic of when “The Singularity” (or the emergence of artificial general intelligence) will occur.

Whether you think the singularity is near or not, the potential impending conflict of various “AI ethics” organizations is worth discussing.

What to Do About AI Ethics at War

I’m not a pessimist.

I dearly hope for the international community to come up with some kind of

This entire essay is goading the AI ethics community.

I hope this premonition of competition is false. The forces that bring around competition, however, are not false, so maybe I hope for something else: That the AI ethics community will somehow find ways to limit or hedge against the dynamics that would naturally lead to competition.

Towards the end of his life, Machiavelli wrote to a friend:

I’d like to teach them the way to hell, so they can steer clear of it.

I’m no teacher. I have much more living to do to peer as far into human nature as the Father of Modern Political Theory himself. But I’ve read enough history to have seen hell – at least in print. And I’ve seen enough politicking and conniving to see its ubiquity.

Earlier I asked:

When will AI ethics groups compete and mud-sling with each other?

The answer:

Whenever it behooves them to do so.

We cannot change our amoral, selfish natures. We cannot change that we will do what behooves us. We can expect the following:

- Small nations will align themselves with AI governance policies – and AI ethics thinkers – who behoove their interests.

- Small companies will align themselves with AI governance policies – and AI ethics thinkers – who behoove their interests.

- Large companies will band together to form groups (The Partnership on AI) – in order to project virtue and behoove their interests.

- All will align themselves with AI governance policies – and AI ethics thinkers – who behoove their interests.

- All will feign benevolence by their actions, and all will be motivated by self-interest.

Today, “AI governance” and “AI ethics” don’t have the teeth to make a real difference in the lives of companies or countries. As soon as they do – factions are likely to emerge – divisions are likely to be created – and AI ethics will go to war.

That “war” will be metaphorical and political, but it will threaten to become real war at the point at which artificial intelligence power is what makes or breaks the economy and relevance of nations.

The question is, how can we take into account our competing motives, and build structures that make cooperation much more in line with everyone’s incentives than competition? Mutually assured destruction is a start, but we need more forces pulling for cooperation, more forces to curb the tendencies of competition.

We associate cooperation with selflessness and competition with selfishness. Instead, we should assume that selfishness rules the world, and we should find ways to make peace and cooperation the selfish interest of great and little powers alike.

I suspect that this will involve a global steering and transparency committee to discern (a) the future paths that humanity wants to tread in terms of super-powerful AI and cognitive enhancement, and (b) how we can collaborate as a species to peacefully arrive at those beneficial futures (I touch on this briefly in my late 2015 TEDx on AGI).

This kind of united effort might have its precedent in the United Nations, or in the Treaty of Westphalia.

But neither the United Nations nor the Treaty of Westphalia would have been created had they not been preceded by millions of deaths in war.

Approaching this goal without hedging against our own natures (see Nayef Al-Rodhan’s “5Ps”) – and without hedging against our own tendencies to fight first, and unite only when necessary – could be our greatest point of weakness.

A thousand times more now than during the French Revolution, it is Liberty, Equality, Fraternity or Death.

All of this seems hyperbolic today. AI ethics is so new, so nascent, and struggles for power aren’t fully predicated on AI (though they’re getting there). I suspect this essay will be much more relevant in three years, or in fifteen years. May they be peaceful ones…