Artificial intelligence has found its way into many areas of the entertainment industry, from offering customized recommendations for your Friday night movie (as in the case of Netflix) to delivering sports highlights during live TV coverage. The latter was on display at the 2017 U.S. Open Tennis Championships, where IBM Watson Media used its AI tool to showcase play highlights right after they occurred.

By combining historical data, crowd reactions and players’ facial expressions, IBM Watson was said to have identified and ranked the best shots that made up the day’s highlights. The AI selected which plays were best suited to the United States Tennis Association’s (USTA) different platforms throughout the two-week event in New York. A look at the tool’s user interface (UI) shows each highlight scored on categories such as crowd cheering, match analysis, player gestures and overall excitement.
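
To make the ranking idea concrete, here is a minimal sketch of how per-category scores could be combined into a single excitement value and used to order clips. The weights, clip names and scores are hypothetical; the source names the categories but does not say how IBM Watson actually combines them.

```python
from dataclasses import dataclass

# Hypothetical weights: the categories come from the article,
# the numbers are assumptions made for illustration only.
WEIGHTS = {"crowd_cheering": 0.4, "match_analysis": 0.3, "player_gestures": 0.3}


@dataclass
class Clip:
    clip_id: str
    scores: dict  # category name -> score between 0 and 1


def excitement(clip: Clip) -> float:
    """Weighted sum of the category scores (missing categories count as 0)."""
    return sum(w * clip.scores.get(cat, 0.0) for cat, w in WEIGHTS.items())


def rank_highlights(clips, top_n=3):
    """Return the top_n clips ordered from most to least exciting."""
    return sorted(clips, key=excitement, reverse=True)[:top_n]


# Made-up clips and scores
clips = [
    Clip("set1_point12", {"crowd_cheering": 0.9, "match_analysis": 0.5, "player_gestures": 0.8}),
    Clip("set2_point03", {"crowd_cheering": 0.2, "match_analysis": 0.9, "player_gestures": 0.1}),
    Clip("set3_point27", {"crowd_cheering": 0.7, "match_analysis": 0.8, "player_gestures": 0.6}),
]
top = rank_highlights(clips, top_n=2)
print([c.clip_id for c in top])
```

A weighted sum is only one plausible choice; a production system could just as well learn the combination from editors’ past selections.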

The event, however, wasn’t the first time that the AI tool was applied. At the previous year’s Wimbledon Championships, IBM Watson analyzed 400 tweets a second to help the Wimbledon social media team identify the best content to feature on its official website. That was in addition to monitoring every match to gather 3.2 million pieces of data across all tennis courts. (For more applications of AI in sports, you may refer to our article regarding its current and future applications.)

Because AI can tailor media to the public’s preferences and demands, media companies have begun to invest in the technology, in step with the $2 trillion in global media spending projected for 2019. According to Daniel Newman, CEO of Broadsuite Media Group, “From Language processing and image recognition to speech recognition and deep learning, there is truly no end to the way AI is impacting the creative process in media and entertainment.”

Discussions in the media around the emergence of AI in entertainment range from augmented reality (AR) to emotion analysis. In this article, we set out to study the AI applications of top entertainment companies (ranked according to their quarterly revenues). This article aims to address the following questions:

- What types of AI applications are currently in use by top entertainment companies?

- What are the common trends of these innovation efforts?

Through facts and quotes from company executives, this article serves to present a concise look at the implementation of AI by the top four entertainment firms in terms of quarterly revenues indicated in their official financial reports. We sought to provide relevant insights for business leaders and professionals interested in the convergence of AI and entertainment.

(Note: This article will simply be comparing the AI initiatives of Disney, Comcast, Fox, and Viacom. Readers with a more general interest may enjoy our previous article about AI use-cases in movies and entertainment.)

We’ll explore the applications of each firm one-by-one, beginning with Disney:

The Walt Disney Company

As one of the world’s leading entertainment companies, The Walt Disney Company earned $12.8 billion in quarterly revenue for the period ending September 2017. Its lines of business include Media Networks (Disney Channel, ABC, ESPN), Parks and Resorts (Walt Disney World, Disney Cruise Line, Walt Disney Imagineering), Studio Entertainment (Walt Disney Animation Studios, Pixar Animation, Marvel Studios, Lucasfilm Ltd.), and Disney Consumer Products and Interactive Media.

Disney Research began in 2008, when the company collaborated with Carnegie Mellon University and the Swiss Federal Institute of Technology Zurich (ETH Zurich) to build research labs. Back then, the focus was on projects involving character-human interaction. “We’ll be looking for ways to sense what a person is doing or thinking so that the character can respond appropriately… We need to figure out what sensors to build and how to interpret and respond to human behavior,” according to Disney Robotics chief Jessica Hodgins. Here’s a short video of her interview showing snippets of how the team is creating interactive characters:

Today, the research lab has expanded to three sites with research areas including machine learning and data analytics, visual computing, robotics and human-computer interaction. Some of its current projects include:

- – a mixed reality (MR) and augmented reality (AR) technology where a user sits on a bench and interacts with a humanoid animal. The user’s image is projected on a video display where the computer-generated character and its surroundings can also be seen. Through haptic feedback (a type of technology that recreates the sense of touch by letting users feel vibration and motions), the user can feel the vibration when the humanoid animal sits down and then hear its voice once it opens its mouth. This is made possible by the speakers and haptic sensors attached to the bench to augment the interaction experience.

Take a look at how the team has instrumented the environment to allow humans to share space with an animated character:

- Quality Prediction for Short Story Narratives – a tool for which scientists trained the AI to recognize patterns and traits in short stories and select those most likely to appeal to readers. The team developed the app by sourcing more than 28,000 answers from the question-and-answer site Quora (since most answers are in the form of narratives, according to Disney’s scientists) and looking for patterns that influence story structure. They used the upvotes on these answers as quality indicators, a proxy for narrative value. Three neural networks were then developed: one to evaluate individual parts of the story, one to assess whether these parts work well together, and one to analyze the entire story.

Though the team believes the project is a good start for future applications, Boyang Li, a scientist in the research lab, clarifies, “You can’t yet use them to pick out winners for your local writing competition, but they can be used to guide future research”.

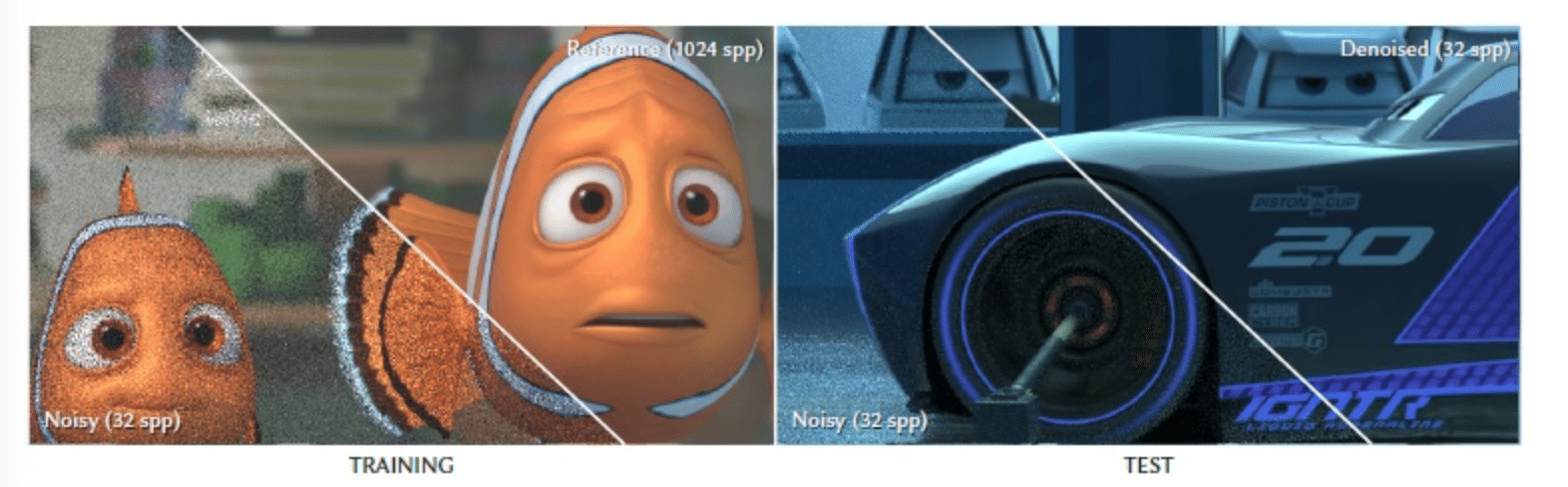

Disney Research has also ventured into using AI to improve movie quality and viewer experience. For example, it collaborated with Pixar Animation and the University of California, Santa Barbara in July 2017 to eliminate image noise (random variations in brightness or color) in movies. Using the film Finding Dory, the scientists trained a deep learning model to produce noise-free frames. The research team believes that it can reduce the work of its artists by eliminating the arduous task of adding light rays to images to improve picture quality.

You can compare the before and after qualities in the images below:

Another collaboration with Caltech and Simon Fraser University involves the use of facial recognition and deep learning technologies. Movie audiences were asked to fill a 400-seat cinema where four infrared cameras detected their facial expressions throughout the movie. To gather more data, 150 showings of nine movies were held, which included Disney titles such as The Jungle Book and Big Hero 6. The captured facial expressions were then encoded numerically to represent variations in reaction. For example, one number is assigned to a smiling face and another to how wide open the viewer’s eyes are.

Based on their research findings, the team concludes that facial reactions such as smiling and laughing correlate with humorous scenes in the movie. According to the researchers, this work can help Disney in future projects that concern audience analysis, group behavior and predicting reactions. Specifically, they claim that the findings would help the company understand moviegoers’ emotions and possibly predict what emotions they would have in different parts of a movie.

The research can also be used to improve the company’s movies. According to Yisong Yue, an assistant professor at Caltech and a collaborator on the project, the technology can be used to analyze a forest and take note of “differences in how trees respond to wind based on their type and size as well as wind speed,” possibly lending a more realistic quality to an animated film.

Disney also invests in tech startups that focus on consumer media and entertainment and are in need of seed funding and guidance. In collaboration with venture capital firm Techstars, the Disney Accelerator program gathers select tech innovators, who gain access to all divisions of the company, including mentorship from its investors and leaders. Participants then present their products during the annual Demo Day and have the opportunity to work with the company.

In 2015, Los Angeles-based imperson was selected by Disney to develop chatbots for Disney’s various projects. Today, some of the projects that the Disney Accelerator participant has launched for the company include chatbots for characters such as Miss Piggy, Judy Hopps of Zootopia, Rocket Breakout, and Guardians of the Galaxy, which is aimed at promoting the movie’s sequel. The Guardians mixtape chatbot asks users about their preferred visuals and songs from the movie and then creates a mixtape based on these preferences.

Comcast

Comcast Corporation is a telecommunications conglomerate with businesses in broadcasting, cable TV, digital phone, HDTV, home security systems, internet, movie and TV production, sport management, theme parks, venture capital and VoIP phones. It earned $20.9 billion in quarterly revenue for the period ending September 2017.

Comcast Labs develops products that run on content analysis algorithms, machine and natural language learning, computer vision, deep learning and AI. The AI team works on innovations ranging from personalized TV program recommendations to home security solutions.

In particular, the lab’s voice technology was developed to allow users to communicate with devices using natural language. The X1 Voice Remote is a television remote control that recognizes voice commands. It can be instructed to change channels, search for specific TV programs, present recommendations, select a favorite channel and control a user’s DVR. The company claims that as of August 2017, it had sold 17 million units, and it estimates that its users have made more than 3.4 billion voice commands.

Comcast CEO Brian Roberts’ keynote about the evolution of the X1 Voice Remote and its future development can be seen in this video:

Comcast Labs also applies machine learning models to predict customer issues before they occur. Adam Hertz, VP of engineering at its Silicon Valley development center, claims that the technology is 90 percent accurate in predicting whether a technician needs to drive to a subscriber’s home to fix a connectivity problem (a visit known as a “truck roll” in the cable TV industry).

To develop the system, Comcast collected data sets from calls to its customer service centers and from network operations, and made them available to its systems automatically. The machine learning platform then extracted features from each call, selected which data could be assigned a score, and used those figures to train the model. By applying an algorithm to the data, the system is said to be capable of predicting the likelihood that a truck roll will be needed.

After the testing phase, Comcast rolled out the application to its customer service representatives (CSR) who receive complaints over the phone. These CSRs need to check the application to find out if a truck roll is necessary. Otherwise, they can help resolve the issue through remote troubleshooting. Some of the issues that can be resolved with the help of a CSR include resetting the modem and identifying fixes that require only battery replacement.
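
The pipeline described above (extract features from a call, score them, train a model, then let the CSR consult the prediction) can be sketched roughly as follows. This is an illustrative stand-in, not Comcast’s system: the source does not name the algorithm, so the sketch uses a plain logistic regression, and the feature names and call records are invented.

```python
import math

# Hypothetical training rows:
# (modem_offline, signal_errors_last_24h, prior_calls_this_week) -> truck roll needed?
CALLS = [
    ((1, 9, 2), 1),
    ((1, 7, 3), 1),
    ((0, 1, 0), 0),
    ((0, 2, 1), 0),
    ((1, 8, 1), 1),
    ((0, 0, 0), 0),
]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train(rows, epochs=2000, lr=0.05):
    """Fit logistic regression weights with simple stochastic gradient descent."""
    weights = [0.0] * len(rows[0][0])
    bias = 0.0
    for _ in range(epochs):
        for features, label in rows:
            pred = sigmoid(bias + sum(w * x for w, x in zip(weights, features)))
            error = pred - label
            weights = [w - lr * error * x for w, x in zip(weights, features)]
            bias -= lr * error
    return weights, bias


def truck_roll_probability(model, features):
    """Estimated probability that this call ends in a technician visit."""
    weights, bias = model
    return sigmoid(bias + sum(w * x for w, x in zip(weights, features)))


model = train(CALLS)
p_outage = truck_roll_probability(model, (1, 8, 2))  # modem offline, many errors
p_minor = truck_roll_probability(model, (0, 1, 0))   # modem online, few errors
print(f"outage-like call: {p_outage:.2f}, minor call: {p_minor:.2f}")
```

In a setup like this, a CSR-facing tool would show the probability next to the call and suggest remote troubleshooting first when it is low.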

In his May 2016 presentation on Comcast’s data analytics projects, Jan Neumann, manager at Comcast Labs, admitted that this technology still has issues such as skewed data (only a few data points relate to avoidable truck rolls) and information leakage (customers only report “avoidable truck roll” data after a truck roll has happened, and that information has already been included in the data sets). Hertz, however, asserts that the technology will save the company millions of dollars, given the high cost of truck rolls.
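
Both issues are common in predictive modeling, and a small sketch can show what handling them might look like. The field names and records below are hypothetical, and the remedies shown (dropping fields that only exist after the outcome, and weighting the rare class more heavily) are generic practices rather than anything the source attributes to Comcast.

```python
from collections import Counter

# Hypothetical records. "technician_notes" is only written after a truck roll
# has happened, so keeping it as a feature would leak the outcome into training.
RECORDS = [
    {"modem_offline": 1, "signal_errors": 9, "technician_notes": "replaced line", "avoidable": 0},
    {"modem_offline": 1, "signal_errors": 7, "technician_notes": "replaced modem", "avoidable": 0},
    {"modem_offline": 0, "signal_errors": 2, "technician_notes": "reset modem", "avoidable": 1},
    {"modem_offline": 1, "signal_errors": 8, "technician_notes": "fixed splitter", "avoidable": 0},
    {"modem_offline": 1, "signal_errors": 6, "technician_notes": "replaced cable", "avoidable": 0},
]

# Assumed list of fields that are recorded only after the visit
POST_OUTCOME_FIELDS = {"technician_notes"}


def drop_leaky_fields(record, label_key="avoidable"):
    """Keep only features that are known at the time of the customer's call."""
    return {
        key: value
        for key, value in record.items()
        if key not in POST_OUTCOME_FIELDS and key != label_key
    }


def balanced_class_weights(labels):
    """Weight each class inversely to its frequency so rare cases still count."""
    counts = Counter(labels)
    total = len(labels)
    return {cls: total / (len(counts) * n) for cls, n in counts.items()}


features = [drop_leaky_fields(r) for r in RECORDS]
weights = balanced_class_weights([r["avoidable"] for r in RECORDS])
print(features[0])
print(weights)
```

With four unavoidable visits and one avoidable one, the rare class receives four times the weight of the common class, which keeps a model from simply predicting “unavoidable” every time.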

21st Century Fox

21st Century Fox (21CF), a multinational mass media company that provides cable, broadcast, film, pay-TV and satellite services, is headed by its Executive Chairman, Rupert Murdoch. The company reported $7 billion in quarterly revenue for the three months ending September 2017. Its businesses include Fox Network Group, Fox Sports Networks, National Geographic and Sky plc, among others.

Its filmed entertainment arm, Fox Entertainment Group, made the headlines when 20th Century Fox approached IBM Research to create a trailer for the horror movie, Morgan, in 2016. According to John Smith, Manager for Multimedia and Vision, the process began with the IBM researchers feeding the system with 100 horror film trailers. The team then trained the AI to conduct visual, audio and scene composition analyses. These involved tagging 22,000 scenes from different movies according to different emotions, the actors’ tone of voice, musical score based on the feelings invoked, and other aspects of a film such as location, lighting and framing.

After the machine learning process, the movie was fed into the system so the AI could select which scenes would appeal the most to viewers. A staff member from 20th Century Fox then arranged the scenes to compose the final trailer. You can watch it in the video below and get to know more about the process:

Smith claims that the entire process of making a trailer only took about 24 hours compared to the traditional process that could last up to several weeks.

In another venture, 21CF acquired minority shares of Osterhout Design Group (ODG) in 2016. The wearable technology company manufactures “reality smartglasses” or wearable computer glasses that add information to the wearer’s view. A report by Fast Company states that the partnership will combine ODG’s AR and device technology with 21CF’s content in future product developments.

When ODG revealed its latest smartglasses during the 2017 Consumer Electronics Show in Las Vegas, a company representative explained in an interview that the digital images a user sees while wearing the glasses are overlaid on the actual things that they are looking at. Here’s a user’s perspective when the device is worn:

The device allows users to watch 3D movies as long as it is paired with a device that plays the film. He also adds, “The heart of the product are two stereoscopic HD displays running at 80 frames a second and in 720p, 40-degree view. That’s basically equivalent to a 100-inch television at about six feet away.” In another report, the product’s entertainment use is described as something that is “meant to be carried around for watching movies on a digital big screen, playing games, or using apps. It’s working with 21st Century Fox’s Innovation Lab on experiences that include 3D movies and an interactive augmented reality demo based on the Alien franchise.”

For more insights on this technology, you may refer to our article that discusses developments in AR and smartglasses.

Viacom

Media conglomerate Viacom offers digital media, motion pictures and television programming. Quarterly revenues amounted to $3.32 billion as of the three months ending September 2017. Some of its brands include Viacom Media Networks (BET Networks, VH1, Nickelodeon Group, MTV), Paramount Pictures, and Viacom International Media Networks.

One of Viacom’s technological innovations is the social TV sales platform Echo Social Graph, launched in 2014. Now in its third version, the application is offered to Viacom clients to help their campaigns reach the right audiences by tracking data such as social activity, engagement types, hashtag use, post reach and number of digital influencers, to name a few. By measuring the impact of social media campaigns, Viacom claims that it can use the data to find ways of marketing across multiple platforms, such as television and social media, together.

Viacom worked with social media marketing firm Mass Relevance (which later merged with Spredfast) to develop the Echo Social Graph. Spredfast’s VP of Media and Entertainment, Josh Rickel, explains that the project “created a means by which Viacom could quantify the value of this audience using specific metrics, which in turn allowed their integrated marketing team to tell a value story to their sponsors.” He further describes how their collaboration with Viacom using the Echo Social Graph works in the following video:

The technology has been applied to several projects. For instance, Sarah Iooss, Viacom’s former Ad Sales group lead, revealed in an interview that they investigated the best way to improve their campaign reach for the 2014 science fiction film The Maze Runner. The team found that after a half-hour TV special about the movie aired, online searches spiked for its lead star, Dylan O’Brien (of the MTV series Teen Wolf). They discovered that most users clicked on sites related to Teen Wolf.

With this data, the team then worked on adding digital content to MTV’s social media accounts to take advantage of the web traffic. The technology, as described in Viacom’s press release, will support other projects by helping the company create custom content for advertisers and deliver ad campaigns not only on its linear, digital and mobile platforms but also on Twitter Amplify and Tumblr.

Viacom has also ventured into applying data analytics to brand messages through its partnership with Canvs, a tech startup that measures and interprets emotional reactions to television by mapping them to characters, plotlines and moments. The system tags these with emotion categories such as “annoying,” “boring” or “like.” Canvs claims that its technology can track up to 56 emotions and can decipher special jargon such as that used by millennials.
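
As a rough illustration of emotion tagging, the sketch below maps words in viewer posts to emotion categories and counts the results. It is a deliberately simple lexicon lookup, not Canvs’ method, which the source does not describe; the lexicon entries and sample posts are invented, and only the three category names come from the article.

```python
import re
from collections import Counter

# Hypothetical lexicon mapping words (including slang) to emotion categories.
# A real system would cover far more vocabulary and handle context.
EMOTION_LEXICON = {
    "annoying": "annoying",
    "ugh": "annoying",
    "boring": "boring",
    "snooze": "boring",
    "love": "like",
    "obsessed": "like",
    "slay": "like",
}


def tag_emotions(post):
    """Count the emotion categories triggered by the words in one post."""
    words = re.findall(r"[a-z']+", post.lower())
    return Counter(EMOTION_LEXICON[w] for w in words if w in EMOTION_LEXICON)


def summarize(posts):
    """Aggregate emotion counts across many posts about a show or campaign."""
    totals = Counter()
    for post in posts:
        totals.update(tag_emotions(post))
    return totals


# Made-up viewer posts
posts = [
    "Ugh, that cliffhanger was so annoying",
    "I love this cast, totally obsessed",
    "Kind of boring tonight",
]
summary = summarize(posts)
print(summary.most_common())
```

Aggregated counts like these could then be lined up against specific characters or plot moments, which is the kind of mapping the article attributes to Canvs.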

The 4-minute video below explores Canvs’ basic sentiment analysis features and user interface:

The partnership plans to apply Canvs’ emotional analysis technology, together with Viacom’s Echo Social Graph, to interpreting public emotions around social media campaigns. With the help of its content creation arm, Viacom Velocity, the company claims that once a piece of content goes viral, it employs sentiment analysis to find what triggers a specific target market to share content and become potential customers. This service is offered to advertisers on Viacom’s media networks.

In an interview, Jeff Lucas, Marketing and Partner Solutions Head at Viacom, explains, “the No. 1 emotion you want is brand love. And you want that to be true brand love. If you can resonate so much that a client loves that piece of content, wants to share it and really believes in it, that’s going to be someone who’s going to use that product, someone who’s going to buy that product. And you’re not going to get much closer to someone’s heart on that product.”

Concluding Thoughts on AI in Entertainment

The top entertainment companies have invested in AI and machine learning to improve their operational efficiency, cut down on costs and offer innovative digital products and services. The future of entertainment will most likely rely on these AI applications as these companies pursue more areas to apply such technology.

From our analysis of the top entertainment companies, we have observed that they seem to rely on in-house talent to develop their AI applications through their own tech labs. These media companies are infusing AI into devices and processes to reduce operational costs (Comcast), improve image and movie quality (Disney, 21CF), and measure audience engagement (Viacom).

Our research also indicates that these businesses benefit by partnering with other startups so they can tap existing technologies and speed up their innovation efforts. They invest in research for product development and improvement of processes. This requires participation by top talents to meet the needs of their target market as stated in our previous article.

Header image credit: Walt Disney Studios