We’ve reached the sixth and final installment of the AI FutureScape series. In this article, we discuss what our survey participants said when asked about the role of business and government leaders in ensuring that AI steers us to a better future, not a worse one.

As a recap, we interviewed 32 PhD AI researchers and asked them about the technological singularity: a hypothetical future event where computer intelligence would surpass human intelligence. This could be either to humanity’s great benefit or to its detriment.

Readers can navigate to the other FutureScape articles below, but reading them isn’t necessary for understanding this article:

- When Will We Reach the Singularity? – A Timeline Consensus from AI Researchers

- How We Will Reach the Singularity – AI, Neurotechnologies, and More

- Will Artificial Intelligence Form a Singleton or Will There Be Many AGI Agents? – An AI Researcher Consensus

- After the Singularity, Will Humans Matter? – AI Researcher Consensus

- Should the United Nations Play a Role in Guiding Post-Human Artificial Intelligence?

- (You are Here) The Role of Business and Government Leaders in Guiding Post-Human Intelligence – AI Researcher Consensus

What Should Businesses and Government Do Today to Ensure that AI Steers Humanity in a Better Direction?

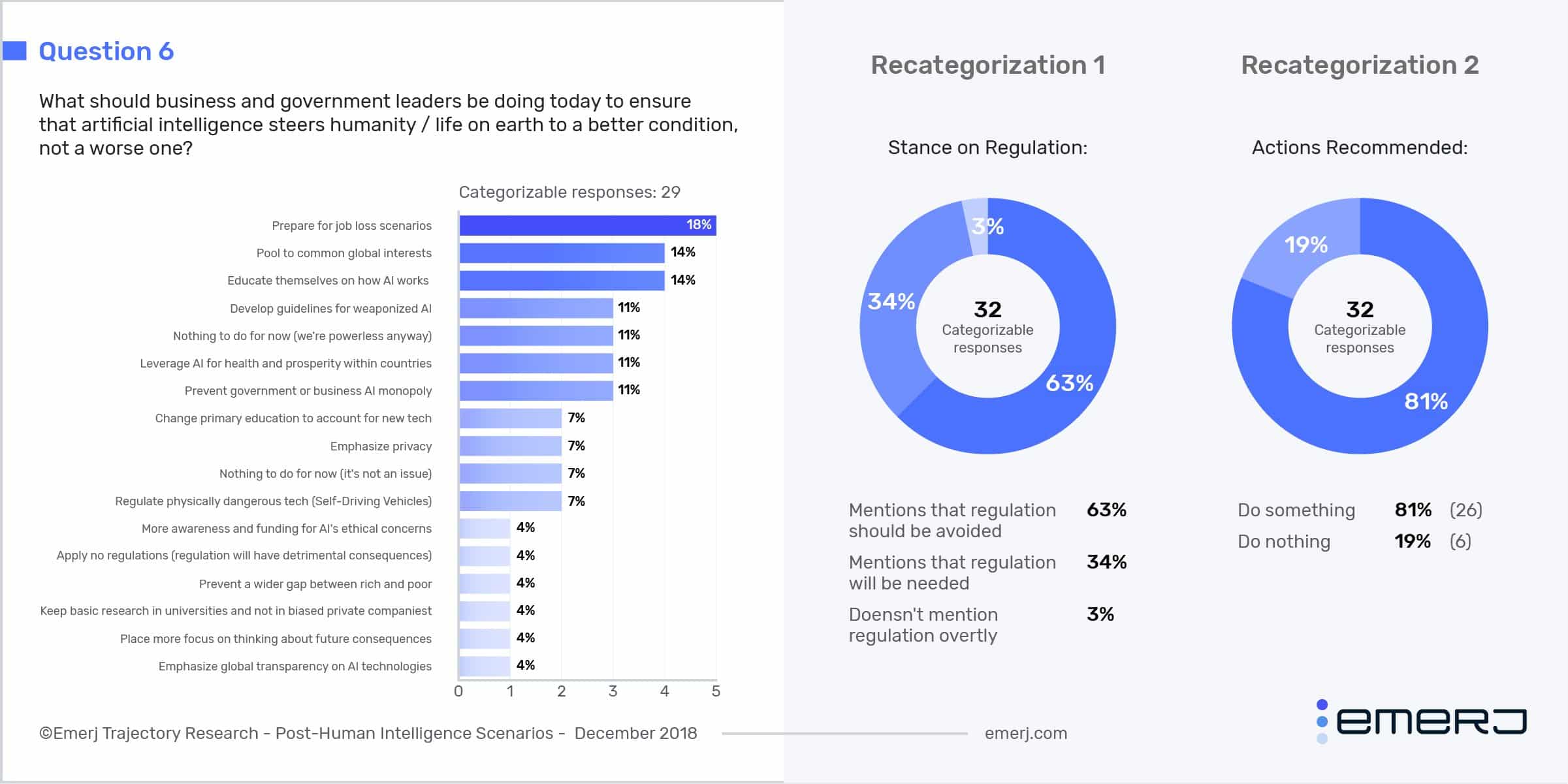

The final question that we asked our respondents was what business and government leaders should be doing today to ensure that artificial intelligence steers humanity/life on earth to a better condition, and not a worse one. Overall, our respondents overwhelmingly agreed that businesses and governments should actively influence the trajectory of AI and society:

- 81% said that businesses and governments should do something

- 19% said that businesses and governments should do nothing

However, a significant majority said that the regulation of AI should be avoided:

- 63% mentioned that regulations should be avoided

- 34% mentioned that regulations are needed

- 3% did not mention regulations overtly.

As we did with the singularity scenarios section in the previous article, we organized the responses into broader categories for the sake of discussion. The types of actions advocated for fall broadly under capacity building, power redistribution, standards, collective action, leverage benefits, and do nothing. A few responses fell under multiple categories.

Capacity Building

The largest category of responses is capacity building, where our respondents advocated for leaders to do something to help prepare themselves for the future impact of AI on society.

The largest single response in this category was to prepare for job loss scenarios, which appeared in 18% of total responses (6 out of 33).
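As a quick check on the arithmetic, the share above can be reproduced from the counts given in the text. This is only a minimal sketch: the helper name `share_percent` is hypothetical and is not part of the survey methodology.

```python
def share_percent(count: int, total: int) -> int:
    """Return count/total as a percentage rounded to the nearest whole number."""
    if total <= 0:
        raise ValueError("total must be positive")
    return round(100 * count / total)


# Counts taken from the text: 6 job-loss responses out of 33 total responses.
print(share_percent(6, 33))  # prints 18
```

The same helper could be applied to any other category if the underlying counts were known.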

Technological unemployment has been on workers’ minds ever since the Industrial Revolution. However, there have been fresh concerns about AI, with some worrying that humans will eventually go the way of the horse. While humans have adapted to past technological change, this trend may not continue indefinitely into the future.

Many jobs can be easily automated by AI and robotics. An Oxford study estimated that about 47% of all jobs in the United States are at high risk of being automated over the next decade or two. Our own AI researcher poll of “AI Risks” supports concerns about AI’s disruptive impact on the job market. Some economists say that in the long run, people will adapt and find new jobs. However, this might not matter if the short-term impacts are severe enough to create political turmoil.

Coming up with a solution to the problem will likely be difficult, especially as AI advances or if fundamental changes to the political economy of nations are necessary. Some solutions that have been proposed include reskilling workers, a basic minimum income, and a negative income tax.

Governments need to closely monitor shifts occurring in employment markets due to increasing automation and factor that into their policies, in particular when it comes to education and welfare. – Helgi Páll Helgason

On a political plane, we should think more about the likely future situation where human labor would not be needed or almost not needed to satisfy basic human needs. Usual market considerations might still work for more exotic and optional needs, but probably not for basic needs. – Michael Bukatin

Politicians should get serious thinking about a new political-economy in which work starts disappearing, and how to ensure that we distribute wealth and power more equitably. – James J Hughes

The next largest response for capacity building was that leaders should educate themselves on how AI works.

Understanding where the technology stands and what its implications are is vital to keeping businesses and governments from falling behind. Governments are especially slow to keep up with current trends in technology. This is known as the pacing problem, where the speed of technological growth exceeds the ability of governments to adapt. For example, a federal Department of Transportation report notes that “The speed with which [driverless cars] are advancing… threatens to outpace the Agency’s conventional regulatory processes and capabilities.”

Make sure that they are on top of the latest AI progress and that it’s part of the agenda – Igal Raichelgauz

On a similar note, some respondents also advocated that leaders think more about future consequences and about where the technology is leading us.

This reflects a concept called anticipatory governance, where governments work together with industry, scientists, and other communities to build foresight and engagement and to help steer the trajectory of a technology along a beneficial path. In a sense, it means being proactive about the problem as opposed to reactive.

Governments need to do some joined up thinking about where AI and its application to robotics is leading us overall… Governments and International bodies need forward thinking to deal with problems before they arise. – Noel Sharkey

It is important to understand how AI could be harmful and to explicitly design such systems with safety mechanisms to prevent potential harm. – Roman Yampolskiy

There are a variety of ethical issues from AI that are currently being worked on. These include algorithmic policing and sentencing systems that have been shown to be biased on factors like race, the trolley problem for autonomous cars, and whether killing a combatant with a fully autonomous weapon is inhumane under international law.

As AI becomes more intelligent, some further problems might arise:

- If a sentient AI has a richer degree of consciousness than a human, does it have more moral worth than a human? (See Daniel Faggella’s TEDx talk on this topic from 2015)

- In some situations, a singleton might exist that effectively eliminates national sovereignty. If so, would nations have a right to exist as sovereign entities?

- Do humans have some form of accountability or control over a singleton? Does anyone get a say in the creation of a singleton?

- Is it morally acceptable for humans to augment themselves to be “better” than someone else? Should people have a common “Factor X” that unites them? Or do people have full control over their own bodies outside of the societal consequences – to tinker with consciousness?

We need wider understanding of the issues and better support for those working to solve them. – Steve Omohundro

Power Redistribution

Another broad category of responses concerned where the centers of power would be in a world with post-human intelligence. Essentially, who controls what? These generally dealt with preventing monopolistic or authoritarian control over the technology.

Monopolistic behavior in the tech industry, driven by the economics of data and the network effect, has become a large issue. As pointed out in our previous article, the near-zero marginal cost of IT services produces a “winner-takes-all” effect on various markets. For example, there are no serious general-search engine competitors against Google, nor against Amazon in online marketplaces. Monopoly control over AI, especially advanced AI, could be a major problem.

Some experts also worry that the proliferation of AI and autonomous weapons could be the perfect storm for authoritarian regimes. The use of big data, lie detection, surveillance, deep-fake propaganda, and autonomous weapons could create robust totalitarianism, allowing for a small group of people to hold historically unprecedented control over populations.

Do not let any power, corporate or governmental, to get monopoly on advanced AI. – Pete Boltuc

Some artificial intelligence technologies increase the abilities and potentials of individuals, just like cars and other tools do. Other AI technologies increase the abilities of autocratic governments and other bodies that wield power over individuals… Ensuring a positive future is a tough question, but an important one. – Daniel Berleant

Monopolistic control over human augmentation technologies could be equally dangerous. For example, many products today, even John Deere tractors, limit users’ ability to modify or repair them. If human augmentation products such as cognitive prostheses were to have similar limits, people could end up dangerously dependent on a small group of companies for their health. That is before considering whether, as with the iPhone, old products might be artificially slowed down to promote the new editions.

Start regulating AI and cognitive prostheses now. This should focus on (a) ensuring no monopoly emerges at any place in the data chain for AI, (b) establishing strong limits to cognitive prostheses, (c) establishing limits to suppliers of cognitive prostheses and AI. – Eyal Amir

Collective Action and Standards

14% of our respondents believe that the international community should pool its efforts around common interests, so that shared concerns such as AI risk are managed on an international scale.

Different forms of international organizations to manage collective AI issues have been proposed in the past. For example, Erdelyi and Goldsmith’s idea of an “International Artificial Intelligence Organization” would serve as a forum for discussing international AI issues and setting standards. For a technology that could potentially be as powerful as AI, some of our respondents think that humanity should collectively guide its progress.

Artificial intelligence bears risk along several axes such as in the operation of safety-critical infrastructure, development of robots with lethal objectives, creation of societal unrest, and mass manipulation of populations. To protect against intentional or inadvertent behavior, the command and control intelligence structure should be subject to certification with an auditing process that reinforces societally accepted operation. An international board should be formed that monitors and ratifies which problems are presented for solution by artificial intelligence such that the necessary conditions for success may or may not be enabled. – Pieter J. Mosterman

I believe in anthropocentric instrumentalism. Humans should develop for humans what humans collectively decide is needed and appropriate for humanity and its future. We should get to work. – Philippe Pasquier

Some of our respondents believe that there are standards that international organizations and governments should be setting now.

- 11% of respondents mentioned that standards for weaponized AI should be developed

- 7% mentioned that privacy should be protected

- 7% mentioned the regulation of physically dangerous technologies like autonomous cars

- 4% advocated for transparency in these technologies.

In the UK a recent report from The Royal Society on Machine Learning had a lot of really sensible things to say about privacy, the effect of new technology on jobs, and so on. The government should probably take note. Also, the Department of Transport has published eight principles that should govern computer security for vehicles, which seem pretty sensible. There is probably a role for legislation in some of these areas, and business should take notice of what’s been put forward as well. Ultimately however this is like any powerful new technology. – Sean Holden

They should be devising general guidelines for development of AI, especially weaponized AI (which is already happening). – Kevin LaGrandeur

Leverage the Benefits of AI

11% of respondents mentioned that leaders should focus on leveraging the benefits that artificial intelligence will bring to society.

Artificial intelligence has the potential to revolutionize almost every industry and vastly improve the lives of many people. The use of AI in medicine alone could help humans live a longer and healthier life. Some of our respondents believe that governments should actively foster these technologies, like they did with the internet, to maximize their potential.

What makes life better? Freedom. Health. Prosperity. AI will augment the capacity of individuals, not replace them. Leader should adopt AI to ensure that all citizens can realize their full potential. Specifically, however, AI can revolutionize primary education, something we need badly, giving every young person not only access to the world’s knowledge but the ability to use it to make their own lives better. – Charles H. Martin

See the previous answer. I think AI eventually should be considered a tool for social improvement just like genetic engineering, IVF, genetic screening etc. and so similar government agencies should be created to manage its development. –

Do Nothing

The last group of respondents does not believe that anything should be done and holds that the technology should just take its course. The first group of respondents within this category thinks that trying to manage the technology will do more harm than good, or that it’s simply not needed.

Nothing. Stop listening to Elon Musk. – Roger Schank

Not try to control it… Banning or restricting AI development will have disastrous consequences, as only powerful entities and outlaws will work on it, motivations will be very skewed – away from general beneficial goals. – Peter Voss

Nothing special. AI needs no other treatment than does electricity or the Internet. The international community should worry about the real problems: rich-poor divide, nuclear weapons, political stability of the middle east, global warming, decline of democracy, human rights, terrorism, (un)sustainable development…The problem of AI is an imaginary problem. – Danko Nikolic

The second group thinks that nothing can be done about it.

Managing technologies is often difficult. Part of the problem is that when an emerging technology first arrives, there is too little information, foresight, and precedent to deal with it effectively. By the time enough information exists to solve this part of the problem, the technology is often too embedded in society to control effectively. This double-bind is known as the Collingridge Dilemma.

The other problem is that AI is ubiquitous and can easily proliferate. Arms control experts have already noted that enforcing any form of treaty on AI will be almost impossible. As discussed earlier in the article, no special infrastructure is required to create it, it can be built in modular pieces by people all over the world, and auditing the code is very difficult.

Not only are attempts at regulating AI incredibly difficult due to the nature of the technology, but successful regulation (such as open-source standards) or defection by stakeholders on agreements could produce other harms.

Abandon all hope of achieving this lofty goal! – Stephen L. Thaler

The transition will not happen by a single recognizable event. It will happen gradually, one stone at a time, just as today computers are protecting stock markets from a sudden crash, they are landing aircrafts, analyzing DNA for better diagnostics. Government or business leaders cannot do anything today to stop or reroute this progress. Intelligence is one of nature’s unstoppable forces. – Riza Berkan

Judging by our experience with nuclear technology, not much they can do. Transparency in AI development is the key, but it’s the last thing the militaries of the world care about. Open-sourcing critical AI technologies might help in creating antidotes, but it would also enable terrorists to put AI to bad use. – Igor Baikalov

Analysis

This section offers a more in-depth analysis of the data. We look at relationships and trends that were not covered in previous sections. Please note that non-applicable or uncategorizable answers were removed from the graphs.

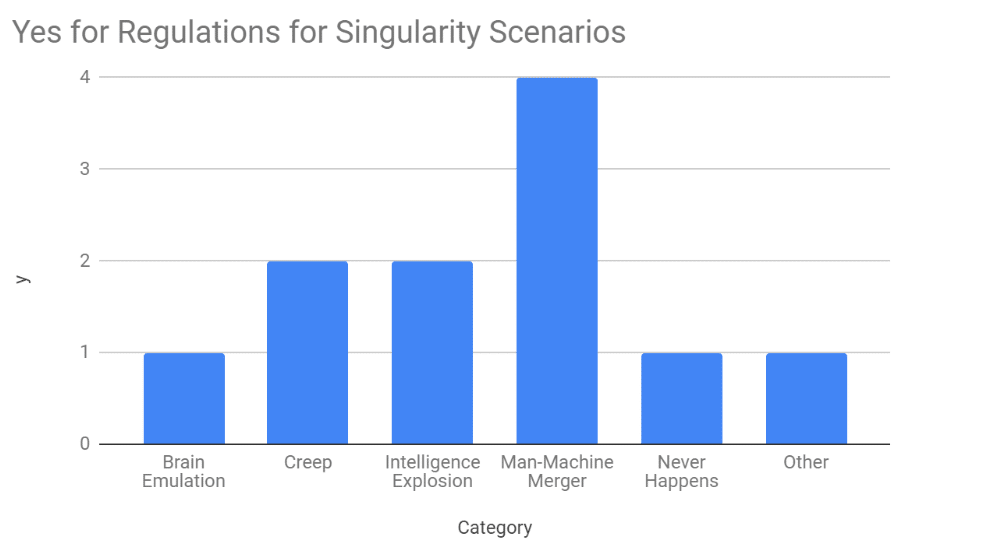

Singularity Scenarios and Business & Government Preparation

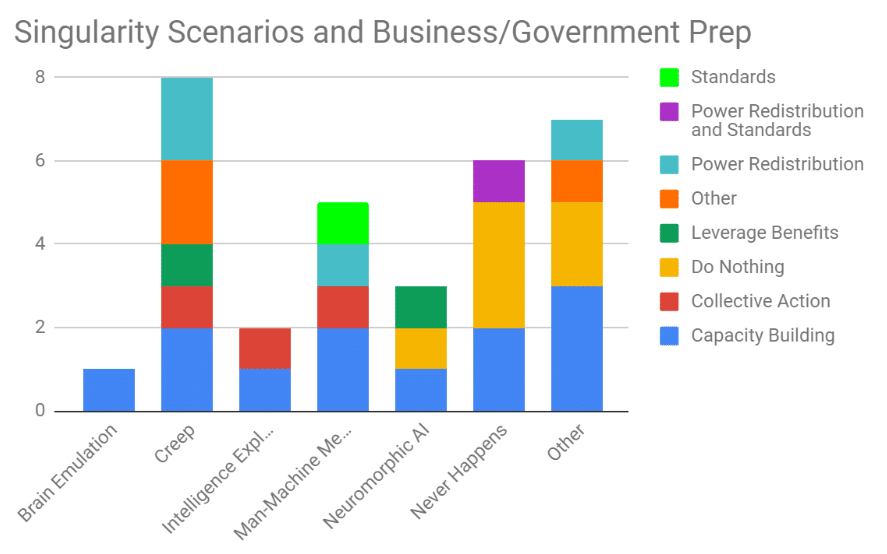

This section covers the relationship between the different singularity scenarios and what respondents thought business and government leaders should be doing to steer the future of AI.

The graph shows a relatively even distribution for capacity building across all scenarios. Capacity building included recommendations such as preparing for job loss scenarios and keeping up to date on advances in the field. This seems to be a fairly common recommendation for every scenario.

What’s not common, however, is a recommendation to do absolutely nothing. Outside of the “never happens” and “other” categories, there is only one such recommendation, which is in the neuromorphic AI scenario. The reason this respondent cited was the belief that attempts to control AI will turn out to be detrimental. Outside of this one response, everyone else advocates for leaders to do something.

Another interesting finding is the distribution of responses for power redistribution, which would include recommendations like preventing monopolies and authoritarian abuse. Outside of the “other” category, this response was found in the intelligence creep and man-machine merger scenarios.

The reason why intelligence creep has power redistribution responses is not entirely clear. Respondents were overall worried about unethical behavior from monopolistic organizations, but no reason unique to the scenario itself was cited. It could be that the universalization of AI into society means that whoever has control over AI products will wield unprecedented influence over society.

The reason why power redistribution was present in the man-machine merger scenario is likely that monopolization of neurotechnology would be dangerous due to regulatory capture. However, the exact reason the respondent cited wasn’t clear.

Man-machine merger was also the only scenario where someone actively recommended creating a set of standards for AI.

This also seems to be supported by the number of respondents who actively mentioned that AI should be regulated. Man-machine merger received twice the number of responses of the runners-up. This is likely because, if AI-powered technologies are being integrated into human bodies, establishing regulations and guidelines becomes even more important for addressing safety and ethical issues.

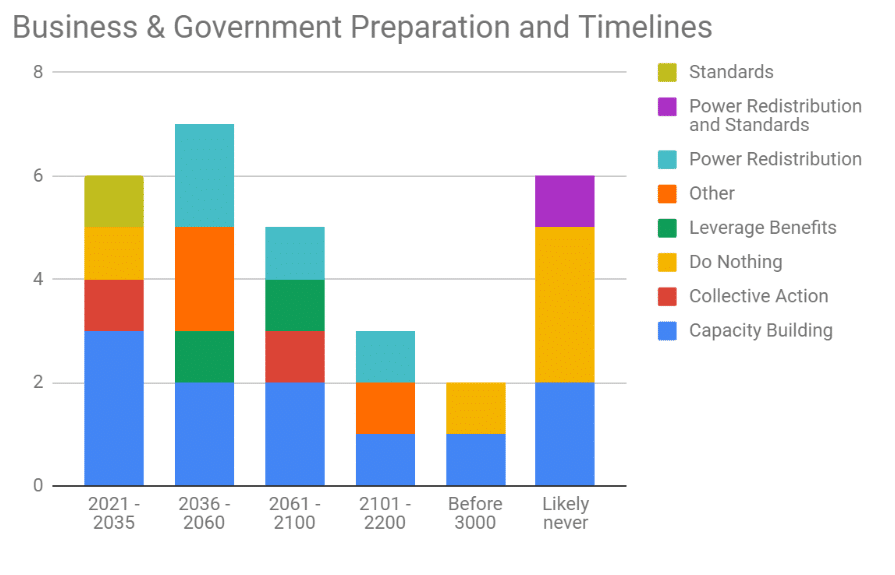

Business & Government Preparation and Timelines

This graph looks at the relationship between the advice respondents gave for businesses and governments and when they expect the singularity to occur.

The number of capacity building responses seems to trend down over time and is instead replaced by other recommendations such as power redistribution and leveraging the benefits.

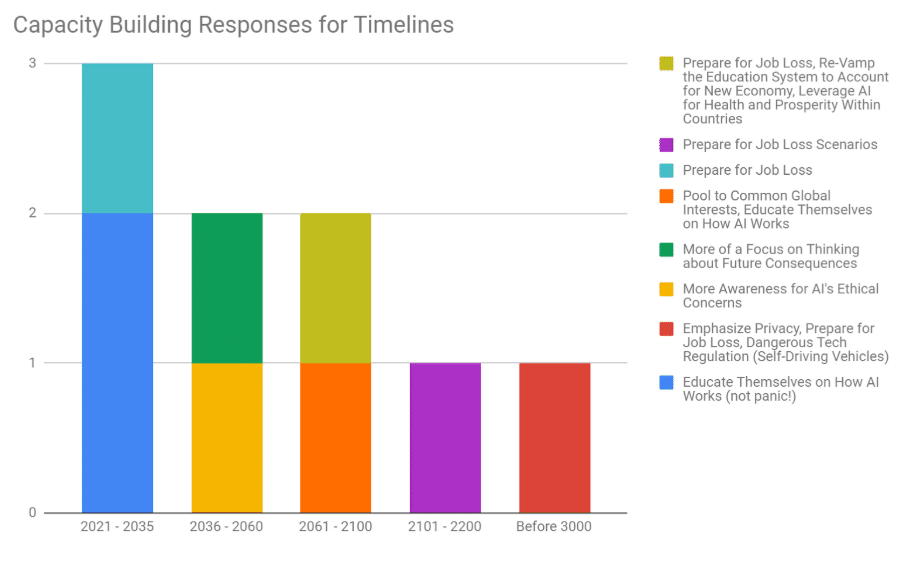

Breaking down the capacity building responses shows that the types of recommendations change over time too.

In the very near term, the vast majority of capacity building recommendations are for leaders to educate themselves on AI. In the medium-term future, capacity building responses are more focused on thinking about the future consequences of AI. Then, from 2061 on, concerns mostly reflect job loss, with some concerns for dangerous tech, privacy, and staying on top of AI developments.