This report highlights the wide array of real-world uses of machine vision in the military. Several militaries claim to leverage machine learning, in some cases working with contractors and companies to deliver AI solutions. Together, these solutions illustrate the breadth of current and near-term military applications of machine vision. The companies and organizations discussed in this report help various militaries with at least one of the following:

- Surveillance

- Autonomous Vehicles

- Landmine Removal

- Quality Control for Ordnance Manufacturing

What Business and Government Leaders Should Know

Based on both our first-hand experience at the National Defense University and our independent research, the US military appears to have only just begun asking many of the questions that business leaders were asking, and solving, three or four years ago. Colonel Cukor of Project Maven said as much at a Defense One Tech Summit:

We are in an AI arms race…It’s happening in industry, [and] the big five Internet companies are pursuing this heavily.

The Colonel’s description of commercially available machine vision technology as “frankly…stunning” may reflect the discomfort US military leaders have felt in recent years when comparing the government’s foray into AI with that of the private sector.

As this report will show, military machine vision initiatives are currently most prominent in Israel and the United States. China has also committed large budgets to modernizing its military, and AI initiatives have come with that effort. India recently launched its own large AI project with the intention of integrating AI into all three branches of its military.

In an attempt to expedite and streamline AI research and development within the US Department of Defense, the DoD announced the creation of the Joint Artificial Intelligence Center (JAIC) on June 27th, 2018. The center is led by Dana Deasy, former CIO at JPMorgan Chase and current CIO at the Pentagon.

JAIC appears to symbolize the DoD’s newfound prioritization of AI projects for military use. Deputy Secretary of Defense Patrick Shanahan wrote in the memo establishing JAIC:

This effort is a Department priority. Speed and security are of the essence…I expect all offices and personnel to provide all reasonable support necessary to make rapid enterprise-wide AI adoption a reality.

Surveillance

Project Maven

The US Department of Defense began a machine vision program in 2017 titled the Algorithmic Warfare Cross-Functional Team, codenamed “Project Maven.” The project’s intent was to develop an AI that uses machine vision to categorize and identify objects in the large volumes of footage captured by combat surveillance equipment. Project Maven was moved under JAIC’s control when JAIC was created.

Pentagon R&D, in collaboration with additional “private contractors,” developed software that integrates with the Air Force’s database of reconnaissance footage and analyzes footage taken by manned and unmanned reconnaissance vehicles. The AI flags vehicles and people for human analysts’ attention and tracks objects of interest.
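
Project Maven’s actual tracking method has not been made public. As a hedged illustration of what “tracking objects of interest” can mean, the sketch below uses a common generic technique, greedy intersection-over-union (IoU) matching of bounding boxes between consecutive frames. The names `Box`, `iou` and `GreedyIouTracker` and the 0.3 overlap threshold are our own assumptions, not details of the Pentagon’s software.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box in pixels: (x1, y1) top-left, (x2, y2) bottom-right."""
    x1: float
    y1: float
    x2: float
    y2: float

    def area(self) -> float:
        # Clamp at zero so a non-overlapping "intersection" box has no area.
        return max(0.0, self.x2 - self.x1) * max(0.0, self.y2 - self.y1)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two boxes, between 0.0 and 1.0."""
    inter = Box(max(a.x1, b.x1), max(a.y1, b.y1), min(a.x2, b.x2), min(a.y2, b.y2)).area()
    union = a.area() + b.area() - inter
    return inter / union if union > 0 else 0.0


class GreedyIouTracker:
    """Keeps a stable ID for each object across frames by matching each new
    detection to the existing track whose last box overlaps it most."""

    def __init__(self, min_iou: float = 0.3) -> None:
        self.min_iou = min_iou
        self.tracks: dict[int, Box] = {}
        self._next_id = 0  # IDs are never reused, even after a track is dropped

    def update(self, detections: list[Box]) -> dict[int, Box]:
        # Score every (track, detection) pair and consider the best overlaps first.
        pairs = sorted(
            ((iou(box, det), track_id, det_idx)
             for track_id, box in self.tracks.items()
             for det_idx, det in enumerate(detections)),
            reverse=True,
        )
        updated: dict[int, Box] = {}
        used: set[int] = set()
        for score, track_id, det_idx in pairs:
            if score < self.min_iou:
                break  # remaining pairs overlap too little to be the same object
            if track_id in updated or det_idx in used:
                continue
            updated[track_id] = detections[det_idx]
            used.add(det_idx)
        # Unmatched detections become new tracks; unmatched tracks are dropped.
        for det_idx, det in enumerate(detections):
            if det_idx not in used:
                updated[self._next_id] = det
                self._next_id += 1
        self.tracks = updated
        return updated
```

For example, a box at (0, 0, 10, 10) in one frame and (1, 1, 11, 11) in the next overlap with an IoU of about 0.68, so the object keeps its track ID, while a box elsewhere in the frame starts a new track. Production trackers typically add motion prediction and optimal (Hungarian) assignment; greedy matching keeps the sketch short.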

From material released by Google and the US Department of Defense, we can infer that the machine learning model behind the software was trained on thousands of hours of footage from smaller, low-flying drones, depicting 38 strategically relevant objects from various angles and in various lighting conditions. The objects in the footage would have been labeled as what they are known to be, such as a traveling car, a weapon, or a person. Running this labeled footage through the software’s machine learning algorithm would have trained the algorithm to discern the patterns of 1s and 0s that, to the human eye, form the video of a strategically relevant combat zone as displayed in drone surveillance footage. The Pentagon has not publicly defined the 38 objects the software flags.
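
To make the supervised-learning loop above concrete, here is a deliberately tiny sketch. It is not how Project Maven works: a real system would train a deep neural network directly on video frames, whereas this swaps in a nearest-centroid classifier over made-up two-number feature vectors. The labels (“vehicle”, “person”) and feature values are hypothetical; only the shape of the process (labeled examples in, model out, predictions on new data) carries over.

```python
from collections import defaultdict
from math import dist

Features = tuple[float, ...]


def train_nearest_centroid(examples: list[tuple[Features, str]]) -> dict[str, Features]:
    """'Training' here means averaging the feature vectors of every labeled
    example into one centroid per class."""
    sums: dict[str, list[float]] = {}
    counts: dict[str, int] = defaultdict(int)
    for features, label in examples:
        totals = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            totals[i] += value
        counts[label] += 1
    return {label: tuple(t / counts[label] for t in totals) for label, totals in sums.items()}


def predict(centroids: dict[str, Features], features: Features) -> str:
    """Label an unseen example with the class whose centroid is nearest (Euclidean)."""
    return min(centroids, key=lambda label: dist(centroids[label], features))
```

Trained on `((0.9, 0.2), "vehicle")`, `((0.8, 0.3), "vehicle")`, `((0.1, 0.9), "person")` and `((0.2, 0.8), "person")`, the model labels the unseen point `(0.7, 0.35)` as `"vehicle"`, because it lies much closer to the vehicle centroid.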

The surveillance team could then upload new, unlabeled footage into Project Maven’s software. The algorithm would then determine the contents of the footage and identify any anomalies or strategically relevant objects it has been trained to flag. The system then alerts a human operator, by means that have not been made public, and highlights the flagged objects within the video display.
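
How the system alerts its operators is not public. A minimal sketch of the filtering step might look like the following, assuming the model outputs a label, a confidence score and a bounding box for each detection; the `Detection` type, the watch list and the 0.6 threshold are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    frame: int                      # index of the video frame
    label: str                      # class predicted by the model
    confidence: float               # model score between 0 and 1
    box: tuple[int, int, int, int]  # (x1, y1, x2, y2) in pixels, for on-screen highlighting


def flag_for_review(detections: list[Detection], watch_labels: set[str],
                    threshold: float = 0.6) -> list[Detection]:
    """Keep detections of watched classes whose confidence meets the threshold,
    strongest first, so a human analyst reviews the most likely hits first."""
    flagged = [d for d in detections
               if d.label in watch_labels and d.confidence >= threshold]
    return sorted(flagged, key=lambda d: d.confidence, reverse=True)
```

Given a 0.92 “vehicle” in frame 0, a 0.41 “person” in frame 0, a 0.77 “person” in frame 1 and a 0.99 “tree” in frame 1, with “vehicle” and “person” on the watch list, only the vehicle and the frame-1 person are passed to the analyst. The threshold trades missed objects against analyst workload: lowering it surfaces more real objects but also more false alarms.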

Col. Drew Cukor commented on the data labeling process, saying, “We have a large group of people—sophisticated analysts and engineers—who are going through our data and cleaning it up. We also have a relationship with a significant data-labeling company that will provide services across our three networks—the unclassified and the classified networks—to allow our workforce to label our data and prepare it for machine learning.”

We could not find a video demonstrating the technology, likely because it is currently in use by contractors in ISIS-controlled territories and at military installations in the Middle East and Africa. In other words, many details about the software are likely classified. The known budget for Project Maven in 2017 was $131 million.

Kari Bingen, Deputy Under Secretary of Defense for Intelligence, was quoted at the Intelligence and National Security Summit hosted by INSA and AFCEA saying, “[Project Maven took] six months from ‘authority to proceed’ to delivering a capability in theater.” Bingen also described a possible next step for the project: applying this kind of algorithm to identify risks posed by individuals. She said, “There’s a lot of data sources available, and leveraging automation tools and algorithms could enable DoD to identify insider threats.”

As a hypothetical example, security camera footage of a marketplace could be monitored for objects that correlate with danger or insurgent support, such as gang insignia, gang tattoos, or partially concealed weapons, and any such objects flagged.

As of 2017, Colonel Drew Cukor led Project Maven. His title is Chief, Algorithmic Warfare Cross-Functional Team, ISR Operations Directorate, Warfighter Support, Office of the Under Secretary of Defense for Intelligence. Colonel Cukor has a decorated record of intelligence and counterintelligence work in the US Marine Corps.

Autonomous Vehicles

Autonomous vehicles are being developed today for both civilian and military use, and the two use cases impose different requirements. Military autonomous vehicles are held to a far less stringent safety standard than autonomous vehicles manufactured for civilian use in the United States. At present, military autonomous vehicles have clear applications for saving lives on the battlefield, so corner-case safety considerations are not weighed as heavily as they may be for civilian use. As an example, Boeing offers numerous autonomous craft to militaries but seems to avoid designing autonomous ground transportation.

Autonomous car companies must create a product that can safely navigate crowded streets, traffic, and traffic signs for driver and pedestrian alike, whereas military combatants rarely need to obey street signage in an emergency. Autonomous vehicles use a combination of machine vision and other technologies to navigate the world around them and safely arrive at their intended destination.

Because there are many types of military vehicles and many militaries in the world, the possibility space for autonomous vehicles may be rather large. Although the engineering of an autonomous system varies from vehicle to vehicle, business leaders reading this report can think of autonomous vehicles that rely heavily on machine vision as working in broadly similar ways.

The machine vision components of a self-driving car are initially trained using supervised learning. Autonomous vehicles carry numerous cameras mounted all around them at various heights and angles, allowing the system to see how the car is moving from every perspective. The software is trained on thousands of hours of human driving footage showing the proper and safe way to operate a vehicle, captured from various angles, in various lighting conditions, and in different weather conditions.

Certain elements of this footage would have been labeled with bounding boxes marking key indicators that the AI must learn to recognize as an important concept or rule to follow: a stop sign indicates a stop, double yellow lines should not be crossed, dotted white lines can be crossed, and so on. Vehicles built for military applications will not need to follow road law as closely, but will be trained for combat-relevant tasks, such as using machine vision to detect roads likely to cause skidding or hydroplaning at 70+ miles per hour.
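
A trained detector outputs labels, not rules, so some layer has to connect the two. The mapping below is a hedged sketch of that idea using the examples from the text; the label strings, the `Constraint` enum and the `may_change_lanes` helper are hypothetical, and real driving software encodes such rules in far richer planning logic. A military vehicle could, in principle, use a sparser rule table.

```python
from enum import Enum


class Constraint(Enum):
    STOP = "come to a full stop"
    NO_CROSSING = "do not cross this marking"
    CROSSING_ALLOWED = "marking may be crossed when safe"


# Hypothetical detector labels mapped to the rule each implies (the text's examples).
RULES: dict[str, Constraint] = {
    "stop_sign": Constraint.STOP,
    "double_yellow_line": Constraint.NO_CROSSING,
    "dotted_white_line": Constraint.CROSSING_ALLOWED,
}


def constraints_for(labels: list[str]) -> set[Constraint]:
    """Turn the labels detected in one frame into driving constraints;
    labels with no associated rule (e.g. "tree") are ignored."""
    return {RULES[label] for label in labels if label in RULES}


def may_change_lanes(constraints: set[Constraint]) -> bool:
    """Lane changes are blocked whenever a no-crossing marking is in view."""
    return Constraint.NO_CROSSING not in constraints
```

A frame containing a stop sign, a tree and a dotted white line yields the `STOP` and `CROSSING_ALLOWED` constraints, so a lane change is permitted; a frame with a double yellow line blocks it.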

This labeled footage would then be run through the software’s machine learning algorithm, training it to discern the patterns of 1s and 0s that, to the human eye, form the video of a car driving safely as displayed in the mounted camera footage.

Combining this machine vision data with data from other sensors, such as gas pedal data or lidar data that tracks the distance between the lidar unit and surrounding objects, allows the autonomous vehicle to be trained more comprehensively than with machine vision data alone. Discover additional information on autonomous vehicles and their civilian applications in this recent timeline.
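
One simple way to picture combining camera and lidar data is a decision rule that requires both sensors to agree before acting. The sketch below is an assumption, not any manufacturer’s method: the obstacle labels and the 2-second time-to-collision threshold are illustrative values. The text describes fusion during training, whereas this sketch shows it at decision time, where the idea is easiest to see.

```python
def time_to_collision(range_m: float, closing_speed_mps: float) -> float:
    """Seconds until contact at the current closing speed; infinite if the gap
    is constant or growing."""
    if closing_speed_mps <= 0:
        return float("inf")
    return range_m / closing_speed_mps


def should_brake(camera_label: str, lidar_range_m: float, closing_speed_mps: float,
                 obstacle_labels: frozenset[str] = frozenset({"vehicle", "person"}),
                 ttc_threshold_s: float = 2.0) -> bool:
    """The camera answers *what* the object is; the lidar answers *how far away*
    it is. Brake only when both indicate danger."""
    return (camera_label in obstacle_labels
            and time_to_collision(lidar_range_m, closing_speed_mps) < ttc_threshold_s)


MPH_TO_MPS = 0.44704  # exact: 1 mile = 1609.344 m, 1 hour = 3600 s
```

A person 15 m ahead with a 10 m/s closing speed gives 1.5 seconds to contact and triggers braking; the same person at 50 m does not, and neither does an object the camera classifies as a shadow. At 70 mph (about 31.3 m/s), a 2-second threshold corresponds to roughly 63 m of lidar range.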

The vehicle could then be commanded to drive to a location that does not appear in its labeled training data. The algorithm behind the software would then drive the vehicle and its passengers safely to that location.

Israel Aerospace Industries

Israel Aerospace Industries (IAI) received a contract from the Israeli Army for two separate, partially autonomous vehicles: the adjustable Robattle and the autonomous bulldozer Panda. The vehicles can reportedly operate autonomously on certain tasks and can also be remote-controlled. Robattle can adjust its build configuration, allowing it to climb over certain large obstacles or flatten itself to drive under obstructions. Strategically, Robattle can be used to deliver supplies or information to soldiers on a front line, or to rescue soldiers in danger, without putting military personnel in harm’s way.

Panda, an automated bulldozer, could be used for key demolition tasks in combat scenarios where driving the bulldozer would be too dangerous for a human.

IAI claims that converting this bulldozer could set a precedent for the military, allowing older equipment to be repurposed for further use.

Below is a short 4-minute video demonstrating the automated bulldozer and Robattle in action:

IAI is Israel’s primary defense contractor. Established in 1953, it currently employs 1004 people in the defense industry. Amira Sharon is CTO at IAI. Her exact academic credentials are not known, nor is much information about IAI’s C-level leadership; this information is largely concealed given the company’s direct ties to Israeli military operations and ongoing conflicts. Meir Shabtia, who has been quoted on the AI project, is Vice President of R&D at G-Nius Unmanned Ground Systems, an IAI company. He holds a Master’s in Engineering from Tel Aviv University.

Landmine Removal

Lebanese Army

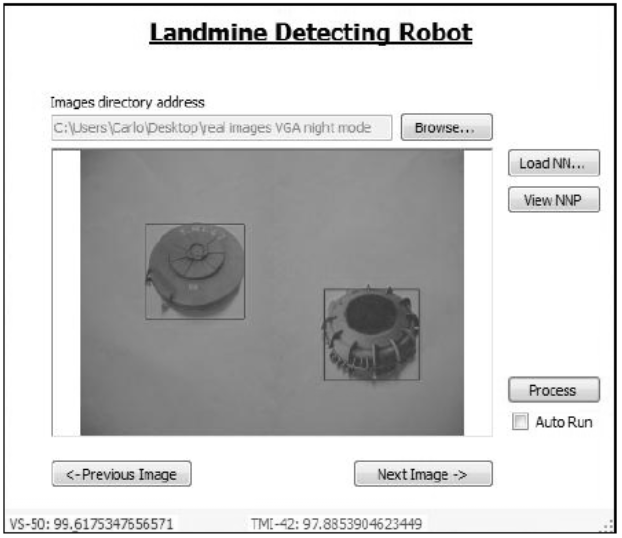

Achkar and Owayjan, of the American University of Science & Technology in Beirut, Lebanon, published a paper on a machine vision system intended for current Lebanese military use. The paper claims the software platform could aid military efforts to remove live landmines by adding machine vision to their imaging software.

Achkar and Owayjan claim the Lebanese Army can integrate the software into the imaging software used on its landmine-detecting robots.

The paper states that the machine learning model behind the software was trained on thousands of images of two types of anti-tank mines, shown covered, partially covered, and upside down, from various angles and in various lighting conditions. Each image would have been labeled with its specific landmine type. Running these labeled images through the software’s machine learning algorithm would have trained it to discern the patterns of 1s and 0s that, to the human eye, form the image of one of the detectable landmines as displayed in a photo.

The user could then upload an unlabeled photo into the AI. The algorithm would then determine where in the photo a landmine is present, and the system alerts a human observer by drawing a red box around the landmine on the displayed photo.
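
The red-box display is the simplest part of this pipeline to illustrate. Assuming the detector returns inclusive pixel coordinates for each landmine, the sketch below draws an outline onto an image held as rows of RGB tuples, in pure Python so it runs without dependencies. The paper does not say how its display is implemented; in practice an imaging library such as Pillow (`ImageDraw.Draw(image).rectangle(xy, outline=...)`) would do this in one call.

```python
RGB = tuple[int, int, int]
RED: RGB = (255, 0, 0)
BLACK: RGB = (0, 0, 0)


def blank_image(width: int, height: int) -> list[list[RGB]]:
    """A black image stored row by row (image[y][x])."""
    return [[BLACK] * width for _ in range(height)]


def draw_box(image: list[list[RGB]], x1: int, y1: int, x2: int, y2: int,
             color: RGB = RED) -> None:
    """Draw a one-pixel rectangle outline in place. Coordinates are inclusive
    (row = y, column = x) and are clipped to the image bounds."""
    height, width = len(image), len(image[0])
    x1, x2 = max(0, x1), min(width - 1, x2)
    y1, y2 = max(0, y1), min(height - 1, y2)
    if x1 > x2 or y1 > y2:
        return  # box lies entirely outside the image
    for x in range(x1, x2 + 1):  # top and bottom edges
        image[y1][x] = color
        image[y2][x] = color
    for y in range(y1, y2 + 1):  # left and right edges
        image[y][x1] = color
        image[y][x2] = color
```

Drawing a box from (1, 1) to (3, 3) on a 5×5 image colors the eight border pixels red and leaves the center pixel (2, 2) untouched, so the mine itself stays visible inside the outline.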

Below is a photo demonstrating how the machine vision software displays the landmines it has detected:

Achkar and Owayjan claim their system has a 99.6% identification rate on unobscured landmines, with lower rates depending on the level of obscurity and the landmine model. Because this is a humanitarian effort funded by a university, there are no marquee clients to report on.
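
An identification rate like the 99.6% figure is a per-condition ratio: mines correctly identified divided by mines shown, computed separately for each level of obscurity. The helper below is a hedged sketch of that bookkeeping; the condition names and sample results are invented for illustration and are not the paper’s data.

```python
from collections import Counter


def identification_rates(results: list[tuple[str, bool]]) -> dict[str, float]:
    """Given (condition, was_identified) pairs from a labeled test set,
    return the fraction of mines identified under each condition."""
    totals: Counter[str] = Counter()
    hits: Counter[str] = Counter()
    for condition, identified in results:
        totals[condition] += 1
        hits[condition] += identified  # True counts as 1, False as 0
    return {condition: hits[condition] / totals[condition] for condition in totals}
```

For example, three hits out of four unobscured test images and one hit out of two partially covered images give rates of 0.75 and 0.5. Reporting rates per condition rather than as one pooled number keeps easy, unobscured cases from masking weaker performance on obscured ones.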

Roger Achkar is the dean of engineering at the American University of Science & Technology, Beirut, Lebanon, and a co-author of this paper. He holds a PhD in Energetic Systems and Information from the University of Technology of Compiègne, France. Michel Owayjan is the Chairperson of the American University of Science & Technology. He holds a Master’s in Engineering Management from the American University of Beirut.

Quality Control for Ordnance Manufacturing

Integro Technologies

Integro Technologies works under contract with US Army manufacturers, providing software it claims can help industrial manufacturers quality-control their armaments using machine vision. Integro offers a machine vision device that performs a 360-degree verification of the object it has been pre-trained on. We will focus on one use case of this technology: a device that detects fractures and imperfections on the surface of a bullet casing or grenade down to 0.004 mm and sorts cleared items from uncleared ones.

We can infer that the machine learning model behind the software was trained on thousands of 3-D scans showing perfect and imperfect bullets from various angles and in various lighting conditions. The scans would have been labeled as indicating either a flawed bullet or a satisfactory one. Running these labeled scans through the software’s machine learning algorithm would have trained it to discern the patterns of 1s and 0s that, to the human eye, form the bullet as displayed in a 3-D scan.

The machine uses its mechanical arm to grab the bullet being verified and then takes 3-D photos of it, which are uploaded to the software. The algorithm then distinguishes bullets without imperfections from bullets with surface imperfections, and the system separates the cleared bullets from the flawed ones.
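
The final sorting step reduces to a pass/fail decision per part. The sketch below assumes, for simplicity, that the scan yields a list of measured flaw sizes and applies the 0.004 mm figure as a hard cutoff; the device described above instead relies on a trained model to judge the scan, and the `ScannedPart` type and serial numbers are hypothetical.

```python
from dataclasses import dataclass, field

# Smallest flaw size the text attributes to Integro's device, in millimeters.
DETECTION_LIMIT_MM = 0.004


@dataclass
class ScannedPart:
    serial: str  # hypothetical identifier for one casing or grenade
    defect_sizes_mm: list[float] = field(default_factory=list)  # flaws measured in the 3-D scan


def is_cleared(part: ScannedPart, limit_mm: float = DETECTION_LIMIT_MM) -> bool:
    """Cleared if no flaw at or above the detection limit was found
    (anything smaller is treated as below the device's resolution)."""
    return all(size < limit_mm for size in part.defect_sizes_mm)


def sort_parts(parts: list[ScannedPart]) -> tuple[list[str], list[str]]:
    """Split parts into (cleared, rejected) serial lists, like two output bins."""
    cleared: list[str] = []
    rejected: list[str] = []
    for part in parts:
        (cleared if is_cleared(part) else rejected).append(part.serial)
    return cleared, rejected
```

A part with no flaws and a part whose only flaw measures 0.002 mm both land in the cleared bin, while a part with a 0.010 mm flaw is rejected.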

Below is a short 1-minute video demonstrating how the company’s software and device work. Note this video is showing the verification of wide metal rings and not bullets as detailed above:

Integro Technologies claims to have helped the manufacturers responsible for a portion of the bullets and grenades produced for the US Army. Our research yielded no case studies for their software; however, their website does display numerous videos of their machine vision technology verifying clients’ manufactured objects. Another marquee client Integro cites testimonials from is Procter & Gamble.

Integro Technologies is a privately owned company established in 2001 with 48 employees. It has received no venture funding. Shawn Campion is the CEO of Integro Technologies. He, like all of the other engineers listed on the company’s website, holds a bachelor’s degree in mechanical engineering from Penn State. Campion previously served as a senior engineer at the machine vision company Cognex Corporation.

Header Image Credit: Traffic Safety Store