The general premise of this article is different from most of my previous AI Power articles.

While most of the articles in this series have related to the near-term struggles for power between organizations and governments with regard to regulation, data, and international policy, this article will focus on the long-term trajectory that AI and technology are headed toward and what that means for the most powerful nations and organizations.

In the long term (15-40 years ahead), the power struggles around AI will not end with economic and military competition. Ultimately, AI power will involve determining the trajectory of intelligence itself.

This might involve the creation of astronomically powerful artificial general intelligence (AGI) and/or the creation of vastly more capable and powerful cognitively enhanced humans (transhuman transition).

Microsoft’s $1B investment in OpenAI was the beginning of what will become a much more overt set of commercial and national efforts to determine who should build superintelligence, and that race will become an arms race unless humanity makes a deliberate effort to avoid that dynamic.

That’s what this essay is about.

I’ll begin with a set of propositions about the explosion of AI and neurotechnology capabilities in the near future, and I’ll posit a series of steps that I believe business and government leaders could take to avert war or grand conflict and promote a better AI-enabled future.

This is not a complete set of ideas; it is a formalization of the same clarion call I’ve been sounding since 2013, namely:

Humanity needs to discuss and determine what it should turn itself into (i.e. the trajectory of intelligence itself) and humanity needs to come together globally to discuss and determine how to reach those post-human futures without horrendous, competitive, violent conflicts.

When I’m called upon to speak for the United Nations or INTERPOL today, it’s about the near-term security implications of AI (deepfakes, cybersecurity, policing, defense). Within the next year or two, I hope to encourage that conversation to shift more towards discerning a positive path forward into a future that will involve cognitive enhancement (brain-machine interface), strong AI, and radical shifts in geopolitics and the human condition.

Where power is at stake, competition and conflict will be the norm unless an alternative dynamic is consciously established and worked toward. When it comes to humanity guiding the future of intelligence: We unite or we fight.

That’s the hypothesis of this essay.

From my first TEDx presentation in 2014 to articles on danfaggella.com going back years before that, this theme has occupied my time, and it is what compelled me to found Emerj in the first place.

I see this set of ideas as a first attempt at squaring the circle of the power struggles of the next forty years, and I welcome all challenges to its premises, suggested courses of action, and conclusions.

We’ll begin with the premises.

Post-Human Premises

Technology premises:

- Super-powerful AI will become viable around 2040-2060. AI that approaches human-level intelligence will overhaul economies, power dynamics, and nearly all elements of human society (see Emerj’s research on Singularity timelines).

- Human cognitive enhancement will be viable around 2040-2060. People will be able to augment their emotional and cognitive capabilities and experiences via neural interfaces, drastically altering the human experience (i.e. transhumanism, see my essay called “Tinkering with Consciousness”).

Moral Premise:

- The trajectory of post-human intelligence is the most important matter conceivable up to this point in history. Humans are dominant on earth and are morally valuable because of our rich consciousness and our ability to understand and create. The emergence of radically cognitively enhanced humans, or of super-intelligent AI with a drastically greater ability to understand, will be the most morally consequential event on earth.

Premises on the Nature of Man and Power:

- Humans will selfishly do whatever behooves their aims. Humans are neither moral nor immoral, but amoral. Humans can be expected to act in their own best interest at all times and under all circumstances. This is a theory known as psychological egoism, which has been elaborated on by policy thinker Nayef Al-Rodhan (listen to our interview with Al-Rodhan here).

- An arms race is the default condition for emerging technologies; it is part of the state of nature. Individuals or groups will aim to exert economic or physical control or quash competitors. Unchallenged relative power is the goal.

- A war in the era of AGI would be an existential threat to humanity. An arms race around super-powerful AI and cognitive enhancement technologies might result in a positively catastrophic war with tremendous detrimental impacts across the globe, possibly including human extinction via autonomous weapons, AGI, biological weapons, radioactivity, and nuclear strikes (see: de Garis, “Gigadeath”).

- All species simply die off or evolve into something else. Given a long enough time horizon, the most intelligent beings (those who will be commanding the future trajectory of intelligence after them) will not be human beings. Just as humans emerged from some long-lost ancestor, over a long enough time horizon we will either become extinct or become a long-lost ancestor to something beyond humanity.

- Technological development is a kind of sped-up evolution. It may be possible to slow evolution, but not to stop it. The best humanity can do is guide the evolution of intelligence (the gradual shift from current technologies to new technologies, and from current species to new species) in the direction that we believe is most beneficial.

The Need for Global Governance and Solidarity

If the premises above hold true, then staving off war or existential risk would potentially involve:

- Creating governance systems and international collaboration that keep AI and transhumanism from becoming a competitive country-vs-country dynamic, so that humanity can determine what would be best for all humans.

- Making the issue of the post-human transition and future intelligence known and important to both the global citizenry and the leaders of the most powerful nations and organizations.

In order to achieve this kind of united governance effort, humanity should establish:

- A global governance structure, and

- A felt sense of solidarity among humans – or at least a felt sense that aggression and lack of trust are undesirable as they risk mutually assured destruction

A globally united future intelligence governance structure would need to:

- Determine and maintain a living “map” of future intelligence scenarios (Steering). This would be a map of scenarios humanity might move toward, including preferable and non-preferable ones. It would require buy-in from the global populace and the leaders of nations, and would involve a plan for maximizing safe and beneficial global intelligence development.

- Determine a living set of standards around the lawful development and use of intelligence tech (Transparency). This group would need to detect and deter stockpiling, aggression, and foolhardy development of emerging tech and AI. This would have to be vastly more complex and nuanced than current nuclear non-proliferation agreements and would involve a responsive and evolving set of laws and rules, and a robust global task force for ensuring transparency.

Human Solidarity in the Face of Drastic Change and Evolution

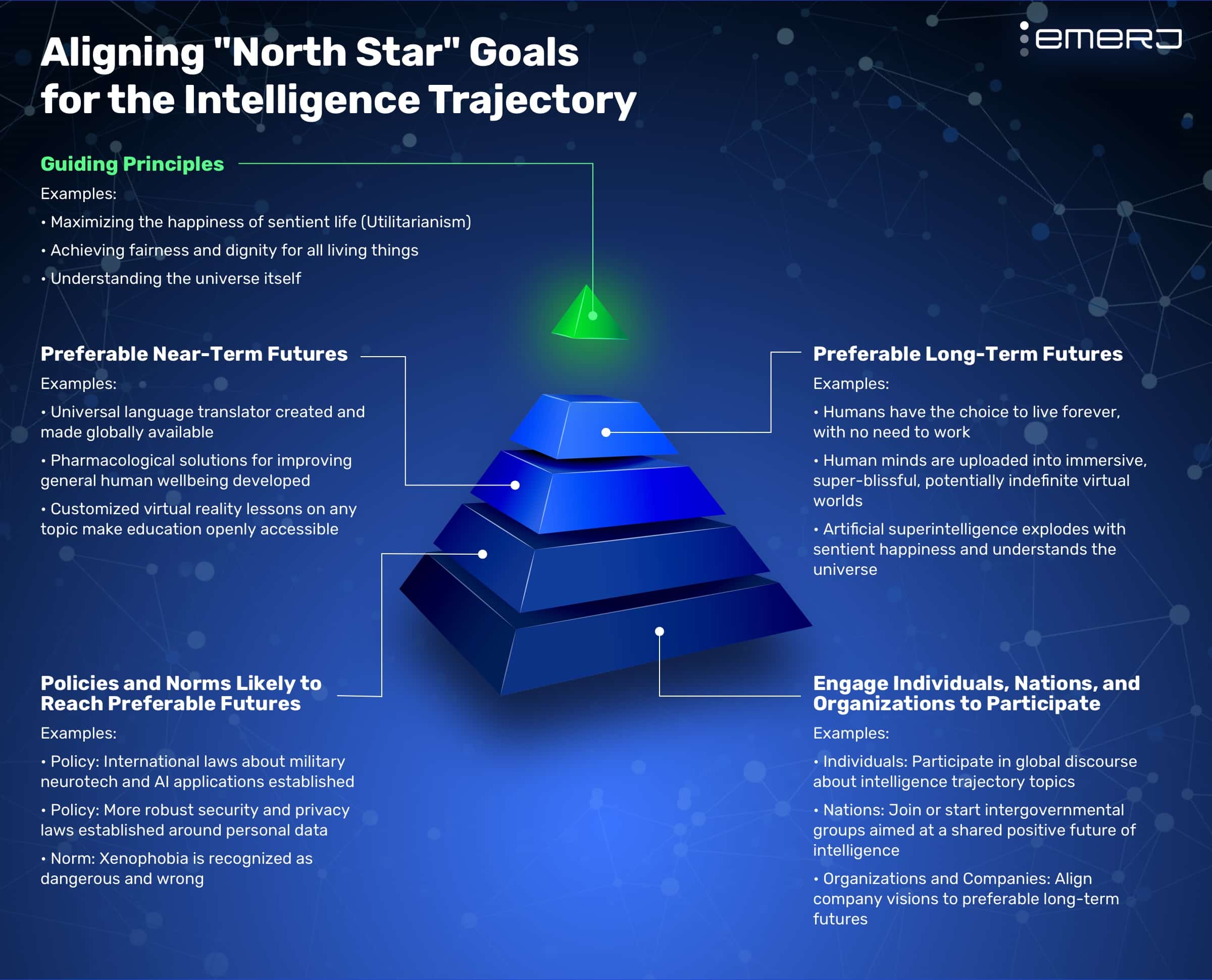

To begin construction of global governance structures, humanity will need to determine preferable and non-preferable goals and destinations for the future of intelligence itself. This would likely involve the following steps:

- Determine ultimate distant goals and values (brilliant, desirable, but far-away futures)

- Discern preferable and non-preferable long-term (say, 100-200 years) scenarios for humanity or intelligent life

- Discern preferable and non-preferable short-term (say, 20-60 years) scenarios for humanity or intelligent life

- Determine the current policies, international relations norms, and technology norms that would need to be in place to get to those initial post-human transition scenarios

- Determine the role of individuals, nations, and organizations in shaping the short-term scenarios above

This process is obviously more complicated than five bullet points and would need to be constantly iterated upon and adjusted as humanity moves forward.

That said, I argue that those five general aims should be fleshed out internationally if we aim to avoid war and head into future intelligence scenarios with the best chance of avoiding catastrophe.

Here’s how these priorities might be represented visually:

There have been a great number of books and essays written on the topic of peace and human solidarity. I’ve drawn value from the history surrounding the Peace of Westphalia and the formation of the United Nations. History is rife with instances of conflict and resolution, and with assessments of these cycles, from Ibn Khaldun’s Muqaddimah to Kant’s Perpetual Peace.

I’ll leave a deeper assessment of this subject to more qualified scholars, but I’d like to address a few important themes and topics that I believe to be both timely and specifically relevant to the current AI arms race.

Encourage Open Communication

Groups who communicate openly are more likely to understand each other’s humanity and are less likely to be intimidated by each other’s differences. Xenophobia and nationalism seem much more likely to thrive where an isolated group can be told only stories of its superiority and about the inferiority of others.

AI for Language Translation

Having a universal real-time language translator will be one of the most important natural language processing developments of the century. The ability to converse freely with anyone about anything will permit more of humanity to forge bonds (for business, for friendship, for enjoyment of the arts). While this may also allow for more verbal conflict, it seems likely that association and trade build bonds that make war less likely (see: Kant’s Perpetual Peace).

Permit Relatively Unhindered Communication Across Borders

This may be particularly important for cultures that are currently cut off from one another. The way I see it, there is very little downside to having more cross-border communication between the US and China (English to Mandarin, Mandarin to English), other than the fact that the US and Chinese governments would use this information in espionage efforts.

It is possible that the forging of person-to-person social bonds across borders may do more aggregate good than the spying and “listening in” by the CIA or CCP would do harm.

Multilateral Effort for Tech Development and Governance

Governments and citizenries should find ways to organize in order to reach a consensus on the future trajectory of intelligence, and on the management of incentives and groups involved in AI and neurotech. This might involve:

- Intergovernmental organizations and conferences to encourage ideation, discourse and collaboration

- University programs, exchange programs for youth

(Note: There is a real issue with these efforts becoming propaganda or espionage tools for tyrannical ruling parties, and that is a longer issue I’ll have to address elsewhere.)

Rule of the People

Democracies rarely go to war with one another (see: democratic peace theory). Educated people are usually unwilling to send their children to war and to cause mayhem with a potential trading partner. It may be my own Western bias, but I believe quite firmly that more democracies would mean more peace, more adherence to human rights, and a greater ability to engage in multilateral global collaboration through organizations like the World Bank or entirely different kinds of organizations.

Challenges, Opportunities, and Reasons to Act

Challenges

- United governance may be impossible without war, and war given today’s weapons might mean the extinction of humanity. Even after such a war (if we survived), united governance may be impossible.

- It may be impossible to govern these technologies transparently (see Bostrom’s “sand and a microwave” analogy; moreover, AI is just code, which is far easier to conceal than physical materials).

- It may be the case that an ungoverned proliferation of AI/neurotech and the conflict that results therefrom would create a vastly more capable and powerful AI which would have a much greater ability to survive, learn, and populate the galaxy. It is impossible to tell what paths would lead to more or less suffering.

- Some cultures are not accustomed to, or especially interested in, liberal freedoms (freedom of speech, elected officials) and may lack the will to change their governance structures from dictatorships (e.g. China, with its Confucian values, history of emperors, and the “Mandate of Heaven”).

Opportunities

- We may be able to eliminate extreme poverty and remove the incentives for international war.

- We may be able to maintain our consciousnesses for a long time in expansive and blissful worlds (see previous essays: Sugar Cubes, Digitized and Digested).

- We may be able to proliferate sentient good on earth (AI or uplifting), and proliferate utilitarian good across the galaxy and possibly beyond (see my TEDx talk: “Can AI Make the World a Better Place?”).

- We may be able to leverage technology to grow the cosmopolitan spirit across all nations and boundaries, making a felt sense of solidarity become the norm (see Ibn Khaldun’s idea of solidarity).

Reasons to Act

- By default, the development of AI and neurotechnologies will take place in an arms race dynamic between the most powerful international players (mostly the USA and China, with some other nations in the mix as innovators, while most smaller and weaker nations will attempt to be regulators), and only deliberate global effort is likely to deter overt conflict.

- If we don’t destroy ourselves, future intelligences from earth will potentially be able to populate the galaxy and explore and discover billions of times more about the universe than we humans ever could.

- If there is a meaning or purpose to the cosmos, AGI is vastly more likely to discover it than human beings (who have no such ultimate understanding).

- The ability to mold the future of intelligence is the highest conceivable creative act of humanity and the most morally consequential action we could possibly take.

While I believe there are compelling reasons to bring these post-human transition issues to the forefront for international governance and collaboration, there is absolutely no clear path forward. Indeed, some of the variables and factors involved make the following goals near-impossible without conflict: (a) global human solidarity, and (b) shared goals for the development of AI and neurotechnologies.

Factors that may prevent a peaceful intelligence takeoff altogether include:

- These technologies develop faster than a bureaucracy can respond to them. Tech governance is hard; global tech governance (i.e. more bureaucracy) is almost certainly much harder.

- Agreement on preferable human futures (“North Star goals”) is probably impossible, and agreement on how they should be regulated is also probably impossible.

- Forecasting the risks and opportunities of new and powerful emerging technologies is essentially impossible.

- This list is potentially endless

Concluding Thought: “To the Strongest”?

The crux of the matter here is incentives.

Nations and organizations will be incentivized to (a) develop or control artificial general intelligence, and (b) appear virtuous and benevolent while doing so. The goal will be what the goal has always been:

Unassailable relative power.

A “moat” of hegemonic power so vast that the winning entity becomes the shot caller of the planet and the greatest prime mover of the future. The group that dominates these technologies will almost certainly be the most powerful and potentially final human empire (essay: Final Kingdoms).

I’ve said it before in my essay called Substrate Monopoly, but here’s the general premise:

For the remainder of the 21st century, all competition between the world’s most powerful nations or organizations (whether economic, political, or military) is about gaining control over the computational substrate that houses human experience and artificial intelligence.

As people live more and more in virtual worlds, and as AI and neurotech drastically alter the human condition and the nature of society, the power struggle for ultimate control will come to the fore. Without a direct plan and overt international efforts to avoid conflict and an arms race, all we’ll get is conflict and an arms race.

The natural state will be what the natural state always is: The state of nature. Competitive, violent, with cooperation occurring only so long as it behooves the parties involved.

We unite, or we will fight. And in a world of cognitively enhanced human beings and super-powerful AI-enabled weapons, fighting doesn’t do much good for anyone.

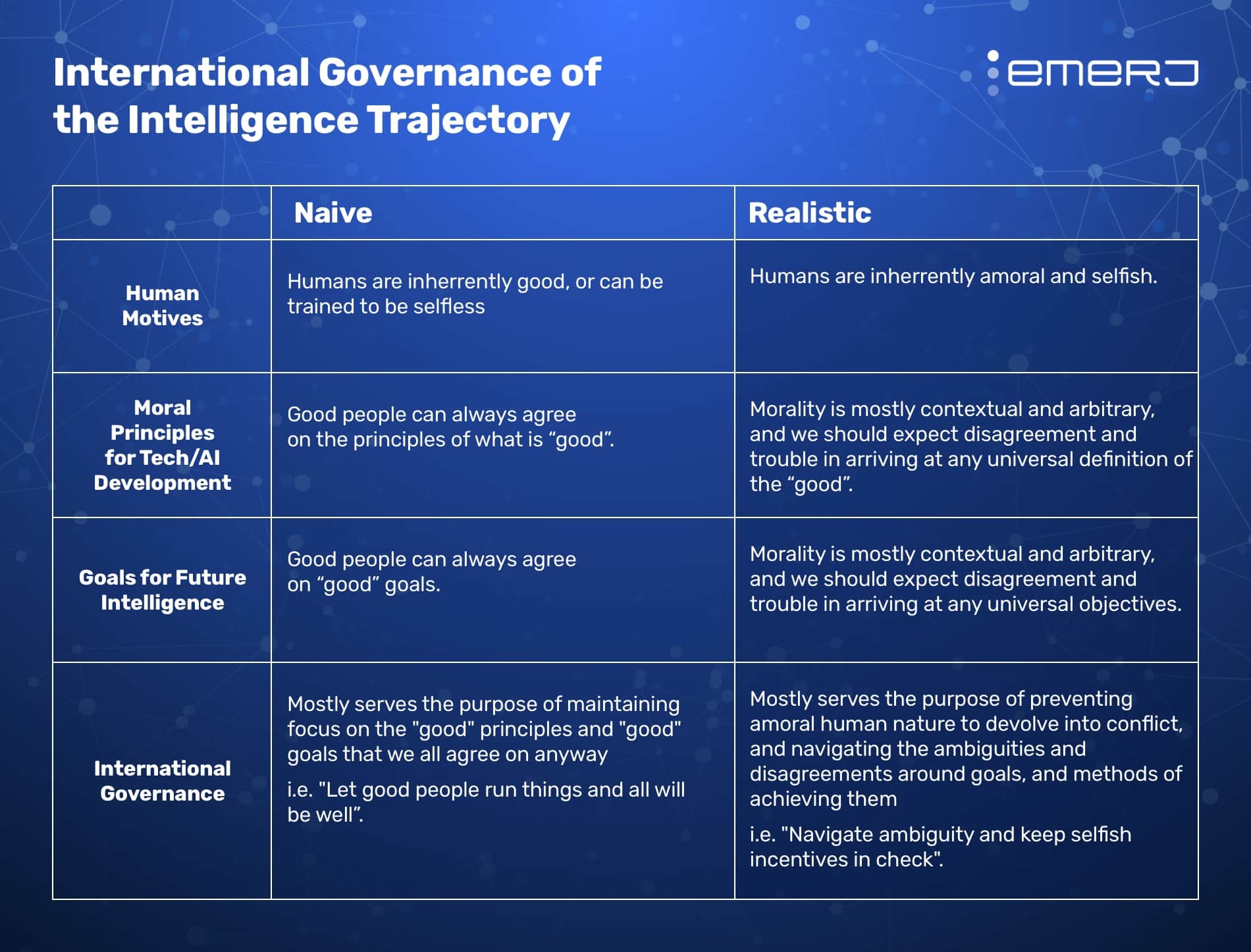

Many of the initial responses to this global loggerheads scenario are childish and unrealistic:

- Convince people of a singular moral good to agree upon and simply have that be a shared human goal (literally impossible)

- Teach humanity to stop being selfish and amoral (literally impossible)

I believe that any realistic approach to creating an international order that steers humanity toward the shared post-human goals we believe are good must:

- Accept the selfish nature of man, including ourselves, our allies, and all other humans and groups

- Admit there is no universal “right” for values or morality and we must live in and deal with that grey area permanently

- Find an international order that ensures broad benefits to humanity and hedges directly against selfish motives

I hope sincerely that we’ll be able to endure the transitions ahead.

On his deathbed, Alexander the Great was asked:

“My king, to whom will we give the kingdom?”

He is purported to have replied:

“To the strongest.”

Alexander was right. The kingdom goes to the strongest.

So what’s stronger: Human selfishness and tribalism or human solidarity in the face of insurmountable challenge?

Time will tell.