In this installment of the FutureScape series, we discuss what our survey participants had to say about how we’d reach the singularity.

For those who are new to the series, we interviewed 32 PhD researchers (almost all of whom are in the AI field) and asked them about the technological singularity: a hypothetical future event in which computer intelligence would surpass human intelligence, with profound consequences for society.

Reading them isn’t necessary to understand this article, but the other FutureScape articles are listed below:

- When Will We Reach the Singularity? – A Timeline Consensus from AI Researchers

- (You are here) How We Will Reach the Singularity – AI, Neurotechnologies, and More

- Will Artificial Intelligence Form a Singleton – or Will There Be Many AGI Agents? – An AI Researcher Consensus

- After the Singularity, Will Humans Matter? – AI Researcher Consensus

- Should the United Nations Play a Role in Guiding Post-Human Artificial Intelligence?

- The Role of Business and Government Leaders in Guiding Post-Human Intelligence – AI Researcher Consensus

How We Will Reach the Singularity

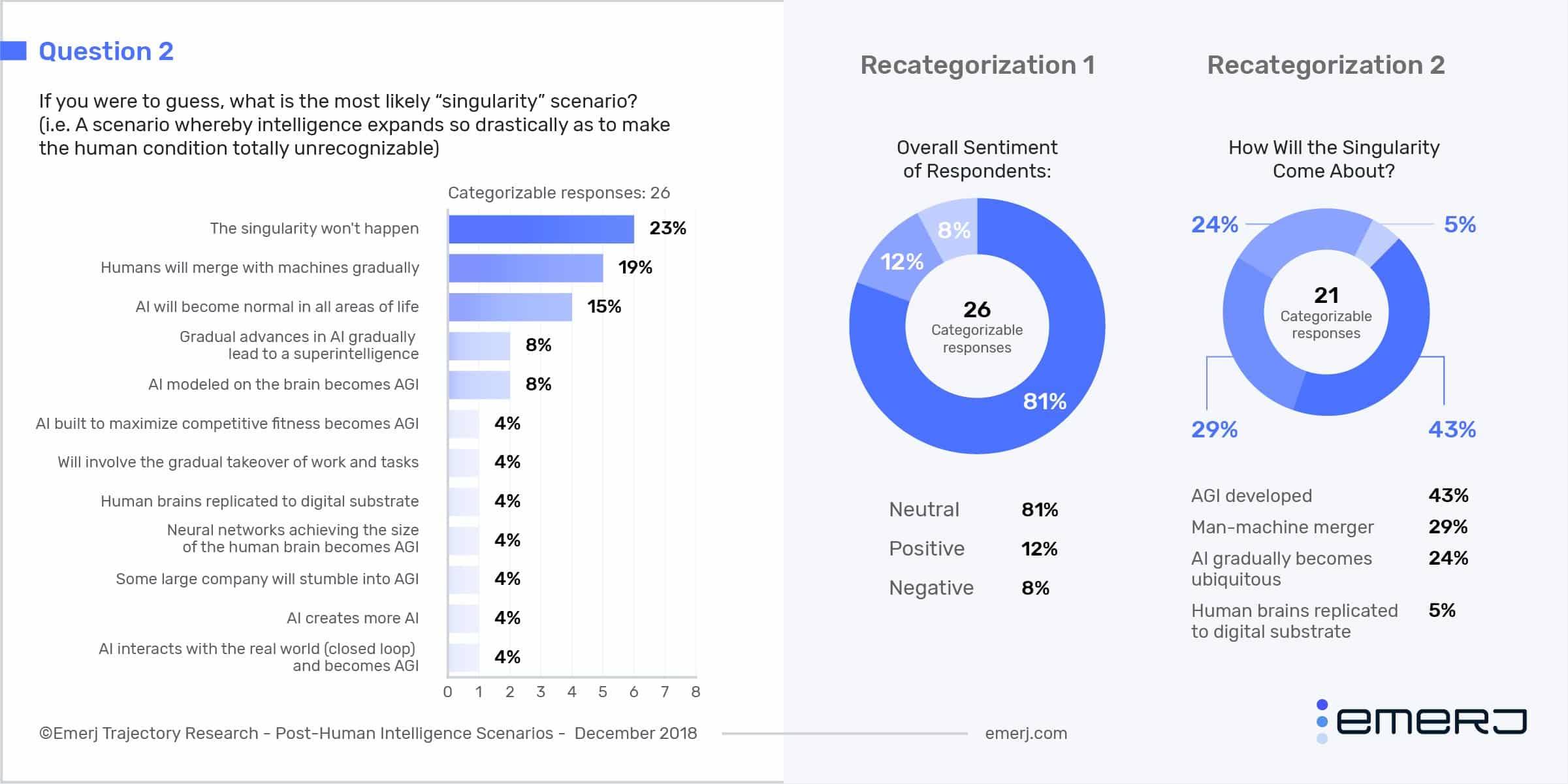

Our experts described many different paths to the singularity. We gave them the freedom to describe the path they expect to see (in free text), and we then categorized the responses into several scenarios.

The most popular view was that the singularity would come about through the development of artificial general intelligence (AGI). This is not surprising, given that our interviewees were mostly (but not entirely) AI researchers. We might expect a different array of answers from experts in neuroscience or nanotechnology.

A full 43% thought that AI would eventually develop general reasoning capabilities, allowing it to solve problems with common sense in a way similar to humans.

Another 24% believed that AI would gradually become integrated into everything, automating and fundamentally changing fields from transportation to business to scientific research.

On the gradualist path, we would slowly cede our autonomy to AI as some of the most important tasks in society are automated by AI tools. Developing a general intelligence, by contrast, would mean creating a super-powerful tool that could do just about anything a human could, and maybe more. A human-level general intelligence could, as we will explore later, have the power to reshape or destroy society (see our CEO’s essay Is Artificial Intelligence Worse Than Nukes?).

We broke our participants’ responses down into six broader categories for discussion:

- Intelligence creep

- Neuromorphic AI

- Human-machine merger

- Brain emulations

- Intelligence explosion

- The singularity never happens

Some responses were also categorized as “other” because they were either incomprehensible or did not answer the question. These uncategorizable responses are left out of the discussion below.

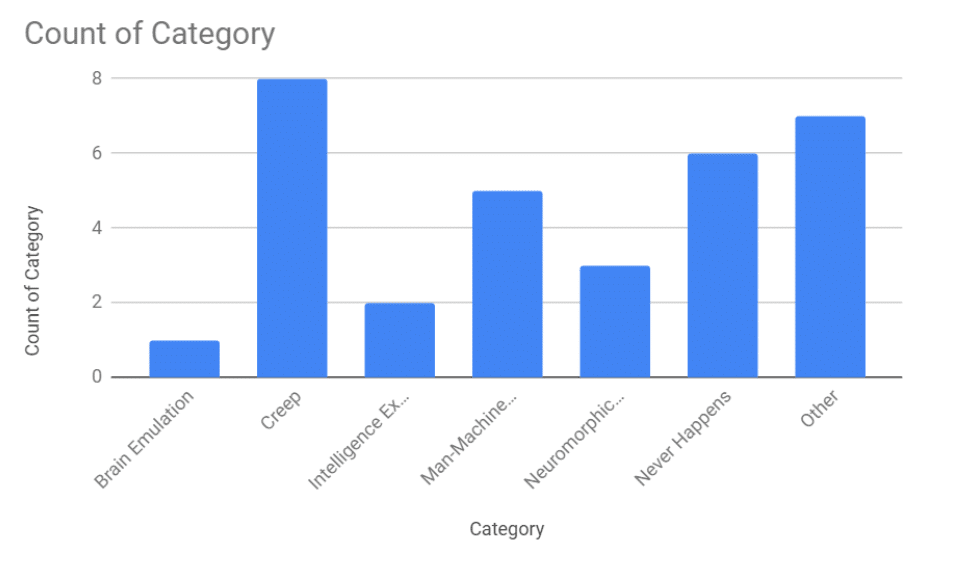

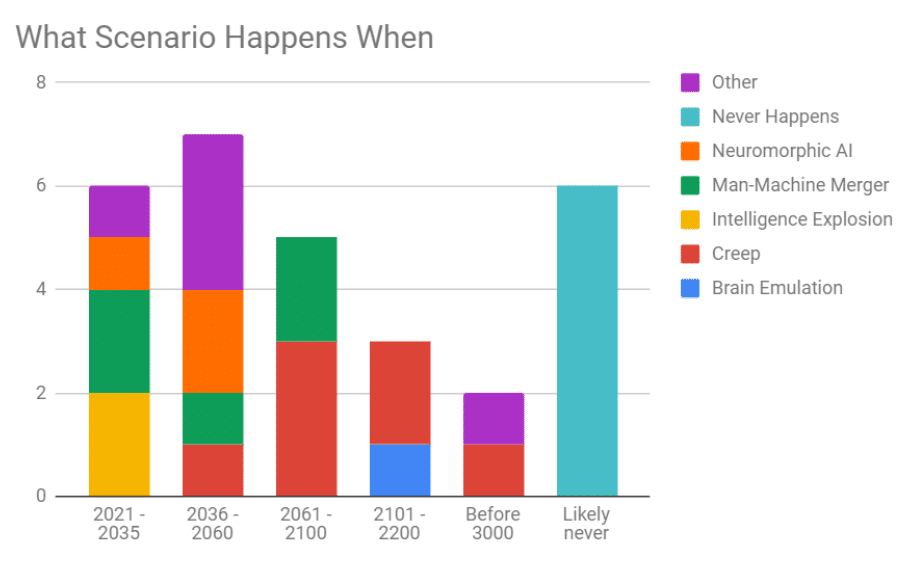

First, what timeframes did respondents give for the different singularity scenarios? We took the broader scenario categories into which we had sorted our respondents’ answers and organized them by the timeframe each respondent gave for when the singularity would occur.

The graph above seems to suggest the following:

- Intelligence creep scenarios project the singularity will happen in the medium-to-long term future, with significant deviations from the aggregate data.

- Man-machine merger scenarios project the singularity will happen in the near-to-medium term future, likely depending on how soon advances in biotech arrive.

- Neuromorphic AI scenarios project the singularity to happen in the near-term future, likely because engineers already have a working example, the human brain, to model.

- Brain emulations, which received only one response, project the singularity to happen in the 23rd century or beyond.

- Intelligence explosion scenarios project the singularity to happen between 2021 and 2035, likely due to the accelerating growth of AI capabilities.

To add more color to these categories, the sections below explore representative quotes from our respondents.

Intelligence Creep

Eight of our experts believed that the singularity would occur gradually over a long period of time.

Rather than some large and sudden change in technology, this group of experts believes in a relatively gradual increase in machine intelligence. Answers within an intelligence creep scenario often refer to gradual automation and piecemeal improvements towards general intelligence.

The ideas that AI would become commonplace in all areas of life and that it would take over all tasks received 15% and 4% of total responses, respectively.

Hardly anybody noticed when phone switch operators were replaced by automated switches, or when directory assistance people were replaced by speech recognition. The singularity… has already started – Andras Kornai

We can see some of this today, as AI is slowly being rolled out in everything from heavy industry to agriculture. More and more tasks will be handed off as new AI tools are developed and their capabilities increase, which may eventually bring about a fundamental change in the human condition.

AI is so powerful that it becomes an essential part of any human activities – Alex Lu

This seems like the least radical idea, and the closest to the status quo. Even so, its societal impact would be radical. It would fundamentally change the way the economy works, as market forces would make any company without an edge in AI obsolete.

This trend would be even more impactful in our professional life, where companies not relying on AI will quickly disappear. All this will have tremendous implications from the social point of view, and we will have to redesign whole paradigms such [as] our understanding of work-life balance. – Miguel Martinez

Intelligence could also creep up on us through gradual improvements in our hardware and software, until we develop a machine that is significantly smarter than a human in all domains. This idea of steadily increasing machine intelligence received 8% of total responses.

I don’t believe in a sudden singularity event that occurs in a matter of days or weeks (such as some unintended AI “waking up” and taking over the world). But I do believe the capacity, generality, and complexity of AI will steadily increase beyond human levels. – Keith Wiley

AI developing this way would be roughly analogous to the way computers have steadily improved over the years. Personal computers in the 1970s and 80s had only a few kilobytes of memory or storage, whereas today’s personal computers have gigabytes of memory and often a terabyte of storage.

But, as with the steady improvement of ordinary computers, it would take decades to reach this level of intelligence.

It seems more likely that such a level of intelligence in machines would come about just as any other major technological advance: on the back of many more incremental steps. And while some recent advances are certainly large moves forward given our current capabilities, in the context of general AI they remain incremental steps—there is a very long way left to go. – Sean Holden

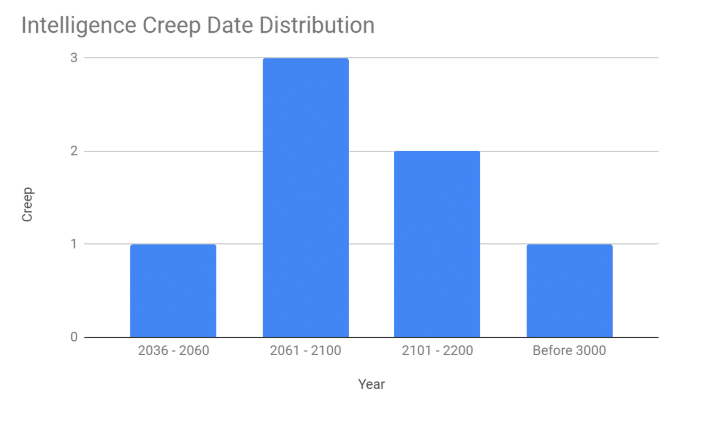

For the intelligence creep scenario, our experts believe the singularity would take far longer than in the other scenarios. No respondent in this group believed we would reach the singularity by 2035, and only one thought it would happen between 2036 and 2060.

The chart above shows the distribution of when intelligence creep might result in the singularity.

This is the opposite of the aggregate responses, in which the 2021-2060 range received 45% of total responses. The intelligence creep scenario falls on the tail end of the distribution, accounting for all of the 2101-2200 responses and one of the ‘Before 3000’ responses.

This makes sense, because the idea behind this scenario is that we reach the singularity through incremental steps. Artificial intelligence will slowly envelop all aspects of society, automating away huge swaths of labor and becoming integral to societal functions such as dating and entertainment.

Unless someone stumbles upon a highly capable general intelligence, it’s possible that AI tools and processes will only slowly be developed and integrated into the world. Steadily improving these tools and developing a general intelligence will likely take time.

Neuromorphic AI

“Good artists copy; great artists steal.” Picasso’s quote holds just as true for people in computer science as in the arts.

Why create an intelligence from scratch when evolution has already produced one of the most complex and powerful intelligences in the known universe, the human brain?

Some of our respondents have suggested that the path to the singularity is to reverse engineer the brain to develop new forms of hardware and software.

A shift in AI development towards more human-like cognitive architectures—away from current big data, machine learning approaches—will allow us to develop programs that can understand and improve their own design. – Peter Voss

Some of today’s computer chip designs already use the brain as a model. Conventional chips are very good at shuffling numbers around to perform complex calculations, but emerging neuromorphic chips are considerably better suited to machine learning and deep learning tasks, such as object recognition.

Great artists, however, don’t copy; they steal. AI will not look like the brain or be bound by its limitations. Airplanes neither look nor fly like birds, yet we have exceeded the speed, height, and distance of any bird. It is theoretically possible that humanity (or cognitively enhanced humans) could similarly improve upon the brain’s structures to make AIs even more intelligent.

Real neuromorphic computing. When we can build chips or devices that can mimic the function of the human brain. Slowly we will reverse engineer each part and rebuild the individual functions to be 100X more powerful. – Charles H. Martin

While many are waiting for, and worried about, human-level general intelligence, the iterations of AI leading up to it could have a vast impact on our world. An intelligence with only the raw power of a crow’s brain, but designed specifically for something like engineering or science, could exceed human capabilities in that domain and fundamentally change society.

Professor Roman Yampolskiy similarly suggests that the path to the singularity is through building a neural network the size of the human brain:

A superintelligent system will most likely result from scaling of deep neural networks to the size of the human brain.

We presume that Yampolskiy isn’t talking about physical size, but rather the total number of connections between neurons in the human brain (roughly 100 trillion).

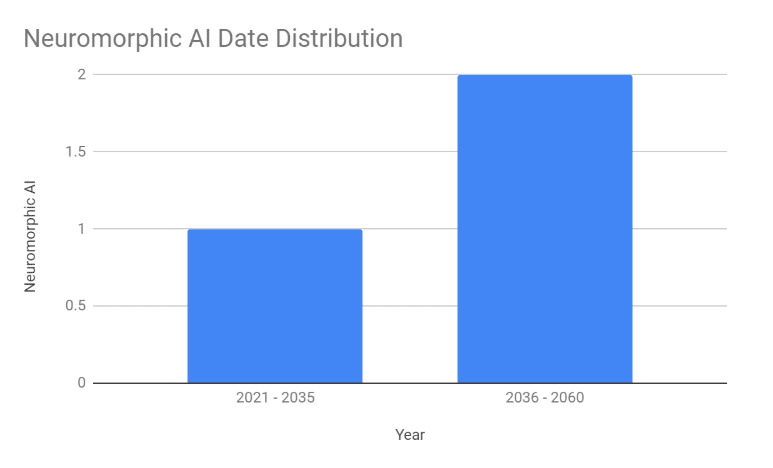

For the neuromorphic scenario, our respondents believed the singularity would happen in the near-term future. Of the three respondents for this scenario, one believed it would happen between 2021 and 2035, and the other two between 2036 and 2060, as the distribution above reflects.

The dates chosen for the neuromorphic scenario are likely sooner because engineers already have the human brain to work with and model. One respondent noted that neuromorphic designs are key to an AI being able to understand and improve itself. Neuromorphic computer chips are already expected to bring large improvements in AI capabilities, so further work using the brain as inspiration seems likely.

Machine-Human Merger

This was our most popular individual scenario, with 19% of our respondents thinking that we would reach the singularity by integrating biological and technological intelligence.

The scenario will likely involve biological human augmentation and direct integration with technology, driven by AI at multiple levels… Without transcending limits such as cognitive capacity and sensory bandwidth it will be impossible for us to actively guide or even understand the course of events at the level of complexity this scenario presents… Fast, high-bandwidth interaction between cognitively augmented humans and autonomous AI’s is likely to be a key characteristic of the singularity. – Helgi Páll Helgason

Many have traditionally thought of human augmentation as science fiction, but the technology is already being developed. The latest advances in biotechnology have pushed us past cochlear implants and pacemakers to mind-controlled robotic exoskeletons and more. Elon Musk’s Neuralink and even Facebook have entered the neurotech business in an effort to create brain-to-machine communication interfaces.

Due to the continuing integration of technology and humans the distinction between the virtual and the real world will fade away. Artificial Intelligence and ‘natural’ intelligence will also be integrated both enhancing humans and make them part of the Intelligence Cloud. Once these boundaries are gone we have entered the singularity. – Tijn van der Zant

But why would humans and machines need to be connected for the singularity to occur?

Transhumans that integrate computational hardware as an artificial cognitive faculty provide the ability to create abstractions and formulate problems that are solved by a range of artificial intelligence approaches. – Pieter J. Mosterman

Creating a general intelligence that not only works but also understands human values and intentions will likely be very difficult. So some of our experts think it would be easier, and probably safer for us, if humans were merged with AI. This would augment both human and artificial intelligence, extending the reach and scope of our capabilities.

Lots of data will be processed in [real time] from many IoT devices (cars, homes, phones, streets, air vehicles), connected to people’s brains via cognitive prostheses, then connected via blockchain-type processes to become a cognitive brain(s) available for use by everyone. – Eyal Amir

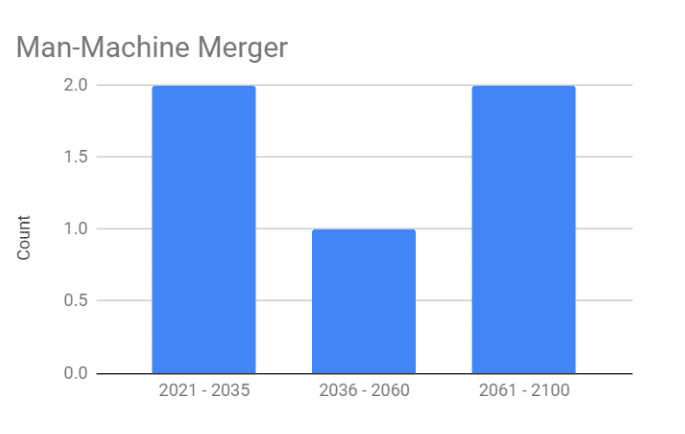

For the man-machine merger scenario, our respondents seemed to believe it would come in the near-to-long-term future. Of the five responses we received for this scenario, two placed it between 2021 and 2035, one between 2036 and 2060, and the remaining two between 2061 and 2100.

The two humps in the distribution likely reflect whether our respondents believe advanced biotechnology will come sooner rather than later. If we make large advances in biotechnology in the near future, integrating human and artificial minds might lead to the singularity in a relatively short time. However, if human-machine interfaces or other human-augmentation technologies like nanotech end up being a bottleneck, the singularity might not be possible until the end of the century.

Brain Emulations

One respondent had a unique proposal for what the singularity would look like. Instead of biological humans merging with machines, or AIs inspired by the architecture of the brain, what if human brains were uploaded and emulated on a computer?

George Mason University professor Robin Hanson, author of The Age of Em, suggests that the singularity would occur in the far future once we have the ability to deconstruct the human brain, scan every neuron, and then recreate it and run it on a computer.

‘Ems,’ or brain emulations, i.e., cell-by-cell models with the same signal input-output behavior of a particular human brain. – Robin Hanson

These AIs would not be like the classic ‘alien-like computer minds’ often depicted by other experts. Rather, they would essentially be real people (direct models of an individual human brain) running on a digital substrate, able to operate much faster than biological brains, which are limited by their wetware.

Intelligence Explosion

What if you could build a machine that built a better machine? That machine would then build an even better one, which would build a still more powerful one, and so on. This is the idea behind an intelligence explosion, which was suggested by 8% of respondents.

The most likely singularity scenario is via creation of artificial programmers, who would be able to work on improving artificial programmers and more. This is the most standard scenario described by I.J.Good and Vernor Vinge. – Michael Bukatin

How fast would this takeoff be? Nobody really knows, but it has been debated extensively. Some, including Robin Hanson, believe a slow takeoff lasting years or even decades is more likely. Others think a fast takeoff, unfolding over months, days, or even minutes, is possible.
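How much the speed of takeoff depends on the strength of the self-improvement feedback loop can be illustrated with a toy model. The sketch below is our own illustration, not a model proposed by any respondent, by I. J. Good, or by Vernor Vinge: the function `generations_to_threshold`, its growth rule, and every parameter value are hypothetical. Each generation multiplies a capability score by `1 + gain * capability**feedback`, so the `feedback` exponent decides whether more capable designers improve their successors faster (a fast takeoff), at a fixed rate, or with diminishing returns (a slow takeoff).

```python
def generations_to_threshold(start, gain, feedback, threshold, max_gens=10_000):
    """Count design generations until a toy self-improving system reaches
    `threshold`; return None if it doesn't get there within `max_gens`.

    Hypothetical growth rule: each generation multiplies capability by
    1 + gain * capability**feedback.
      feedback > 0  -> more capable systems improve faster (accelerating)
      feedback == 0 -> fixed growth rate per generation (plain compounding)
      feedback < 0  -> diminishing returns (each improvement is harder)
    """
    capability = start
    for generation in range(1, max_gens + 1):
        capability *= 1 + gain * capability ** feedback
        if capability >= threshold:
            return generation
    return None


if __name__ == "__main__":
    # Same starting point and base gain; only the feedback strength differs.
    for feedback in (-1.0, 0.0, 1.0):
        gens = generations_to_threshold(1.0, 0.1, feedback, 100.0)
        print(f"feedback={feedback:+.1f}: {gens} generations to a 100x gain")
```

With these made-up numbers, the constant-rate run reaches a 100-fold gain in 49 generations, the accelerating run in 14, and the diminishing-returns run needs roughly 990. The toy says nothing about which regime is realistic; that is exactly what the takeoff debate is about.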

Is it possible for a machine to become incredibly intelligent through self-improvement? Nobody knows whether this particular scenario will happen, but many experts cite suggestive examples. AlphaGo, the AI that beat a world champion at Go in a match heralded as a milestone in computing, was followed by even more powerful versions.

Later, AlphaGo Zero was developed. AlphaGo Zero didn’t learn from human games as AlphaGo did. Instead, it taught itself the game by playing against itself. Not only did it beat the original AlphaGo 100-0, but it overturned centuries of collective human wisdom on how to play the game. It did all of this with less power consumption and less training time than the original AlphaGo.

It isn’t clear whether the same kind of leaps and bounds will be made outside of the relatively limited world of games – but many of our respondents suspect that this might be possible.

[The singularity] might be caused by a generally intelligent, self-optimizing system… that maximizes its competitive fitness. There are numerous utility functions that are congruent with this, directly or indirectly. – Joscha Bach

The brain emulation and intelligence explosion scenarios received the fewest responses, with one and two respectively. According to these results, the brain emulation scenario wouldn’t happen until after the 22nd century, while our respondents believe an intelligence explosion could happen by 2035.

Brain emulations could take a very long time because of the technology they require: scanners that could deconstruct and scan the brain neuron by neuron, and computers that could house and run the emulations, would have to be developed. For the intelligence explosion, however, respondents believe it could happen soon, likely because exponential progress could compound gains very quickly.

The Singularity Never Happens

The last option is that the singularity just never happens. This was also a popular choice for many of our respondents, who believed for one reason or another that the singularity was unlikely.

Oh come on; the singularity is nonsense – Roger Schank

Some of our respondents dismissed the singularity for a variety of reasons. The first is that computer intelligence will not displace the role of human intelligence. They believe economic incentives will push AI into areas that are complementary to human intelligence, rather than substituting for it.

In the same way, the Industrial Revolution created new machines that, while destroying many roles, created new ones for humans in production and society. On this view, the singularity would never occur, because humans would maintain supremacy in general intelligence.

Singularity will never happen. Machines will get more and more intelligent but in the direction orthogonal to that of humans. We will remain [irreplaceable], forever – Noel Sharkey

The other objection was that some considered the singularity an unscientific concept, with no hard evidence suggesting it could happen.

There is absolutely no evidence for the singularity. As scientists, we cannot say never but it seems highly unlikely. You could argue from beliefs about continuations of the speed of technological progress. But you could also argue that there are teacups and saucers orbiting the sun in a distant galaxy. – Riza Berkan

Header Image Credit: Heise Advocacy Group