1 – Google’s Visual AI “Inception”

In the middle of last month, Google published an article on the Google Research blog called “Inceptionism: Going Deeper in Neural Networks.” Frankly, the title didn’t seem all that appealing, and it’s unlikely that it would have jumped out as anything other than science jargon, even to people subscribed to the RSS feed of Google’s blog.

By this week, however, this “Inception” (or “Dreamtime”) has gathered a flurry of attention as one of the most interesting (and in some ways creepy or humorous) visual projects undertaken by AI.

As Google explains in their blandly titled blog post:

“We train an artificial neural network by showing it millions of training examples and gradually adjusting the network parameters until it gives the classifications we want. The network typically consists of 10-30 stacked layers of artificial neurons. Each image is fed into the input layer, which then talks to the next layer, until eventually the ‘output’ layer is reached. The network’s ‘answer’ comes from this final output layer.”
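To make that description a little more concrete, here is a minimal sketch of the “stacked layers” idea in plain Python. It is my own toy illustration, not Google’s code: the layer sizes, the random untrained weights, the ReLU and softmax choices, and the names `make_layer` and `forward` are all assumptions made for the example. It only shows an input passing from layer to layer until the output layer gives its “answer”; the training step that adjusts the parameters is left out.

```python
import math
import random


def make_layer(n_in, n_out, rng):
    """Build one fully connected layer with small random (untrained) weights."""
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases


def softmax(values):
    """Turn raw output scores into probabilities that sum to 1."""
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def forward(layers, inputs):
    """Feed the input through each layer in turn; the last layer is the output."""
    activations = inputs
    for index, (weights, biases) in enumerate(layers):
        scores = [
            sum(w * a for w, a in zip(row, activations)) + b
            for row, b in zip(weights, biases)
        ]
        if index == len(layers) - 1:
            activations = softmax(scores)  # output layer: class probabilities
        else:
            activations = [max(0.0, s) for s in scores]  # hidden layer: ReLU
    return activations


rng = random.Random(0)
sizes = [8, 6, 6, 3]  # toy sizes: 8 "pixels" in, 3 classes out (assumed)
layers = [make_layer(a, b, rng) for a, b in zip(sizes, sizes[1:])]

pixels = [rng.random() for _ in range(sizes[0])]  # stand-in for an image
probs = forward(layers, pixels)
answer = max(range(len(probs)), key=probs.__getitem__)  # the network's "answer"
print(probs, answer)
```

A real image classifier has far more neurons per layer and weights learned from millions of examples, but the flow of information from input layer to output layer is the same.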

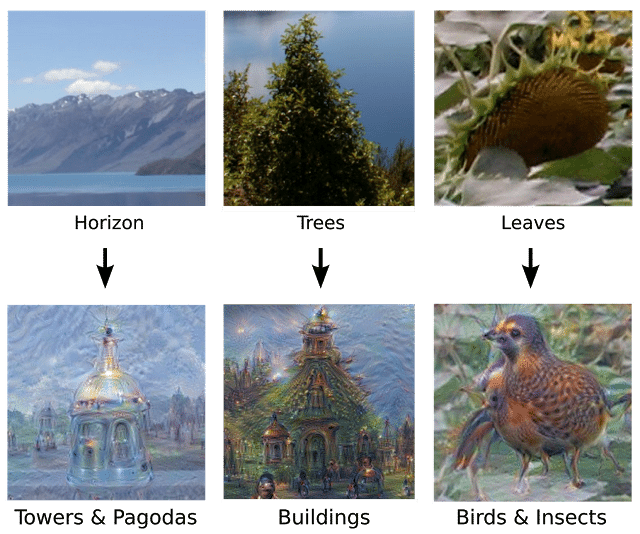

The result? Some of the most psychedelic art the world has ever seen, created entirely by neural networks aiming to make sense of otherwise “normal” images. Here are a few interesting samples from the original article:

The images make it evident that the AI is aiming to make sense of the images it “sees” based on the shapes and forms it’s familiar with, and the objects that it’s used to seeking out in a given image… but the free association of the machine extends radically to a kind of creative interpretation that varies from fascinating to revolting (see a collection of additional “inception” images from Reddit here).

This tweet from MIT Technology Review’s Will Knight showed up in my feed yesterday, linking to a new subreddit dedicated entirely to these mesmerizing, psychedelic “inception” images:

New sub-reddit http://t.co/fLGRLLzmDX is the best place on the internet right now.

— Will Knight (@willknight) July 7, 2015

While machine learning and machine vision might still be considered to be in their infancy, this inception experiment certainly makes the developments (and possibilities) of AI more visceral. And fascinating.

2 – The Washington Post Covers AI Safety

Elon Musk’s 7-figure investment in the Future of Life Institute and the concerned words from other big names (like Bill Gates and Stephen Hawking) have been gaining ground across mainstream media. For those of us who have been reading Nick Bostrom since before he was “cool” (our Emerj interview with Bostrom can be found here), the words of concern bring more attention than substance – but attention is what I believe this topic needs.

Recent films like Transcendence, Her and Ex Machina are placing some of the ethical considerations of AI in the public eye, but film doesn’t seem to have prompted serious ethical concern in the general educated public. While recent AI films have brought the concept of AI to light, the comments of celebrities and tech influencers may be more likely to provoke serious thought (not just sci-fi thrills) from average citizens.

This week, the Washington Post published a blog post chronicling their pick of the “best” ideas for preventing artificial intelligence from wrecking the planet (which you can read here).

What’s interesting about this article is that a credible and mainstream publication like the Washington Post is taking the time to highlight otherwise little-known AI researchers who are aiming to crack the ethical nuts related to AI’s development – experts like Heather Roff Perkins (University of Denver), Manuela Veloso (Carnegie Mellon), and Nick Bostrom (Oxford). It’s my belief that if this more esoteric, boots-on-the-ground work can “become interesting,” and can make it into the general tech conversation, we’re likely to be moving closer to taking AI’s ethical considerations from sci-fi excitement or unfounded uneasiness to real consideration and action. One can only hope.