Over the last two years there has been a general “up-tick” in media attention around the risks of artificial general intelligence, and it seems safe to say that though Bill Gates, Stephen Hawking, and many others have publicly articulated their fears, no one has moved the media needle more than Elon Musk.

When I set out to gather perspectives from businesspersons on AI risk, I aimed to sift through the “whiz-bang” re-blogged articles about Musk’s statements and figure out what the man actually said about the matter… and as it turns out, that was rather difficult. Due to the possibly sensational and novel claims (combined with Musk’s growing celebrity attention), most of the articles about “what Musk said” are in fact not about “what Musk said,” but about what some reporter said about what a reporter said about what Musk said.

So I set out to find every actual statement from Musk himself on the topic of AI risk, and lay them out for others to reference. My goal (as a writer) is to have this catalogue for myself, but I’m sure there are other folks who’d want direct quotes as well.

In addition, I’d like to use this article as a jump-off point to other useful sources (authors, research, existing academic discussion) of AI risk debate, including some resources that Musk himself is undoubtedly familiar with.

Bear in mind, I’ll likely continue to update this list, and it is by no means complete yet (if you’ve found other statements from Musk, please feel free to email me here at dan [at] Emerj.com). Here’s the homework I’ve done thus far, chronologically, from the top:

April 12, 2014 – Inc. Magazine Chat with Steve Jurvetson

(Hop along to 4:15 in the video above to see the first mention of AI and jesting about “Terminator”)

This video by Inc. Magazine is the first video that I’ve been able to find of Musk actually articulating his fears about AI risk. Many people believe that the first video was from his talk at MIT around six months later (see below), but this video makes clear mention of some of the same notions, if not with the same seriousness or shock value.

Steve Jurvetson (of DFJ fame) actually mentions that the SpaceX data center bears the name “Cyberdyne Systems” (the fictitious company that created “Skynet” in the Terminator movie series). As it turns out, this is true. Here’s a photo from Jurvetson’s Flickr account:

Whoa.

Apparently, that’s the real deal. Interesting. It seems obvious that this is a bit playful, but it’s curious, especially given Musk’s overtly stated concerns about AI running amok.

I would wager that most people are unaware of this video, or of Musk’s playful naming of his SpaceX server cluster, so I figured it was worth mentioning. If you can find an earlier video of Elon stating his concerns about AI, please do let me know; this is about as early as I could find.

June 17, 2014 – CNBC’s “Disruptor Number 1” Interview

The video above was taken from this interview on CNBC (original was not embeddable), and it includes Musk articulating why he had invested in AI companies DeepMind and Vicarious – to keep an eye on what’s going on in AI.

When CNBC’s hosts express surprise at Musk’s statement about AI being “dangerous,” he casually mentions that there have been movies about dangerous AI, and references Terminator (in partial jest).

In the interview he responds with “I don’t know” to questions such as “What can we do about this?” and “What should AI really be used for?” Frankly, I don’t think I’d have a better response; those questions are big ones.

I don’t know… but there are some scary outcomes… and we should try to make sure that the outcomes are good and not bad.

These comments did not make much of a splash in the media, and admittedly, they were more of a glossing-over of some potential concerns.

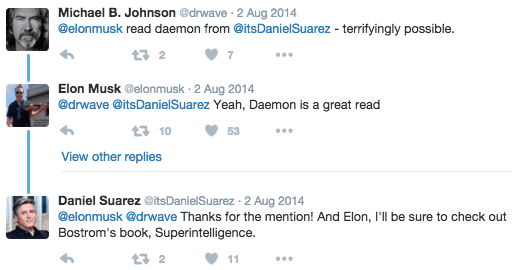

August 2-3, 2014 – Twitter Mention of Bostrom’s “Superintelligence”, and Biological Boot Loaders

Worth reading Superintelligence by Bostrom. We need to be super careful with AI. Potentially more dangerous than nukes.

— Elon Musk (@elonmusk) August 3, 2014

As far as I can tell, this is Musk’s first “from the horse’s mouth” statement about artificial intelligence and risk. He overtly mentions Prof. Nick Bostrom’s Superintelligence in his tweet, which seems likely to have been a force in helping the book sell as well as it has.

Nick Bostrom was interviewed here on Emerj about the same time as this tweet, interestingly enough, though his interview didn’t go live until September 24th of that year.

The tweet itself didn’t generate a tremendous amount of noise in the AI space, at least initially, but seemed to build steam with the comments made at MIT in October 2014 (see below).

Hope we're not just the biological boot loader for digital superintelligence. Unfortunately, that is increasingly probable

— Elon Musk (@elonmusk) August 3, 2014

Above is Musk’s second tweet. If you’re unaware of the term “bootloader,” you can find an adequate definition here.

CBS and other media sources picked up these tweets on Aug 4th (and in some of the weeks following).

October 24, 2014 – MIT’s AeroAstro 1914-2014 Centennial Symposium

(Fast forward to about 1 hour into this video to see Musk’s comments about AI)

The first Musk-AI press-bomb was during his MIT AeroAstro talk on October 24th, 2014. The Washington Post (and other publishers) actually covered Musk’s AI comments before this video (embedded above) even went live. However, I wanted to link to the actual MIT video (accuracy is kind of our shtick around here, as much as we can handle it).

This is the interview that spawned all of the “Elon Musk is calling AI a demon” statements. Curiously enough, the actual source video from MIT (embedded above) has vastly fewer views than many of the articles written about the video. Not surprisingly, VICE is better at marketing than MIT AeroAstro, for which neither can be blamed.

Here’s the statement from Musk that received the most attention:

With artificial intelligence we are summoning the demon. You know all those stories where there’s the guy with the pentagram and the holy water and he’s like… (wink) yeah he’s sure he can control the demon… doesn’t work out.

Musk didn’t in fact liken AI to a “demon” by way of an explicit analogy, but rather equated the two directly, which made the statement easy to sensationalize, though it seems rather likely that a metaphor was implied.

Here’s the statement that I believe might be more insightful as to his general sentiment towards AI risk:

I’m inclined to think that there should be some regulatory oversight… at the national and international level… just to make sure that we don’t do something very foolish.

You can watch the video above and come to your own conclusions.

Interestingly enough, the “demon” analogy might have been spurred by Musk’s reading of Daniel Suarez’s “Daemon” novel. Directly under his August 2nd tweet, we see this brief mention of said book, including a comment from Suarez himself:

October 6, 2014 – Vanity Fair’s “New Establishment Summit” Interview

The above video was posted October 8th, 2014, but the actual conversation was held on October 6th.

This video is fruitful because Musk makes a few short statements that I believe turn out to be useful jump-off points into the existing and ardent (albeit niche) conversation about AI risk and control. We’ll start with this quote:

Most people don’t understand just how quickly machine intelligence is advancing, it’s much faster than almost anyone realized, even within Silicon Valley… (When asked “Why is that dangerous?”): If there is a superintelligence… particularly if it is engaged in recursive self-improvement…

This brings us to the debate around what is playfully (or not so playfully?) referred to as the “AI foom”: the point at which AI can improve itself so rapidly that it gains capacity and intelligence at an exponential rate. This debate was made popular via a discussion between MIRI founder Eliezer Yudkowsky and Dr. Robin Hanson of George Mason University.

My interview with Dr. Hanson in early 2013 touched briefly on topics of AI risk, but the most robust record of the “AI foom” debate can be found on MIRI’s website here.

Another quote from Vanity Fair:

If there is a superintelligence whose utility function is something that’s detrimental to humanity, then it will have a very bad effect… it could be something like getting rid of spam email… well the best way to get rid of spam is to get rid of… humans.

In 2003, Nick Bostrom articulated a hypothetical scenario where a super-powerful AI is programmed with the goal of making as many paperclips as possible.

It is supposed, in Bostrom’s example (which you can read on his personal website here), that such a superintelligence would not necessarily know any kind of rational limit on the number of paperclips to make, and so may desire to use all available resources on earth (and eventually the universe) to make paperclips.

At some point, the AI will have a physical method of obtaining its own resources, and will not need humans to bring its raw materials… and so it will be motivated (or so the example goes) to exterminate humans… if nothing else, just so that it can use the atoms in human beings to create more paperclips.

January 15, 2015 – Interview with the Future of Life Institute, and the AI Open Letter

The Future of Life Institute (which bears a rather similar name to the Future of Humanity Institute at Oxford… and I imagine has been the basis for some inter-academic grumblings) proudly announced Musk’s donation of $10M to fund projects related to AI safety.

One of the funded projects from Elon’s donation pool was awarded to Wendell Wallach, a past interviewee here on the Emerj podcast.

Those interested in more of Musk’s conversations with Max Tegmark (one of FLI’s founders) might enjoy this video as well.

Around this same time, FLI’s AI Open Letter was released, with Musk as one of its first signatories.

This event garnered oodles of press, some of it quite critical of the validity of AI fears.

January and February 2015 – Tweets Referring to WaitbutWhy’s AGI Article

Good primer on the exponential advancement of technology, particularly AI https://t.co/1c30ZwJ8Y5

— Elon Musk (@elonmusk) January 23, 2015

Excellent and funny intro article about Artificial Superintelligence! Highly recommend reading https://t.co/jisxabYrl9

— Elon Musk (@elonmusk) February 26, 2015

Musk mentions WaitbutWhy’s AGI article two times (those lucky sons of…). The article (which you can read by clicking the link in the tweets) is a long one, but for people who haven’t read Bostrom and Kurzweil regularly, it’s a good overview of some of the major risk considerations of AI. Plus it’s awfully humorous.

March 29, 2015 – Boao Forum, Conversation with Robin Li and Bill Gates

(Zip ahead to about 17 minutes into the video above to hear the conversation turn to the topic of AI)

In his discussion with Robin Li, Musk again articulates his relatively firm supposition about a “hard take-off” for AI, with its capacities zipping vastly beyond human-level general intelligence right around the time that it might attain human-level general intelligence. This fear is not mirrored by many AI researchers, but it is a fear that many respected thinkers have considered.

Ben Goertzel (AGI researcher and OpenCog founder) has written about the concept of “hard takeoff,” which might be of interest to anyone aiming to get a better understanding about the speed of AI’s increasing capacities in the future. You can listen to Ben’s entire interview about AI acceleration and risk in this article based on his Emerj podcast interview. I’d also encourage anyone interested in the subject to explore more of Ben’s blog.

Musk aims to articulate his concerns about controlling AI in the following statement to Baidu CEO Robin Li:

(Considering nuclear research / weaponry) …releasing energy is easy; containing that energy safely is very difficult. And so I think the right emphasis on AI research is on AI safety. We should put vastly more effort into AI safety than we should into advancing AI in the first place.

Musk re-iterates that his stance is not against AI research in another statement to Li:

So I’m not against the advancement of AI – I want to be really clear about this. But I do think that we should be extremely careful.

Some folks (notably AGI researchers) consider Musk’s statements to be unnecessarily stifling for AI, and others see the statements as fear-hype that will be more dangerous to AI’s development than AI ever would be to humans in the near future.

With all the AI software developers he’s recruiting, it seems clear that he isn’t entirely against AI itself – though skeptics may believe that he wants to hog all the AI smarts to himself and slow everyone else down. I don’t know who’s right, but all of these opinions are out there.

July 27, 2015 – Tweet about Autonomous Weapons Open Letter

If you’re against a military AI arms race, please sign this open letter:https://t.co/yyF9rcm9jz

— Elon Musk (@elonmusk) July 28, 2015

FLI’s Autonomous Weapons Open Letter made another reasonable media splash, and Musk bumped the link out to his followers.

September 10, 2015 – CNN Money Interview

In this brief clip, Musk sums up a few important notions about what kind of “AI” might prove itself to be a legitimate risk. This tends to de-bunk a lot of the “Elon fears the Terminator” hype that has stuck itself on blogs all over the net (this is the kind of click-happy material that appeals to the broad market of online readers, but skews the point being discussed).

Though it seems that many AI researchers disagree with his fears (some do agree, too), he isn’t articulating any kind of fear of terminators. Here’s a quote:

…the pace of (AI) progress is faster than people realize. It would be fairly obvious if you saw a robot walking around talking and behaving like a person, you’d be like ‘Whoa… that’s like… what’s that?’… that would be really obvious. What’s not obvious is a huge server bank in a dark vault somewhere with an intelligence that’s potentially vastly greater than what a human mind can do. Its eyes and ears would be everywhere, every camera, every microphone, and device that’s network accessible.

October 2015 – Interview (alongside Sam Altman) for Vanity Fair

Most of this joint interview covers future-oriented topics and views outside of AI, but there are a couple of notable points. In the beginning of the interview, when asked in what area he’d start a new company (Tesla and SpaceX being “out of the picture”), Musk mentions AI as one of three options (about 2:14 in conversation). “It’s one of those things where if you don’t do something, maybe not doing something is worse,” Musk states, noting that AI and genetics (another option) are fraught with issues but most likely to change the destiny of humanity.

At about 13:02 in the interview, Musk divulges what he views as the two most compelling reasons to establish civilization on Mars and become a multi-planetary species. One of those, though not a direct statement on AI, is certainly related to sentience in general: “to ensure that the light of consciousness as we know it is not extinguished or lasts much longer.”

An audience member (at 37:27 in the interview) asks Musk and Altman to share their positive vision of AI for the future. Altman speaks first about the ultra-positive and ultra-negative camps, neither of whose predictions he expects to be fully realized, and instead leans toward the evolution of a symbiotic relationship between humans and AI as a realistically happy medium.

Musk agrees with Altman, adding that there is “effectively already a human machine collective symbiote”, referencing current society as a giant cyborg. He makes clear his “affinity for the human portion of that cyborg” and thinks we (corporations and policy makers pulling the strings) should ensure that AI is optimally beneficial for humans—a notion echoed throughout many of Musk’s past statements and interviews.

October 7, 2015 – Interview with DFJ General Partner Steve Jurvetson at Stanford

Like the Vanity Fair interview, which was conducted around the same time, this Stanford-based discussion mostly touches on future-oriented topics other than AI, but Musk makes a few references to it. Around 8:42 in the conversation, he recalls identifying AI during college as one of the primary areas that would influence humanity in a grand way (the others being the internet, sustainable energy, becoming a multi-planetary species, and genetics). Musk describes AI (and genetics) as a double-edged sword, i.e. one never really knows whether one is working on the right edge at the right point in time.

At 29:43 into the conversation, on the topic of looking 20 years into the future, Musk says he believes AI will (not surprisingly) be incredibly sophisticated. He references and describes a recursive y axis theory of AI acceleration (i.e. if you find that predictions are, in any given year, going further out or coming closer in, that’s one way to think of acceleration) and gives the example of autonomous driving, noting that his own predicted timelines for the technology have shortened over the last decade, arriving sooner than he originally predicted. Musk also states his belief that a brain-computer interface, alongside accelerating AI, may be the only chance we have at communicating with a potentially far greater intelligence, a possibility two decades from now.

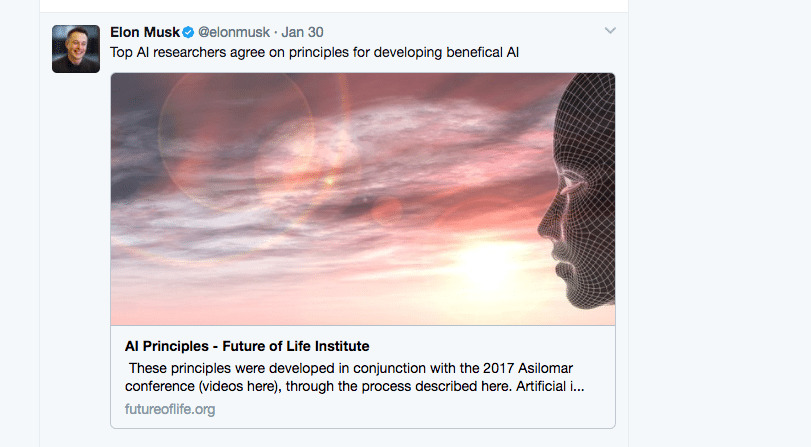

January 30, 2017 – Tweet Referencing Future of Life Institute’s AI Principles

Elon Musk promotes the AI principles set forth by the Future of Life Institute, to date signed by 1,197 AI researchers (including DeepMind’s Demis Hassabis) and 2,320 “others”—Elon Musk is a signatory.

February 3, 2017 – Musk Speaks at World Government Summit 2017 Dubai

In Musk’s most recent sit-down discussion at the 2017 World Government Summit in Dubai, he broaches the topic of the potential utility and dangers of AI on multiple occasions. At about 8:36 in the conversation, in giving his hoped-for vision of the future in 50 years, Musk (among other things) mentions autonomy in AI, specifically autonomous vehicles, though he notes the latter is much more near-term, i.e. within the next 10 years. He goes on to make the distinction between narrow and general AI, commenting that there’s potential for general AI to be both humanity’s greatest helper and its greatest threat.

About 20:00 into the video, Musk is asked to give three points of advice for the 139 government representatives present. His first point of advice:

“Pay close attention to the development of AI, we need to be very careful in how we adopt AI and make sure that researchers don’t get carried away.”

At 25:11, Musk also discusses the possibility of biological beings merging with machine intelligence. Far beyond the point of present-day “digitally-augmented cyborgs”, he states that there will likely be the need for a much faster, direct human-computer interface in order to keep up communications with much more advanced forms of AI.

Wrapping Up

Musk’s claims about AI’s near-term risk may be overblown, but they may not be. Given my own assessment in a recent poll of 33 AI researchers (which may be biased, as many of our guests have at least a marginal interest in AGI to begin with), essentially all of what Musk has said seems reasonable when couched in the existing rational debates on the topic.

What I think is counter-productive is both the media hype around “Terminators” and the back-lash from some of the AGI community likening Musk to an evil Luddite (I’ve written about this “politicking” previously). Though we could easily imagine that Musk has some potential agenda at play with respect to his articulated AI fears (possibly increasing regulation on Google and Facebook in order to gain greater prestige and wealth than other tech giants?… maybe?… somewhat unlikely), it seems that on the aggregate he’s up to speed with many pretty reasonable AGI thinkers.

The point of this post, however, wasn’t to give my perspective, but to show Musk’s actual statements and let you make your own call.

In addition to updating me with important quotes that I missed (which can be done via email at dan [at] Emerj.com), feel free to leave your own comments below this article.

All the best,

– Dan

This article has been updated as of April 28, 2017 to reflect new press and media content.