In the ongoing “pop culture” debate over whether the pursuit of AI will result in Terminators that destroy humanity, more informed and nuanced discussions are taking place in academic and business circles about the consequences and implications of continued advances in AI. A surprising number of legitimate AI researchers believe that many of us will live to see “conscious” artificial intelligence.

But what are the ramifications of replicating awareness, and not just intelligence, in our machines?

My preliminary thinking on the implications of “awake” machines began in graduate school at UPenn, where I studied positive psychology and cognitive science. It was sharpened by reading the essays of folks like Ben Goertzel and Nick Bostrom, as well as the neuroscience research of BrainGate and Ted Berger.

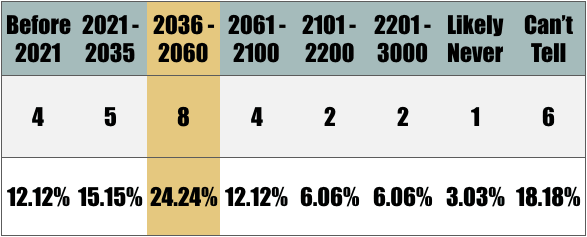

Bostrom’s 2014 poll of AI researchers showed that a reasonably large portion of PhDs in the field are optimistic about seeing human-level intelligence within their lifetimes. My own 2015 poll focused on another facet of replicating life in silicon: consciousness. I asked 33 AI researchers when they believed we might see machines that are legitimately self-aware, in the same way that animals are today.

You can see and interact with the entire set of responses from this poll in a previous article on our AI consciousness consensus. Organized by pre-provided timeframes, the responses show eight researchers predicting 2036–2060 as the period in which sentient machines are likely to emerge.

While having an “awake” computer doesn’t seem to have grand ethical ramifications in and of itself, it’s worth noting that most people (and a great many philosophers) agree that consciousness is what makes something morally relevant (“good” or “bad,” if you will).

If I kick my vacuum cleaner, it might be morally consequential in that it might hurt my foot, or it might be indicative of my anger or frustration. The vacuum is not affected, because it is not aware of itself. If someone were to kick their dog, this would be an entirely different story. When an entity is conscious, it weighs on a moral scale. You don’t have to be a utilitarian or abolitionist (in the Pearce-ian sense) to follow this reasoning.

A hypothetical computer “awareness,” assuming it followed the trajectory of other computer technologies over years of development, could be expected to become more “aware” with each passing year, potentially surpassing the self-reflective and sensory capacities of animals, or even of humans.

In terms of moral gravity at stake, here’s how the thought experiment goes:

IF: Consciousness is what ultimately matters on a moral scale

AND: We may be able to create and exponentially expand consciousness itself in machines

THEN: Isn’t the digital creation of consciousness of the greatest conceivable ethical gravity?

Though I’m not one to prognosticate, I also can’t easily forget something so compelling. Even if it were our grandchildren who had to juggle the consequences of aware and intelligent machines (though many experts don’t believe the wait will be that long), wouldn’t that still warrant an open-minded, well-intentioned, and interdisciplinary conversation about how these technologies will be managed and permitted to enter the world?

If consciousness is what ultimately counts, it would seem that we ought to think critically about how we play with that morally relevant “stuff,” assuming we are in fact moving closer to self-aware machines. Even if some PhDs are wrong and we are hundreds of years away from such a threshold in consciousness, isn’t it worth thinking seriously about how we design and roll out what may come after us, and perhaps supersede us?

Since the end of 2012, I’ve been largely consumed with the implications of this potential state of affairs, so much so that it has spawned hundreds of expert interviews, Emerj as a company, and presentations on the topic from Stanford to Paris.

All these things considered, it’s not what I write about most at present, simply because it’s not where the immediate utility and growth of Emerj lie. Emerj, as it exists today, will not make a name for itself (at least in the immediate future) as a media site about future AI ethical concerns, but as a market research firm that helps technology executives make the right choices when investing in AI applications and initiatives in industry (see our about page).

Empirically backed decision support for executives is a business, whereas dealing in ethics is not. Our present work in market research is a tremendous opportunity, and it will allow our company to grow profitably as a potential force for transparency and discourse around AI and machine learning in the years to come.

That being said, the moral cause of fostering a global conversation around “tinkering with consciousness” remains just as real and pressing. When governments and organizations eventually need further clarity on the ethical and social ramifications of these technologies (in addition to their impact on the bottom line), our vision is to be first in line to help inform conversations around ethics and policy. We’d like to expand faster than a non-profit could, which is why we designed Emerj primarily as a media site and business that will eventually help catalyze these important conversations.

Fortunately, we haven’t crossed any of the major metaphorical bridges with respect to superintelligence or aware machines, and time (at least to some degree) may be on our side. When all is said and done, it’s the intersection of technology and awareness that carries the greatest moral consequence. The intersection of technology and intelligence is part, but not all, of the nut that needs to be cracked in order to reach the frontier of consciousness.

Since I wrote the original version of this editorial in February 2016, organizations and initiatives at the intersection of AI and ethics have multiplied, including the Leverhulme Centre for the Future of Intelligence and a new joint initiative between the MIT Media Lab and the Berkman Klein Center for Internet and Society at Harvard University. Some of the most respected organizations in the field clearly want to further these conversations and explore hypothetical scenarios and issues regarding how AI might shape the future of humanity, and vice versa. I view this as evidence that my own thought experiments over the last five years and the far-reaching aim of Emerj are all the more relevant and necessary. As Emerj grows this year as a profitable company and increases its value to the business community, I’m excited to tune in to, and be a participating voice in, these expanding global conversations.

[This editorial has been updated as of March 29, 2017.]

(A special thanks and credit to all of the researchers who took part in our 2015 AI Researcher Consensus for contributing the quotes, theories, and predictions that helped inform the TEDx talk, and much of the related writing about AI and awareness featured here at Emerj and elsewhere.)