Founded in 2003 as Tesla Motors, the electric vehicle and clean energy company based in California currently has a market cap of over $700 billion – making it more valuable than the top seven automakers combined. Today, Tesla is well-known for its electric vehicles but the company also produces products for sustainable energy generation and storage such as solar panels, solar roof tiles, and more to enable “homeowners, businesses, and utilities to manage renewable energy generation, storage, and consumption”.

Tesla states that its mission is “to accelerate the world’s transition to sustainable energy.” In 2020, the company sold over 500,000 electric cars, bringing its cumulative production to more than 1 million vehicles. In the same year, Tesla also had the highest sales in the plug-in and battery electric passenger car segments.

In this article, we explore two different AI and ML-related use-cases at Tesla:

- Computer Vision for Autopilot AI – how Tesla works with PyTorch to create, train and improve the neural networks that aim to enable its cars’ Autopilot technology

- Opticaster Energy Asset Monitoring – Opticaster is one of the software programs developed by the Tesla Energy subsidiary as part of Autonomous Control, the company’s suite of energy optimization, management, and storage solutions

Computer Vision for Autopilot AI

Tesla describes Autopilot as a driving assistance system that aims to improve driver safety and convenience. When used correctly, the system could potentially reduce the driver’s overall workload. Computer vision is just one part of the broader set of AI applications that make Autopilot possible.

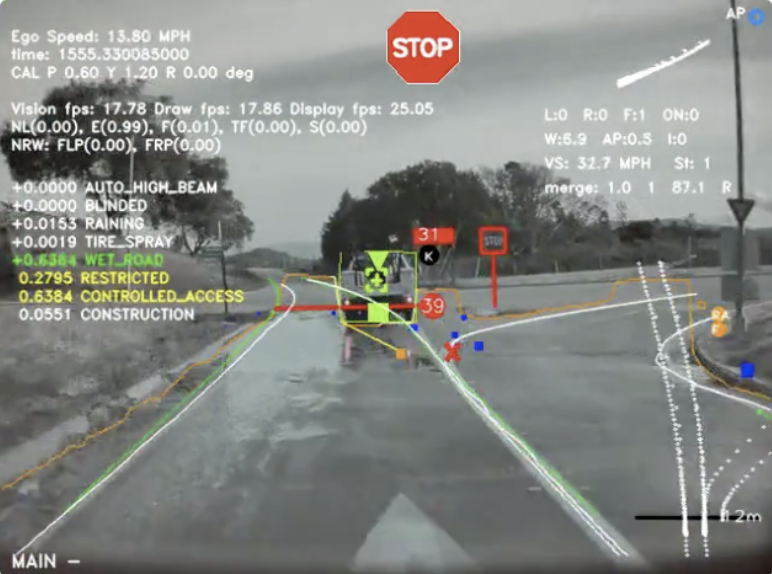

The company offers five different models, and each new Tesla vehicle is outfitted with “8 exterior cameras, 12 ultrasonic sensors, and a powerful onboard computer” as well as Autopilot technology, according to the company. Autopilot relies on these cameras and sensors because, unlike its competitors, the company does not use Light Detection and Ranging (LiDAR) or high-definition maps to interact with the environment.

One potential reason that Tesla is the only major car manufacturer to focus on computer vision instead of LiDAR could be the volume of data available to it: the company collects data from its vehicles to continue training and improving its deep neural networks. Andrej Karpathy, Head of AI and Computer Vision at Tesla, explained at a 2019 conference that Tesla is a vertically integrated company in general, and that this is no different when it comes to Autopilot intelligence.

Karpathy further stated that the company builds its own vehicles, places sensors on each vehicle, collects all of its own data, labels this data, and uses it to “train GPU clusters and then we take it through the entire stack, we run these networks on our own custom hardware that we develop in-house and then…we deploy them to our fleet of almost 3 quarter million cars and look at telemetry and try to improve the feature[s] over time.”

He explained that his team worked with PyTorch to train multi-headed neural networks, or “hydranets,” that analyze image data for road boundaries, traffic signals, obstacles, cars, and other things the vehicle may interact with. He went on to describe how the neural networks output a road layout prediction:

Very quickly you run across tasks that have to be a function of multiple images at the same time. For example…if you’re trying to predict road layout, you might actually need to borrow features from multiple other hydranets. So what this looks like is that we have all these different hydranets for different cameras but then you might want to pull in some features from these hydranets and go through a second round of processing, optionally recurrent, and actually produce something like a road layout prediction.
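To make the idea concrete, here is a minimal PyTorch sketch of a multi-headed network with a shared backbone, plus a second stage that combines features from several cameras into one prediction. It is an illustration under our own assumptions, not Tesla's architecture: the class names `HydraNet` and `RoadLayoutFusion`, the head names, the layer sizes, and the 10-value "layout" output are all hypothetical, and the optional recurrent step Karpathy mentions is left out.

```python
import torch
from torch import nn


class HydraNet(nn.Module):
    """Shared backbone feeding several task heads (hypothetical layout)."""

    def __init__(self, num_object_classes=4, num_signal_states=3):
        super().__init__()
        # Shared feature extractor: every task reuses these features.
        self.backbone = nn.Sequential(
            nn.Conv2d(3, 16, kernel_size=3, stride=2, padding=1),
            nn.ReLU(),
            nn.Conv2d(16, 32, kernel_size=3, stride=2, padding=1),
            nn.ReLU(),
            nn.AdaptiveAvgPool2d(1),
            nn.Flatten(),  # -> (batch, 32)
        )
        # One lightweight head per task; the task names are illustrative only.
        self.heads = nn.ModuleDict({
            "objects": nn.Linear(32, num_object_classes),
            "traffic_signal": nn.Linear(32, num_signal_states),
            "road_boundary": nn.Linear(32, 1),
        })

    def forward(self, images):
        features = self.backbone(images)
        outputs = {name: head(features) for name, head in self.heads.items()}
        return features, outputs


class RoadLayoutFusion(nn.Module):
    """Second round of processing over features borrowed from every camera."""

    def __init__(self, num_cameras=8, feature_dim=32, layout_dim=10):
        super().__init__()
        self.fuse = nn.Sequential(
            nn.Linear(num_cameras * feature_dim, 64),
            nn.ReLU(),
            nn.Linear(64, layout_dim),
        )

    def forward(self, per_camera_features):
        # per_camera_features: list of (batch, feature_dim) tensors, one per camera.
        return self.fuse(torch.cat(per_camera_features, dim=1))


torch.manual_seed(0)
num_cameras = 8
nets = [HydraNet() for _ in range(num_cameras)]
# Random tensors stand in for a batch of 2 frames from each camera.
frames = [torch.randn(2, 3, 64, 64) for _ in range(num_cameras)]

features = []
for net, frame in zip(nets, frames):
    camera_features, task_outputs = net(frame)
    features.append(camera_features)

layout = RoadLayoutFusion(num_cameras=num_cameras)(features)
print(tuple(layout.shape))
```

The point of the shared backbone is that the expensive convolutional features are computed once per camera and reused by every head, while the fusion module only sees the compact per-camera feature vectors.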

According to Tesla, there are a number of features that can be used with Autopilot technology, including Autopilot navigation, Autosteer+, Smart Summon, Autopark, and more. The company also demonstrates how to enable these features safely while driving on the highway.

According to Tesla’s Q4 2020 Safety Report, the company registered only one accident for every 3.45 million miles driven with Autopilot engaged. The company further stated,

“For those driving without Autopilot but with our active safety features, we registered one accident for every 2.05 million miles driven. For those driving without Autopilot and without our active safety features, we registered one accident for every 1.27 million miles driven. By comparison, NHTSA’s most recent data shows that in the United States there is an automobile crash every 484,000 miles.”
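The figures quoted above are easier to compare once they are converted to a common rate. The short sketch below does only that arithmetic on the numbers from the report; the variable and function names are our own, and the calculation does not adjust for differences in where or how the miles were driven.

```python
# Miles driven per registered accident, as quoted from Tesla's Q4 2020 Safety Report.
MILES_PER_ACCIDENT = {
    "Autopilot engaged": 3_450_000,
    "Active safety features only": 2_050_000,
    "No Autopilot, no active safety features": 1_270_000,
    "NHTSA US average": 484_000,
}


def accidents_per_million_miles(miles_per_accident):
    """Convert 'one accident every N miles' into accidents per million miles."""
    return 1_000_000 / miles_per_accident


def ratio_to_baseline(miles_per_accident, baseline_miles):
    """How many times longer the interval between accidents is than the baseline."""
    return miles_per_accident / baseline_miles


baseline = MILES_PER_ACCIDENT["NHTSA US average"]
for label, miles in MILES_PER_ACCIDENT.items():
    rate = accidents_per_million_miles(miles)
    ratio = ratio_to_baseline(miles, baseline)
    print(f"{label}: {rate:.2f} accidents per million miles, "
          f"{ratio:.2f}x the NHTSA interval")
```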

Opticaster Energy Asset Monitoring

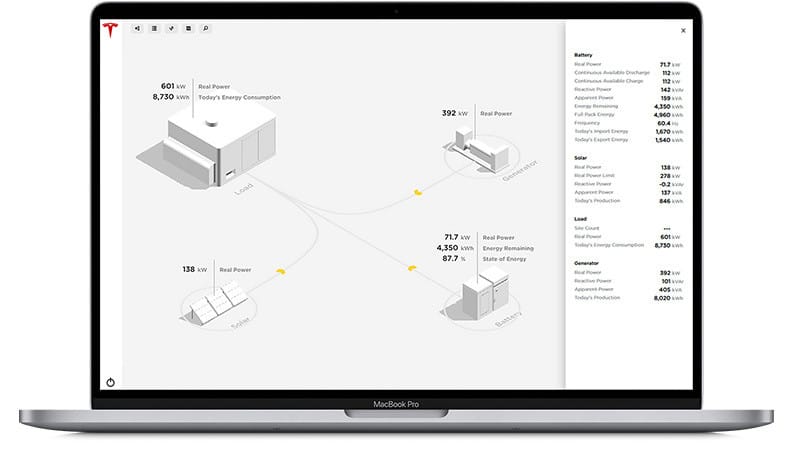

Autonomous Control is the name of Tesla’s software suite which, according to the company, uses machine learning, forecasting, optimization, and other technologies to potentially benefit its users in a number of ways, from reducing energy bills to facilitating remote microgrid control. This software suite contains four different programs: Autobidder, Powerhub, Microgrid Controller, and Opticaster.

Opticaster is “the fundamental machine learning and optimization engine for Tesla energy software” that ultimately aims to optimize the economic advantages of distributed energy resources while also ensuring their long-term viability, according to Tesla. More specifically, the software platform is claimed to optimize site-level energy spend and allow customers to employ their energy assets “to provide valuable services to the grid.”

Colin Breck, Senior Staff Software Engineer and Cloud Platforms Lead at Tesla, spoke at the QCon Software Development Conference, stating:

Software platforms… allow customers to monitor the performance of their systems in real-time or inspect historical performance over days, weeks, or even years. Now, these products for solar generation, energy storage, transportation, and charging, all have an edge computing platform. Zooming in on that edge computing platform for energy, it’s used to interface with a diverse set of sensors and controllers, things like inverters, bus controllers, and power stages.

As of today, we have been unable to identify any specific client use-cases with stated improvements to their operations as a result of Opticaster or other Autonomous Control software and products. Overall, the company doesn’t provide much detail about what data specifically is collected and how it is processed. Tesla claims that,

Opticaster solves complex use cases that combine multiple revenue streams while also respecting site export limits, throughput and backup constraints…Once a site is enrolled in an aggregation or grid service program, Opticaster ingests special price signals sent to site over-the-air. For example, a demand response dispatch may be called 24 hours ahead of time. The dispatch signal is sent to the local site controller where Opticaster will ingest it and adjust the day’s optimal dispatch schedule to generate the most value through co-optimized dispatch.
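Tesla does not publish how Opticaster computes its schedules, so the following is only a toy illustration of the workflow described in the quote: a site has a day-ahead price forecast, a dispatch signal overrides the price for certain hours, and the battery schedule is recomputed. The sketch uses a simple rule-based threshold heuristic rather than true co-optimization, and every name and number in it (`apply_price_signal`, `threshold_dispatch`, `schedule_value`, the prices, the battery size) is a hypothetical of ours.

```python
def apply_price_signal(prices, event_hours, event_price):
    """Return a copy of the price forecast with the event hours overridden."""
    updated = list(prices)
    for hour in event_hours:
        updated[hour] = event_price
    return updated


def threshold_dispatch(prices, capacity_kwh, power_kw, export_limit_kw):
    """Charge when the price is at or below a low threshold, export when it is
    at or above a high threshold, and otherwise hold.

    Returns one value per hour: positive = kWh charged, negative = kWh exported.
    Assumes one-hour steps, so kW and kWh per step are interchangeable.
    """
    ranked = sorted(prices)
    low = ranked[len(ranked) // 4]          # roughly the lower-quartile price
    high = ranked[(3 * len(ranked)) // 4]   # roughly the upper-quartile price
    stored = 0
    schedule = []
    for price in prices:
        if price <= low:
            # Charge, limited by power rating and remaining headroom.
            energy = min(power_kw, capacity_kwh - stored)
            stored += energy
            schedule.append(energy)
        elif price >= high:
            # Export, limited by power rating, site export limit, and stored energy.
            energy = min(power_kw, export_limit_kw, stored)
            stored -= energy
            schedule.append(-energy)
        else:
            schedule.append(0)
    return schedule


def schedule_value(schedule, prices):
    """Export revenue minus charging cost for a schedule (price is per kWh)."""
    return sum(-energy * price for energy, price in zip(schedule, prices))


# Hypothetical 24-hour price forecast in $/kWh.
day_ahead = [0.08] * 6 + [0.12] * 6 + [0.10] * 5 + [0.25] * 4 + [0.12] * 3

# A demand response event announced a day ahead raises the price for hours 13-14.
with_event = apply_price_signal(day_ahead, event_hours=[13, 14], event_price=0.60)

for label, prices in (("before signal", day_ahead), ("after signal", with_event)):
    plan = threshold_dispatch(prices, capacity_kwh=40, power_kw=10, export_limit_kw=8)
    print(label, plan, round(schedule_value(plan, prices), 2))
```

A production optimizer would weigh all hours jointly and account for forecasts, backup reserves, and battery throughput; the heuristic here only shows how a new price signal changes the inputs and therefore the resulting schedule.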

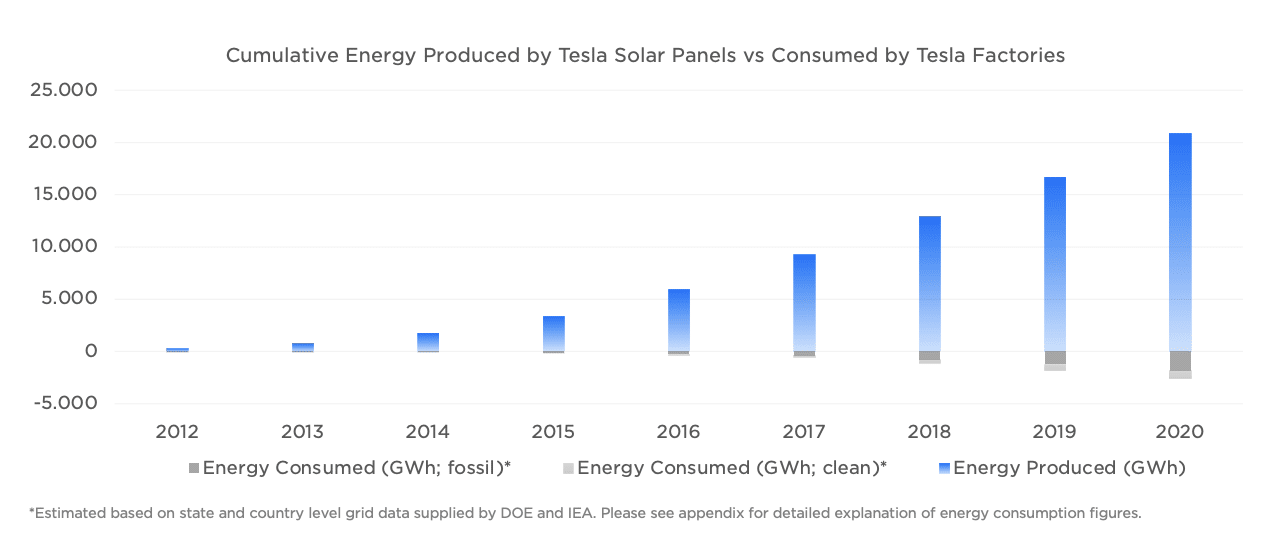

However, the company did state in its 2020 Impact Report: “As of the end of 2020, Tesla (including SolarCity prior to its 2016 acquisition by Tesla) has installed almost 4.0 Gigawatts of solar systems and cumulatively generated over 20.8 Terawatt-hours (TWh) of emissions-free electricity.”

Tesla purports that this is several times more clean energy than was needed to run all of its vehicle factories since production began in 2012. The company presented these metrics in a bar chart.

Finally, the company reported that in 2020, all of its products, from vehicles to solar panels, allowed Tesla customers and clients to avoid 5.0 million metric tons of carbon dioxide emissions.