Virtual assistants that respond to voice or text interactions have steadily gained traction and formed a profitable sector, according to Research and Markets. The market research firm projects that total revenue will hit $15.8 billion in 2021, up from $1.6 billion in 2015. The firm also estimates that the total number of global consumers will reach 1.8 billion by 2021.
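To put the revenue projection in perspective, the two endpoints imply a compound annual growth rate. The short sketch below is our own arithmetic, not a figure published by Research and Markets; it assumes smooth compounding over the six years between 2015 and 2021, and the function name is purely illustrative.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by two endpoint values."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Endpoints taken from the projection cited above (USD billions).
revenue_2015 = 1.6
revenue_2021 = 15.8

# Assumption: growth compounds evenly across the 2015-2021 window.
growth = implied_cagr(revenue_2015, revenue_2021, years=2021 - 2015)
print(f"Implied annual growth: {growth:.1%}")
```

Under that assumption, the projection works out to annual growth in the mid-40-percent range, which is one way to read the nearly tenfold increase over six years.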

The healthcare sector is a growing area of interest for the integration of virtual assistants. According to a study published by the American College of Physicians, primary care physicians spent 49 percent of their workday completing administrative tasks.

In an effort to reduce the administrative burden of medical transcription and clinical documentation, researchers are developing AI-driven virtual assistants for the healthcare industry.

This article will set out to determine the answers to the following questions:

- What types of AI applications are emerging to improve management of administrative tasks, such as logging medical information and appointment notes, in the medical environment?

- How is the healthcare market implementing these AI applications?

Our research suggests that the majority of AI use cases and emerging applications for virtual medical assistants appear to fall into three main categories:

- Medical Record Navigation: When companies and medical professionals use machine learning and natural language processing to search, analyze and record clinical data in a patient’s electronic health record (EHR).

- Medical Transcription: When medical professionals use machine learning and natural language processing to transcribe clinical data recorded during patient visits.

- Medical Information Search: When companies develop machine learning algorithms for chatbots to deliver personalized responses to searches for medical information by consumers and healthcare professionals based on consumer or patient data.

Below, we present representative examples from each category, as well as the current progress (funds raised, pilot applications, etc) of each example.

Medical Record Navigation

Nuance

Founded in 1992 with headquarters in Burlington, Mass., and offices around the globe, Nuance claims that its Dragon Medical Virtual Assistant uses AI and voice recognition technology to help automate the process of documenting clinical information. The company has focused on “speech, imaging and keypad solutions” for businesses and consumers. Applications include speech recognition software for the healthcare market and dictation software that helps companies record information more rapidly.

In September 2017, the firm announced the release of the virtual assistant, which would add to its suite of Dragon healthcare solutions.

According to the company, when a clinician records a note with medical information using the Dragon Medical assistant iPhone application, the cloud-based virtual assistant captures the medical terminology spoken. The voice recognition assistant is designed to integrate into the Electronic Health Record (EHR) systems that clinicians already use and access via desktop, mobile or online. Voice recordings can be added as notes to a patient’s file.

The Dragon Medical Virtual Assistant also allows clinicians to navigate their patient EHRs from their preferred device using voice commands to perform tasks such as prescribing medications, finding lab results or scheduling appointments.

The 2-minute video below presents examples of how a clinician may use the virtual assistant to navigate medical records using voice commands:

In a case study, Nuance reports that one of its Dragon healthcare solutions helped Universal Health Services, Inc. (UHS) reduce transcription costs by 69 percent, translating to $3 million in savings. UHS has a network of 26 acute care hospitals across the U.S. During the implementation period, Nuance reports that the rates of appropriate reimbursement increased at two UHS facilities in nine weeks.
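The two figures in the case study can be combined to estimate the size of the original transcription budget. The sketch below is a back-of-the-envelope calculation of our own, assuming the $3 million in savings corresponds exactly to the 69 percent reduction over the same period, which the case study summary does not spell out. The function name is hypothetical.

```python
def implied_baseline(savings: float, reduction_rate: float) -> float:
    """Original cost implied by an absolute saving and a fractional reduction."""
    if not 0 < reduction_rate <= 1:
        raise ValueError("reduction_rate must be in the interval (0, 1]")
    return savings / reduction_rate


savings_musd = 3.0     # USD millions saved, as reported by Nuance
reduction_rate = 0.69  # 69 percent reduction, as reported by Nuance

# Assumption: both figures describe the same cost base and time period.
baseline_musd = implied_baseline(savings_musd, reduction_rate)
remaining_musd = baseline_musd - savings_musd

print(f"Implied original transcription cost: ${baseline_musd:.2f}M")
print(f"Implied cost after the reduction:    ${remaining_musd:.2f}M")
```

If the assumption holds, the reported numbers imply a starting transcription cost of a little over $4 million, with somewhat more than $1 million remaining after the reduction.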

To date, the company claims its suite of healthcare solutions serves over “500,000 clinicians in 10,000 healthcare organizations globally.” Examples of partners and clients include Partners Healthcare, an affiliate of Harvard Medical School; the U.S. Veterans Healthcare Administration; and the Children’s Hospital of Boston.

According to the company’s LinkedIn page, hundreds of associated professionals list machine learning expertise.

Employees include Yuen Yee Lo, a Senior Research Scientist with a PhD in Speech Recognition from the University of Paris, and Paul Teeper, a PhD in Computer Science who leads Nuance’s Cognitive Innovation Group AI Lab.

Suki

Founded in 2017 and based in Redwood City, California, startup Suki, formerly called Robin AI, claims that its digital assistant uses AI to help physicians manage medical documentation.

Designed to integrate with a clinician’s existing EHR system, Suki’s assistant is likewise voice-activated and carries out commands given in natural language. According to the company’s website, the system is cloud-based and accessible through mobile or desktop interfaces.

Because Suki is still a relatively new company, case studies on product impact have yet to be published on its website. As of May 2018, the company had reportedly raised a total of $20 million in Seed and Series A funding.

The combined industry experience of Suki’s CEO Punit Singh Soni and co-founder and CTO Karthik Rajan includes Google, Motorola, Salesforce and Oracle.

While we could not find a demo for Suki, the 13-minute video below gives a demonstration of its previous, consumer-oriented application, called Robin AI.

According to the startup’s LinkedIn page, associated professionals with AI and machine learning expertise include Lead NLP Engineer Ray Mendoza, a PhD in Mathematical Behavioral Science from the University of California, Irvine, and Mohith Julapalli, a JD and MS in Computer Science from Stanford University.

Medical Transcription

Robin Healthcare

Founded in 2017 and based in Berkeley, Calif., emerging startup Robin Healthcare, which is unrelated to the above-noted Robin AI, claims that its virtual scribe leverages machine learning to document clinical information based on real-time natural dialogue between a physician and patient.

In a May 2018 press release, the startup announced its launch from stealth mode and appears to be initially targeting “orthopedics and other surgical subspecialties” with a goal to eventually expand to all specialties. According to the press release, the company has secured $3.5 million in Seed funding.

The assistant, called Robin, runs on a Robin-branded smart speaker placed in the clinic room in a location that is unobtrusive to the physician. While the physician is interacting and communicating with the patient, Robin’s speaker records while its built-in software continuously transcribes without requiring wake words, specialized dictation or any other change to the normal workflow. The process should result in a complete transcript of formatted, billable clinical notes sent directly into the patient EHR, according to the company.

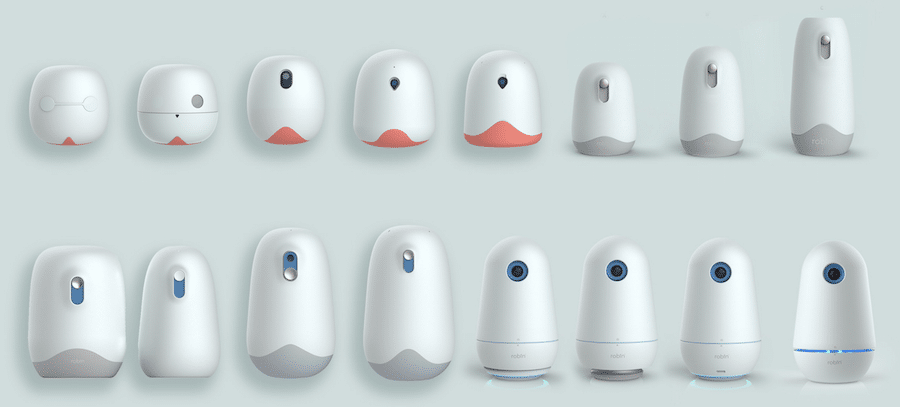

The image below shows what a Robin’s smart speakers look like:

Physicians are required to review the notes for any errors or necessary changes before they are submitted, and then sign their name to approve them. The algorithm used to develop the assistant was reportedly trained on “tens of thousands of patients encounters to complete clinical documentation” but data sources are not specified.
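The review-then-sign step described above can be pictured as a simple gate in software. The sketch below is purely illustrative and is not Robin Healthcare’s implementation: the `DraftNote` class, the `submit_to_ehr` function and the in-memory dictionary standing in for an EHR are all hypothetical names we introduce to show the idea that an unsigned draft cannot reach the patient record.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DraftNote:
    """A machine-generated clinical note awaiting physician review (hypothetical)."""
    patient_id: str
    text: str
    signed_by: Optional[str] = None

    def sign(self, physician: str, corrected_text: Optional[str] = None) -> None:
        # The physician may correct the draft before approving it.
        if corrected_text is not None:
            self.text = corrected_text
        self.signed_by = physician


def submit_to_ehr(note: DraftNote, ehr: dict) -> None:
    """Append a note to a stand-in EHR store, but only once it has been signed."""
    if note.signed_by is None:
        raise PermissionError("note must be reviewed and signed before submission")
    ehr.setdefault(note.patient_id, []).append(note)


# Usage: the dictionary is a placeholder for a real EHR integration.
ehr_store = {}
note = DraftNote("patient-001", "Draft transcript of the visit.")
note.sign("Dr. Example")
submit_to_ehr(note, ehr_store)
```

Calling `submit_to_ehr` on a note that has not been signed raises an error in this sketch, which mirrors the requirement that every transcript pass through the physician first.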

Clients include San Francisco Orthopedic Surgeons Medical Group and the American Association of Orthopaedic Executives (AAOE). Case studies on how Robin has impacted administrative efficiency and cost savings have not yet been reported.

Robin Healthcare backs its transcription accuracy claims with a guarantee that if any reimbursement claim is denied as a result of incomplete documentation, the “company will issue a refund for that documentation.”

According to LinkedIn, professionals associated with the company who possess machine learning expertise include Machine Learning Engineer Adam Richards, a PhD with post-doctoral training from Duke University.

A demonstration video of this application could not be found through YouTube, Vimeo, or website searches. However, we did find Robin’s visual portfolio pitch deck here.

Medical Information Search

MedWhat

Founded in 2010 and headquartered in San Francisco, Calif., MedWhat claims that its virtual assistant uses machine learning to respond instantly to medical and health questions from both patients and doctors.

The AI Personal Medical Assistant app can respond to verbal or typed questions from consumers after the user sets up a secure health profile, according to the company. Information requested in the profile includes “age, gender and current medical conditions.” For clinicians using the assistant in the medical environment, interactions are personalized based on data entered from patient EHRs.

While the source of medical data used to train the chatbot is not specifically stated on MedWhat’s website, the company does note that it has partnerships with Microsoft Accelerator Seattle and StartX, a startup accelerator associated with Stanford University.

The app is first downloaded to a user’s smartphone, and users can ask the assistant questions by either speaking or typing. Based on user interactions, the app periodically sends contextual health notifications and reminders, such as when to take medication.

The company says that information received from the virtual assistant should be used for informational purposes only and consumers with deeper concerns should see their physician.

In the 6-minute video below, Founder and CEO Arturo Devesa provides a walkthrough and demo of the chatbot and its features, starting at the 2:50 mark.

To date, the company has raised $3.2 million and, according to LinkedIn, current team members include Julian Kates-Harbeck, a Harvard PhD student with expertise in deep learning and neural networks.

Concluding Thoughts

Among current and emerging applications of virtual assistants in the healthcare industry, our research finds that machine learning is a consistent thread. While the objectives of these platforms are generally similar (to make the administrative workload less burdensome for clinicians), there are slight variations worth noting.

Navigation of electronic health records seems to be a primary focus for companies developing virtual assistant technology and for more established firms aiming to increase their competitiveness, as in the case of Nuance.

There is certainly much more traction to be gained in this market as many physicians experience frustration managing and navigating EHRs. In a survey of 7,609 physicians conducted by the research firm KLAS, 43 percent reported overall dissatisfaction with their EMR experience. The report also highlights the factors contributing to clinician satisfaction. Personalization is among these factors and is a prime opportunity for machine learning applications in this sector.

“Successful organizations understand that personalization settings are the key to making a one-size-fits-all EMR work for everyone. Personalizations that allow clinicians to quickly retrieve data or review a chart are the most powerful in improving clinician satisfaction.” – Creating the EMR Advantage – KLAS (November 2017 Report)

The use of virtual assistants for medical transcription support is also an area poised for growth. Market research firm Technavio estimates that the medical transcription market will reach over $72 billion by 2022 and that voice-enabled technologies will have a significant impact.

Robin Healthcare sets a tone in the market by guaranteeing refunds for rejected reimbursement claims processed using its technology. Reliable and high transcription accuracy could be a primary concern for physicians and healthcare institutions that may consider adopting this technology.

More research and use cases are needed to quantify cost savings and actual physician time saved. For example, Robin requires physician review of every transcript, which could become a separate administrative task depending upon the volume of patients.

Virtual assistants for medical information searches seem to be the smallest application category, as we found limited evidence of competition in this space. In the case of MedWhat, the bot is marketed to both consumers and clinicians, but it may be more rapidly adopted by consumers. Personalized and quickly accessible medical information is relevant to physicians but may be a lower priority for the average physician than navigating EHRs, for example.

We should anticipate continued growth and expansion of applications in this sector given the increasing rate of EHR adoption by physicians. For example, the Centers for Disease Control and Prevention report that roughly 87 percent of all office-based physicians are currently using an EMR/EHR system. To give an idea of the size of the current workforce, research published by the Federation of State Medical Boards suggests there are over 1 million licensed physicians serving more than 320 million patients.

Header image credit: Pixabay