This is a contributed article by The Future Society, edited by Emerj and authored by Samuel Curtis, Sacha Alanoca, Nicolas Miailhe, Yolanda Lannquist, Adriana Bora. To inquire about contributed articles from outside experts, contact [email protected].

The rapid development of artificial intelligence (AI) is raising urgent ethical and consumer protection issues, from potential bias in algorithmic recruiting decisions to the privacy implications of health monitoring applications. Policymakers have been exploring these issues in depth and are well aligned on identifying the most important ethical principles. They have now reached a pivotal point: moving from articulating principles to implementing public policy.

However, for these efforts to be fruitful, policymakers and companies need to be aligned. Coordination between stakeholders is critical to developing pragmatic policy and governance approaches that are informed by constraints and realities on the ground.

If alignment between policymakers and companies is paramount, the inevitable question is: how aligned are they currently? What issues are policymakers prioritizing as they focus on emerging applications of AI? To what extent are companies aware of, and planning for, these new issues?

To answer these questions, The Future Society and EYQ (Ernst & Young’s global think tank) undertook a global survey of policymakers and companies. The 2020 report reveals key gaps in alignment between the private sector and policymakers, which create new market and legal risks.

Companies are Misaligned with Policymakers at this Critical Moment

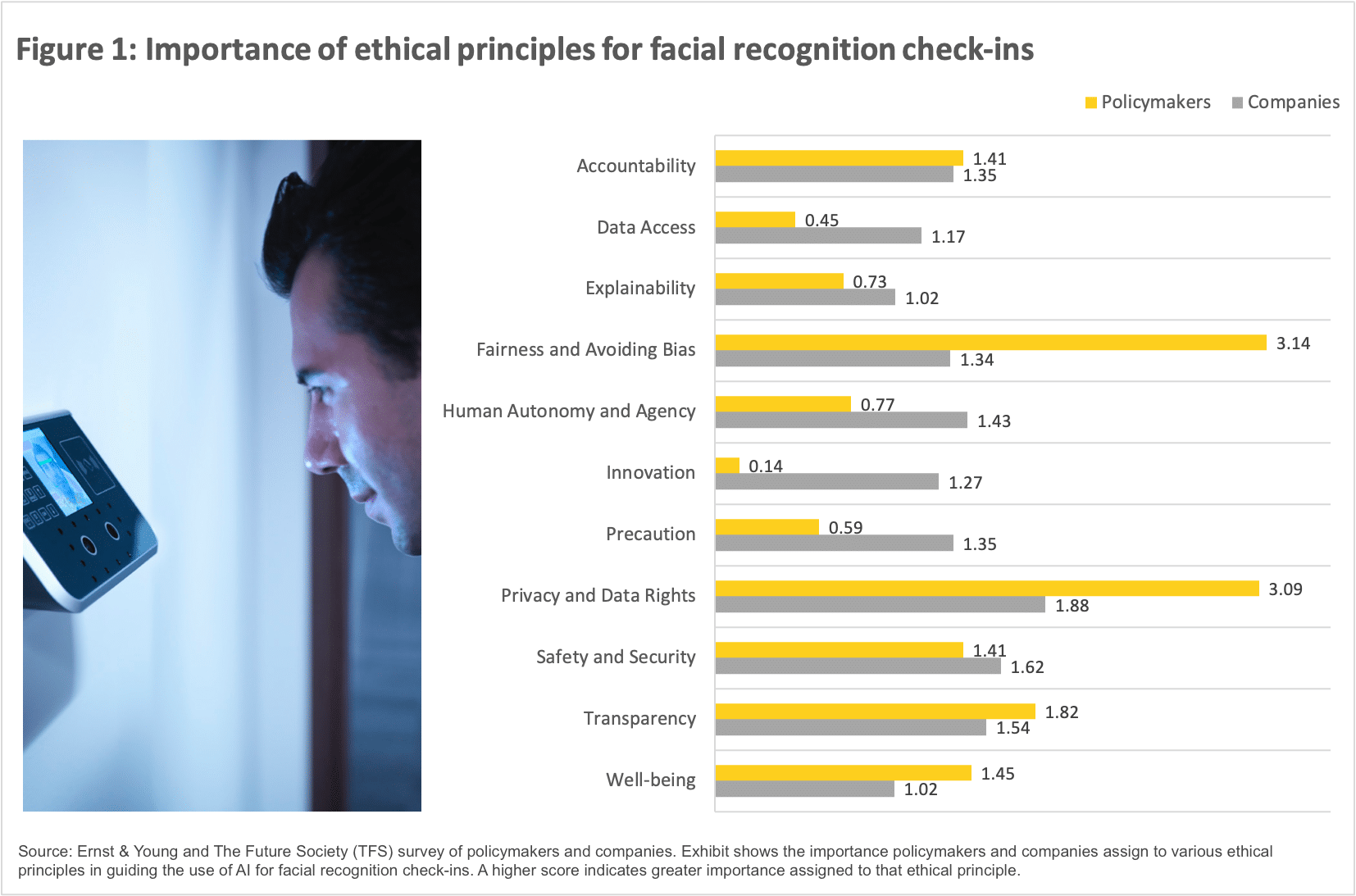

Policymakers are approaching consensus on the ethical principles they perceive as most relevant across AI use cases, consistently gravitating toward one or two principles by a wide margin. With respect to facial recognition check-ins, for instance, policymakers clearly preferred “fairness and avoiding bias” and “privacy and data rights” (see Figure 1). This reflects the considerable privacy concerns the technology raises and its potential for bias (e.g., due to differential performance across races and genders).

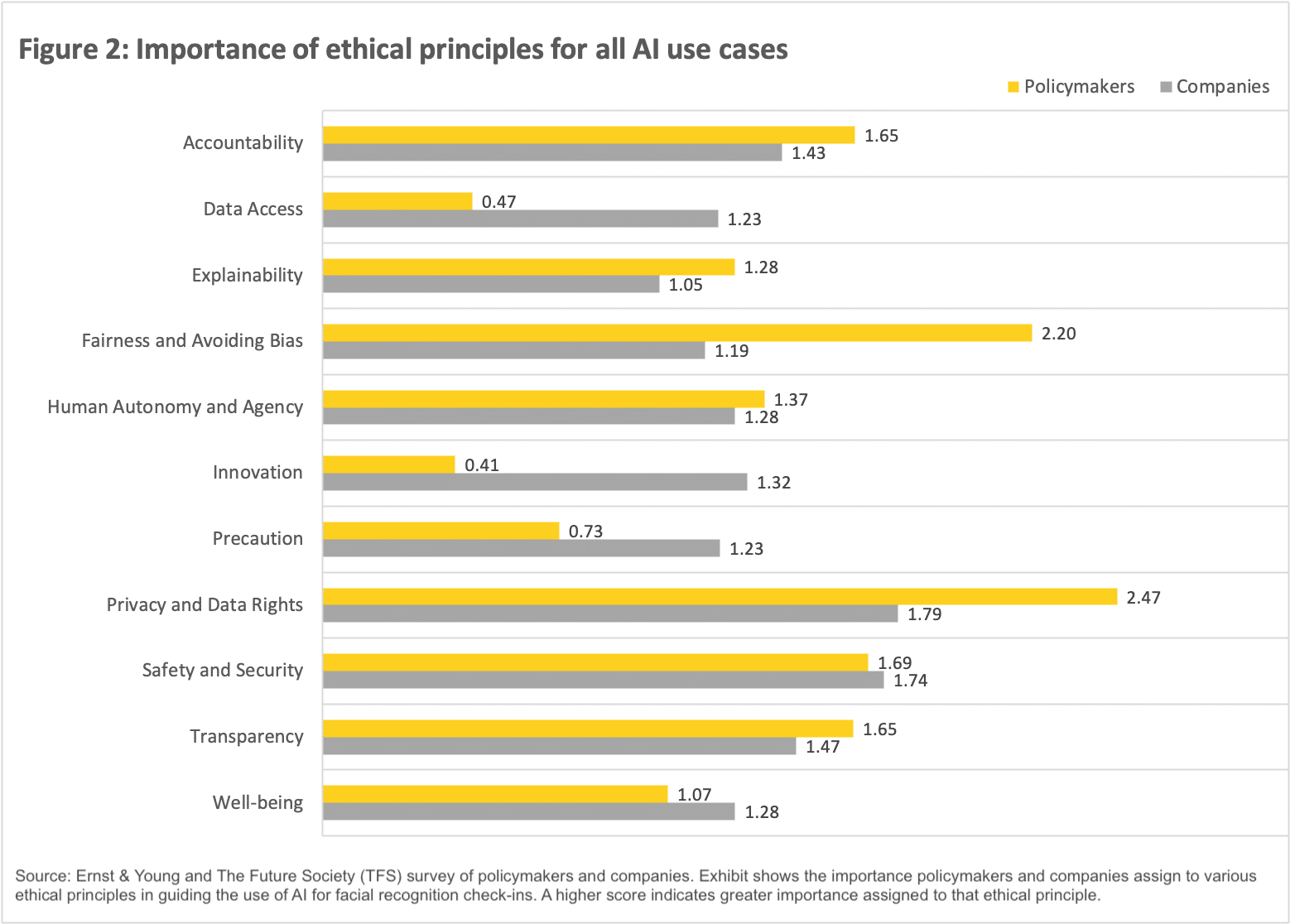

In contrast to policymakers, consensus does not seem to exist among companies. Their prioritization of ethical principles is relatively undifferentiated, with preferences distributed quite evenly across AI use cases and principles (see Figure 2). With respect to facial recognition check-ins, for example, respondents showed only marginal preferences for “privacy and data rights” and “safety and security,” with much narrower margins than those of policymakers.

Companies focus on principles with existing regulatory mechanisms, such as privacy and cybersecurity, as opposed to more abstract principles like accountability and human agency. These priorities may be explained by companies’ incentives and goals, such as maximizing revenue, outcompeting rivals, and satisfying shareholders. Companies may be inclined to treat ethical principles as legal-compliance checklists rather than non-negotiable tenets. In contrast, policymakers are more sensitive to the impacts of technologies, both proximate and downstream, beyond a business’s bottom line. Consequently, policymakers tend to focus relatively more on principles that are socially beneficial and less tangible, such as well-being and fairness, topics visited frequently in contemporary AI ethics.

Companies’ Misalignment with Policymakers Creates New Risks

Companies’ misalignment with policymakers will likely create a host of risks in this space, since firms may be developing products and services that are out of step with the market and regulatory environment in which they emerge:

- Market or Competitive Risk. Consumers are expressing strong concerns specific to different applications of AI, and policymakers are proceeding to address these concerns. Firms whose products or services don’t address these concerns will have fundamentally misread market demand, and risk losing market share.

- Reputational Risk. Today’s consumers have big megaphones thanks to social media. AI products and services that harm consumers could erode the company’s brand and public perception.

- Compliance Risk. If companies are not actively involved in shaping emerging regulations and don’t understand the ethical principles policymakers are prioritizing, they risk developing products and services that aren’t designed to comply with future regulatory requirements.

- Legal Risk. The inability to comply with regulations could expose companies to litigation and financial penalties.

To mitigate these risks, it is in companies’ best interest to coordinate with policymakers in developing realistic and effective policy measures and governance frameworks.

Recommendations for Companies

1. Focus on AI’s emerging ethical issues. GDPR was just the beginning. AI will raise a host of new ethical challenges.

- Which AI ethical principles are most important in your sector or segment?

- How will they affect your business?

- How should your strategy respond?

2. Engage with policymakers. If you’re not at the table, you’re on the menu. Policymakers are ready to move ahead — but, without industry input, blind spots could lead to unrealistic or onerous regulations.

- How do policymakers view AI governance and regulation in your sector or segment?

- What real-world issues are critical to understanding your business?

- How will you be part of the conversation?

3. Be proactive with soft and self-regulation. Stakeholders expect more now. If companies want to lead on AI innovation, they need to lead on AI ethics as well.

- Have you developed a corporate code of conduct for AI, and does it have teeth?

- How aligned is it with the ethical principles consumers and policymakers prioritize?

- How are you working with your peers (e.g., through trade organizations) on the issues?

4. Understand and mitigate risks. AI governance — and particularly the “hard regulation” variant — will create new challenges and risks. Companies’ misalignment with policymakers only increases those risks.

- What risks might the move to AI governance/regulation create for your business?

- How are you mitigating and preparing for these challenges?

About The Future Society

Read the full report Bridging AI’s trust gaps: Aligning policymakers and companies by EYQ and The Future Society.

The Future Society is an independent nonprofit (U.S. 501(c)(3)) ‘think-and-do-tank’ originally incubated at Harvard Kennedy School in 2014. The Future Society’s mission is to advance the responsible adoption of AI and emerging technologies to benefit humanity. The Future Society is listed among the ‘Best Think Tanks for Artificial Intelligence’ in the University of Pennsylvania Lauder Institute’s Global Go To Think Tank Index. Find the list of our AI policy research, advisory services, seminars & summits, education projects, events, and AI technical projects at https://thefuturesociety.org.