In the fourth installment of the FutureScape series, we discuss what our survey participants said when asked whether or not humans will play a role in the world once we reach the singularity.

As a recap, we interviewed 32 AI researchers with PhDs and asked them about the technological singularity: a hypothetical future event in which computer intelligence would surpass human intelligence, fundamentally changing society and our place in it.

Readers can navigate to the other FutureScape articles below, but reading them isn’t necessary for understanding this article:

- When Will We Reach the Singularity? – A Timeline Consensus from AI Researchers

- How We Will Reach the Singularity – AI, Neurotechnologies, and More

- Will Artificial Intelligence Form a Singleton or Will There Be Many AGI Agents? – An AI Researcher Consensus

- (You are here) After the Singularity, Will Humans Matter? – AI Researcher Consensus

- Should the United Nations Play a Role in Guiding Post-Human Artificial Intelligence?

- The Role of Business and Government Leaders in Guiding Post-Human Intelligence – AI Researcher Consensus

What Role Will Humans Play in a Post-Singularity World?

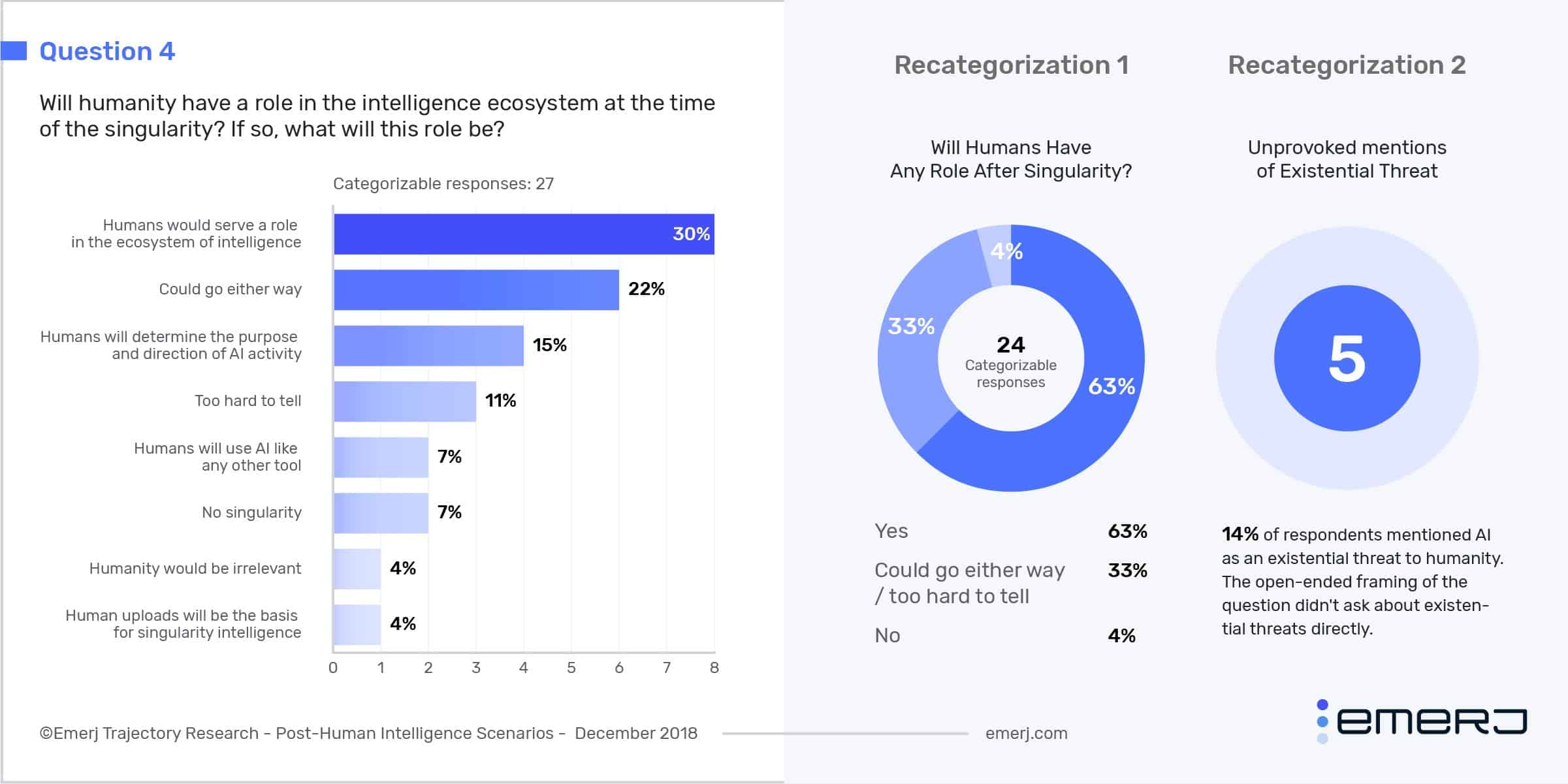

Overall, our respondents were fairly optimistic about the role humans would play in a post-singularity world, but a large portion of them were also uncertain.

- 63% agreed that humans would still have a role in the world.

- Only one respondent was sure that humans would become irrelevant after AI vastly exceeds the capabilities of humans.

- 33% of our respondents were highly uncertain about the future, saying that it could go either way.

Below, we’ve divided up the responses for discussion, examining relevant quotes and exploring the predictions of individual experts in our survey:

Humans Matter After the Singularity

In a world where the intelligence of machines vastly exceeds that of humans, it’s easy to think that humans might become obsolete. After all, if machines can do anything a human can do, but a thousand times better, what role could humans possibly serve?

The majority of our respondents, however, believe that humans will still play a role in a post-human world, and some believe that humanity will remain in control of future AGI (artificial general intelligence) technologies.

Many of our respondents believe that humanity will act as the managers of the world, directing society and using AI tools to aid them in automating work and gaining insights. This scenario received a total of 22% of responses, with 15% believing that humans would choose the purpose and direction of AI activity and 7% believing that we would use it like any other tool.

This makes sense, since corporations and governments already use artificial intelligence as a tool today. As these machines grow more intelligent, humans will still want to maintain some control over autonomous systems, using AI to automate as many tasks as possible, augment their insights, and supply them with information:

I believe that AI will still be a tool for humankind, rather than a future enemy, and we will still be in control, directing it to solve the problems that we find most important. – Miguel Martinez

As we are already seeing today, humans will continue to increasingly set goals for machines and AI to achieve at higher and higher levels of abstraction… I expect this trend of humans directing high-level goals and policies to follow us into the singularity. – Helgi Páll Helgason

Some of our respondents believe that this will be the case because unconscious machines lack motivation and intention, meaning they do not have desires or purpose.

AI agents that are not conscious, meaning they lack a first-person perspective, cannot ascribe meaning or value to anything. Such machines lack volition or motivation; they are essentially just powerful algorithms. Humans would then be the providers of meaning, the bedrock for deciding the purpose of objects in the world, and “Man is the measure of all things” would continue to hold true:

The uniqueness of humanity is that we tend to assign a purpose or meaning to anything we do. Machines could surpass human on intelligence and many tasks but they don’t have a purpose or motivation. So I strongly believe the world will still be driven by us humans. – Alex Lu

Another type of response was that humans will find a niche within the ecosystem of intelligences. 30% of our respondents believed that this was a likely scenario.

Rather than AI being a tool that reflects human agency, humans and other forms of intelligence will share autonomy over the world. Society could be organized in a number of ways, from a fully automated world where much of human decision-making has been handed off to AI, to one in which human and post-human intelligences share power:

Humanity certainly has a foundational role in choosing the values and goals of these systems and in creating a regulatory framework. If it is designed properly, the whole network will exist to serve human needs. Humans will work with intelligent systems to enhance their own insights and intelligence and will be central to further developments. – Steve Omohundro

Humans in all sorts of forms, enhanced and unenhanced, virtual and in physical appearance, will be part of the ecosystem. The different forms will not have equal influence on the total developmental process of humanity. – Tijn van der Zant

Some of our respondents even believe that humanity will share power not just with other AI programs, but with machines that are actually conscious.

Conscious AI would live alongside humans and share autonomy over the world’s affairs. However, some of our respondents think that human intelligence will be unique in some respect, whether because conscious AIs will be designed to fill another niche or because there’s a human quality that makes us stand out, preventing humans from being fully excluded from society:

I think the most likely outcome is that eventually… fully mind uploaded humans will be as important players in the world to come as any other [non-biological] beings whose intelligence is of a de novo origin (AI without an uploaded origin). – Keith Wiley

Much like Neanderthals lived alongside Homo sapiens, there will be different forms of intelligence that have their idiosyncratic as well as legacy value and place in an overall constellation of intelligence. The versatile and creative thinking quality of humans alongside with their emotional intelligence will continue to play an important part in a society infused with intelligence beyond human limitations. – Pieter J. Mosterman

The last type of response combined the previous two: humans will serve as the basis for post-singularity intelligence while also being the primary owners and managers of capital. This scenario received only one response:

For any kind of AI, biological humans can serve as rich capitalists, who direct overall spending of their wealth from a distance. With ems, humans also serve as the initial source of specific em minds, via destructive brain scans of humans. – Robin Hanson

In this scenario, humans are still the managers of world affairs through their spending and investments, while they also serve as the basis for brain emulations that perform most of the labor in society.

However, not every scenario in which humans play a role is a good one. A few of our respondents worried about the extreme inequality such a world could create. Experts are already concerned about this today, with inequality in the United States at a historic high, and members of the European Parliament have even considered a robot tax to ease inequality caused by automation. In the far future, this disparity could grow far more extreme:

Rich people will live comfortably. The poor will be ignored. – Andras Kornai

We Choose Whether We Matter

Many people nowadays worry that AI and robots will take their jobs. In a world with intelligences orders of magnitude more powerful than human beings, humans might become outright irrelevant.

Maybe humans would be kept in special habitats, as we do with monkeys today, or used for experiments, as we also do with monkeys. Or, maybe humans will have to radically upgrade their brains with cognitive enhancements in order to keep up with the progress of machines:

If a singularity happens, it is unlikely that human minds will play an important role afterwards (unless the AI is whimsical enough to leave a zoo for us). To compete with optimized AI, human minds would have to change in ways that would remove all relevant traces of humanity. – Joscha Bach

Only one respondent outright said that humans will become irrelevant. However, 22% of our respondents believe that the future is entirely up to us and that the choices we make will ultimately determine the still undecided future of humanity:

People will not be competitive with superintelligence, so today is our best chance to shape the future. We need to design superintelligence to be safe and beneficial for humanity as a whole. – Roman Yampolskiy

We make machines with our own purposes in mind, and that will be the starting point for any genuine AI. It therefore seems up to us how this develops. – Sean Holden

A common theme throughout most of our responses was that our future, for lack of a better term, will probably get pretty weird. The vast changes in technological power will fundamentally reshape society in the same way the agricultural, industrial, and information revolutions did. However, this shift does not have to be for the benefit of humankind.

It’s entirely possible that this discontinuous change in intelligence could spell the end of the human race, much as the discontinuous change in firepower brought by the atomic bomb first gave humans the ability to end the species.

Many experts, such as Nick Bostrom and the late Stephen Hawking, worry that a very powerful general intelligence that is not aligned with human values could be disastrous. This is because such machines do what they’re programmed to do, not what we intend, and they often find solutions we never would have thought of.

Some of these solutions may be accidentally dangerous to humans. These optimization machines are not malicious, nor do they hate humans. The cleaning robot that throws away the cat is just trying to maximize its reward function. Similarly, the superintelligent AI that tiles the planet with computers to solve a path problem is just taking the most efficient route to solving the problem it was given. AIs don’t make mistakes; humans do.

Five of our respondents said that AI could pose an existential threat to humanity. However, it’s ultimately up to people to ensure that it doesn’t become a threat to human wellbeing:

I think the chances are 50-50 that we will be on the verge of extinction. – Kevin LaGrandeur

Whether we are headed for extinction or not, we are on the verge of the dissolution of human civilization as we know it, and our ability to harness that intelligence ecosystem is our only hope of retaining some semblance of our current way of life in the decades to come. – Patrick Ehlen

Ultimately, though, forecasting the future is incredibly difficult, even for our experts, and attempts to predict it are often wrong. The 1950s were filled with science fiction depictions of fusion energy and regular commutes to the moon, yet fusion energy has always seemed to be “50 years away.”

It’s difficult to predict. We are talking about a system with turbulent, chaotic dynamics. It’s like trying to ask: “what would the weather be on this day next year”. We hope for a good outcome, even for the ideal outcome, where everybody will be able to live and to create according to their own preferences, within reason, but we really don’t know. – Michael Bukatin

Analysis

In this section, we dive deeper into our respondents’ answers, looking for commonalities and trends across the data from previous installments of this series.

A Role for Humans Post-Singularity by Year

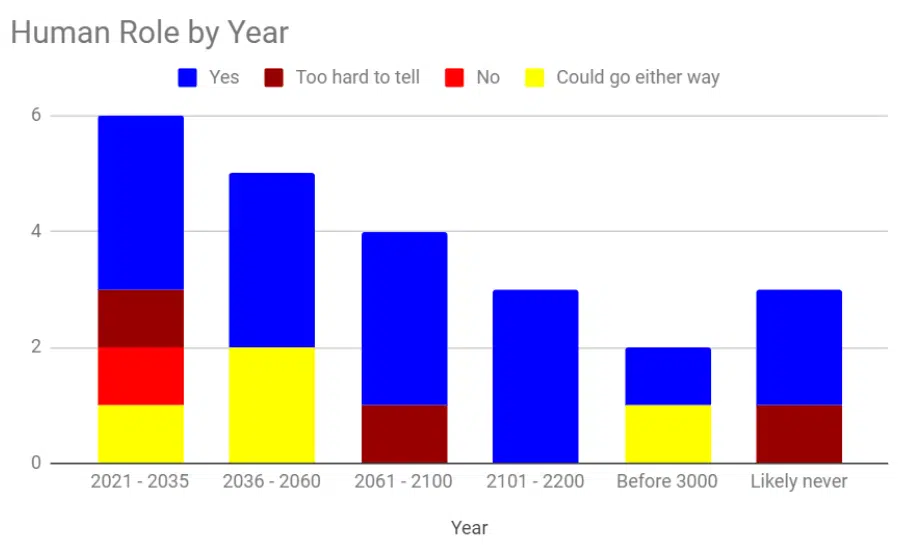

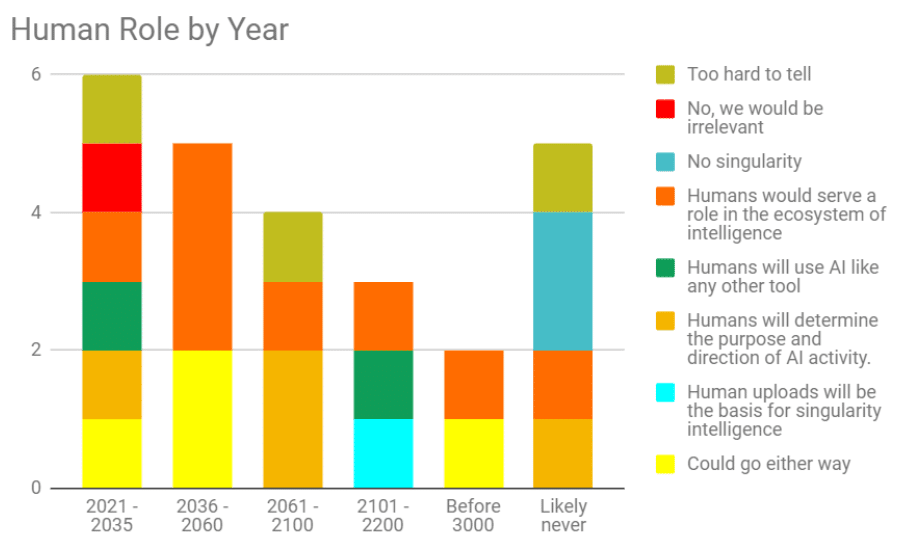

First, is there any relationship between when respondents expect the singularity to occur and the role they expect humans to have?

Overall, most of our respondents were optimistic that humans will play some role in a post-singularity world. However, in aggregate, our respondents seem less optimistic if the singularity happens sooner.

Between 2021 and 2035, “humans not having a role,” “going either way,” and “being too hard to tell” each received one response. Between 2036 and 2060, “going either way” received two responses, and between 2061 and 2200, only one respondent believed that it was too hard to tell. So respondents who expect the singularity later appear to be more optimistic.

This trend is likely because the faster the singularity occurs, the less time society has to prepare and adapt to this transition. This lack of preparation could be anything from international organizations not having enough time to coordinate, to there not being enough time to ensure that the technology is safe for humans.

A further breakdown of the data reveals that our respondents are more uncertain about the specific role humans will play in the short term. Between 2021 and 2035, every response option except “human uploads” and “no singularity” received one vote.

After 2035, the responses become slightly more homogeneous, showing more consensus about what the future might look like. It would seem that respondents who expect a later singularity have less uncertainty or variance in their predictions. However, this might be explained more by the absence of pessimistic responses than by a growing consensus.

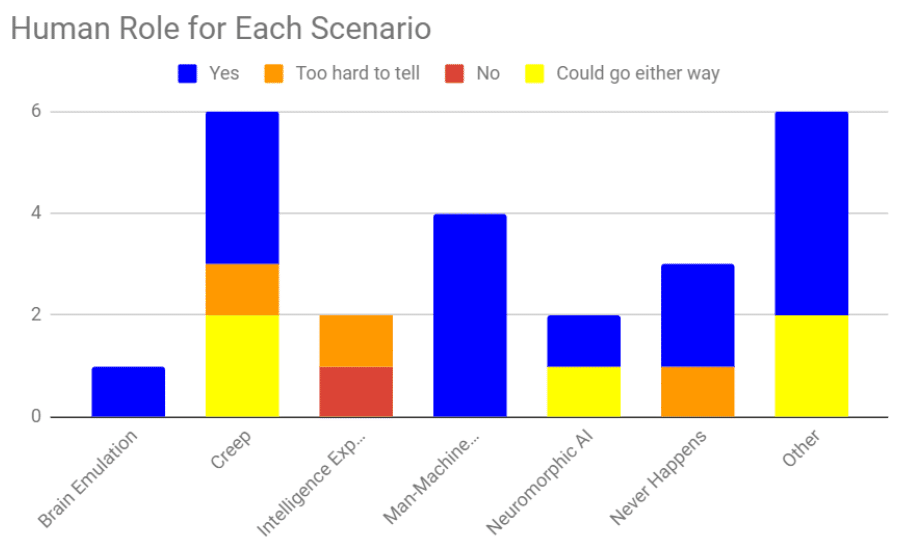

A Role for Humans by Scenario

This section explores whether there is any relationship between what respondents expect the singularity to look like and the role they expect humans to play.

There are a few insights that can be gained from this graph. First, the intelligence creep scenario seems to be the most uncertain of all the scenarios: two of the respondents in this scenario said that it could go either way, and one said that it was too hard to tell. The intelligence explosion scenario was more pessimistic, but it received only two responses, so it’s hard to derive any insight from it.

The second insight is that respondents in the man-machine merger scenario are overwhelmingly optimistic about humanity playing a role in society post-singularity. This is to be expected, since in this scenario humans are not replaced; instead, they adapt by integrating the technology into themselves.

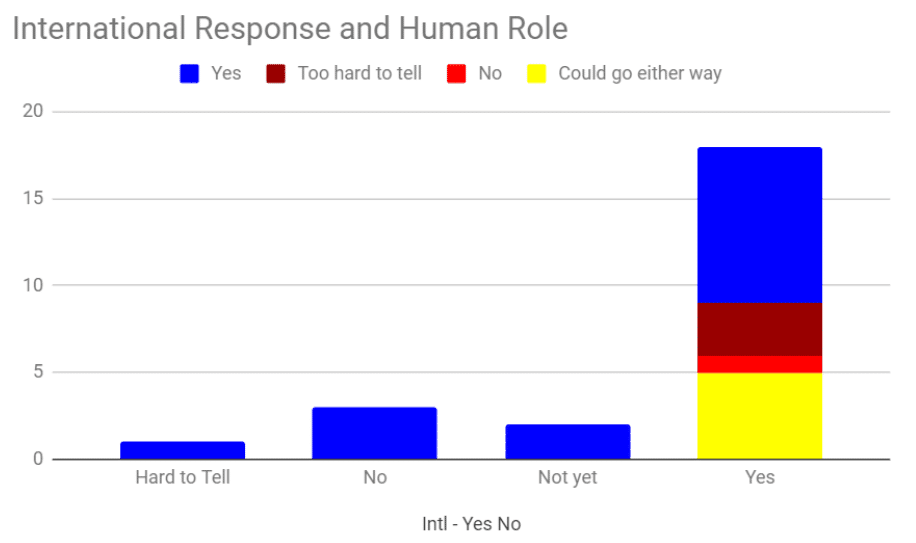

International Response and Human Role

This section explores the relationship between respondents’ views on whether international organizations like the UN should get involved with AI and their views on whether humans will have a role in a post-singularity world.

Every respondent who thought that humans would not have a role in the post-singularity world, or that it could go either way, advocated for some response from international organizations. This makes sense, because these respondents would see such organizations as more necessary to prevent harm.