Founded by Steve Jobs, Ronald Wayne, and Steve Wozniak in 1976, Apple is a global technology corporation specializing in consumer devices, software, and online services.

As of 2021, Apple is the world’s most valuable corporation and the world’s largest technology firm by revenue, with $274 billion in sales in 2020. It also ranks among the world’s most profitable companies.

According to the company, its “more than 100,000 employees are dedicated to making the best products on earth, and to leaving the world better than [they] found it”. Apple is well known for its “innovation with iPhone, iPad, Mac, Apple Watch, Apple TV, and AirPods” devices, each of which runs a particular software platform, such as iOS on iPhones and macOS on Mac desktops and laptops.

In this article, we explore two discrete AI-related applications at Apple:

- Siri – Apple’s AI-enabled assistant that is available across Apple devices and aims to help users quickly accomplish daily tasks

- Smart HDR and Deep Fusion Photos – a look at Apple’s machine learning-powered image processing systems available in their latest devices

Siri Voice Assistant

Siri, the first virtual personal assistant, was the result of decades of artificial intelligence research at SRI. The cognitive assistant was created in collaboration with EPFL, the Swiss Federal Institute of Technology in Lausanne, as part of the SRI-led Cognitive Assistant that Learns and Organizes (CALO) project within DARPA’s Personalized Assistant that Learns (PAL) program, the largest-known AI project in US history.

In 2007, SRI formed Siri, Inc. to bring the technology to the general public, and the new company raised $24 million in two rounds of funding. Apple acquired Siri in April 2010 and debuted it as an integrated feature of the iPhone 4S in October 2011.

As an AI-enabled assistant, Siri is built on large-scale machine learning algorithms that combine speech recognition and natural language processing (NLP). Speech recognition involves translating human speech into its written equivalent while NLP algorithms aim to decipher the intent of what a user is saying.
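To make the second stage concrete, here is a minimal sketch of intent detection applied to text that a speech recognizer has already transcribed. It is a toy, rule-based stand-in and not how Siri works: the intent names, the patterns, and the `parse_intent` function are all hypothetical, and a production assistant would rely on learned models rather than regular expressions.

```python
import re

# Hypothetical intents and patterns, chosen only for illustration.
INTENT_PATTERNS = {
    "set_timer": re.compile(r"\btimer for (?P<minutes>\d+) minutes?\b"),
    "get_weather": re.compile(r"\bweather in (?P<city>[a-z ]+)$"),
}


def parse_intent(transcript):
    """Map a transcribed utterance to an (intent, slots) pair."""
    # Normalise case and drop trailing punctuation from the transcript.
    text = transcript.lower().strip().rstrip("?.!")
    for intent, pattern in INTENT_PATTERNS.items():
        match = pattern.search(text)
        if match:
            return intent, match.groupdict()
    # No pattern matched, so the request is not understood.
    return "unknown", {}


print(parse_intent("Set a timer for 10 minutes"))
print(parse_intent("What's the weather in Paris?"))
```

The point of the sketch is the division of labour: speech recognition produces the string, and a separate step decides what the user wants and which details (the "slots") matter.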

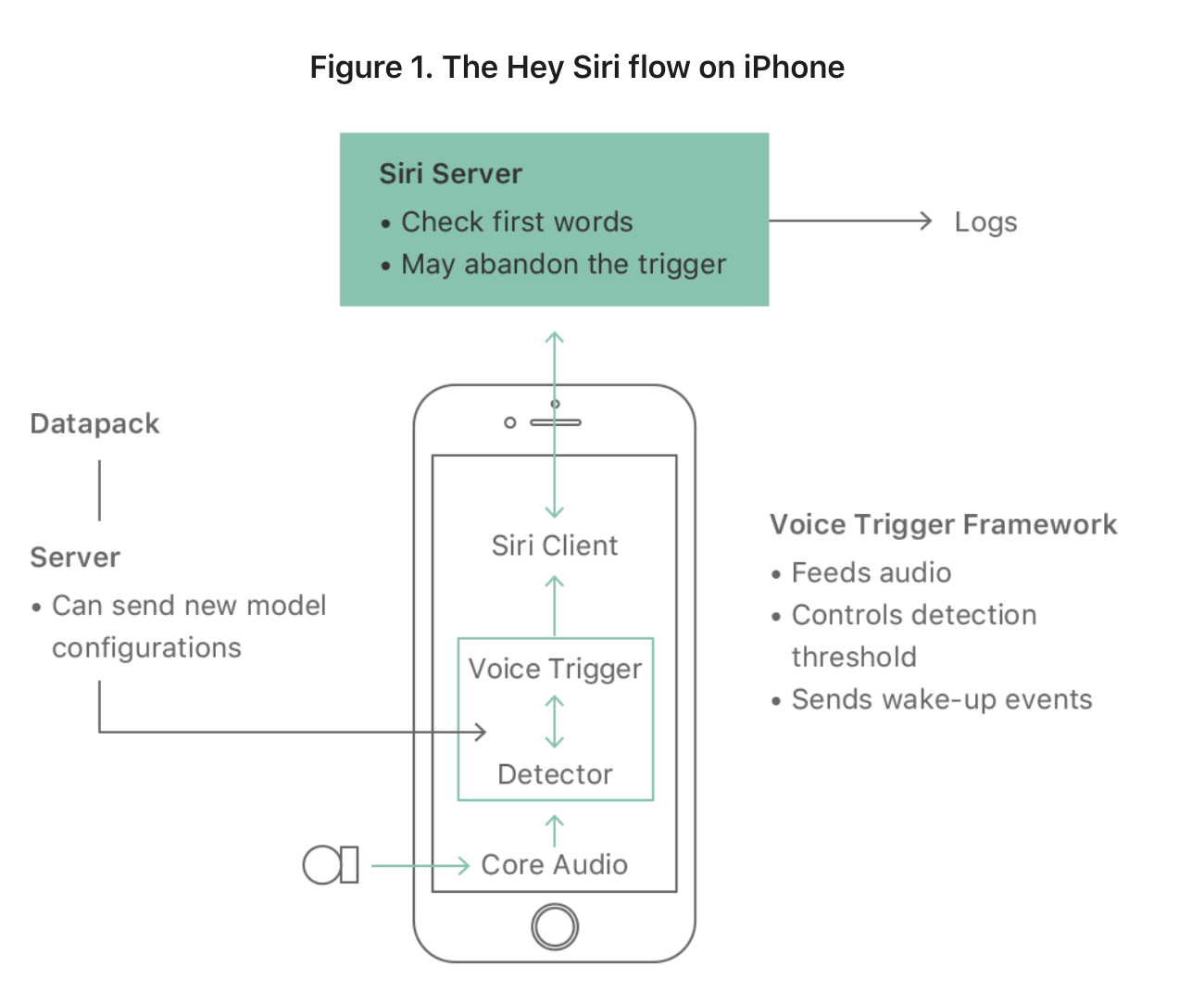

Apple’s Siri Team explains in an article how Siri detects user queries through the “Hey Siri” command:

A very small speech recognizer runs all the time and listens for just those two words. When it detects “Hey Siri”, the rest of Siri parses the following speech as a command or query. The “Hey Siri” detector uses a Deep Neural Network (DNN) to convert the acoustic pattern of your voice at each instant into a probability distribution over speech sounds. It then uses a temporal integration process to compute a confidence score that the phrase you uttered was “Hey Siri”. If the score is high enough, Siri wakes up.
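The two steps in that description, per-frame probabilities followed by temporal integration into a single confidence score, can be sketched roughly as follows. This is a simplified illustration under stated assumptions, not Apple's implementation: the acoustic model's output is represented as plain dictionaries, the integration is a basic left-to-right dynamic program, and the function names and the `0.6` threshold are hypothetical.

```python
import math


def wake_word_score(frame_probs, target_states):
    """Return a confidence in [0, 1] that the frames spell out target_states.

    frame_probs: one dict per audio frame, mapping a sound-class label to
                 the probability the acoustic model assigned to it.
    target_states: ordered sound-class labels that make up the wake phrase.
    """
    n = len(target_states)
    if n == 0 or len(frame_probs) < n:
        return 0.0  # too few frames to pass through every state
    floor = 1e-6  # avoids log(0) for classes missing from a frame
    neg_inf = float("-inf")
    # best[i] is the best cumulative log-probability of any path that
    # has reached state i at the current frame.
    best = [neg_inf] * n
    for t, probs in enumerate(frame_probs):
        new_best = [neg_inf] * n
        for i, state in enumerate(target_states):
            log_p = math.log(max(probs.get(state, 0.0), floor))
            if t == 0:
                prev = 0.0 if i == 0 else neg_inf  # paths start in state 0
            else:
                stay = best[i]
                advance = best[i - 1] if i > 0 else neg_inf
                prev = max(stay, advance)
            new_best[i] = prev + log_p
        best = new_best
    # Average over frames so utterances of different lengths are comparable.
    return math.exp(best[-1] / len(frame_probs))


def is_wake_word(frame_probs, target_states, threshold=0.6):
    """Wake up only if the integrated score clears the (assumed) threshold."""
    return wake_word_score(frame_probs, target_states) >= threshold


frames = [{"a": 0.9, "b": 0.1}, {"a": 0.8, "b": 0.2}, {"a": 0.1, "b": 0.9}]
print(is_wake_word(frames, ["a", "b"]))
```

Each frame may either stay in the current sound class or advance to the next one, so the score rewards audio that moves through the phrase in order and penalises audio that contains the right sounds in the wrong sequence.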

The team also details how they trained this model by creating a language-specific phonetic specification for the “Hey Siri” phrase. For some languages, this meant training with different regional pronunciations. Pronunciation matters because each phonetic symbol in the phrase maps to three sound classes, each of which has a corresponding output from the acoustic model.
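As a rough illustration of that expansion, the sketch below turns a sequence of phonetic symbols into three sound classes per symbol. The phone labels are an illustrative ARPAbet-style guess at one English pronunciation, and the `begin`/`middle`/`end` naming is an assumption made for this example; neither is taken from Apple's actual specification.

```python
# Hypothetical phonetic specification for one pronunciation of the phrase.
HEY_SIRI_PHONES = ["HH", "EY", "S", "IH", "R", "IY"]

# Assumed names for the three sound classes derived from each symbol.
PARTS = ("begin", "middle", "end")


def expand_to_sound_classes(phones):
    """Return three ordered sound-class labels for every phonetic symbol."""
    return [f"{phone}_{part}" for phone in phones for part in PARTS]


states = expand_to_sound_classes(HEY_SIRI_PHONES)
print(len(states))
print(states[:3])
```

A regional pronunciation would simply be a different phone list, which is why the specification has to be written per language and sometimes per accent.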

At the Worldwide Developers Conference (WWDC) in 2020, Yael Garten, Director of Siri Data Science and Engineering, stated that Siri processes 25 billion requests per month.

Our secondary research was unable to identify substantial evidence that Apple has sold more products because of Siri or upgrades to Siri’s software.

Smart HDR and Deep Fusion Photos

With the release of the iPhone XS in 2018, Apple introduced its Smart HDR photo mode. One year later, alongside the iPhone 11, the company announced an upgrade to Smart HDR as well as a new image processing system called Deep Fusion.

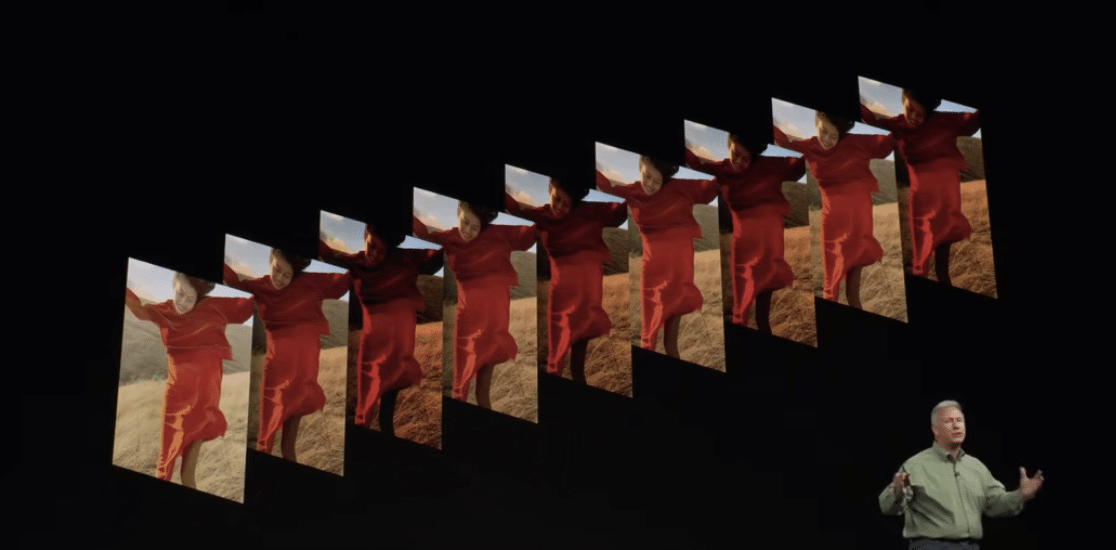

At Apple’s September 2018 iPhone launch event, Phil Schiller introduced Smart HDR and explained how it works.

One year later, the company announced Deep Fusion, a new application of machine learning that runs on the Neural Engine of the A13 Bionic chip. Schiller stated:

It shoots nine images. Before you press the shutter button, it’s already shot four short images, four secondary images. When you press the shutter button, it takes one long exposure. And then in just one second, the neural engine analyses the fused combination of long and short images, picking the best among them, selecting all the pixels, and pixel by pixel, going through 24 million pixels to optimize for detail and low noise… It is computational photography mad science.
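The idea of choosing pixel by pixel among several exposures can be illustrated with a very small sketch. This is not Deep Fusion, which relies on a neural network whose details Apple has not published. It is a hand-written heuristic on tiny grayscale images: for each pixel it takes the short exposure with the most local detail and blends it with the long exposure to reduce noise. The function names and the `long_weight` parameter are hypothetical.

```python
def local_detail(img, y, x):
    """Difference between a pixel and the mean of its direct neighbours."""
    h, w = len(img), len(img[0])
    neighbours = [
        img[ny][nx]
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
        if 0 <= ny < h and 0 <= nx < w
    ]
    if not neighbours:
        return 0.0  # a 1x1 image has no neighbours to compare against
    return abs(img[y][x] - sum(neighbours) / len(neighbours))


def fuse(short_frames, long_frame, long_weight=0.5):
    """Blend, per pixel, the most detailed short frame with the long frame."""
    h, w = len(long_frame), len(long_frame[0])
    fused = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            # Pick the short exposure that shows the most detail here.
            sharpest = max(short_frames, key=lambda f: local_detail(f, y, x))
            fused[y][x] = (
                (1 - long_weight) * sharpest[y][x]
                + long_weight * long_frame[y][x]
            )
    return fused


flat = [[10, 10], [10, 10]]
sharp = [[10, 50], [10, 10]]
long_exposure = [[20, 20], [20, 20]]
print(fuse([flat, sharp], long_exposure))
```

Even this toy version shows why the work scales with resolution: every output pixel requires comparing the same location across all candidate frames, which is the kind of workload a dedicated neural engine is built to accelerate.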

Although our secondary research did find support for the claim that camera or image quality is one of the top influencing factors for young consumers deciding on a smartphone, it was unable to identify substantial evidence that Apple’s product sales have been affected by these camera and photo processing upgrades. However, given the overall importance of camera quality in the smartphone market, this appears to be an important innovation, and we can expect camera quality to remain a point of competition between major smartphone brands.