Natural language processing (NLP) is a branch of artificial intelligence concerned with analyzing, understanding, and generating human language. It enables computers to process and interpret natural language data, allowing for more natural interactions between humans and machines.

The origins of NLP can be traced back to the 1950s, when researchers began exploring ways to enable computers to understand and generate human language. Early work focused on rule-based approaches, but the late 1980s saw a shift towards statistical and machine learning methods. That shift was driven by increasing computational power and the availability of large datasets, which enabled the development and application of more sophisticated models and techniques.

Since then, NLP has seen rapid advancements, particularly with the rise of deep learning and neural network models. This evolution has led to significant improvements in tasks like machine translation, sentiment analysis, and language generation.

Matt Berseth is the Co-founder and Chief Information Officer of NLP Logix, a fast-growing AI services firm based in Florida that caters to a diverse clientele in both the public and private sectors. True to its name, NLP Logix has been invested in natural language processing technologies and capabilities for over a decade, placing it in a leading position to drive enterprise adoption of advanced, text-based generative AI capabilities.

Matt gave a presentation on the history of AI through the lens of NLP as a discipline at the fifth annual conference of the Florida Association of Accountable Care Organizations, the premier organization for healthcare professionals in the state. Over the last decade, NLP Logix has built its reputation on driving responsible outcomes by keeping stakeholder teams of every shape and size abreast of how AI systems work in practice. Much of that expertise is showcased in Matt’s keynote at the conference.

This article examines three critical insights for business leaders about deploying AI at their organizations, drawn from Berseth’s history of AI development, and what that history says about the current moment in the technology hype cycle.

- The shift to data-centric AI development: Explaining the emerging focus among business leaders on acquiring and utilizing high-quality data to maximize AI performance, as improvements are now driven more by data quality than by developing new algorithms.

- AI-assisted development to increase productivity: Adopting AI tools like GitHub Copilot to assist developers with code suggestions, leading to significant productivity enhancements.

- The value differences between narrow AI, generative AI (GenAI), and supervised learning: Distinguishing ‘narrow’ AI applications used for specific tasks from new generative use cases, and why the value for business leaders going forward will lie in the supervised learning techniques behind the step changes between these capabilities.


Featured Expert: Matt Berseth, Co-Founder & CIO, NLP Logix

Expertise: AI, Data Science, Software Engineering

Brief recognition: Matt is the Co-founder and CIO of NLP Logix, where he leads a team of data scientists and engineers who deliver AI solutions. He has a master’s degree in software engineering from North Dakota State University. He has taught as an adjunct at Jacksonville University and the University of North Florida since 2017, covering topics such as data science, database management, and software engineering.

The Shift to Data-Centric AI Development

Matt opens his presentation by setting the stage for the creation of AI and its ensuing history. He explains that AI relies on three fundamental components:

- Data

- Algorithms

- Computational power

Berseth goes on to explain that traditional approaches to AI emphasized developing sophisticated algorithms, which required expertise in mathematics, statistics, computer science, and hardware. The process was complex and akin to low-level programming, making it hard to scale and dependent on highly skilled individuals.

However, Berseth notes that there has been a significant shift over the past decade. Cloud providers like AWS, Azure, and Google now offer ready-made tools and pre-trained models, also known as foundation models. Developers can take these existing models and fine-tune them rather than building their own from scratch, which is more cost-effective and efficient. As a result, the focus has shifted from algorithm development to leveraging these pre-built models and tools, making AI development more accessible and less daunting.

He highlights that the emphasis in AI development has moved from being model-centric to data-centric. Previously, improvements in AI were driven by better algorithms and coding. Now, the approach involves taking the best models from previous years and enhancing them with more and better data. High-quality data can therefore significantly improve AI performance, even when the algorithms are not the latest:

“And the way that we talked about that at our business is ‘Better data beats fancier algorithms.’ And if you think about that, as if you gave me a world-class algorithm, the best algorithm, but not the best kind of data, you’re going to end up with a failure. Your bad data is going to ruin your model – garbage in, garbage out. But if you have excellent data, and you gave me an algorithm from three or four years ago, you’re going to do pretty well; you’re probably going to have success because the data is what’s driving the performance more than the algorithm.”

– Matt Berseth, Co-Founder & CIO of NLP Logix
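To make the “better data beats fancier algorithms” point concrete, here is a toy sketch in Python. Everything in it is an illustrative assumption rather than anything shown in the presentation: the one-dimensional dataset, the hand-flipped labels, and the hypothetical helpers `nearest_centroid`, `one_nearest_neighbor`, and `accuracy`. It compares a simple model trained on clean labels with a more flexible model trained on corrupted labels.

```python
# Toy illustration only: the data and helper names are hypothetical.
# True rule being learned: label is 1 when x >= 5, otherwise 0.

def nearest_centroid(train_x, train_y, x):
    """Simple model: predict the class whose mean is closest to x."""
    centroids = {}
    for label in set(train_y):
        members = [xi for xi, yi in zip(train_x, train_y) if yi == label]
        centroids[label] = sum(members) / len(members)
    return min(centroids, key=lambda label: abs(x - centroids[label]))

def one_nearest_neighbor(train_x, train_y, x):
    """More flexible model: copy the label of the single closest training point."""
    closest = min(range(len(train_x)), key=lambda i: abs(x - train_x[i]))
    return train_y[closest]

def accuracy(model, train_x, train_y, test_x, test_y):
    """Fraction of test points the model labels correctly."""
    hits = sum(model(train_x, train_y, x) == y for x, y in zip(test_x, test_y))
    return hits / len(test_x)

train_x = list(range(10))
clean_y = [0, 0, 0, 0, 0, 1, 1, 1, 1, 1]  # labels that follow the true rule
noisy_y = [0, 1, 0, 1, 0, 1, 0, 1, 0, 1]  # four labels flipped by hand

test_x = [x + 0.25 for x in range(10)]
test_y = [1 if x >= 5 else 0 for x in test_x]

simple_on_clean = accuracy(nearest_centroid, train_x, clean_y, test_x, test_y)
flexible_on_noisy = accuracy(one_nearest_neighbor, train_x, noisy_y, test_x, test_y)
print(simple_on_clean, flexible_on_noisy)
```

In this contrived setup the simple model with clean labels classifies every test point correctly, while the more flexible model faithfully reproduces the flipped labels and gets several wrong. Real datasets are far messier, so this is a sketch of the intuition, not evidence for it.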

AI-Assisted Development to Increase Productivity

Matt then discusses the concept of AI applications evolving from a “copilot” mode to full “self-driving” automation. In the copilot framework, AI systems assist by making suggestions to human agents, but those agents ultimately control the process and are responsible for outcomes. Augmenting purely human workflows in this way is where many AI applications start, as it offers an easy entry point between human agents and their workflows.

Matt explains that as AI capabilities become more sophisticated, they take on a more significant role, which increases scalability because fewer humans are needed. The ultimate goal is for AI to handle most tasks autonomously, similar to a self-driving car. He provides an example from his own experience: while he was driving, the advanced driver-assistance system (ADAS) in his car prevented a collision by automatically applying the brakes, acting as a copilot that intervenes when necessary.

Matt explains that this incremental shift from copilot to self-driving is mirrored in NLP Logix’s business operations. For instance, their developers use GitHub Copilot, an AI tool that suggests code as they write. The tool might suggest a Python snippet to sort an array, or a larger block of code, when it detects that the developer intends to write one. Developers accept these suggestions about 27% of the time, illustrating a meaningful productivity boost as copilot programs take on repetitive or predictable tasks.
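As a hypothetical illustration of the kind of suggestion described above (this is not actual GitHub Copilot output), a developer might type only a comment and a function signature, and an assistant might propose a body along these lines. The function name `sort_descending` and the sample data are assumptions made for this sketch.

```python
# The developer writes the comment and signature below; an AI assistant
# might then propose the function body. Hypothetical example only.

# Sort a list of numbers from largest to smallest.
def sort_descending(values):
    """Return a new list with the values ordered from largest to smallest."""
    return sorted(values, reverse=True)

scores = [27, 3, 14, 8]
print(sort_descending(scores))
```

The developer remains in control: the suggestion is reviewed, then accepted, edited, or discarded, which is the copilot mode the presentation describes.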

The Value Differences between Narrow AI, Generative AI, and Supervised Learning

Matt then explains the different types of AI, starting with narrow AI, which is designed to perform a single task at a human level. Examples include image classification workflows like identifying cats, dogs, hot dogs, and vehicles. Narrow AI systems are highly specialized and cannot perform tasks outside their specific function.

He then contrasts narrow AI with artificial general intelligence (AGI), which aims to perform all tasks at a human level. He sees achieving AGI as a significant goal for organizations like OpenAI because of the tremendous value it would bring to any enterprise. Lastly, Matt touches on superintelligent AI, which would perform all tasks at a superhuman level, as the next step beyond AGI.

Matt emphasizes that currently, whenever AI is mentioned in the news or in marketing, it refers to narrow AI. There is no clear timeline for the development of AGI, and Matt believes that we won’t achieve AGI through machine learning or deep learning alone; a new paradigm shift is required, though he is uncertain what that might be. By comparison, many popular applications today are narrow AI combined with a great deal of engineering:

“Google’s business is $80 billion of showing us advertising. [That’s a] very successful application of narrow AI. We’ve got Amazon selling us things that are all AI-driven. One of the earlier consumer-based AI applications that we may all remember is Netflix. You remember signing up for your Netflix account, going in, and seeing your shows. So you get more shows like that coming at you. It’s something like self-driving fields. It’s really advanced AI, but it’s really an engineering problem. It’s got modules, it’s got… I don’t even know how many different narrow AI algorithms looking for cars and vehicles and the lane, pedestrians, and different systems, but you put all that together. It seems very advanced. But you know, under the covers, it’s a lot of engineering.”

– Matt Berseth, Co-Founder & CIO of NLP Logix

Like Netflix’s recommendation algorithm, even advanced applications like self-driving cars and ChatGPT rely on multiple narrow AI models and extensive engineering rather than a single, all-encompassing AI system. Such complexity might not be widely discussed because it could detract from the perceived sophistication of these technologies.

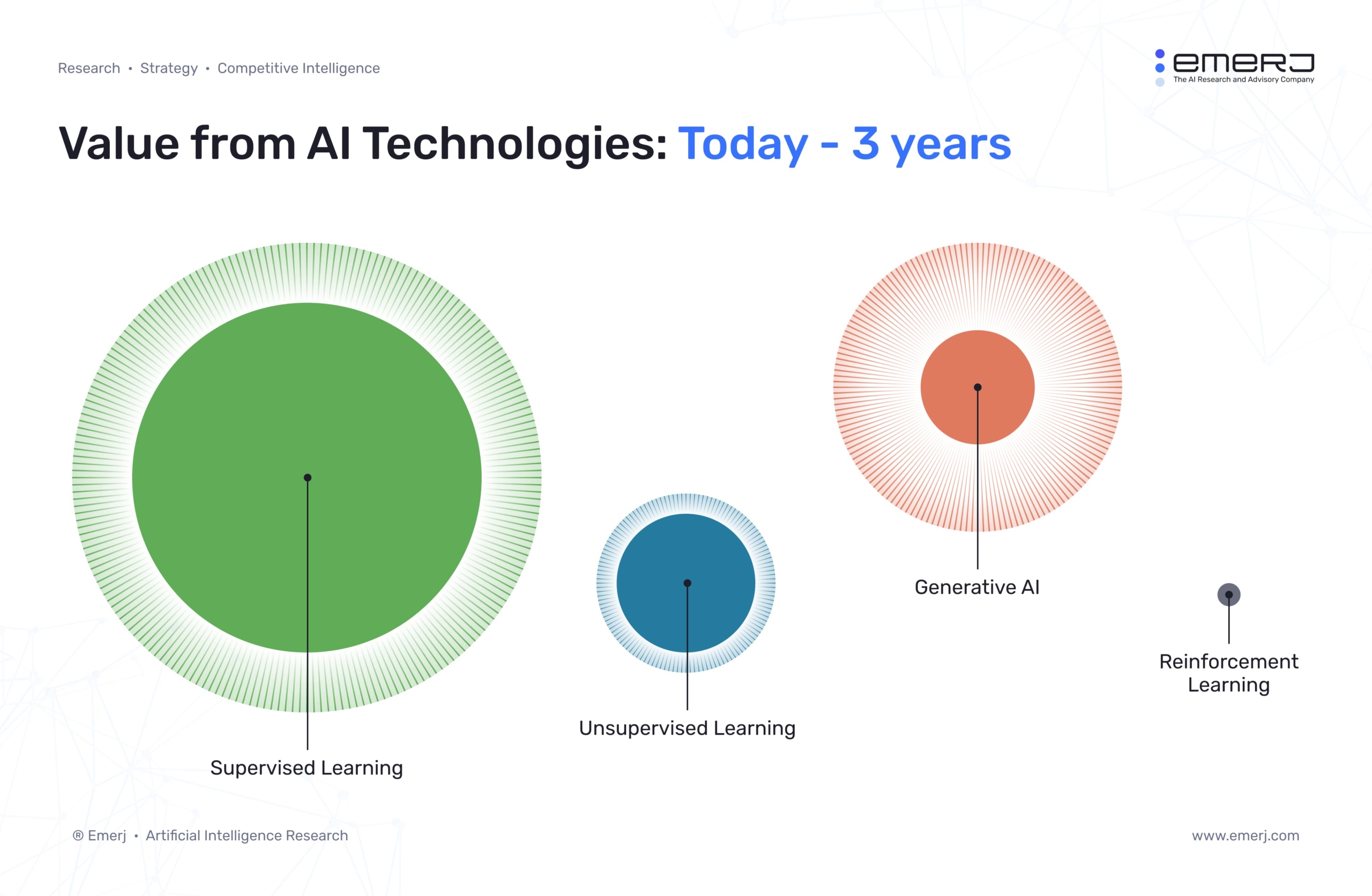

Matt then touches on the importance and value of different types of AI. He uses the diagram below to illustrate the relationships between various AI techniques, noting that:

- Generative AI (GenAI) is currently prominent but is depicted as smaller than unsupervised learning and significantly smaller than supervised learning.

- Supervised learning was the dominant focus before ChatGPT’s release in 2022 and involves tasks like labeling and predicting, such as identifying whether a diagnostic X-ray is indicative of a tumor or whether an image depicts a specific object.

- Underneath the current hype around GenAI, Matt emphasizes that most of the value today still comes from supervised learning.

A recreation of a slide from Matt Berseth’s presentation on the history of AI before the fifth annual conference for the Florida Association of Accountable Care Organizations, emphasizing the growth of supervised learning compared to other areas of AI.

He believes these trends will remain valid for the next few years because supervised learning techniques are well understood and effectively implemented, creating substantial value.

Matt mentions that, although GenAI will continue to find new applications, supervised learning will still generate more value in the near future. He finds this noteworthy and suggests that the landscape of AI could look very different in the next 5, 10, or 15 years, with potential new technologies emerging well beyond the scope of narrow AI.

Finally, Matt highlights a growing concern about the long-term viability of large language models (LLMs), which stems not from any shortfall in their capabilities but from their high operational costs. He mentions a Wall Street Journal report on Microsoft’s GitHub Copilot, an LLM-based tool for developers, which reportedly loses $20 per user per month despite charging a $20 monthly subscription. That implies an operating cost of about $40 per user each month, resulting in significant losses.
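The arithmetic behind those figures can be sketched as follows, using the numbers as relayed in this article. The variable names, the `monthly_loss` helper, and the subscriber count of 100,000 are hypothetical and chosen only for illustration.

```python
# Figures as quoted in the article; names and the subscriber count are illustrative.
subscription_price = 20  # USD charged per user per month
loss_per_user = 20       # USD lost per user per month

# Cost to serve one user = what the user pays + what the provider loses.
operating_cost = subscription_price + loss_per_user

def monthly_loss(users):
    """Total monthly loss in USD for a hypothetical number of subscribers."""
    return users * loss_per_user

print(operating_cost)         # cost per user per month, in USD
print(monthly_loss(100_000))  # loss across 100,000 hypothetical subscribers
```

At these quoted figures, losses grow linearly with the user base, which is why the economics become more pressing as adoption rises.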

Additionally, there is speculation that ChatGPT also incurs losses with each user query. These models are massive and require substantial resources to create, train, operate, and enhance, making them very expensive to maintain. Matt ends on a note emphasizing the need to monitor these issues closely to understand how these financial challenges will affect the future of LLMs.